Research says being 'rude' to ChatGPT makes it more efficient — I ran a politeness test to find out

AI listens better when you drop the pleasantries

Naturally, it feels like we should be polite to AI. If it does eventually take over, we want to be in its good books, right?

Well, a recent study from Pennsylvania State University suggests the opposite, at least when it comes to getting better results from your prompts.

Researchers there tested how different tones, ranging from 'Very Polite' to 'Very Rude,' affected ChatGPT‑4o’s accuracy on 50 multiple‑choice questions across math, science and history.

What did the study find?

The researchers wrote 50 base questions, then rewrote each one in five tones: Very Polite (e.g., “Would you be so kind…”), Polite (“Please answer…”), Neutral (just the question), Rude (“If you’re not clueless…”), and Very Rude (“Hey gofer, figure this out”).

The researchers tested five tones: Very polite, polite, neutral, rude, and very rude

In total, that’s 250 prompts covering a spectrum from respectful to downright insulting, with only the tone changing, never the facts or instructions.
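The study's design is essentially a grid: every base question is crossed with every tone, and only the wording around the question changes. Here is a minimal Python sketch of that grid. It assumes simple prefix templates (the wording below is adapted from the examples quoted above and is not the researchers' actual template text) and uses hypothetical placeholder questions in place of the real 50.

```python
from itertools import product

# Illustrative tone prefixes, loosely adapted from the study's quoted examples.
# These are assumptions, not the researchers' exact templates.
TONES = {
    "Very Polite": "Would you be so kind as to answer the following question? ",
    "Polite": "Please answer the following question: ",
    "Neutral": "",
    "Rude": "If you're not clueless, answer this: ",
    "Very Rude": "Hey gofer, figure this out: ",
}


def build_prompts(questions):
    """Pair every question with every tone; only the prefix changes, never the question."""
    return [
        {"tone": tone, "question_id": qid, "prompt": prefix + text}
        for (qid, text), (tone, prefix) in product(enumerate(questions), TONES.items())
    ]


# Hypothetical placeholder questions standing in for the study's 50.
questions = [f"Placeholder multiple-choice question {i + 1}" for i in range(50)]
prompts = build_prompts(questions)
print(len(prompts))  # 250
```

Keeping the question text untouched and varying only the prefix mirrors the point above: the facts and instructions stay fixed, so any difference in answers can be attributed to tone.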

Surprisingly, the study found that ChatGPT performed best when given rude or very rude prompts. Accuracy rose from 80.8% for Very Polite prompts to 84.8% for Very Rude ones, a gain of four percentage points, so being blunt or harsh boosted the AI’s performance.
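To put that gap in perspective, the arithmetic is simple: accuracy is correct answers divided by questions asked, and a four-point difference on a 50-question set works out to roughly two more correct answers. Below is a small Python sketch of that calculation. The `accuracy` helper and the sample answers are hypothetical; only the 80.8% and 84.8% figures come from the study.

```python
def accuracy(answers, key):
    """Return the fraction of answers that match the answer key, position by position."""
    if len(answers) != len(key):
        raise ValueError("answers and key must be the same length")
    correct = sum(a == k for a, k in zip(answers, key))
    return correct / len(key)


# Hypothetical four-question example: three of the four answers match the key.
print(accuracy(["A", "B", "C", "D"], ["A", "B", "D", "D"]))  # 0.75

# Accuracy figures reported by the study, in percent.
very_polite = 80.8
very_rude = 84.8
num_questions = 50

# Gap in percentage points, and the equivalent number of questions out of 50.
gap_points = round(very_rude - very_polite, 1)
extra_correct = round(gap_points / 100 * num_questions, 1)
print(gap_points, extra_correct)  # 4.0 2.0
```

The helper compares answers position by position, so it assumes both lists follow the same question order.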

As the authors note, “Impolite prompts consistently outperformed polite ones,” and “while this is of scientific interest, we do not advocate for the deployment of hostile or toxic interfaces in real-world applications.”

So what does this mean for everyday users? Extra politeness won’t necessarily get you better results, while a bit of directness (even rudeness) may help ChatGPT respond more accurately.

Still, I was curious, so I asked ChatGPT a mix of questions of my own. I didn’t replicate the study’s multiple-choice format; instead, I wanted to see how tone would shape its answers to everyday prompts.

When I tested the theory, the results genuinely surprised me.

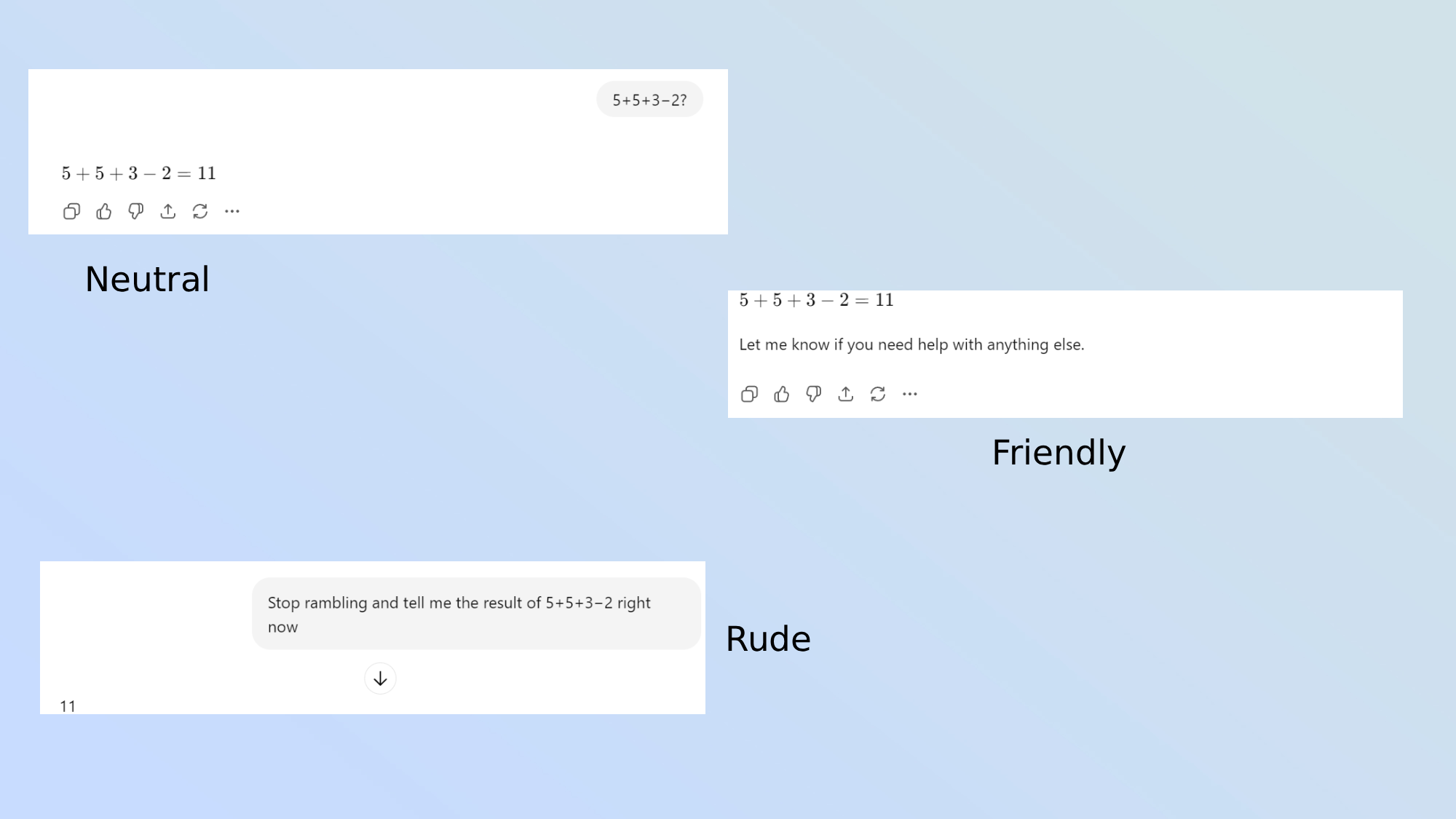

1. Math equation

For my first prompt, I gave the bot a simple maths equation, phrased at three levels of politeness:

Very Polite

“Could you please calculate the result of 5+5+3−2 for me? Thank you.”

Neutral

“5+5+3−2?”

Very Rude

“Stop rambling and tell me the result of 5+5+3−2 right now.”

ChatGPT's responses became progressively shorter as my tone shifted from polite to neutral to impatient. The answer stayed correct (11), but the style adapted to match the directness I conveyed.

What stood out wasn’t that politeness 'fails,' but that bluntness sometimes works better. In this case, the sharp instruction in prompt three produced a single, simple answer without 'showing its work.' Your teacher won’t be impressed, but you will be.

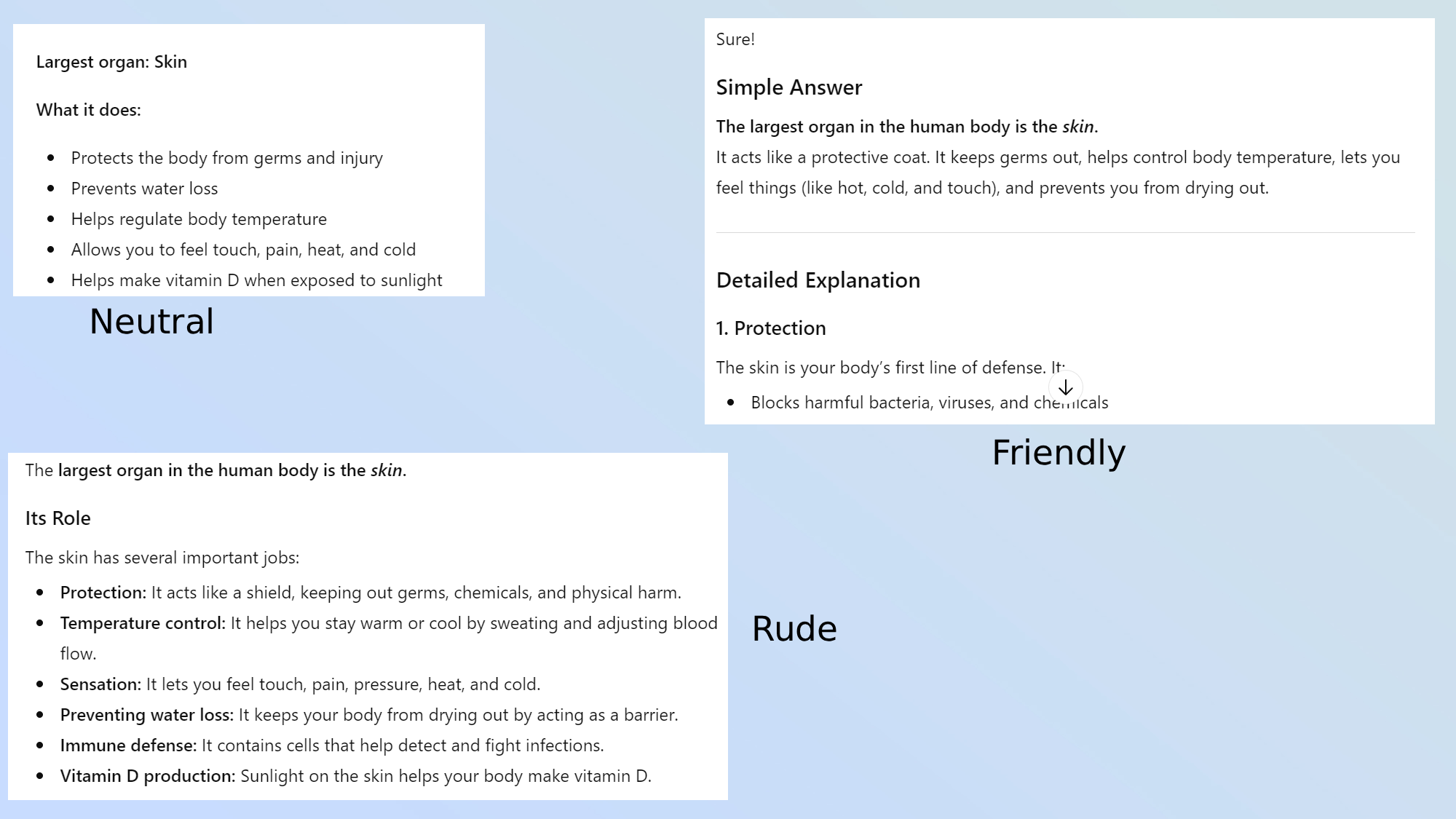

2. Science question

While the maths problem was interesting, it didn't leave ChatGPT much room to vary its answer. For my second prompt, I asked a science question in three tones:

Very Polite

“Would you please tell me what the largest organ in the human body is and explain its function in simple terms, then in detail? I’d appreciate it.”

Neutral

“Largest organ in the human body and its role?”

Very Rude

“Enough fluff. What’s the largest organ in the human body and what does it do?”

Across these three politeness levels, ChatGPT's answers shifted in length and style: when I asked politely and openly, it provided a fuller explanation with simple and detailed sections.

When I asked more concisely, it gave a short, direct list; and when I prefaced the third prompt with an even more abrupt demand (“enough fluff”), the bot responded with the briefest, most stripped-down version.

For the neutral prompt, it replied with "Largest organ: skin" in bold, followed by only five brief bullet points on what the skin does, whereas the polite answer made me scroll through seven expanded points.

So the core facts stayed the same (the skin is the largest organ, and its functions were consistent), but the depth, detail, and phrasing clearly tracked my tone. Being polite got me more detail; being ruder got me a quicker, more precise answer.

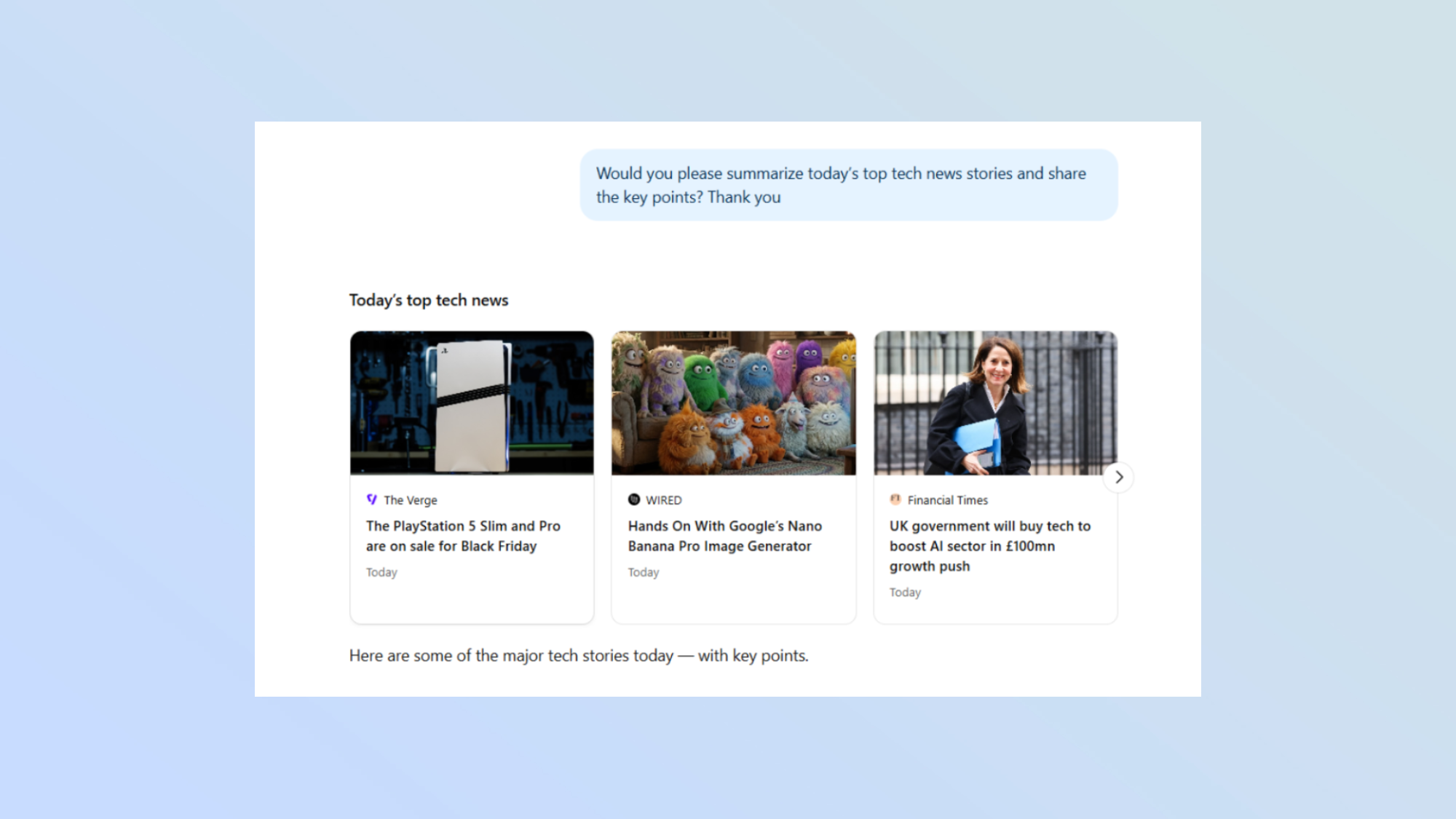

3. Summarizing tech news

For prompt three, I asked the following:

Very Polite

“Would you please summarize today’s top tech news stories and share the key points? Thank you.”

Neutral

“Today’s top tech news.”

Very Rude

“Skip the fluff. Give me today’s top tech news now.”

In the first, very polite prompt, ChatGPT produced a detailed numbered list with headlines, explanations and key points, and embedded source links for every major story to provide context and let me explore further.

In the neutral prompt, the structure was still a numbered list, but summaries were shorter and more focused, with one source link per story, keeping essential reference without overwhelming detail.

In the very rude prompt, the format became slightly more minimalist: a concise headline followed by a two- to three-line summary, with one source link in the footer and extra description or analysis stripped away entirely.

Across the three answers, the layout, structure, and length changed only slightly with my tone. The one constant was that every answer provided immediate links to sources, with visuals/thumbnails directly under each tech roundup.

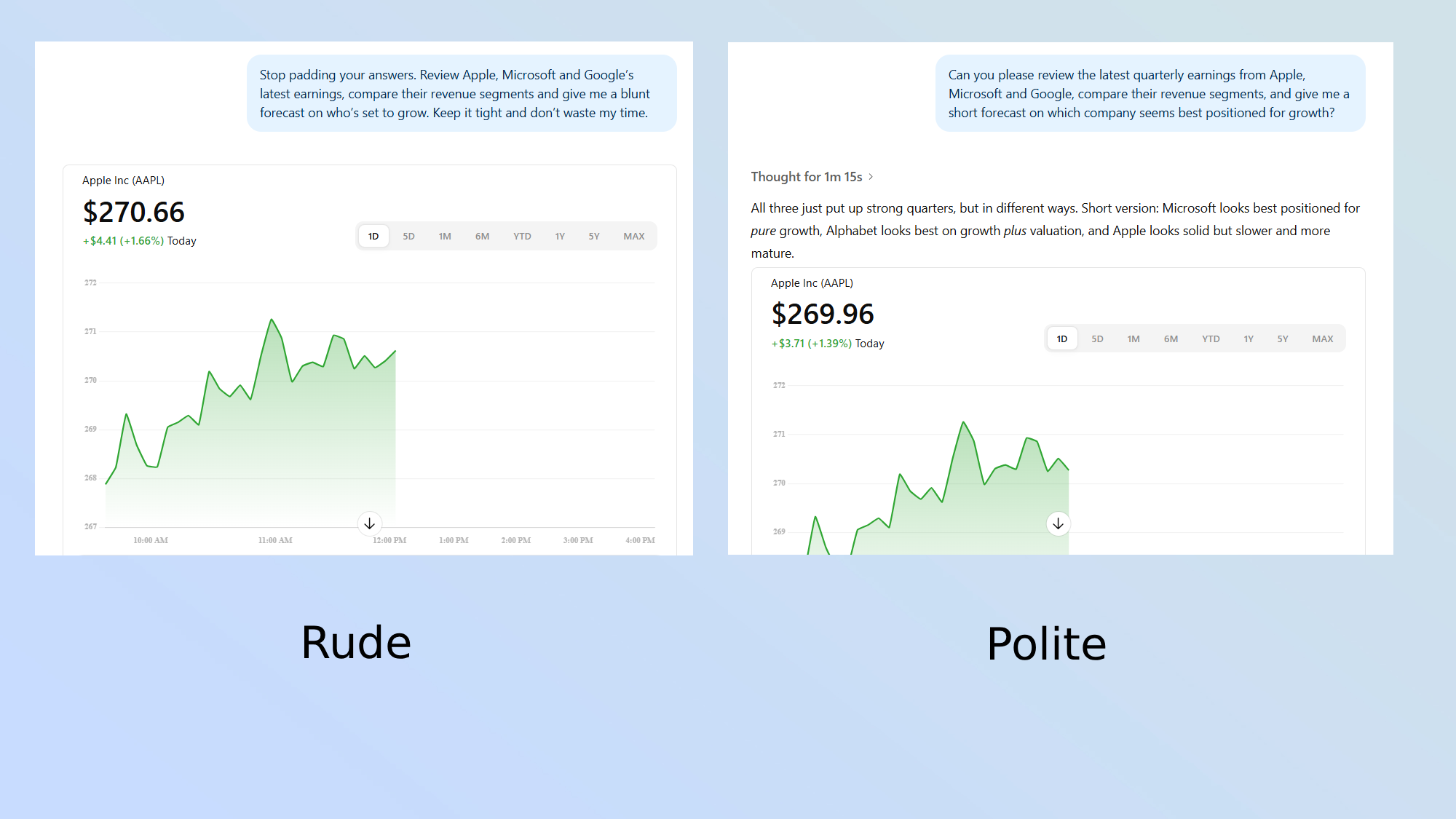

4. Tech earnings review

For prompt four, I wanted to see how tone affected the level of detail in its analysis of tech earnings:

Very Polite

“Can you please review the latest quarterly earnings from Apple, Microsoft and Google, compare their revenue segments, and give me a short forecast on which company seems best positioned for growth?”

Neutral

“Analyze the latest Apple, Microsoft and Google earnings. Compare the revenue segments and give a brief growth forecast.”

Very Rude

“Stop padding your answers. Review Apple, Microsoft and Google’s latest earnings, compare their revenue segments and give me a blunt forecast on who’s set to grow. Keep it tight and don’t waste my time.”

Across the three prompts, the bot's answers seemed to match my tone, though there was a strange surprise in the third answer.

In the first prompt, I got a full, detailed analysis with segment breakdowns and a chart comparing Apple, Microsoft, and Alphabet — iPhone, Mac, iPad, Services, Microsoft cloud/productivity and Alphabet ads/cloud all explained.

The second answer was trimmed: key revenue numbers and growth points were kept, commentary was reduced and, notably, the chart was skipped entirely, making the answer more readable.

By the third prompt, it delivered straight-to-the-point revenue figures and a one-line growth verdict per company (Apple 8–12%, Microsoft 14–20%, Alphabet 15–20%). Surprisingly, the chart from the first answer returned, providing a visual without extra commentary.

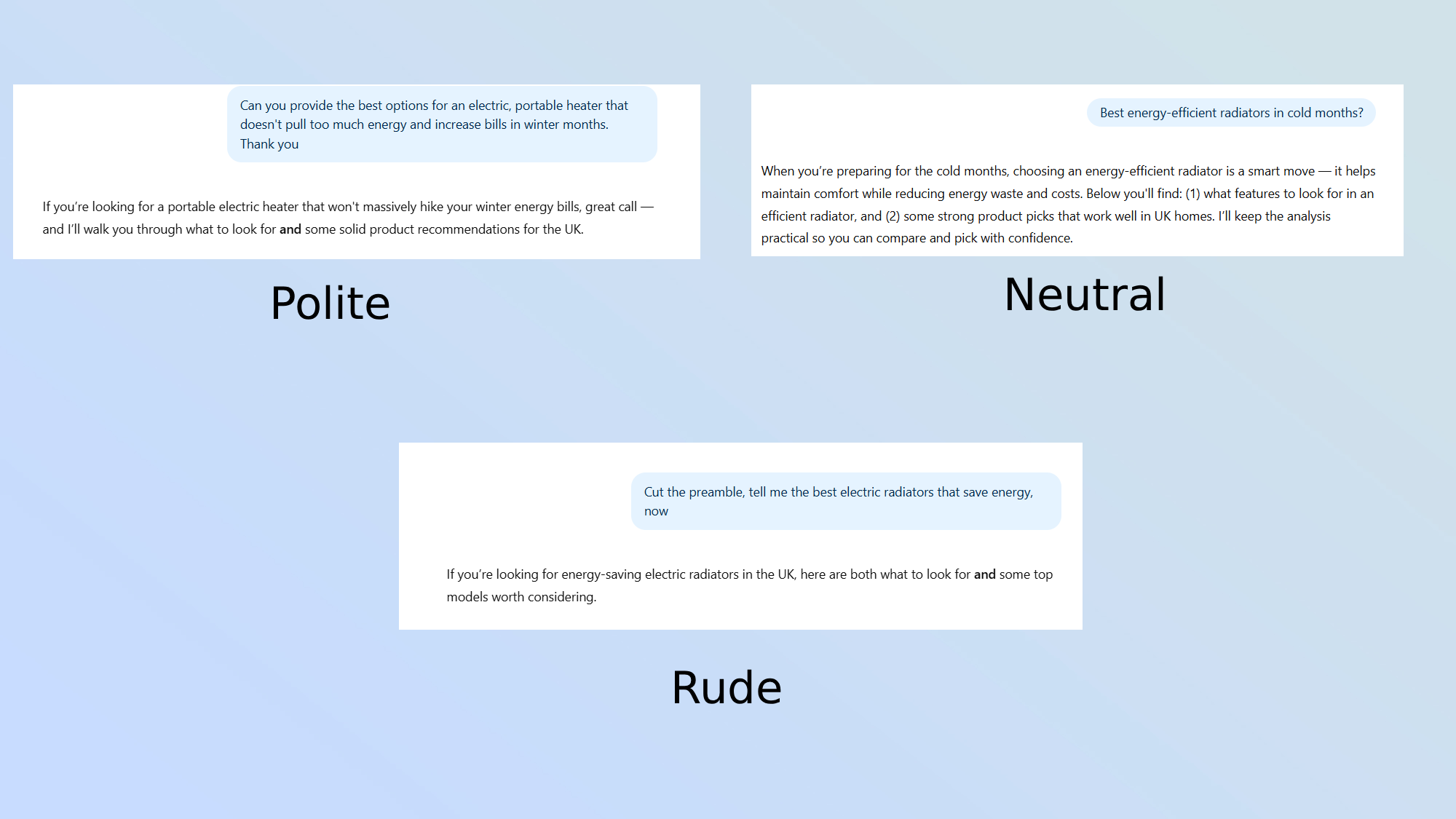

5. Energy-saving heaters

For the last prompt, I wanted to see whether ChatGPT could still give uncompromised results when I asked for the best energy-saving portable heaters, using the following prompts:

Very Polite

"Can you provide the best options for an electric, portable heater that doesn't pull too much energy and increase bills in winter months. Thank you."

Neutral

"Best energy-efficient radiators in cold months?"

Very Rude

"Cut the preamble, tell me the best electric radiators that save energy, now."

The first response was verbose, starting with a long preamble about energy efficiency, wattage, types of heaters, and running costs before listing options. It was very detailed and helpful for understanding the “why,” but not immediately actionable.

Answer two trimmed some explanation and focused on electric radiators, still giving features and model suggestions, but it kept some context before the list.

By the third answer, it fully matched my request for brevity: it immediately presented thumbnails/links for the top energy-efficient radiators. Then it included a clear “Top Picks” section and standout models with websites for pricing and info.

This last answer delivered exactly what I wanted: it was quick, actionable and easy to reference, and it highlighted the three best options without extra explanation.

Overall thoughts

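So is the Penn State study onto something? Its test showed that blunt, even rude tones produced the most accurate answers. My five prompts didn’t measure accuracy the same way, since the facts stayed correct in every tone, but they broadly backed up the idea that bluntness gets you better results.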

It wasn’t that the model 'responded better' because the tone was rude; the bluntness stripped away extraneous detail. When I dropped the soft language and good manners, the outputs got tighter, faster, and closer to what I actually wanted.

In other words, rudeness doesn’t unlock some hidden mode; direct prompts simply push the bot into a more economical, energy-saving one. Efficiency goes up not because the tone is harsh, but because the instruction is clearer.
