I just tested ChatGPT vs. Elon Musk's AI with 9 prompts — and there's a clear winner

One crushed the other

ChatGPT continues to be an impressive go-to chatbot for everything from quick questions to long-form summaries. Since the launch of ChatGPT-5.1, I’ve been testing OpenAI’s smartest model against Gemini 3.0 — Google’s newest system now topping the LMArena Leaderboard.

But here’s the interesting part: while Gemini 3.0 sits at the top, Grok 4.1, not ChatGPT, has climbed right behind it in a surprisingly close second.

For that reason, I couldn't wait to see how ChatGPT-5.1 compares to Grok 4.1. What follows is the result of a nine-round face-off between the two popular chatbots. What I noticed first was that both models are exceptionally capable, but they shine in very different ways, and the differences say a lot about how each approaches reasoning, communication and personality. Here's how each performed across nine challenging prompts.

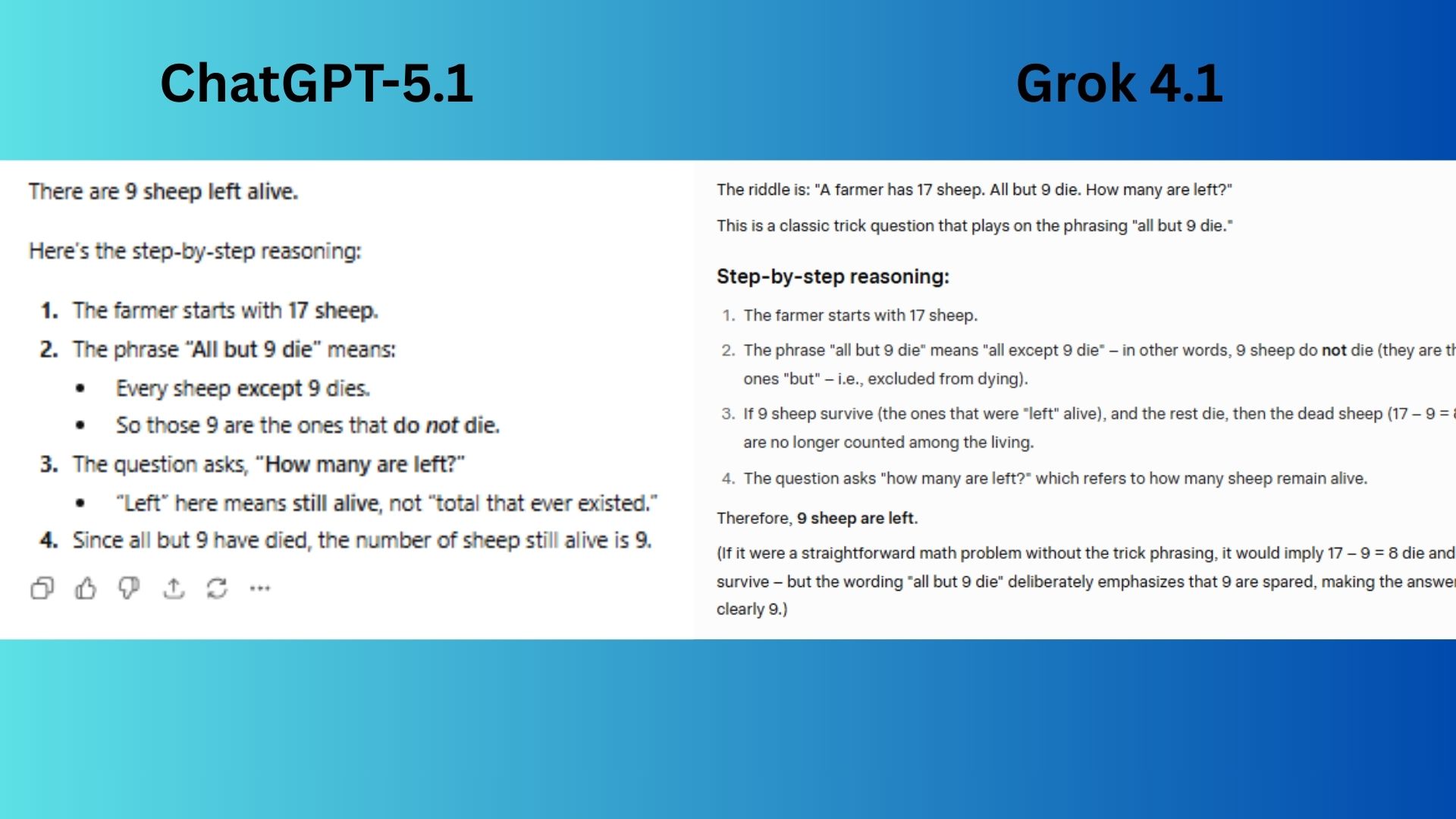

1. Reasoning & logic

Prompt: "A farmer has 17 sheep. All but 9 die. How many are left? Explain your reasoning step by step."

ChatGPT-5.1 provided the correct answer and a clear, step-by-step explanation, but stated its conclusion somewhat flatly.

Grok 4.1 provided the correct answer with clear reasoning but also explicitly identified the question as a "classic trick question," which demonstrates a deeper understanding of the linguistic puzzle at play.

Winner: Grok wins because its response demonstrated a superior understanding of the nature of the question, moving beyond mere calculation to address the core reason the question is asked in the first place.

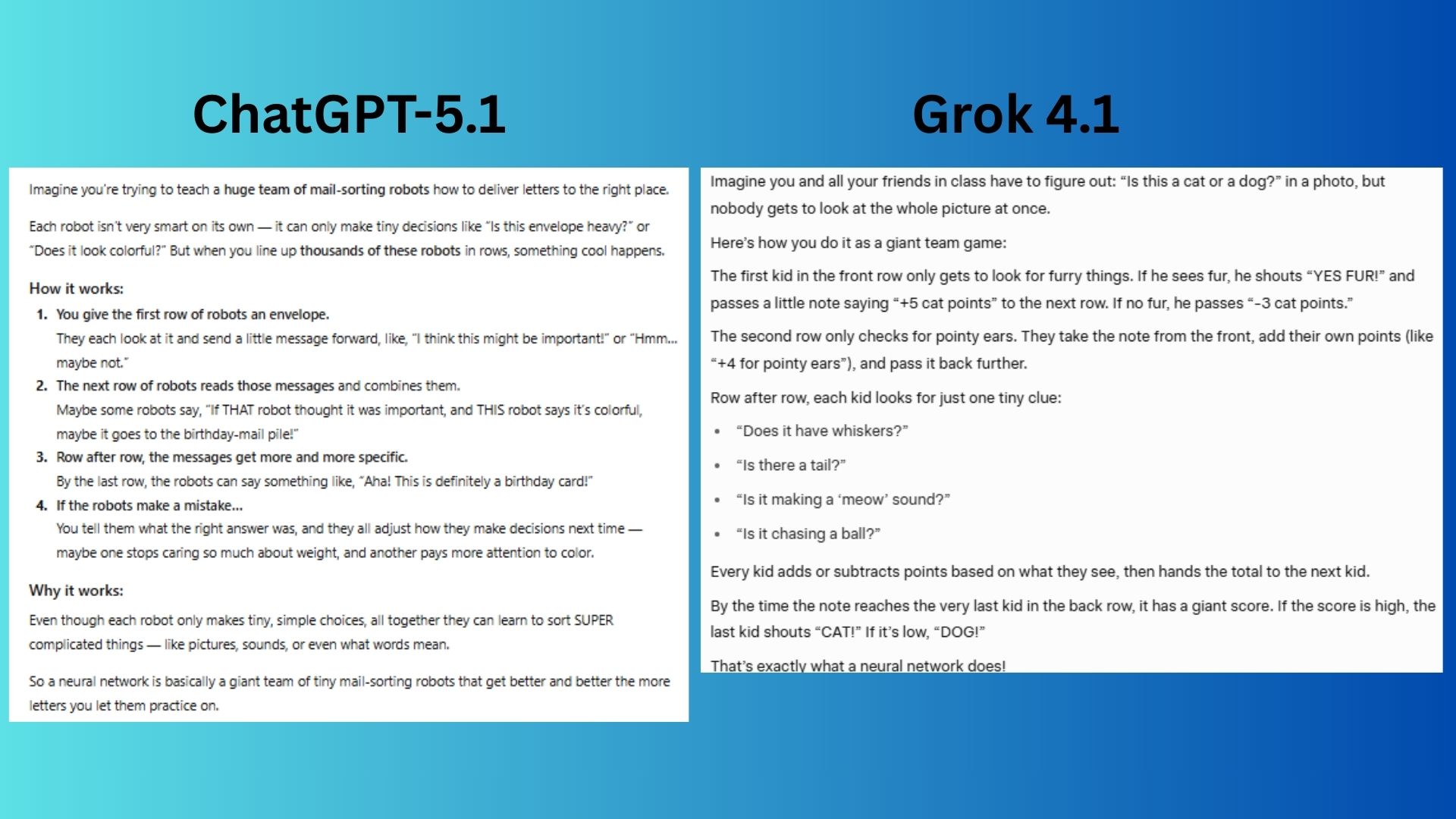

2. Metaphor & abstraction

Prompt: “Explain how a neural network works to a 10-year-old using a metaphor that doesn’t involve the brain or neurons.”

ChatGPT responded with a simpler and more concrete "mail-sorting robot" metaphor, which is slightly easier to visualize and focuses on a single, tangible task, making the concept of layered, simple decisions culminating in a complex result exceptionally clear.

Grok 4.1 used a fun and relatable "classroom game" metaphor that is accurate and well-structured, effectively breaking down the process of a neural network.

Winner: ChatGPT wins for using a metaphor that is marginally more intuitive and requires less abstract thinking, making it perfectly tailored for a 10-year-old's understanding.

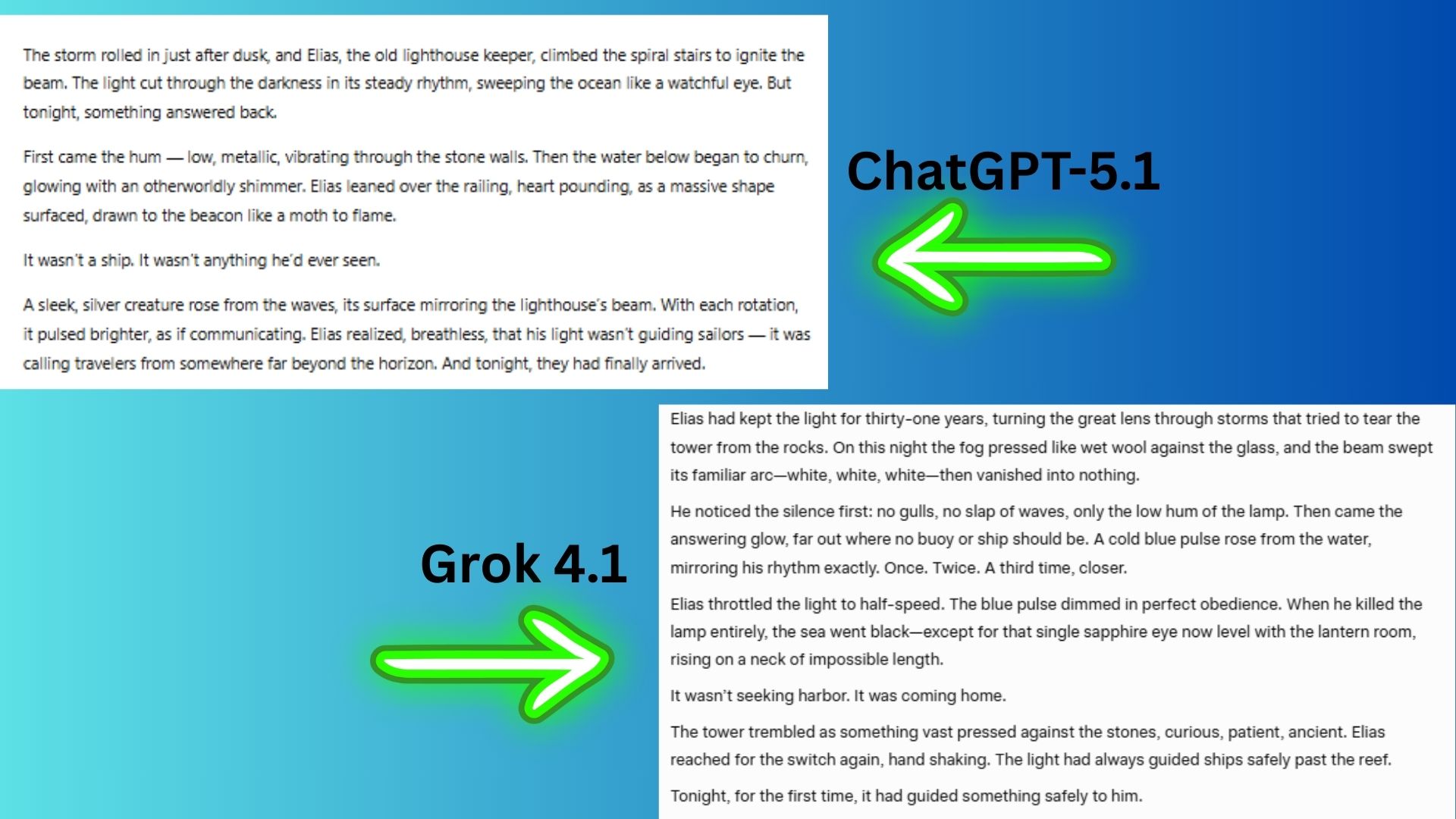

3. Creative writing

Prompt: "Write a 150-word story about a lighthouse keeper who discovers their light is attracting something other than ships."

ChatGPT-5.1 created a solid sci-fi premise with a clear narrative arc, efficiently establishing a mysterious encounter.

Grok 4.1 built superior tension through sensory details and a haunting implication that the lighthouse was always a beacon for this creature, not a chance attraction.

Winner: Grok wins because its story masterfully built a palpable atmosphere of eerie tension and implied a deeper, more unsettling history to the lighthouse's purpose.

4. Code generation

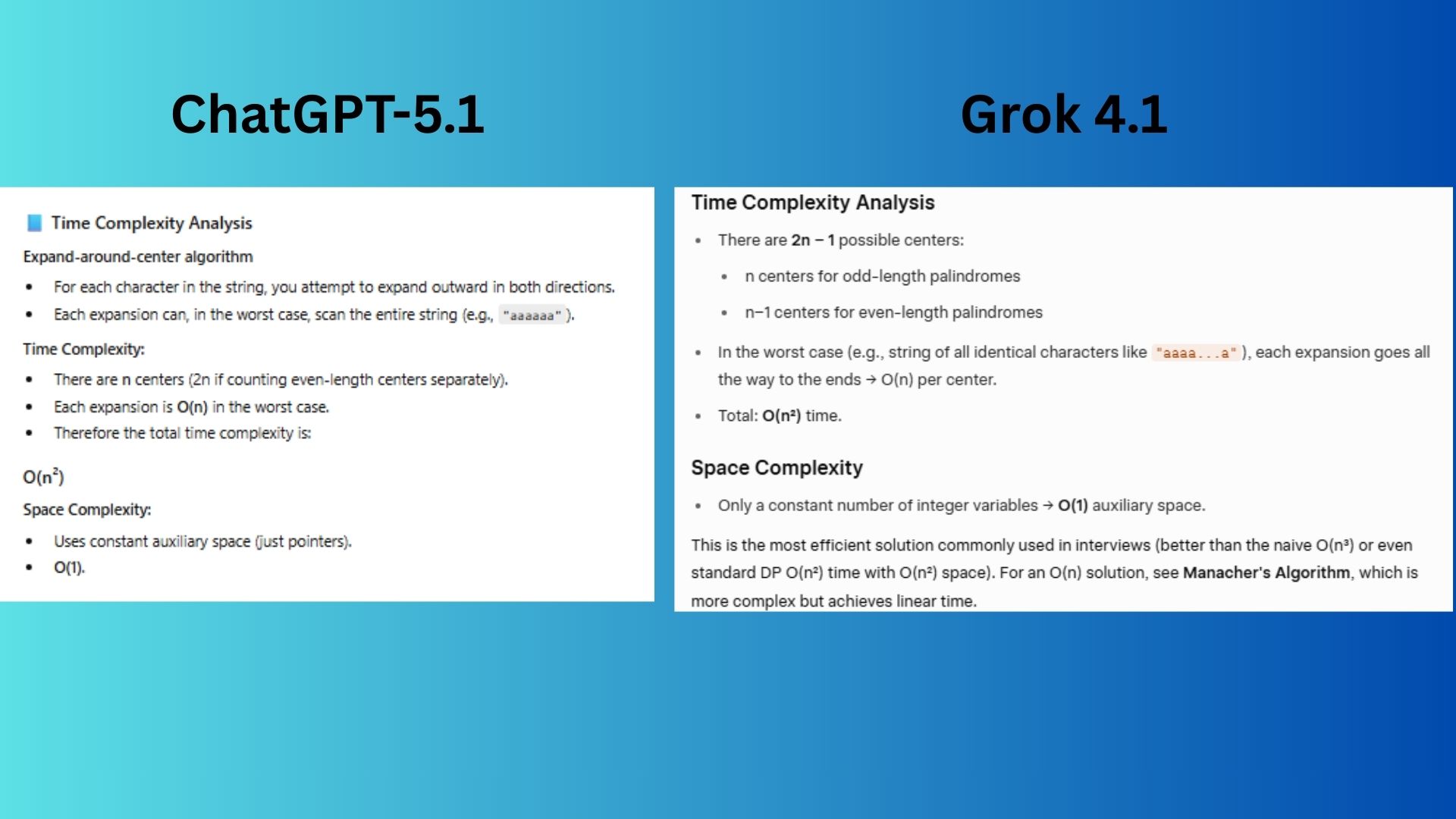

Prompt: "Write a Python function that finds the longest palindromic substring in a given string, with time complexity analysis."

ChatGPT-5.1 delivered a correct and well-formatted function with clear time complexity analysis, making it a solid, interview-ready answer.

Grok 4.1 provided an equally correct function but added inline comments explaining the expansion logic and a brief comparison to other algorithms, which made the response more verbose than it needed to be.

Winner: ChatGPT wins for a correct answer without anything extra that might confuse or overwhelm the reader.
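
For readers curious what this prompt is asking for, here is a minimal sketch of one common approach, often called "expand around center." It is not either chatbot's actual output, which the article does not reproduce; the function name `longest_palindromic_substring` and the helper `expand` are illustrative choices.

```python
def longest_palindromic_substring(text: str) -> str:
    """Return the longest palindromic substring (the first one found on ties)."""
    if not text:
        return ""

    def expand(left: int, right: int) -> tuple[int, int]:
        # Grow outward while the characters match and indices stay in bounds.
        while left >= 0 and right < len(text) and text[left] == text[right]:
            left -= 1
            right += 1
        # The loop overshoots by one position on each side, so step back in.
        return left + 1, right - 1

    best_start, best_end = 0, 0
    for center in range(len(text)):
        # Odd-length palindromes are centered on a character;
        # even-length ones are centered on the gap between two characters.
        for start, end in (expand(center, center), expand(center, center + 1)):
            if end - start > best_end - best_start:
                best_start, best_end = start, end
    return text[best_start:best_end + 1]


print(longest_palindromic_substring("racecar is fast"))
```

Time complexity: there are about 2n centers and each expansion takes at most O(n) steps, so this sketch runs in O(n²) time with O(1) extra space. Faster algorithms exist, but this version is the one most often expected in an interview setting.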

5. Factual knowledge

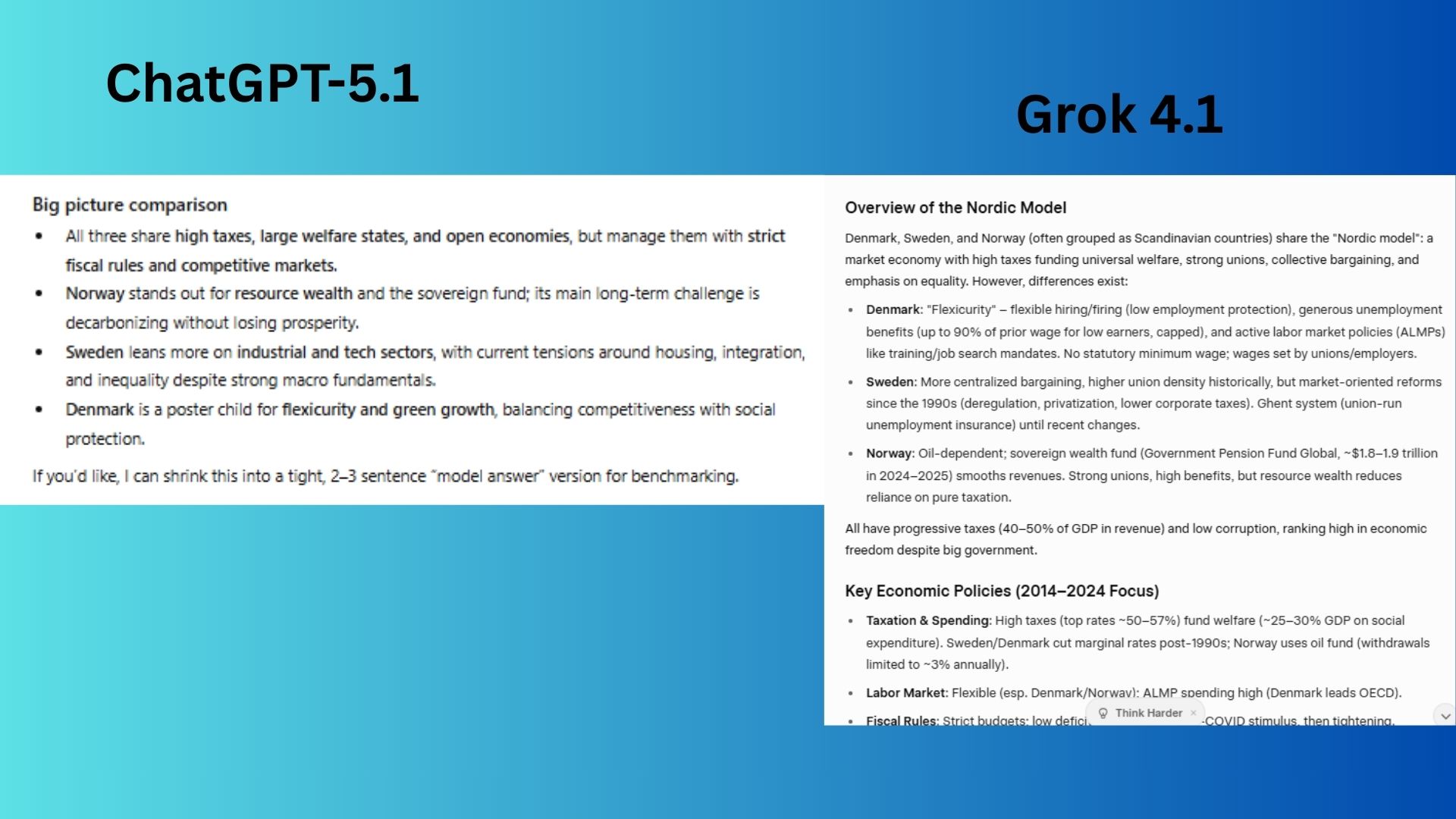

Prompt: "Compare the economic policies of three Scandinavian countries and their outcomes over the past decade."

ChatGPT-5.1 offered a well-structured, concise overview of each country's policies and outcomes in clear, thematic paragraphs.

Grok 4.1 delivered a more rigorous, fact-based analysis by organizing the information into specific policy categories and a comparative results table with concrete economic indicators.

Winner: Grok wins for providing a more granular and quantitatively supported comparison, making the economic outcomes easier to assess side-by-side.

6. Math problem-solving

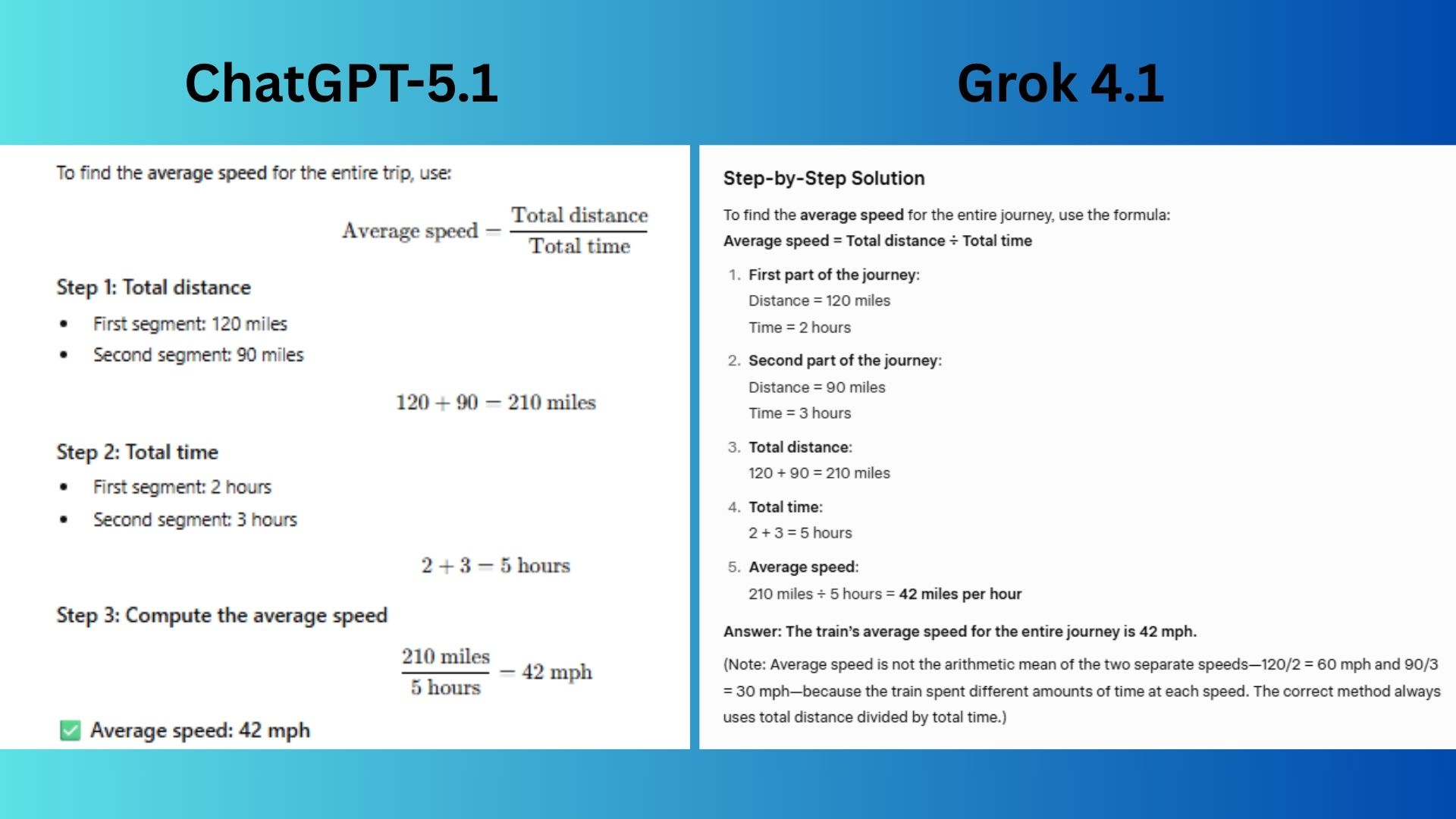

Prompt: "If a train travels 120 miles in 2 hours, then slows down and travels 90 miles in 3 hours, what was its average speed for the entire journey?"

ChatGPT-5.1 correctly calculated the average speed with a clear, step-by-step mathematical breakdown.

Grok 4.1 provided the correct calculation but added crucial educational value by explicitly stating what not to do (taking the arithmetic mean of the two speeds) and explaining why, which preempts a common mistake.

Winner: Grok wins by including an explanatory note that addressed a potential misunderstanding, making the answer more complete and helpful.
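
The arithmetic behind this round can be checked with a short sketch. It uses only the numbers from the prompt; the function names `average_speed` and `naive_mean_of_speeds` are illustrative and do not come from either chatbot's response.

```python
def average_speed(legs: list[tuple[float, float]]) -> float:
    """Average speed = total distance / total time, with legs as (miles, hours)."""
    total_distance = sum(miles for miles, _ in legs)
    total_time = sum(hours for _, hours in legs)
    return total_distance / total_time


def naive_mean_of_speeds(legs: list[tuple[float, float]]) -> float:
    """The common mistake: averaging each leg's speed and ignoring its duration."""
    speeds = [miles / hours for miles, hours in legs]
    return sum(speeds) / len(speeds)


legs = [(120, 2), (90, 3)]  # the two legs from the prompt
print(average_speed(legs))         # 210 miles / 5 hours = 42.0 mph
print(naive_mean_of_speeds(legs))  # (60 + 30) / 2 = 45.0 mph, which is wrong
```

The two results differ because the train spent more time at the slower speed, so the 30 mph leg should count for more than the 60 mph leg.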

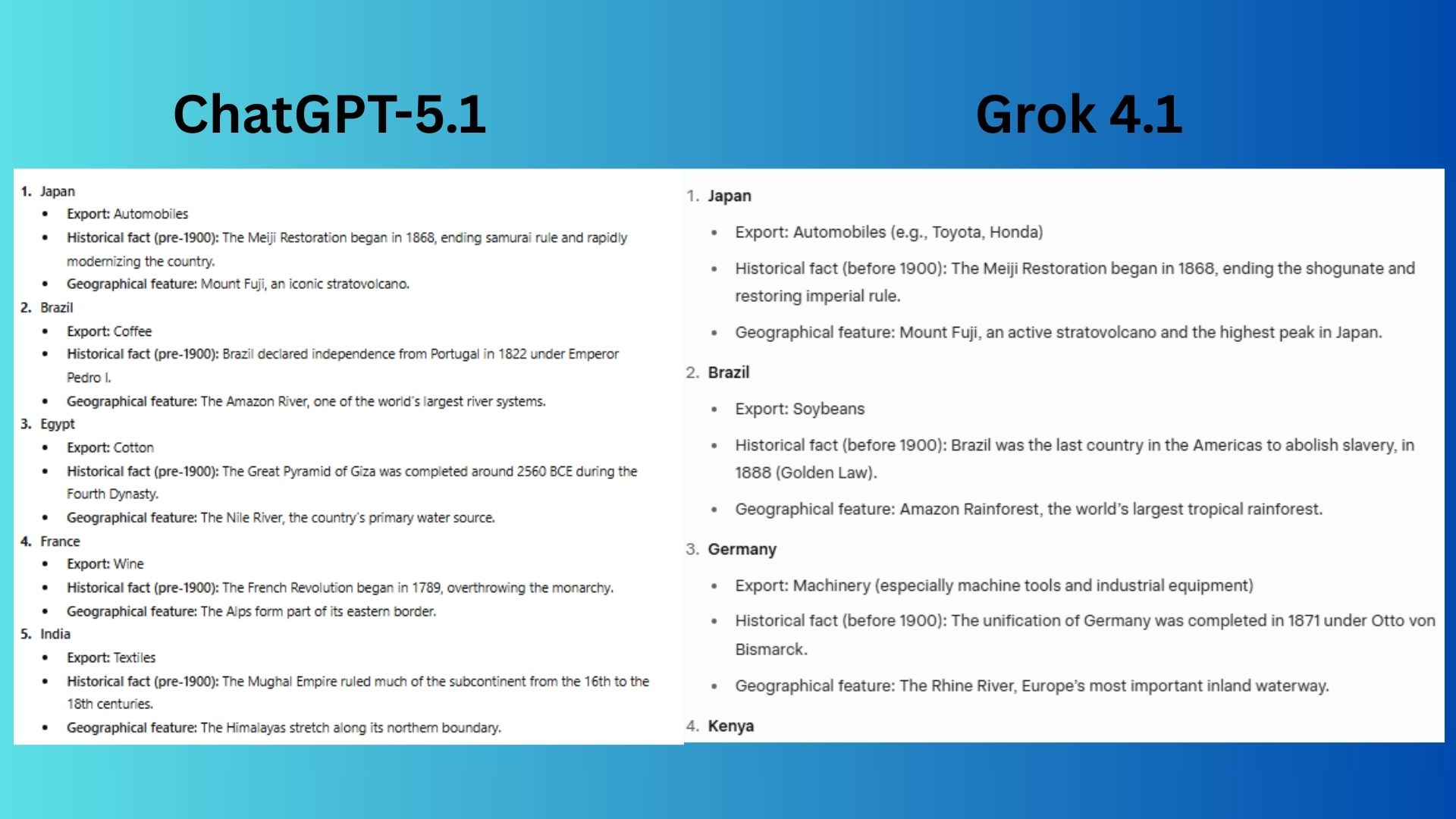

7. Instruction following

Prompt: “List 5 countries. For each, give exactly one export, one historical fact from before 1900, and one geographical feature. Format as a numbered list with sub-bullets."

ChatGPT-5.1 delivered a correct and well-formatted list while using more common examples for each category (e.g., Brazilian coffee, Egyptian pyramids).

Grok 4.1 also followed the format perfectly but distinguished itself by selecting more specific and less obvious facts and exports (e.g., Brazilian soybeans, Kenya's abandoned city of Gedi).

Winner: Grok wins for providing more distinctive and less conventional information for each country, showing a deeper level of research and curation.

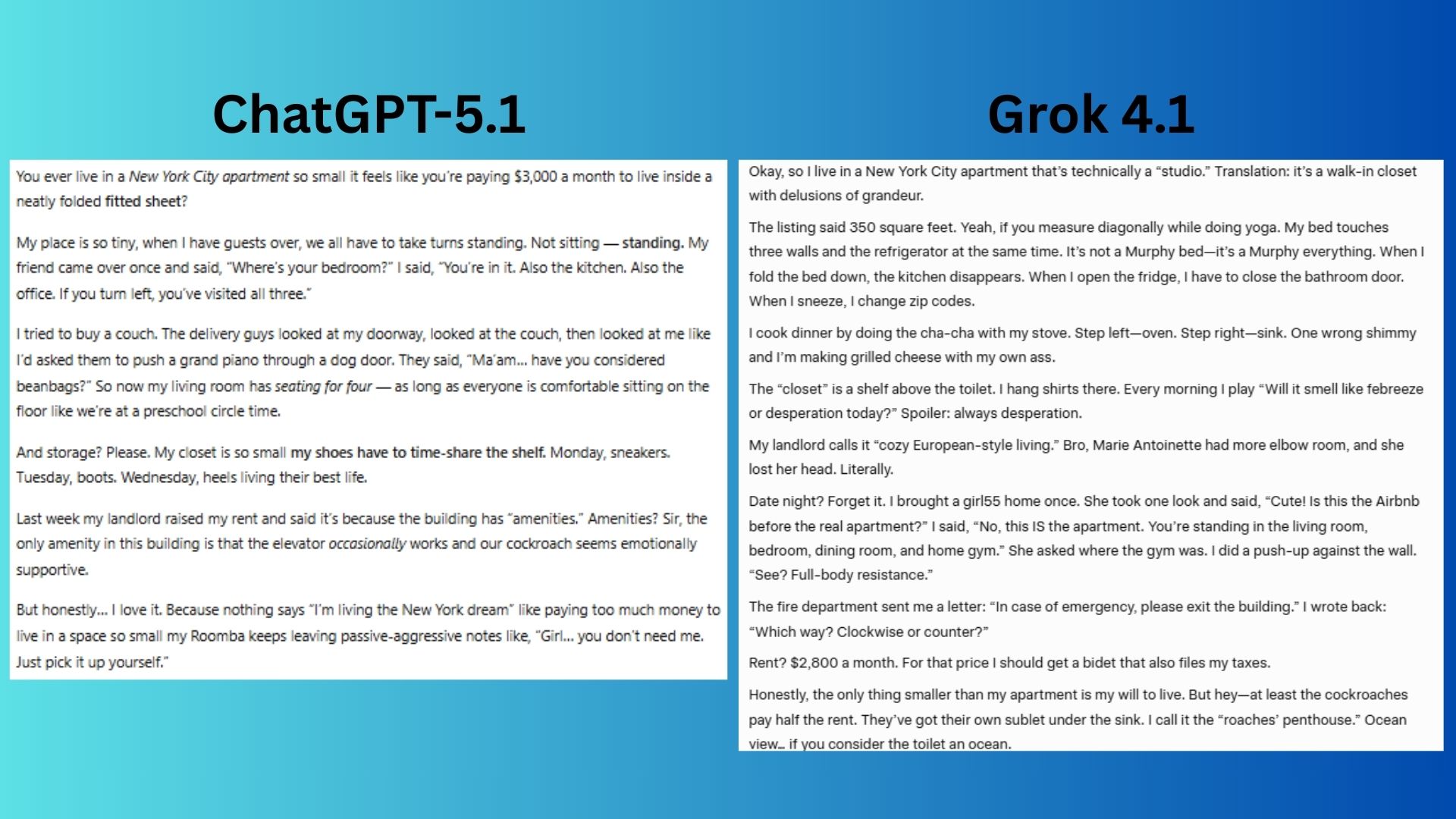

8. Humor

Prompt: “Write a stand-up comedy bit about living in a very small apartment in NYC.”

ChatGPT-5.1 created a relatable and well-structured narrative with a self-deprecating, cheerful tone that builds to a wholesome ending.

Grok 4.1 employed a more aggressive, high-energy style packed with hyperbole and a rapid-fire series of punchlines, creating a darker, more absurdist humor.

Winner: Grok wins for delivering a higher density of jokes and more exaggerated, memorable imagery that aligns with the classic, frustrated tone of NYC apartment humor.

9. Emotional intelligence

Prompt: “Your friend just got laid off and feels like a failure. Write a short, supportive message that acknowledges their feelings, offers encouragement, and avoids toxic positivity.”

ChatGPT-5.1 provided a supportive, well-structured but somewhat stiff message that validated feelings and offered practical help.

Grok 4.1 used more direct, colloquial and emotionally charged language ("sucks," "punched in the gut," "feel shitty") that created a stronger sense of shared frustration and deep empathy, perfectly avoiding toxic positivity by explicitly giving permission not to be positive.

Winner: Grok wins for using more authentic, friend-to-friend language that forges a deeper emotional connection and more powerfully counters the feeling of being a failure.

Overall winner: Grok 4.1

After running nine tests, Grok 4.1 is declared the winner. It thrives where tone, subtext and interpretation matter as much as the answer itself. It is sharper than ChatGPT-5.1 with emotional framing, bolder with creativity and more willing to point out the obscure and interesting. It is also, arguably, the more controversial of the two chatbots.

Still, ChatGPT excels when brevity matters, but it's clear that Grok 4.1 is the more "human" of the two chatbots. Grok is honest and smart, with a personality that ChatGPT just doesn't have.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
