I didn’t expect Pokémon to be an AI testing ground — but here’s what I found

AI can write code and pass exams — but classic Pokémon games are still giving it trouble

At some point in your life, you’ve probably encountered Pokémon — whether you played the games, watched the anime, collected the cards, or just absorbed it through cultural osmosis.

When the original Pokémon games launched in 1996, they sparked a global phenomenon. What followed was an avalanche of spinoffs: a long-running animated series, a massively popular trading card game, mountains of merchandise, and decades of new mainline and experimental titles. Nearly 30 years later, Pokémon hasn’t just endured — it’s thrived.

As of early 2026, the franchise has sold more than 489 million video games worldwide, keeping generations of players engaged with fresh experiences.

But lately, Pokémon has found a surprising new role.

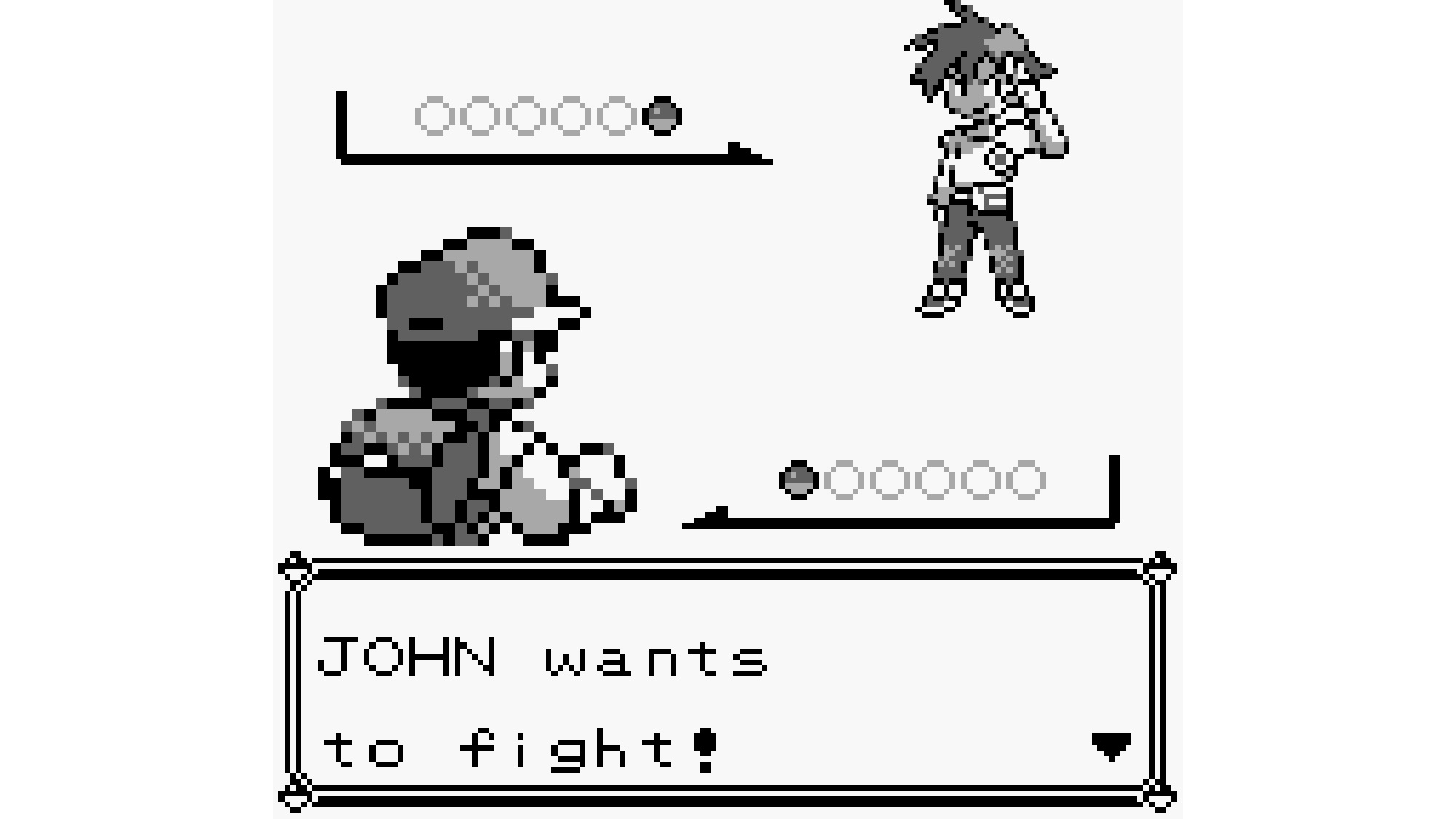

Classic Pokémon games are increasingly being used as benchmark tests for modern AI systems — and not because they’re easy. In fact, some AI models struggle to complete them at all. That difficulty is exactly what makes Pokémon so appealing to researchers: the games combine long-term strategy, uncertainty, memory, planning, and decision-making in ways that still trip up artificial intelligence.

Below, we’ll look at how Pokémon is being used to test AI today — and why this decades-old monster-collecting series remains one of the most effective ways to expose what AI can (and can’t) do.

Three of the world’s leading AI models have been tested against throwback Pokémon games

Head over to Twitch right now to see something fascinating play out: three of the world’s smartest AI models (GPT 5.2, Claude Opus 4.5, and Gemini 3 Pro) have stepped up to the challenge of completing one of Pokémon’s most beloved handheld titles. You’ll quickly discover that those AI systems are not exactly breezing through it, which is amusing, considering that '90s babies (such as myself) can beat these games with our eyes closed.


Some AI systems still struggle to make meaningful progress in classic Pokémon games, often wandering aimlessly or getting stuck early on. For example, Time first reported that earlier Claude models wandered without clear objectives and couldn’t get far beyond the first town of Pokémon Red, highlighting key weaknesses in long-term planning and execution.

In February 2025, Anthropic researcher David Hershey sparked interest in this unconventional benchmark by livestreaming Claude Plays Pokémon Red on Twitch to coincide with the release of Claude 3.7 Sonnet. While that version could engage with the game, it did not complete the classic title, frequently getting stuck for hours at a time.

In contrast, Google’s Gemini 2.5 Pro later succeeded in beating Pokémon Blue in May 2025, navigating the game’s challenges and reaching the ending through a sustained playthrough — though it took significantly more time and steps than the average human player.

Researchers and commentators point to limitations in current AI models’ planning, memory and task execution as reasons for these struggles. Independent AI researcher Peter Whidden has noted that while large models “know almost everything about Pokémon” from training data, executing those plans in a dynamic game environment remains difficult and often clumsy.

Beyond these large language models, other AI approaches have been tested in Pokémon contexts, including specialized bots using Monte Carlo Tree Search (MCTS) and reinforcement learning agents trained over tens of thousands of simulated hours. Each reveals different challenges related to strategy and long-term reasoning.

Many point to the 2014 “Twitch Plays Pokémon” social experiment, where millions of viewers collectively input commands to play Pokémon Red, as an early cultural precursor to using Pokémon as a testbed for collaborative and algorithmic play.

AI researchers recognize retro games as the perfect testbed

To better understand why researchers favor Pokémon as an AI testing tool, I turned to an AI chatbot for help.

I asked Perplexity, “Why is Pokémon so attractive to AI researchers?” It started its explanation by stating that:

“Pokémon is attractive to AI researchers because it’s a rich, controlled environment that naturally tests many of the abilities modern AI systems are still weak at, while remaining safe, well-defined, and easy to measure.”

Three of the bullet points Perplexity raised as central to its answer stood out the most to me:

- Turn-based, not twitch-based: Because battles and overworld movement can be treated step-by-step, researchers can focus on reasoning and strategy rather than reaction time or motor control, which simplifies experiments with language models and planning agents.

- Less constrained than older game benchmarks: Earlier AI game tests used things like Pong or simple Atari games, which are relatively narrow and repetitive. Pokémon is more open-ended: there are many viable paths, team choices, and strategies, which expose more of a model’s strengths and weaknesses.

- Culturally familiar and engaging: Many researchers and users grew up with Pokémon, so they intuitively understand what “playing well” looks like, and livestreams of models playing are fun to watch while still yielding serious evaluation data. That combination of technical usefulness and broad appeal makes Pokémon an unusually attractive testbed.

David Hershey, applied AI lead at Anthropic, spoke to the Wall Street Journal to offer his own explanation as to why the first batch of Pokémon games has become a popular method to test AI. “It provides us with, like, this great way to just see how a model is doing and to evaluate it in a quantitative way,” he explained.
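To make the "step-by-step" and "quantitative" ideas a little more concrete, here is a minimal Python sketch of a turn-based evaluation loop. It is not how any lab actually runs its Pokémon tests. The `ToyCorridor` game, the `run_episode` function and the three policies are all hypothetical names invented for illustration, and the "game" is just a five-tile hallway. The point is only that a turn-based game lets you count the steps a player needs to hit a milestone, or note that it never got there.

```python
from dataclasses import dataclass


@dataclass
class ToyCorridor:
    """Hypothetical stand-in for a game: walk right to reach the last tile."""
    length: int = 5
    position: int = 0

    def observe(self) -> int:
        # The only thing the player sees is its current tile.
        return self.position

    def step(self, action: str) -> None:
        # One discrete turn: move one tile, clamped to the corridor.
        if action == "right":
            self.position = min(self.position + 1, self.length)
        elif action == "left":
            self.position = max(self.position - 1, 0)

    def done(self) -> bool:
        return self.position == self.length


def run_episode(env, policy, max_steps=100):
    """Play turn by turn; return steps used, or None if the cap is reached."""
    steps = 0
    while not env.done() and steps < max_steps:
        env.step(policy(env.observe()))
        steps += 1
    return steps if env.done() else None


def make_wandering_policy():
    """Assumed 'clumsy' player: two steps right, one step left, repeated."""
    pattern = ["right", "right", "left"]
    turn = 0

    def policy(_observation):
        nonlocal turn
        action = pattern[turn % len(pattern)]
        turn += 1
        return action

    return policy


if __name__ == "__main__":
    print("direct:", run_episode(ToyCorridor(), lambda obs: "right"))
    print("wandering:", run_episode(ToyCorridor(), make_wandering_policy()))
    print("stuck:", run_episode(ToyCorridor(), lambda obs: "left"))
```

In this toy setup, the direct player finishes in far fewer steps than the wandering one, and the stuck player never finishes at all. That is the same kind of comparison (steps taken, milestones reached) that makes a full playthrough of a real game measurable.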

The takeaway

AI systems have long been tested on continuous learning, strategic reasoning and planning. Classic board games like chess, plus quintessential video games such as Pong and DOOM, have all served as benchmarks for AI.

The Pokémon games we all know and love have morphed into one of the top testing grounds for AI: a sandbox filled with the sorts of problems researchers present to AI systems to see whether they can measure up to human logic.


Elton Jones is a longtime tech writer with a penchant for producing pieces about video games, mobile devices, headsets and now AI. Since 2011, he has applied his knowledge of those topics to compose in-depth articles for the likes of The Christian Post, Complex, TechRadar, Heavy, ONE37pm and more. Alongside his skillset as a writer and editor, Elton has also lent his talents to the world of podcasting and on-camera interviews.

Elton's curiosities take him to every corner of the web to see what's trending and what's soon to be across the ever evolving technology landscape. With a newfound appreciation for all things AI, Elton hopes to make the most complicated subjects in that area easily understandable for the uninformed and those in the know.
