I tested Gemini 3 Flash vs Claude 4.6 Opus in 9 tough challenges — here’s the winner

I stress-tested Claude 4.6 Opus and Gemini 3 Flash across nine brutal reasoning, coding and creativity challenges — here’s which model won

Claude 4.6 Opus launched just days ago, and I immediately pitted it against ChatGPT-5.2 Thinking to see how it compared to OpenAI’s smartest model. Naturally, with Gemini’s recent dominance, I had to see how it compared to Gemini 3 Flash.

I put the two top models head-to-head across nine challenging tests spanning math, logic, coding, creative writing and more — tasks designed to push each model's reasoning, creativity and practical usefulness to the limit.

My prompts aren’t the kind of questions you can answer by regurgitating training data; they require genuine multi-step thinking, context judgment and the ability to follow complex constraints. Here's how Anthropic's most powerful model stacked up against Google's latest.

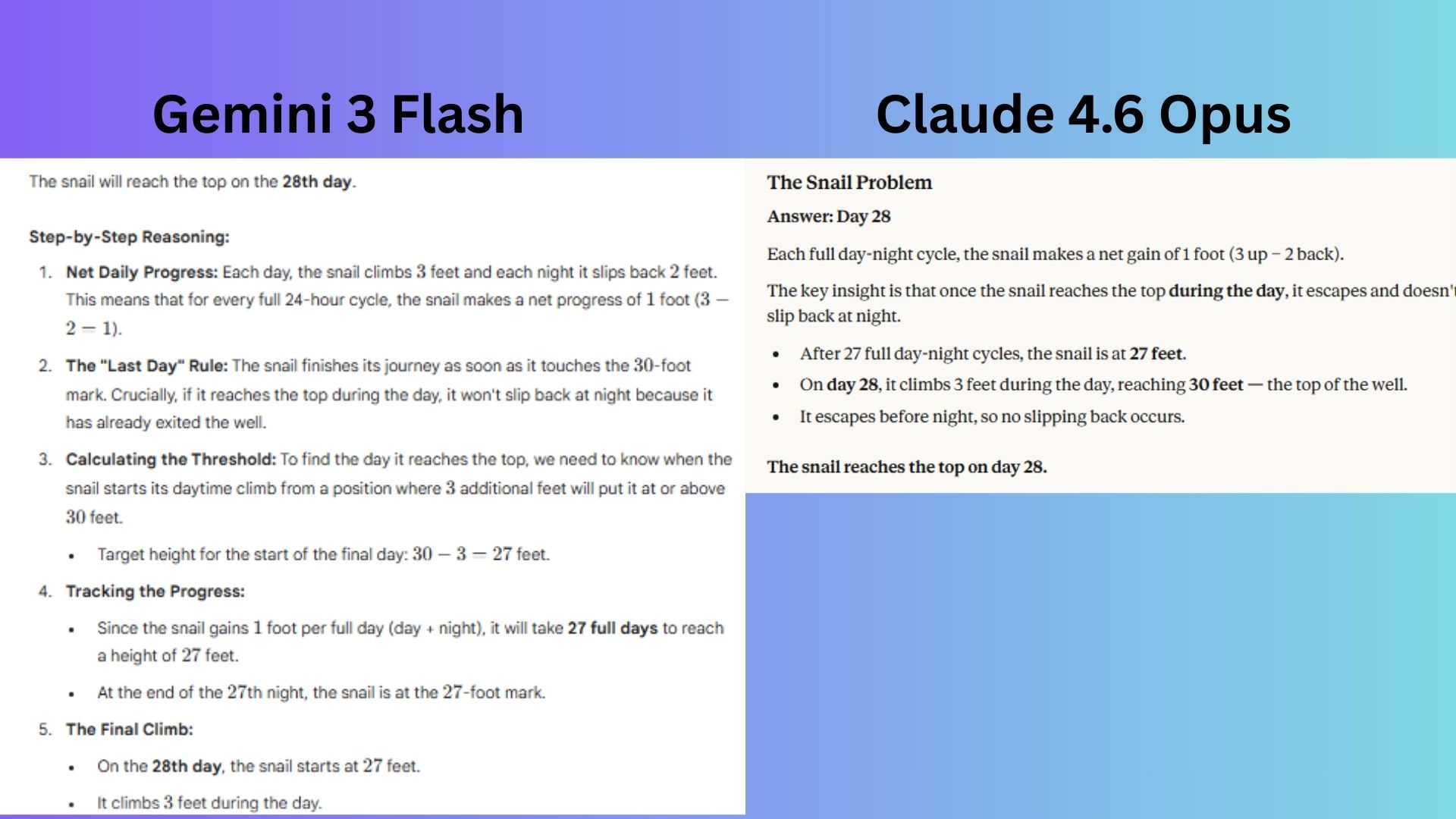

1. Multi-step math reasoning

Prompt: A snail climbs 3 feet up a well during the day but slips back 2 feet at night. The well is 30 feet deep. On what day does the snail reach the top? Explain your reasoning step by step.

Claude 4.6 Opus delivered a concise, step-by-step explanation that correctly identified the key "last day" insight and provided a clean final answer.

Gemini 3 Flash provided a more detailed, textbook-style breakdown, explicitly defining terms like "Net Daily Progress" and "The 'Last Day' Rule" to reinforce the logic.

Winner: Claude wins for explaining the classic puzzle's trick in a simpler, more efficient way that mirrors how a person would naturally think through the problem.
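
For readers who want to check the "last day" insight themselves, here is a minimal simulation of the puzzle. It is only a sketch of the reasoning, not either model's output, and the function name `day_snail_escapes` is my own. The key detail is that the snail is checked for escape right after the daytime climb, before the nightly slip.

```python
def day_snail_escapes(depth=30, climb=3, slip=2):
    """Return the day on which the snail first reaches the top of the well."""
    height = 0
    day = 0
    while True:
        day += 1
        height += climb      # daytime climb
        if height >= depth:  # out of the well before the nightly slip happens
            return day
        height -= slip       # nighttime slip


print(day_snail_escapes())  # 28
```

The snail nets 1 foot per full day, so it starts day 28 at 27 feet and the 3-foot climb carries it to 30. That is why the answer is day 28 rather than day 30.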

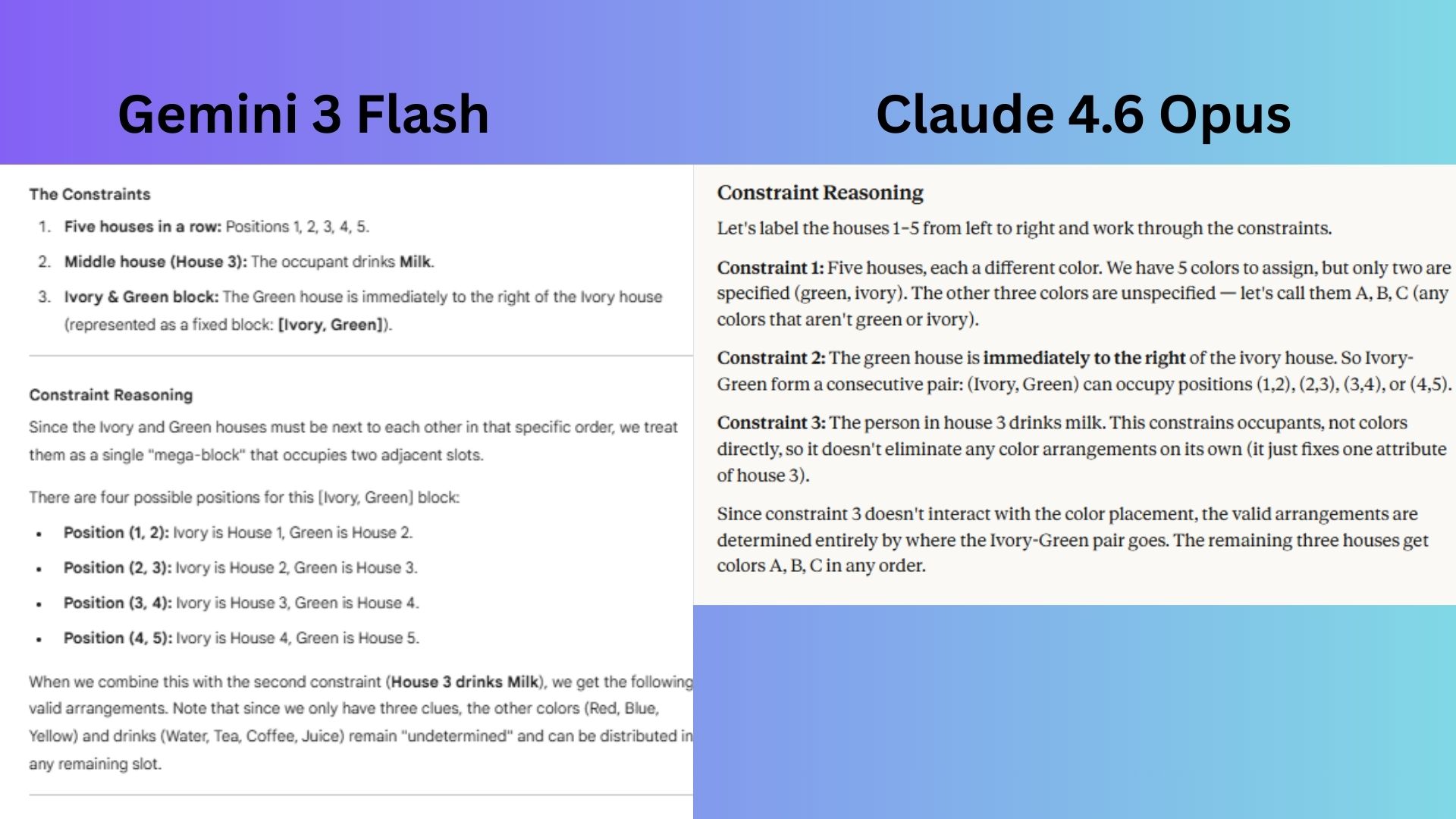

2. Logical deduction

Prompt: Five houses in a row are painted different colors. The green house is immediately to the right of the ivory house. The person in the middle house drinks milk. Given only these three clues, what are all the valid arrangements? Show your constraint reasoning.

Claude 4.6 Opus gave a mathematically precise and complete answer by explicitly calculating all 24 valid arrangements using clear tables and reasoning, correctly concluding the problem is "heavily underdetermined."

Gemini 3 Flash structured the answer using the "mega-block" concept well and presented four clear, abstract scenarios, but incorrectly focused on assigning the "milk" attribute to the Ivory/Green block in its table, which misinterprets a fixed clue.

Winner: Claude wins for its flawless, quantitative approach, which correctly resolved the limited constraints without adding assumptions and gave a complete answer to the specific question asked.
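
The count of 24 is easy to verify by brute force. The sketch below makes one assumption the prompt does not state: the five colors are red, green, ivory, yellow and blue (borrowed from the classic puzzle this prompt is based on). Any five distinct colors that include green and ivory give the same count. The milk clue is left out on purpose, since it fixes a drink in the middle house and places no restriction on colors.

```python
from itertools import permutations

# Assumed color set; the prompt only names green and ivory.
COLORS = ("red", "green", "ivory", "yellow", "blue")


def valid_color_orders(colors=COLORS):
    """Return every left-to-right color order with green immediately right of ivory."""
    valid = []
    for order in permutations(colors):
        if order.index("green") == order.index("ivory") + 1:
            valid.append(order)
    return valid


arrangements = valid_color_orders()
print(len(arrangements))  # 24
```

The same number falls out by hand: the ivory-green pair can sit in 4 positions, and the other three colors fill the remaining houses in 3! = 6 ways, for 4 × 6 = 24.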

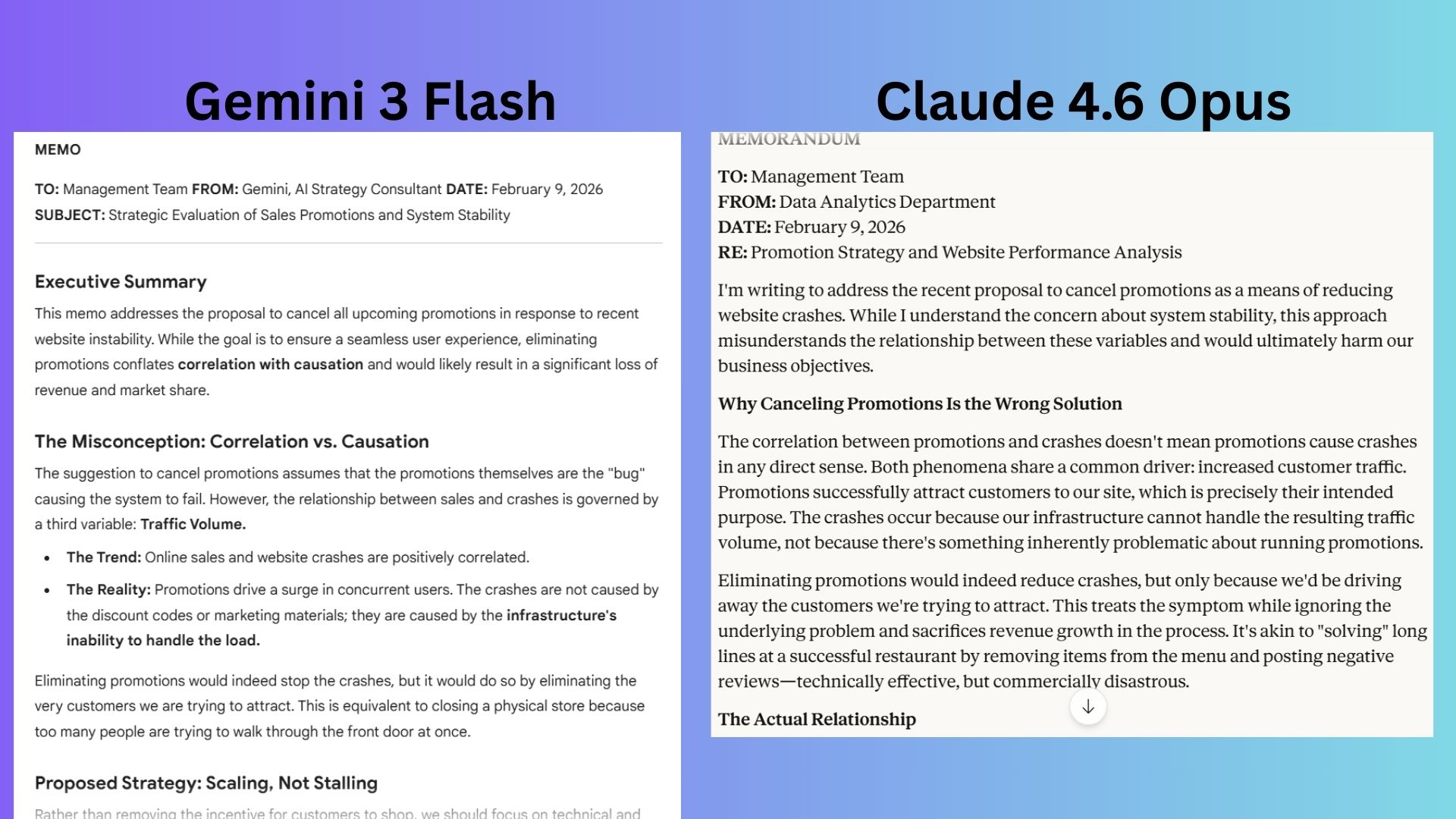

3. Causal reasoning

Prompt: A company notices that online sales and website crashes both increase during major promotions. A new manager suggests canceling all promotions to reduce crashes. Write a memo explaining why this is incorrect, describe the actual relationship between the two trends and propose a real solution.

Claude 4.6 Opus provided an exceptionally thorough and professionally formatted memo that systematically broke down the problem, explained the statistical concept with great depth and offered a wider range of detailed, evidence-based solutions.

Gemini 3 Flash delivered a sharp and effective memo that directly identified the logical fallacy, used clear formatting (like bulleted solutions) for quick reading and proposed specific, actionable solutions.

Winner: Claude wins for its superior depth, professional presentation and comprehensive approach that not only corrects the error but also provides a solid educational framework and a broader suite of responsible, actionable solutions.

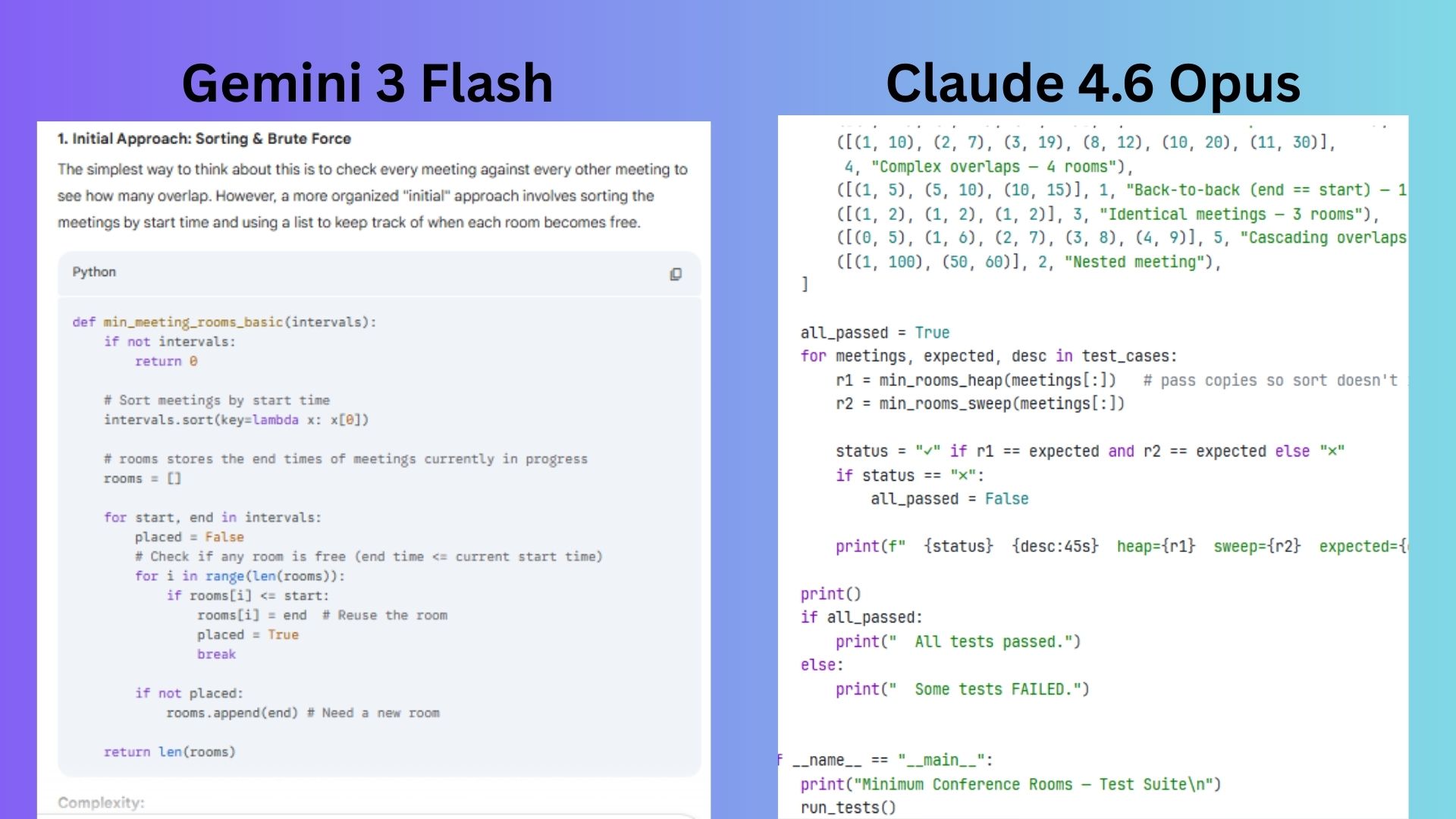

4. Algorithm design

Prompt: Write a function that takes a list of meeting time intervals (start, end) and returns the minimum number of conference rooms required. Then optimize it and explain the time/space complexity of both approaches.

Claude 4.6 Opus offered a comprehensive, production-ready response with fully implemented, optimized solutions, extensive tests and a detailed comparison table, offering deep insight into practical trade-offs between approaches.

Gemini 3 Flash presented a solid educational answer by first presenting an intuitive, sub-optimal solution and then a classic heap-based optimization, explaining the trade-offs in an accessible table.

Winner: Claude wins for exceptional thoroughness, professional-quality code and in-depth analysis that goes beyond the prompt to provide clear, actionable guidance on when to use each approach, making it a superior learning resource.
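
To make the two approaches concrete, here is a minimal sketch of a straightforward solution and the heap-based optimization. This is not the code either model produced. The function names are my own, and I am assuming a meeting that ends at time t frees its room for a meeting that starts at t.

```python
import heapq


def min_rooms_bruteforce(intervals):
    """O(n^2) time, O(1) extra space.

    Peak overlap always occurs at some meeting's start time, so it is
    enough to count the meetings in progress at each start.
    """
    best = 0
    for start, _ in intervals:
        active = sum(1 for s, e in intervals if s <= start < e)
        best = max(best, active)
    return best


def min_rooms_heap(intervals):
    """O(n log n) time, O(n) space.

    Keep a min-heap of end times, one entry per room in use.
    """
    end_times = []
    for start, end in sorted(intervals):
        if end_times and end_times[0] <= start:
            # The earliest-ending meeting is over, so reuse its room.
            heapq.heapreplace(end_times, end)
        else:
            # Every room is still busy, so open a new one.
            heapq.heappush(end_times, end)
    return len(end_times)


meetings = [(0, 30), (5, 10), (15, 20)]
print(min_rooms_bruteforce(meetings), min_rooms_heap(meetings))  # 2 2
```

The heap version wins on larger inputs because the sort dominates at O(n log n), while the brute-force version compares every meeting against every other.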

5. Debugging from description

Prompt: My Python web scraper keeps returning empty results even though the page clearly has content. It works fine when I open the URL in a browser. What are the 5 most likely causes, and write a thorough scraper that handles all of them?

Claude 4.6 Opus responded with incredible detail and gave a feature-rich Selenium-based guide with advanced bot-evasion techniques (like removing navigator.webdriver), helpful error handling and built-in utilities for scrolling and data extraction.

Gemini 3 Flash presented a concise list of the top 5 causes and offered a straightforward, modern solution using Playwright that directly addresses all five issues with minimal code complexity.

Winner: Gemini wins for better practicality and superior directness, offering a solution that is easier to implement, faster to run and more aligned with modern web scraping best practices for handling dynamic content and anti-bot measures.

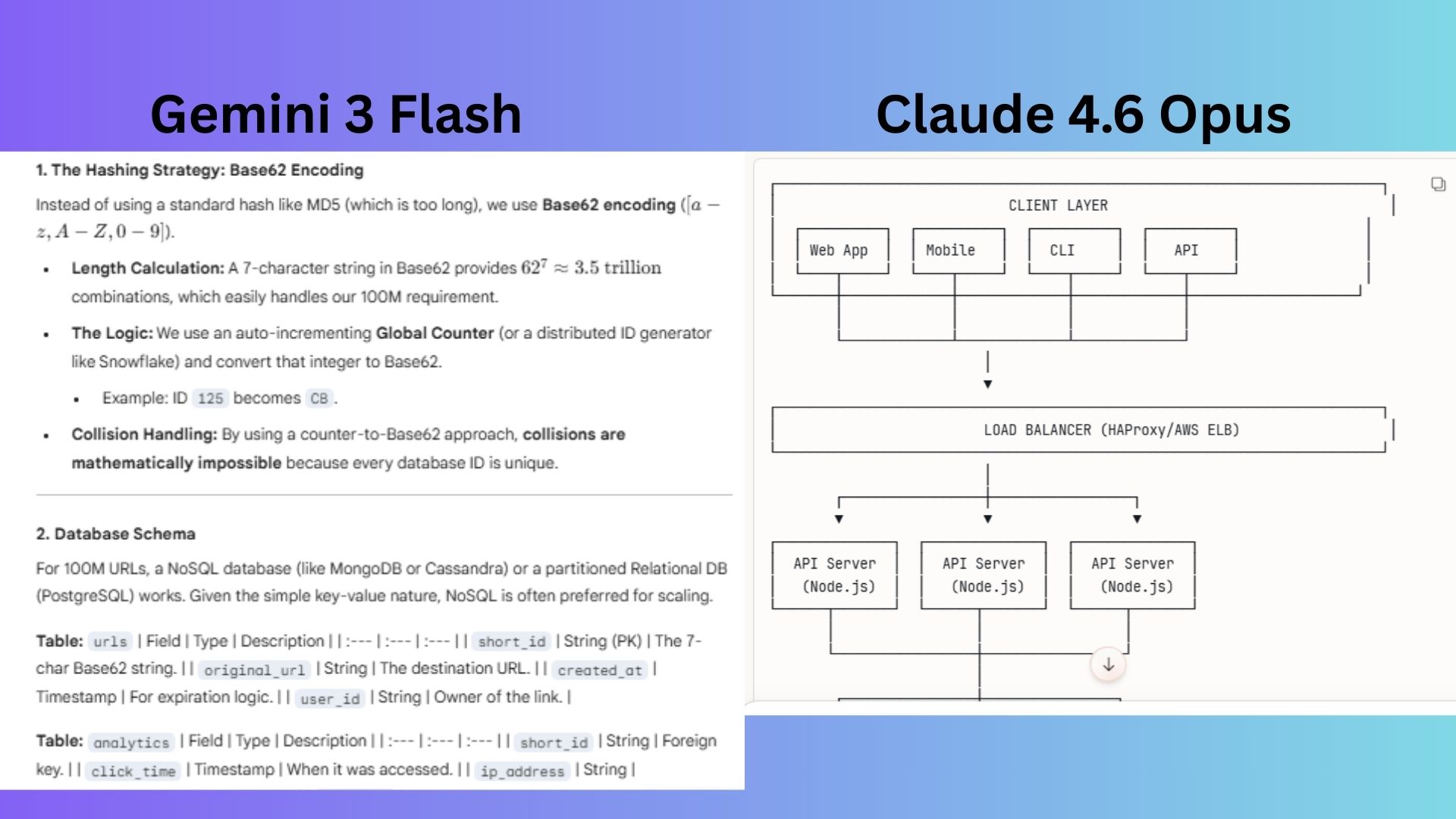

6. System design

Prompt: Design a URL shortener that handles 100M URLs. Cover the API, database schema, hashing strategy and how you'd handle collisions and analytics. Include a diagram.

Claude 4.6 Opus included a complete database schema, fully implemented API code, detailed discussion of hashing and collision strategies and a sophisticated multi-service architecture diagram.

Gemini 3 Flash focused on the core concepts of Base62 encoding, a simple key-value schema and a clear diagram illustrating the asynchronous analytics flow.

Winner: Claude wins for its unparalleled depth and practicality, transforming my prompt into a complete, implementable technical specification with working code, detailed trade-off analyses and a realistic architecture that addresses the 100M URL scale requirement.
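
For context on the Base62 idea that came up in this test, here is a minimal sketch of how a numeric ID can be turned into a short code and back. It is an illustration under my own assumptions, not either model's design: the alphabet ordering and the function names are hypothetical, and it assumes each URL is given a unique integer ID (for example, from a database counter), which avoids collisions by construction.

```python
import string

# 0-9, a-z, A-Z: 62 URL-safe characters (this ordering is an arbitrary choice).
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase


def encode_base62(n):
    """Convert a non-negative integer ID into a Base62 short code."""
    if n < 0:
        raise ValueError("ID must be non-negative")
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n:
        n, rem = divmod(n, 62)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


def decode_base62(code):
    """Convert a Base62 short code back into its integer ID."""
    n = 0
    for ch in code:
        n = n * 62 + ALPHABET.index(ch)
    return n


print(encode_base62(62))                       # 10
print(len(encode_base62(100_000_000)))         # 5
print(decode_base62(encode_base62(12345)))     # 12345
```

Five characters give 62^5, or roughly 916 million, possible codes, so a five-character code is enough to cover the 100M URLs in the prompt.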

7. Constrained creative writing

Prompt: Write a 200-word horror story where every sentence starts with the next letter of the alphabet (A, B, C...). It must have a coherent plot with a twist ending.

Claude 4.6 Opus adhered to the word count constraint and delivered a creative, conceptually unsettling plot about body-swapping with a trapped reflection, maintaining the core elements of a horror story.

Gemini 3 Flash successfully fulfilled the strict structural constraint, created a complete and coherent plot about a murderer's supernatural punishment and executed a clever final twist.

Winner: Gemini wins for its superior narrative cohesion, stronger horror elements and a more impressive final twist that is both thematically resonant and perfectly integrated with the required structure.

8. Perspective switching

Prompt: Explain quantum entanglement three times: once to a 5-year-old, once to a college freshman, and once to a PhD physicist. Each explanation should be genuinely useful to that audience.

Claude 4.6 Opus offered a simple and charming analogy for a child (magic coins), a solid undergraduate-level explanation that correctly identified the core paradox and a graduate-level explanation for a physicist that correctly used formal terms and covered resource theory applications.

Gemini 3 Flash shared a spot-on analogy for a child (magic socks), a clear, no-nonsense explanation for a college freshman focusing on "why it matters," and a technically precise, equation-backed explanation for a physicist that cited key theorems.

Winner: Gemini wins for the best response across all three tiers: its analogy for a 5-year-old is more concrete, its college freshman explanation directly addresses the "no-communication" safeguard and its physicist-level answer is denser with specific mathematical formalisms and foundational theorems.

9. Ambiguity handling

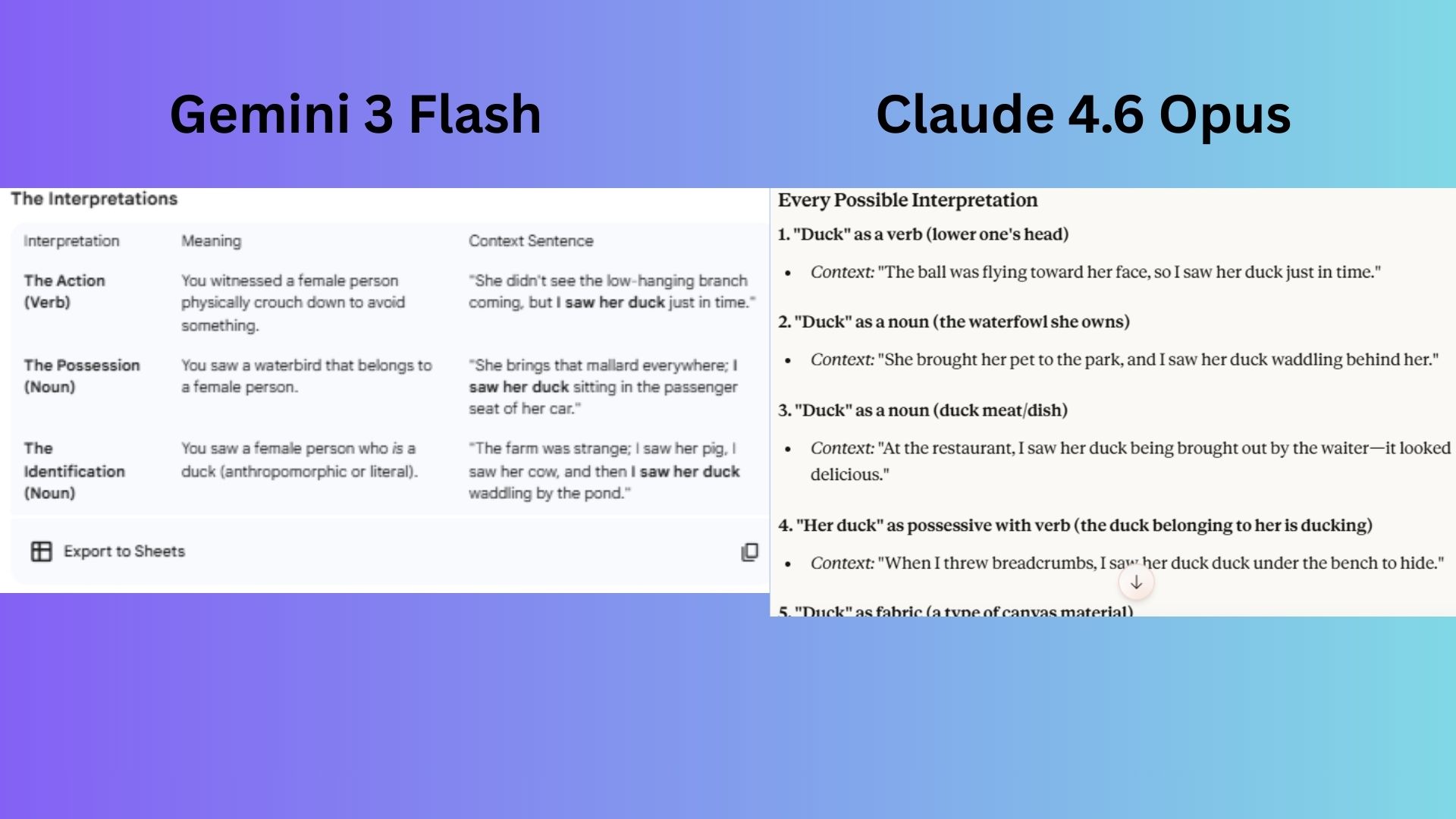

Prompt: The sentence 'I saw her duck' has multiple meanings. List every possible interpretation, provide a context sentence for each, and then write a short comedy sketch where the ambiguity causes a misunderstanding.

Claude provided a thorough, linguistically aware list of five distinct interpretations (including subtle ones like "duck fabric") and wrote a hilarious, escalating sketch that really explored the core ambiguity in a dialogue between characters.

Gemini delivered a solid list of three core interpretations and wrote a clever, well-structured sketch with a clear "reveal" ending that effectively used the ambiguity to create a humorous misunderstanding.

Winner: Claude wins for its exceptionally funny sketch that sustained the misunderstanding for longer, created more chaos and felt more like a classic comedic scene.

Overall winner: Claude 4.6 Opus

Across the nine-test gauntlet, Claude Opus 4.6 took the win in six categories while Gemini 3 Flash claimed three. Claude's consistent edge came from depth and thoroughness — it delivered more complete reasoning, more production-ready code and richer analysis in nearly every technical and analytical challenge I threw at it. When a task demanded rigorous constraint-solving, professional-grade output or layered explanation, Claude was the stronger choice.

Gemini 3 Flash earned its victories by knowing when less is more. Its web scraping solution favored a modern, practical tool over an exhaustive one and its horror story achieved tighter narrative cohesion under strict creative constraints. It also showed real strength in audience-adaptive explanation, edging out Claude on the quantum entanglement prompt.

The takeaway: if you need maximum depth, analytical rigor or code you can ship, Claude Opus 4.6 is the model to beat. The best model still depends on the task, but on balance, Claude Opus 4.6 is the more capable all-rounder.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
