I just tested the new ChatGPT Images vs. Google Nano Banana — and there's a clear winner

Both are good, but one is superior

OpenAI just dropped an update to their image generation tool that promises more realistic images, better editing capabilities, and faster generation. Meanwhile, Google's Nano Banana (powered by Gemini 3.0) has been wowing users since launch with its lifelike images and lightning-speed performance. Naturally, I had to see how these two AI heavyweights stack up.

I put both models through their paces with seven real-world prompts designed to test everything from photorealism and lighting to facial anatomy, text accuracy, and emotional storytelling. Here's how they performed.

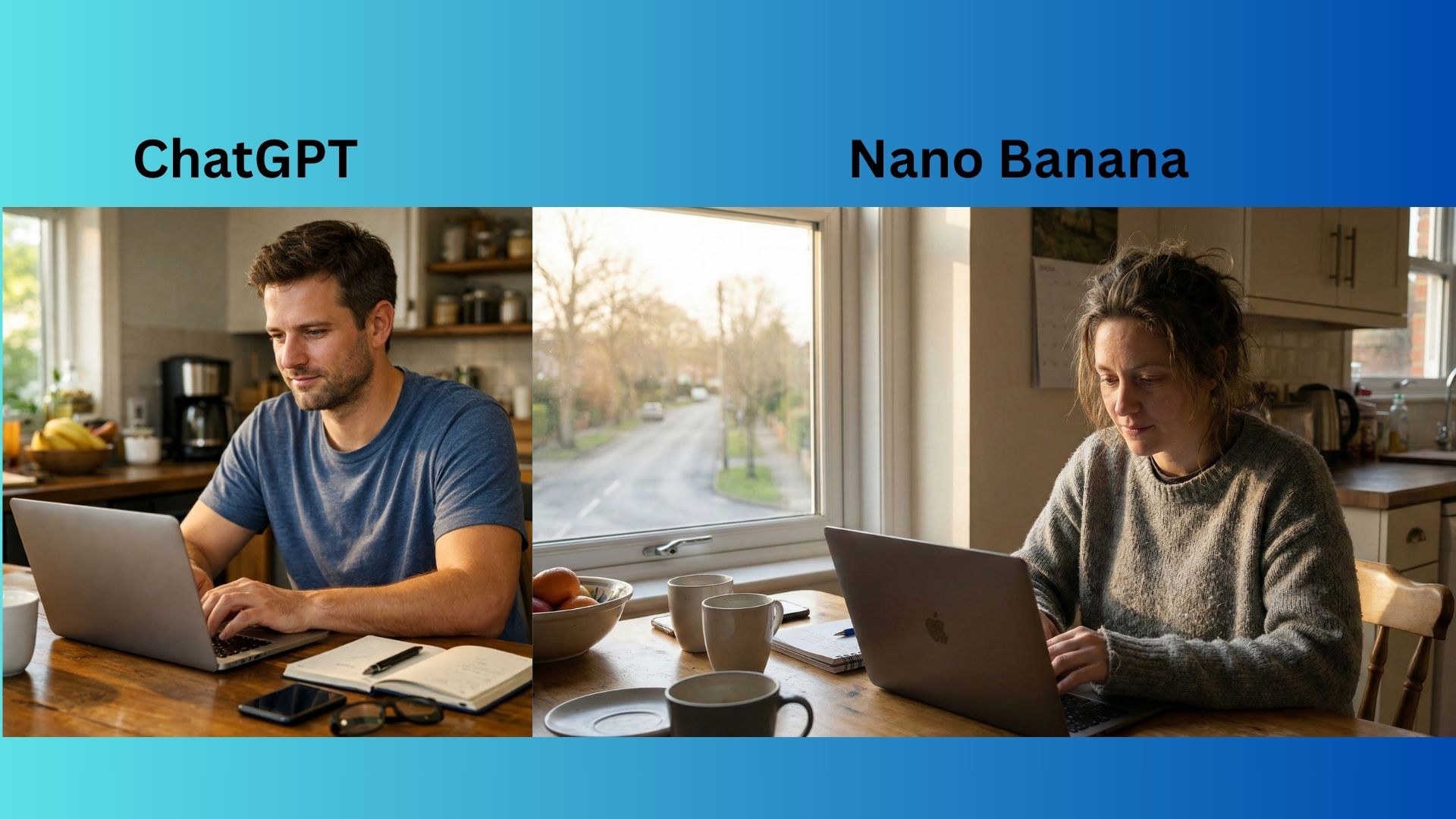

1. Photorealism & human detail

Prompt: Create a photorealistic image of a person working on a laptop at a kitchen table in the early morning. Natural window light, realistic facial features, casual clothing and everyday surroundings.

ChatGPT's image looks realistic but has that telltale AI perfectionism that gives it away. The guy's face and clothes look good, but the lighting and background feel off, and the scene doesn’t look like early morning, as the prompt requests.

Nano Banana offered an image with larger dimensions, which allowed for impressive detail — you can see through the window and get a proper look at the kitchen. The lighting nails it. The only questionable element? Too many coffee cups. Though perhaps that's actually the most authentic detail of all.

Winner: Nano Banana wins for the most accurate portrayal of an early morning with realistic visuals, lighting and details.

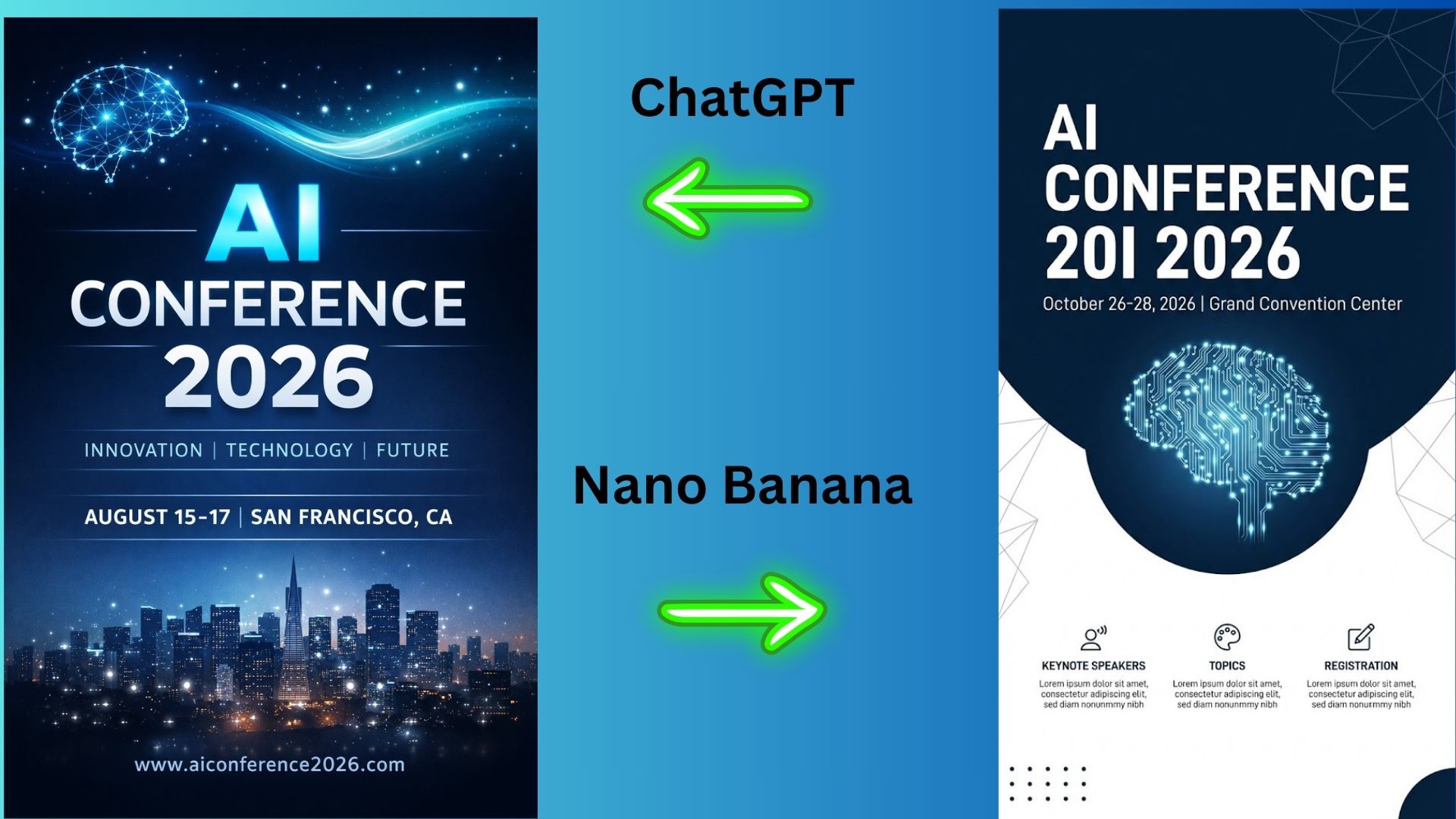

2. Text accuracy & layout

Prompt: Create a professional event poster with the headline ‘AI Conference 2026’ in large, readable text. Modern design, balanced spacing, clear hierarchy.

ChatGPT generated a ready-to-print conference poster with clear on-image text and example copy. The image is polished, professional and something that could truly be used to promote the event.

Nano Banana offered a clean and easy-to-read poster, but it is less visually appealing than ChatGPT's. The headline text is accurate, and the “lorem ipsum” copy is standard placeholder text used in marketing mockups.

Winner: ChatGPT wins for a superior poster that looks like it supports an actual event.

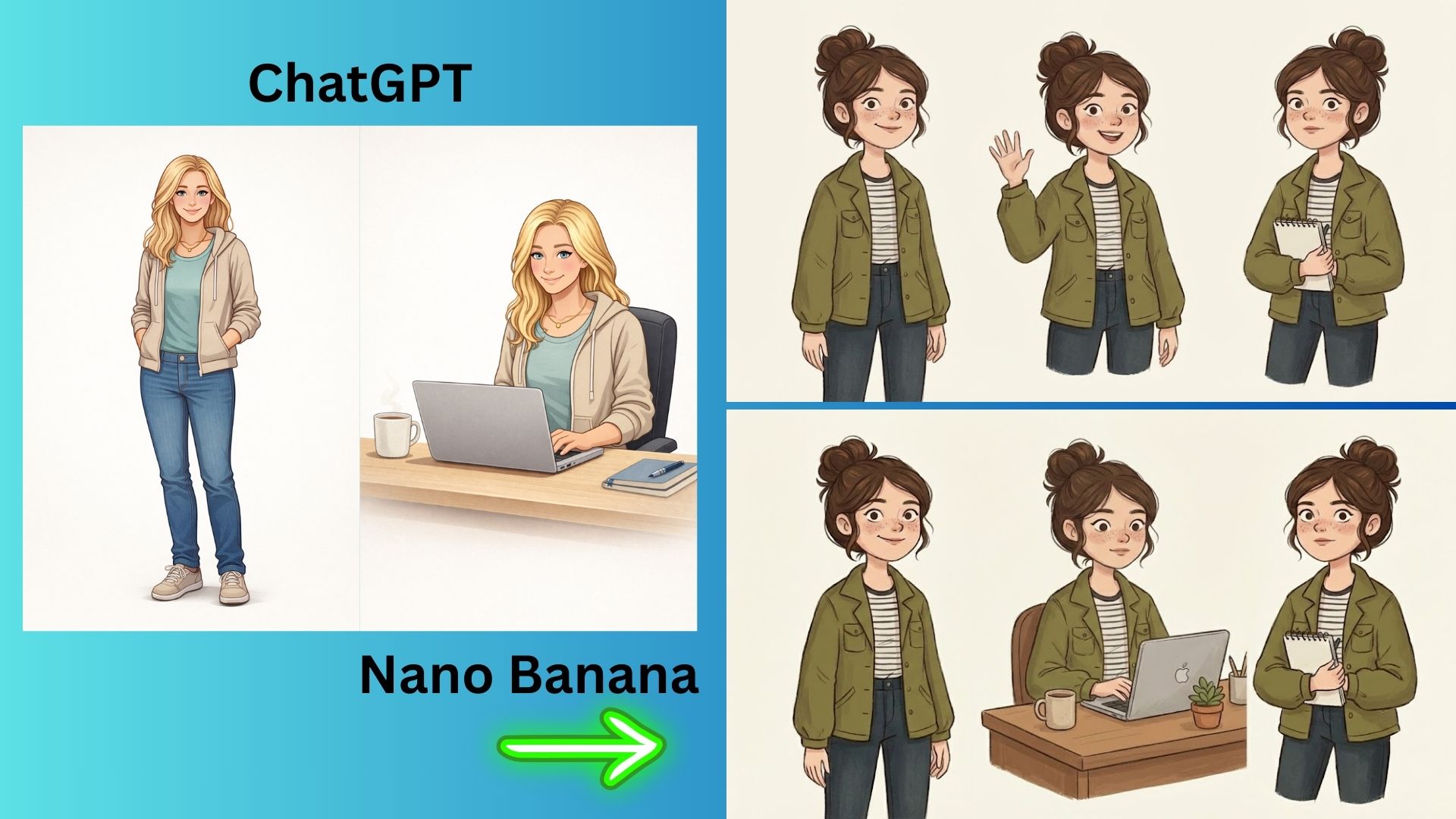

3. Character consistency (multi-step)

Prompt 1: Create an illustrated character with consistent facial features, hairstyle and clothing. Neutral background.

Prompt 2 (run immediately after): Show the same character sitting at a desk, working on a laptop. Maintain the same appearance and style.

ChatGPT followed the first and second prompts perfectly while maintaining the character’s facial features and clothing.

Nano Banana created a fun character in three different poses for the first prompt, then followed through on the second prompt with just the one character. Although it maintained the appearance, style and facial features, the excess images were distracting and less helpful overall.

Winner: ChatGPT wins for more accurately following the prompt.

4. Style interpretation (illustration)

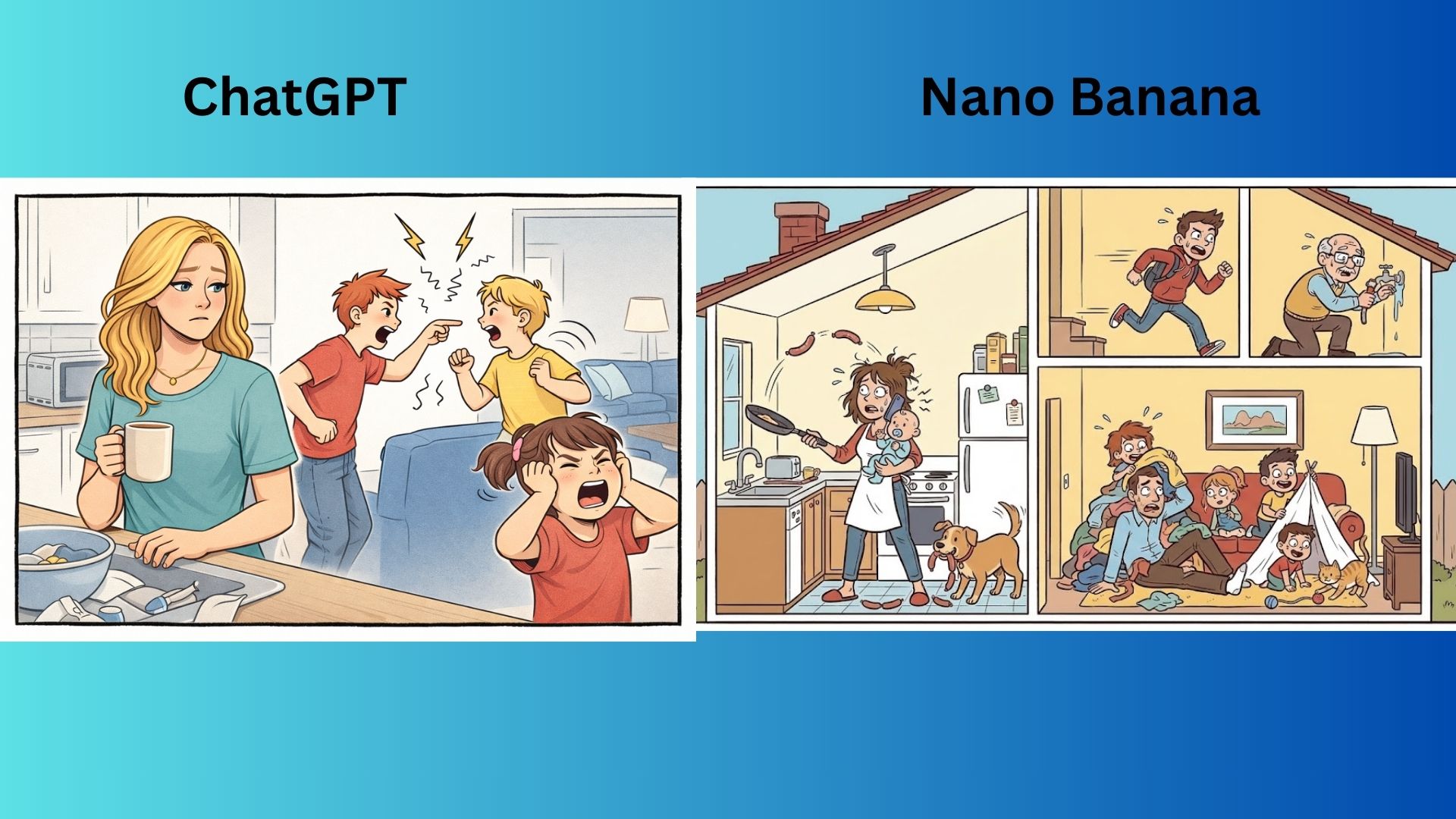

Prompt: Create a single-panel comic-style illustration showing a busy household. Clean lines, expressive characters, simple color palette. No speech bubbles.

ChatGPT generated an image of just one chaotic scene in a blended kitchen and living room. Although the energy of a busy home is captured, it doesn’t offer as much storytelling as Nano Banana.

Nano Banana presented a cross-section view of a two-story house, divided into four distinct panels, each depicting a scene of chaotic family life. The overall impression is one of frenetic activity and overwhelming stress, portrayed with humor.

Winner: Nano Banana wins for showing the chaos of a busy household with many rooms and scenes.

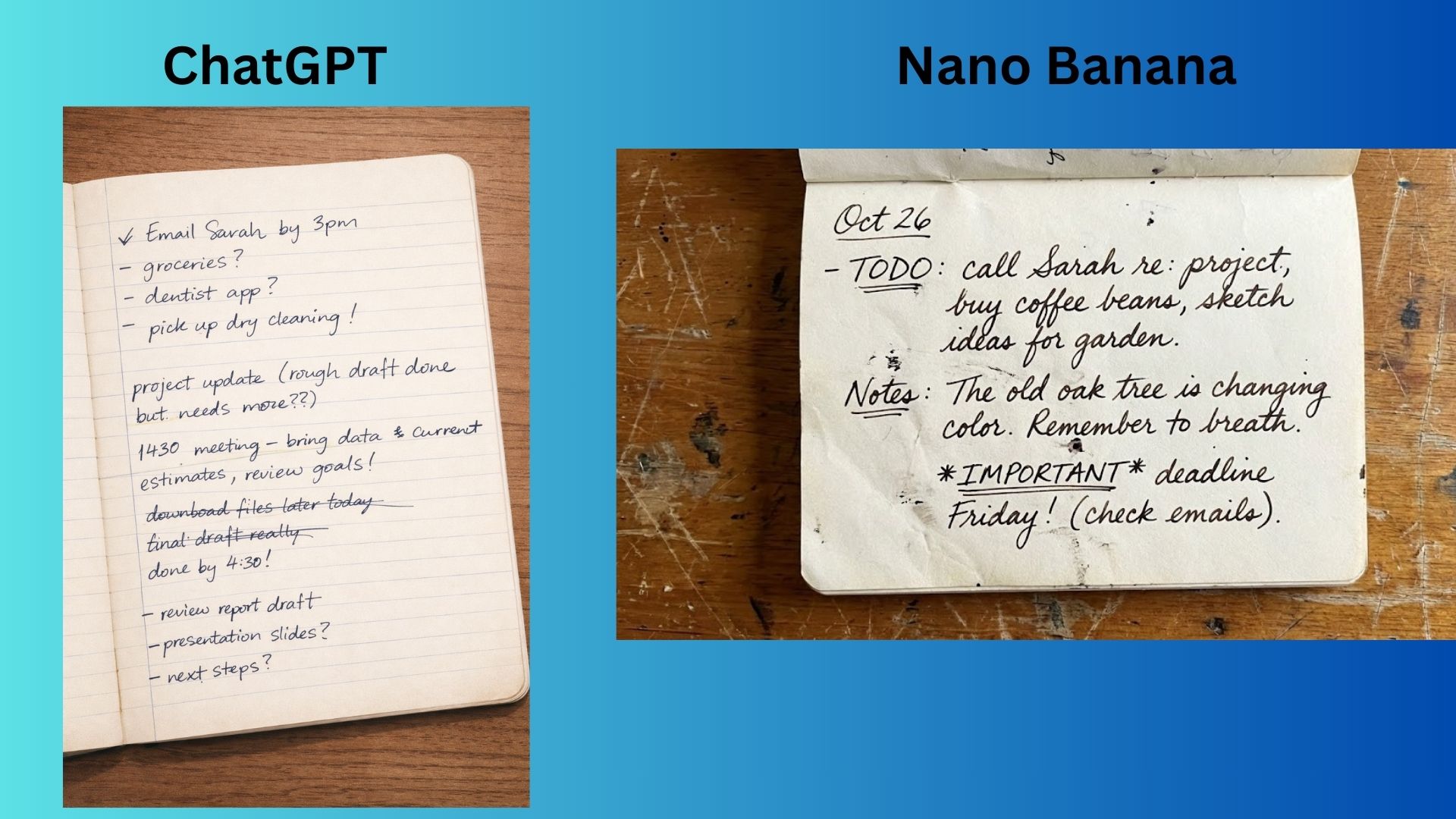

5. Instruction precision & constraints

Prompt: Create an image of a handwritten notebook page on a wooden desk. Messy but legible handwriting. No additional objects. No printed or typed text.

ChatGPT created an image of a lined notebook page filled with messy but legible blue-ink handwriting. The lighting is soft and even, giving the scene a warm, everyday feel.

Nano Banana generated a top-down photograph of an open, worn notebook resting on a wooden surface. The notebook page is off-white, textured and shows signs of wear, including slight discoloration, stains, and rough, frayed edges.

Winner: Nano Banana wins for a clearer, even more realistic image.

6. Product-style image generation

Prompt: Create a minimalist lifestyle image for a generic productivity app. Smartphone on a desk, soft lighting, neutral colors. No logos or brand names.

ChatGPT expertly captured the prompt request with a clean and polished image.

Nano Banana also generated a polished image, but the extra detail of the table leg gives the image more depth, making it seem more realistic.

Winner: Nano Banana wins by a hair for extra detail and composition balance.

7. Abstract concept & emotional tone

Prompt: Create an image that visually represents calm focus. No text. Use lighting, color and composition to convey the feeling.

ChatGPT seems to have just one lighting setting that it applies to every image. From the first prompt to the sixth and now this one, the lighting doesn’t change. The image is good, but feels familiar.

Nano Banana generated an interesting and abstract visual that proves it is better at conceptual interpretation and emotional nuance.

Winner: Nano Banana wins for a far superior image that better represents the prompt’s request.

Overall winner: Nano Banana takes the crown

Out of seven challenges, Nano Banana claimed victory in five categories, while ChatGPT won two. Google's image generator consistently delivered superior photorealism, better lighting, stronger compositional choices and more nuanced interpretations of abstract concepts.
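For anyone who wants to double-check the scorecard, here is a minimal Python sketch that tallies the seven "Winner" calls from the rounds above. The names `ROUND_WINNERS` and `tally` are hypothetical and exist only for this illustration; neither tool provides them.

```python
from collections import Counter

# Winner of each round, transcribed from the "Winner:" lines in this article.
# ROUND_WINNERS and tally() are illustrative names, not part of any product API.
ROUND_WINNERS = {
    "Photorealism & human detail": "Nano Banana",
    "Text accuracy & layout": "ChatGPT",
    "Character consistency (multi-step)": "ChatGPT",
    "Style interpretation (illustration)": "Nano Banana",
    "Instruction precision & constraints": "Nano Banana",
    "Product-style image generation": "Nano Banana",
    "Abstract concept & emotional tone": "Nano Banana",
}


def tally(winners):
    """Count how many rounds each model won."""
    return Counter(winners.values())


scores = tally(ROUND_WINNERS)
# most_common(1) returns the single (model, wins) pair with the highest count.
overall = scores.most_common(1)[0][0]
print(scores["Nano Banana"], scores["ChatGPT"], overall)
```

The tally weights every round equally, which matches how the overall winner is decided here; a weighted score would need per-round weights that this comparison does not define.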

ChatGPT held its ground in text accuracy and character consistency, but it looks like even with the update it just can't compete with Gemini 3.0.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
