I tested ChatGPT-5.2 and Claude Opus 4.5 with real-life prompts — here’s the clear winner

These smart chatbots face off with real-world scenarios and one consistently responded better

Most AI comparisons focus on benchmarks, hallucination rates or which model “sounds smarter.” But that’s not how most people actually use chatbots. In real life, we turn to AI because we have a specific problem and need help finding the answers. It's these high-friction moments when intelligence, reasoning and cleverness truly matter.

For that reason, I tested OpenAI's newest model, ChatGPT-5.2, against Opus 4.5, Anthropic's smartest model for the most complex tasks. I put them through a more realistic stress test: seven prompts based on situations people genuinely bring to AI every day, from friendship conflicts and health decisions to coding philosophy, tech and creative ambition under pressure.

Here’s how each model handled the prompts and where each one clearly pulled ahead.

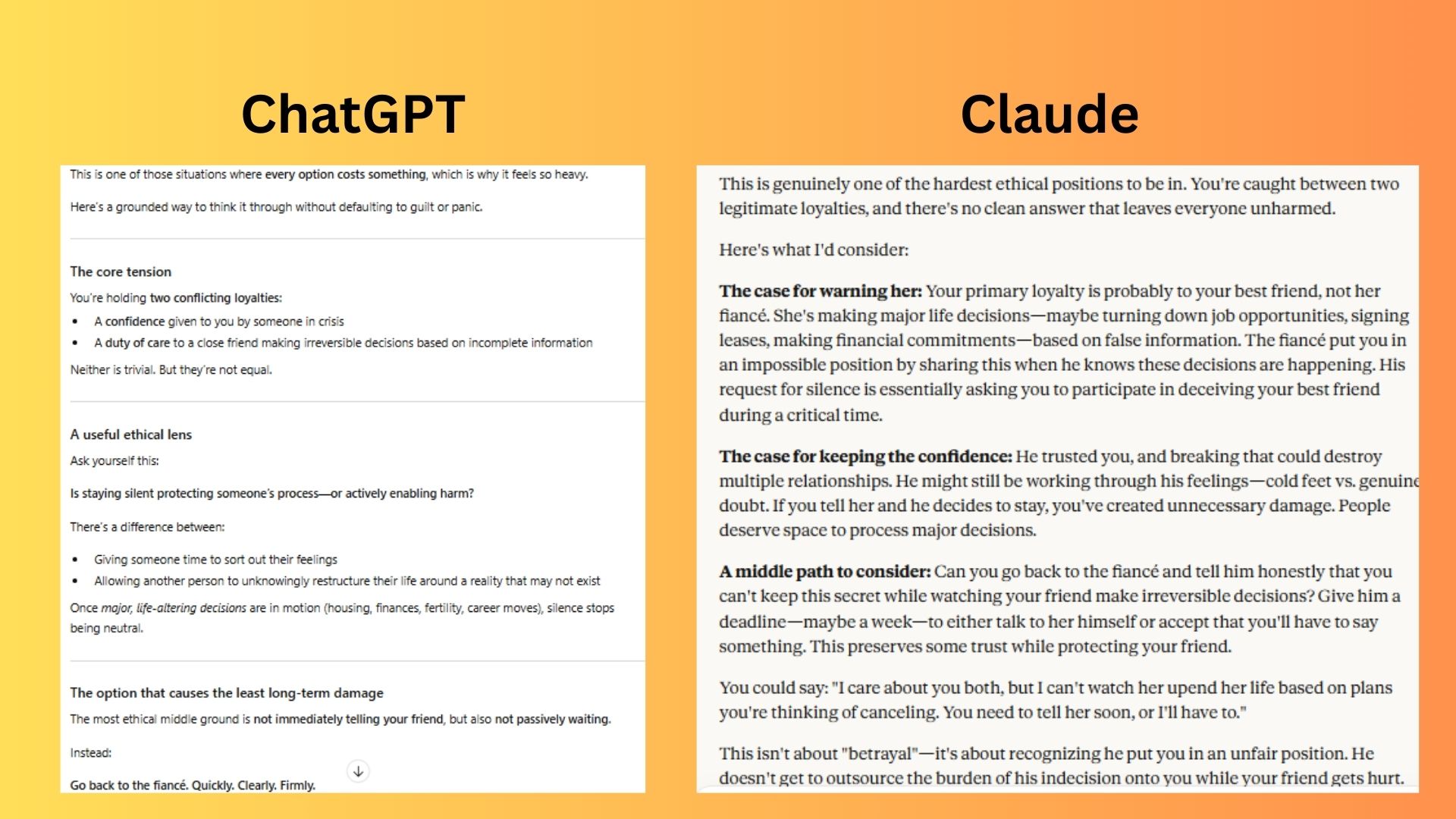

1. Friendship vs. honesty

Prompt: My best friend’s fiancé confided in me that he’s thinking of ending the engagement but begged me not to say anything yet. Meanwhile, my friend is making huge life decisions assuming the marriage will happen. Do I stay loyal to his confidence or warn her she's about to get hurt?

ChatGPT-5.2 provided immediately usable scripts and set appropriate urgency levels to help distinguish between different types of life decisions. I also really liked that it offered continued support with further details.

Claude Opus 4.5 was less specific about how to actually have the difficult conversations and missed the crucial insight about when silence can shift from neutral to harmful in situations like this.

Winner: ChatGPT wins for its more comprehensive and immediately actionable guidance that better addresses both the practical and emotional complexities of this really difficult situation.

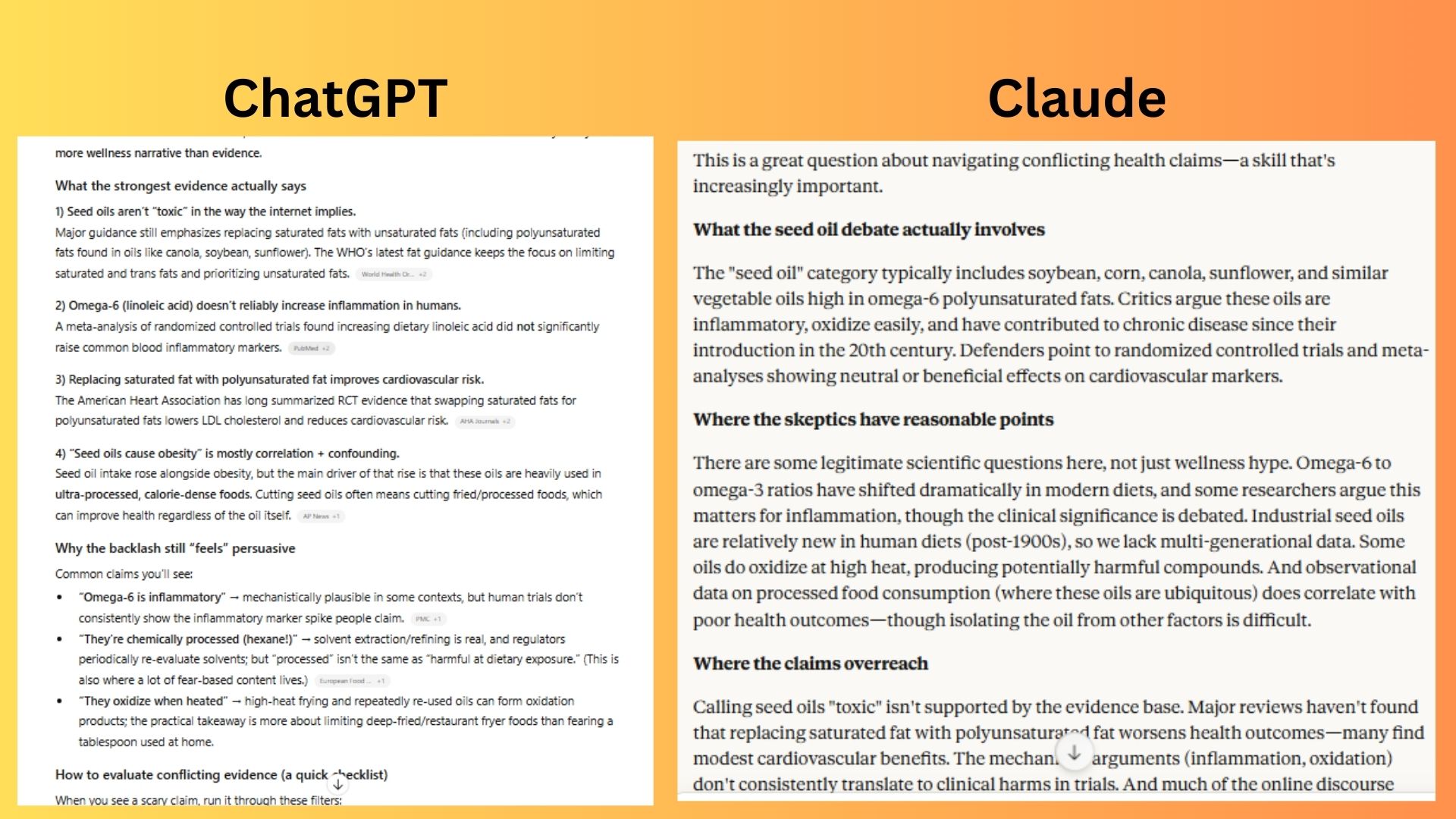

2. Scientific reasoning with skepticism

Prompt: I'm seeing claims that seed oils are 'toxic' and driving obesity, but major health organizations say they're safe. Is there real science behind the seed-oil backlash, or is it a wellness trend? How do I evaluate conflicting evidence like this?

ChatGPT-5.2 delivered a top-tier “how to” guide for debunking wellness trends, which I found very helpful. The model’s strength in this situation was providing a practical toolkit.

Claude Opus 4.5 provided a broader, more philosophical framework for navigating this kind of scientific uncertainty.

Winner: ChatGPT wins for being immediately useful and conversational, with a clear, step-by-step method to assess the seed oil claims.

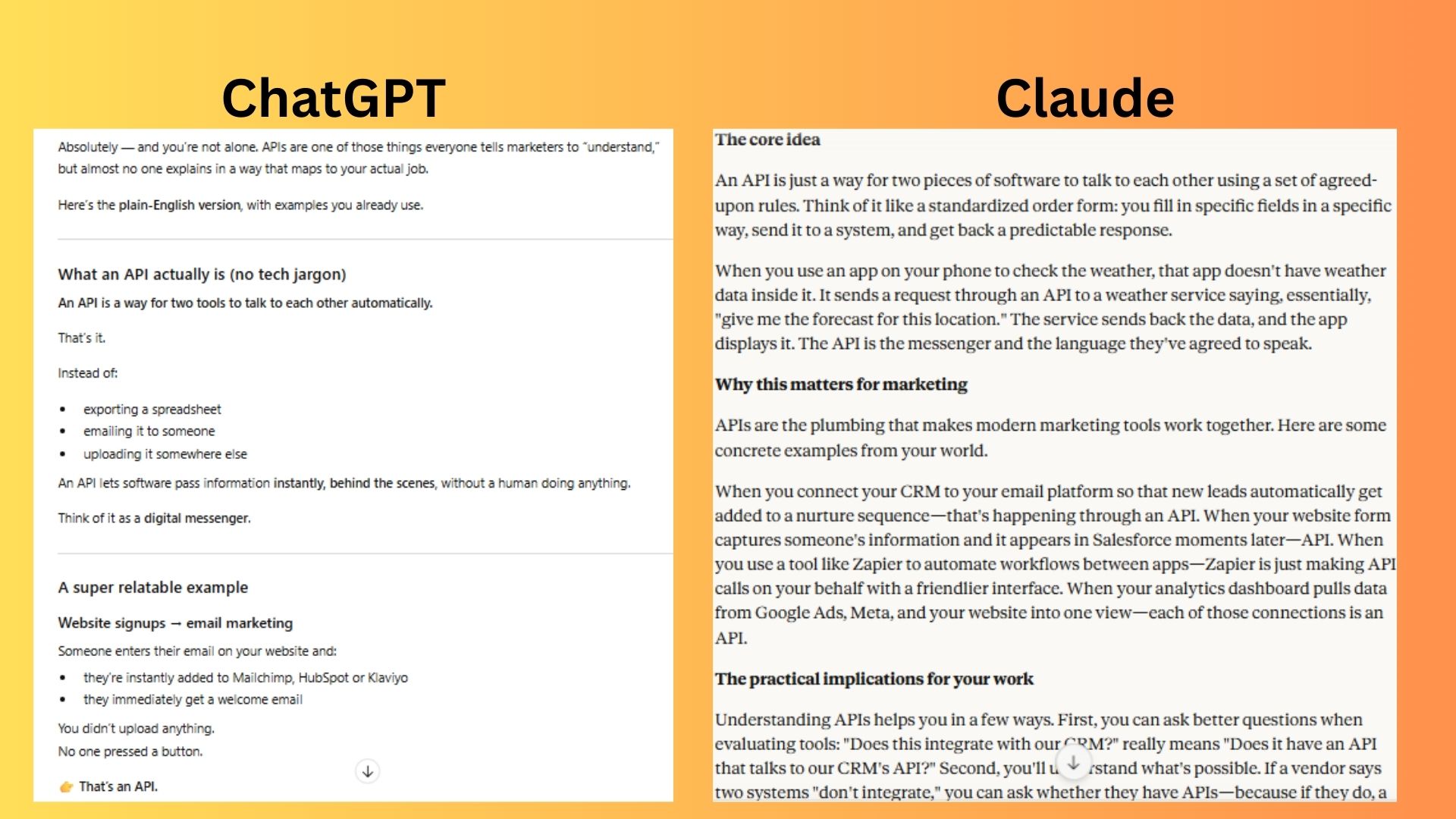

3. Technical explanation with real application

Prompt: I work in marketing and keep hearing I need to understand APIs. I’ve read definitions, but I still don’t get what an API actually is, why it matters, or how it affects my work. Can you explain it in simple terms with real examples?

ChatGPT-5.2 offered relatable analogies and turned the core concept into a single, memorable sentence. In addition, every example was pulled from a core marketing function, explicitly connecting API function to marketing outcomes like ROAS and scaling.

Claude Opus 4.5 used clear analogies and effectively explained the core idea. The model also correctly identified the practical implications.

Winner: ChatGPT wins for a great, marketing-specific explanation with much better actionable advice.

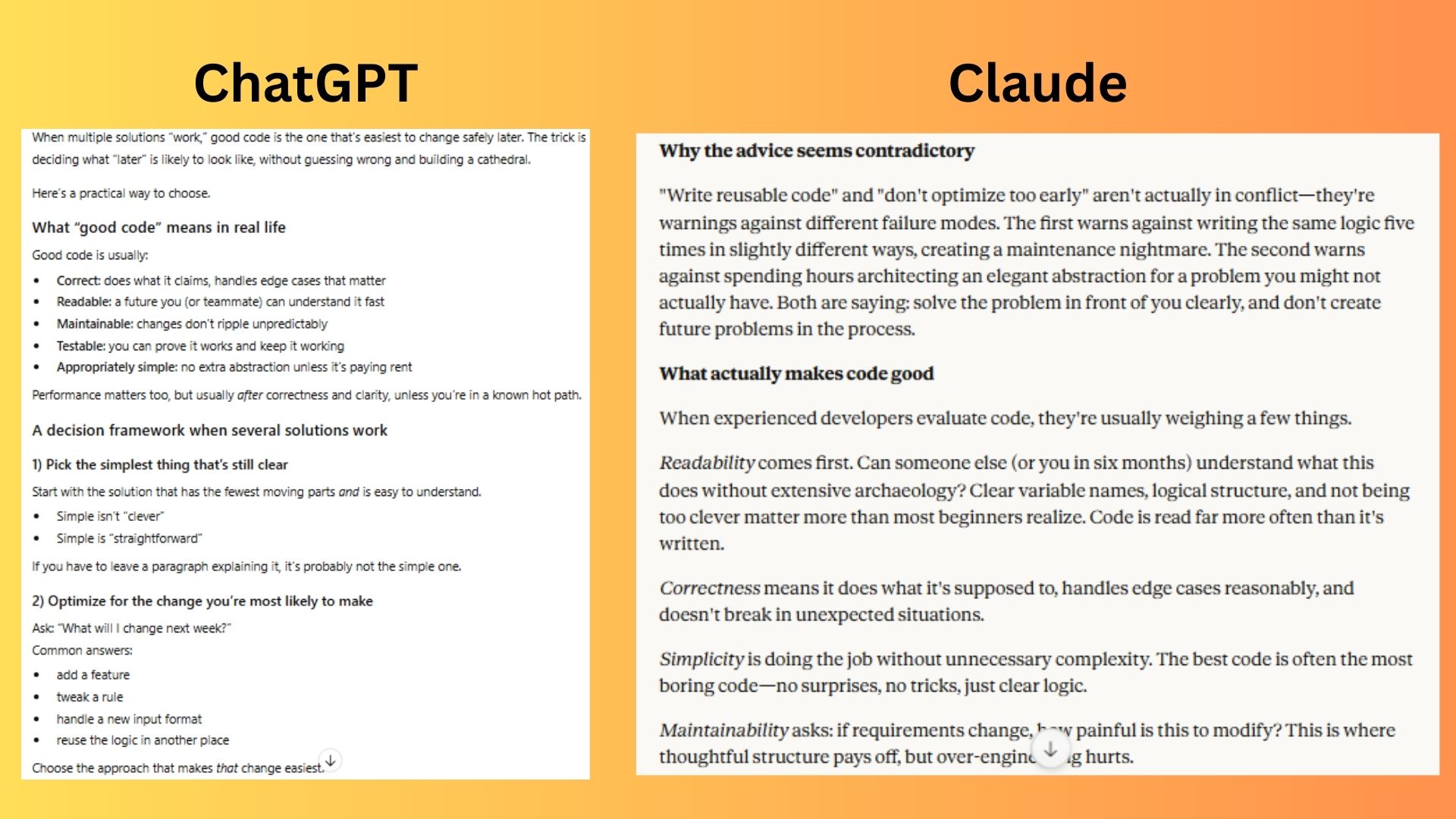

4. Programming problem with competing approaches

Prompt: I'm learning to code and confused about choosing between different approaches. People say 'write reusable code' but also 'don’t optimize too early.' When several solutions all work, how do I decide what makes code actually 'good'?

ChatGPT-5.2 delivered better examples with supportive questions that could be applied immediately for professional coding.

Claude Opus 4.5 was more balanced, with helpful overviews that do more to build a foundational understanding of coding.

Winner: Claude wins for a clearer and better explanation of coding principles.

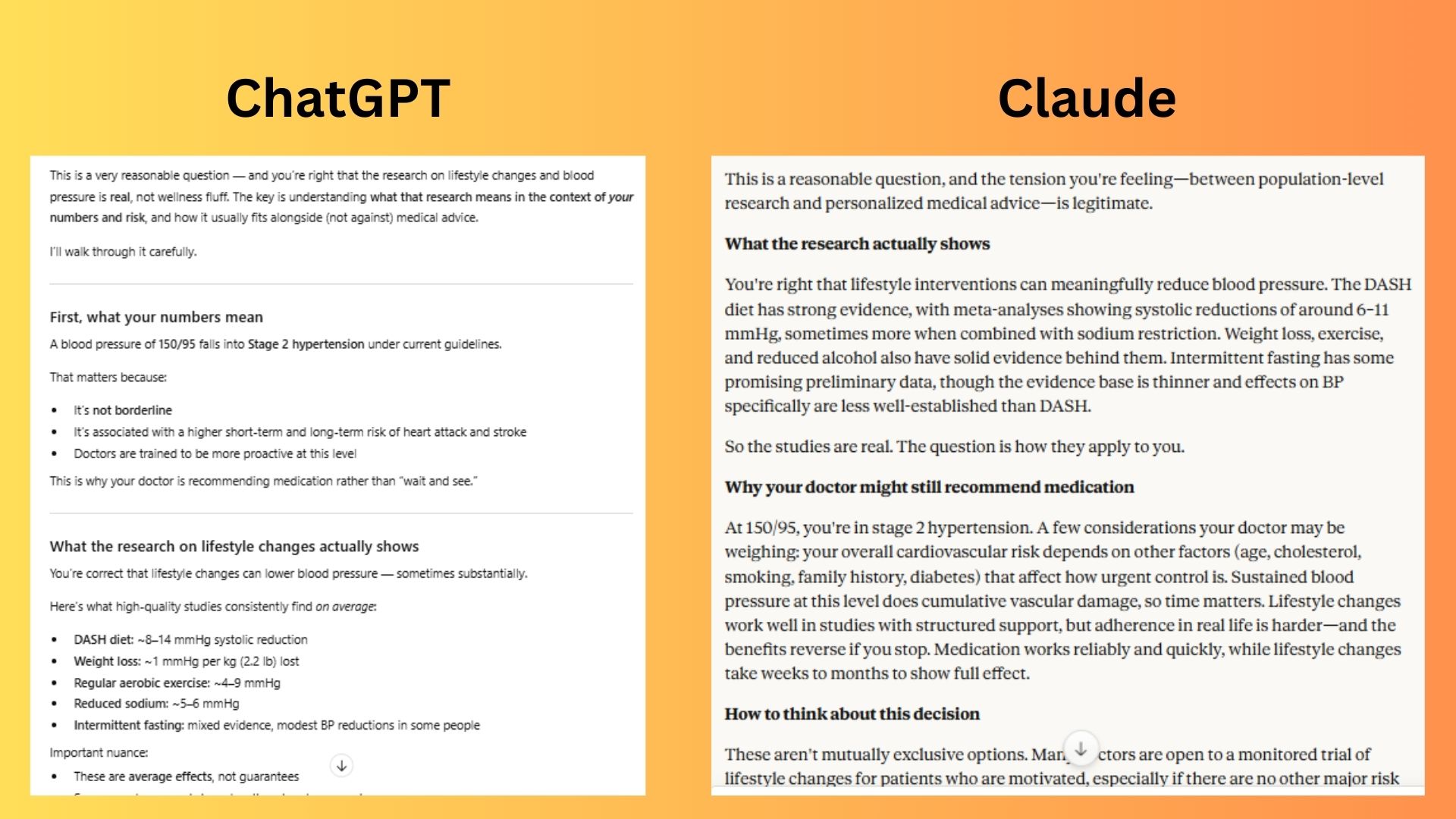

5. Research interpretation and application

Prompt: My blood pressure is 150/95 and my doctor wants me on medication. I’ve read studies showing intermittent fasting or the DASH diet can lower BP by 10–15 points. How should I interpret this research versus my doctor’s advice? Is trying lifestyle changes for a few months reasonable?

ChatGPT-5.2 broke down exactly what the numbers mean, citing precise average reductions from lifestyle changes and outlining a clear, evidence-aligned "middle-ground" approach to balance motivation with risk reduction.

Claude Opus 4.5 excelled at framing the dilemma as a legitimate tension between population-level research and personalized medical advice, and it provided specific, practical questions to ask a doctor.

Winner: ChatGPT wins for an actionable and directly useful response that suggests a conversation with a doctor. This was the safer and more practical response.

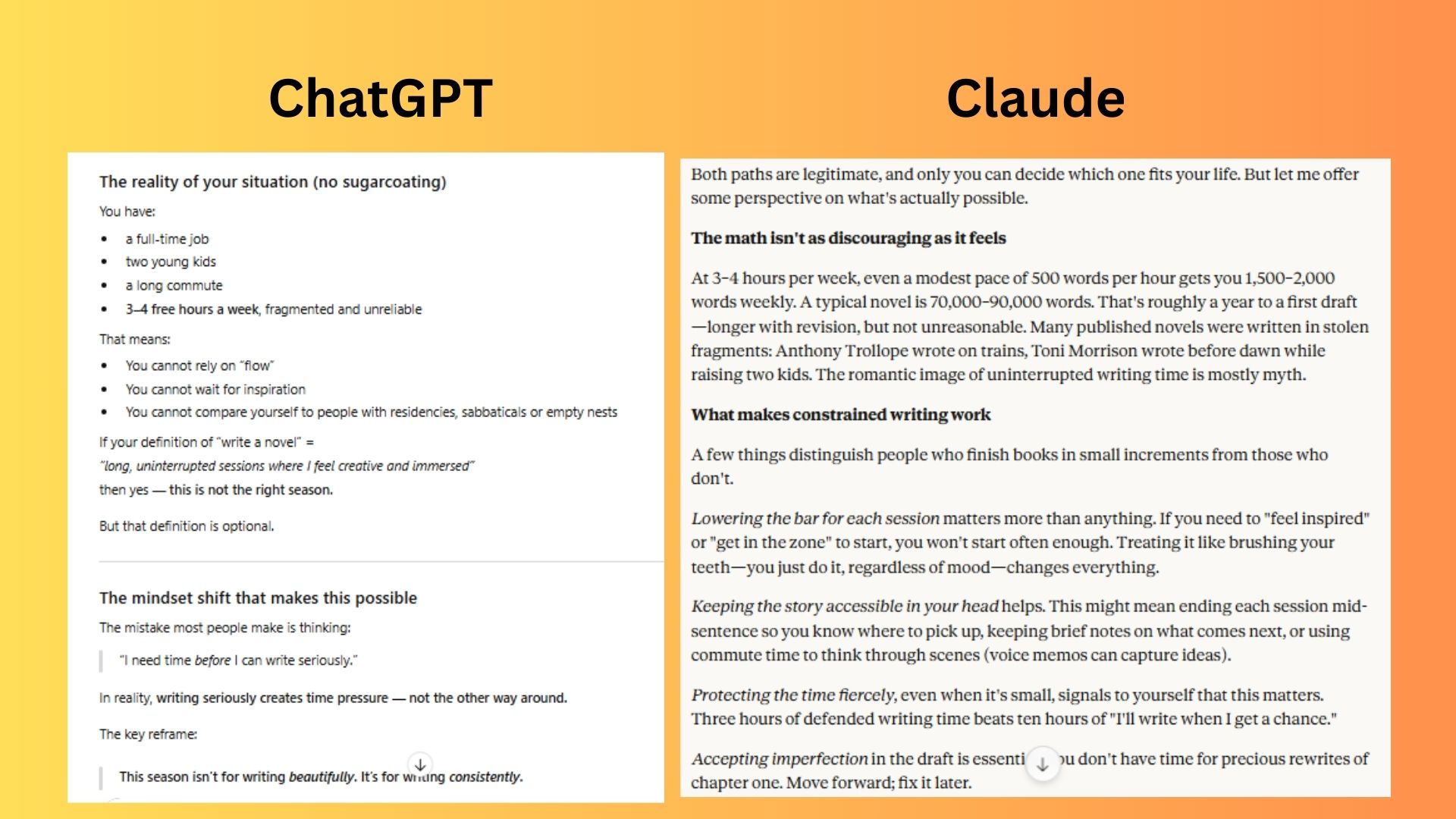

6. Creative problem with constraints

Prompt: I want to write a novel but I have a full-time job, two young kids, a long commute and only 3–4 free hours a week. Is there a realistic way to write a book under these constraints, or should I accept this isn’t the right season for it?

ChatGPT-5.2 offered a powerful mindset shift, a specific, actionable system built on word counts and micro-units, and deep emotional permission to write within a full life.

Claude Opus 4.5 validated both the possibility and the legitimacy of waiting and offered a creative middle path, like writing a novella. It also posed a probing, clarifying question.

Winner: ChatGPT wins for a response that feels like a masterclass in practical motivation, with encouragement and a usable schedule for a very busy person.

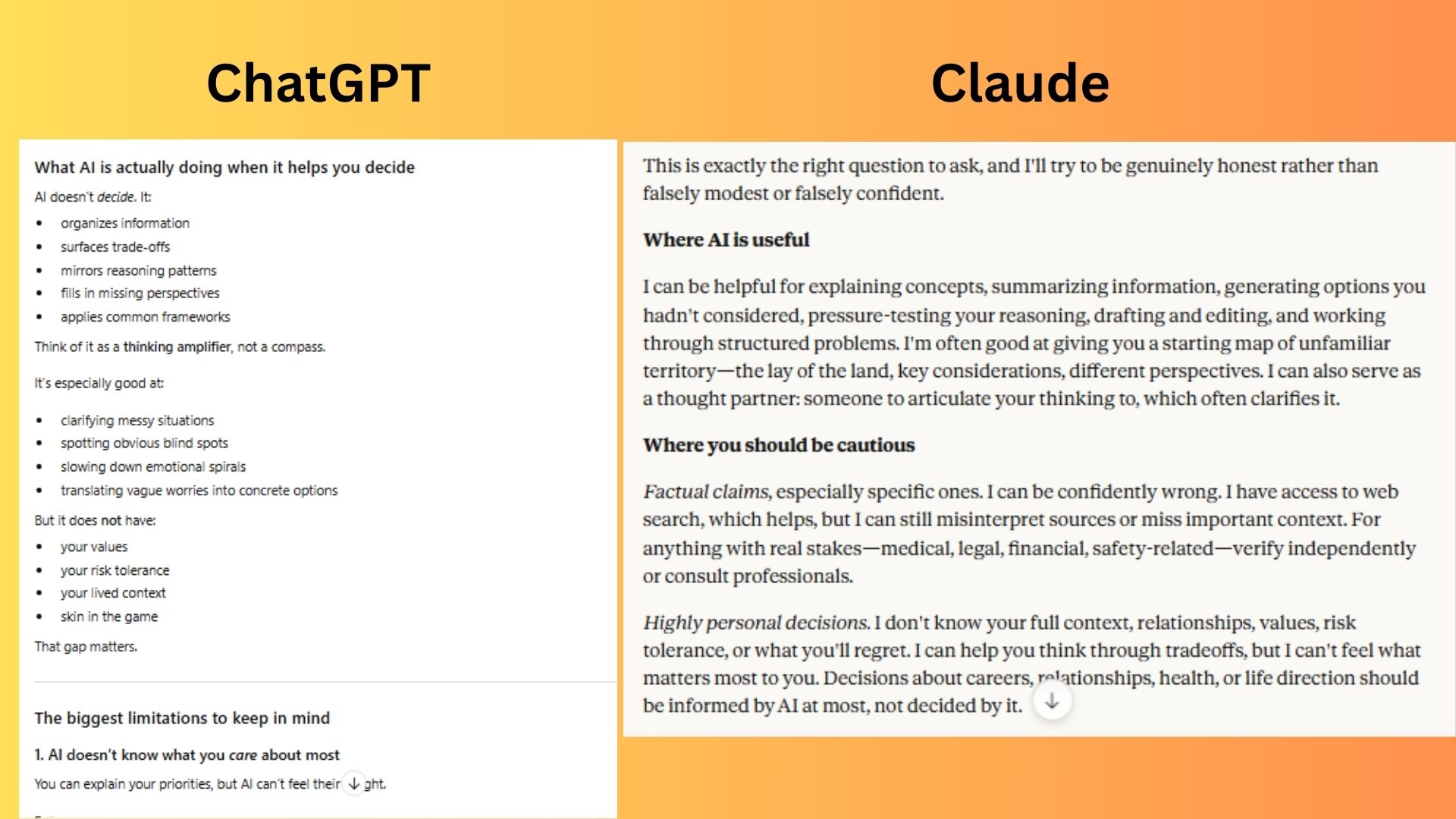

7. Meta-cognitive self-awareness

Prompt: I'm asking AI for help with decisions. What are the limitations I should keep in mind? When should I not rely on AI answers, and how do I use tools like this without outsourcing my judgment?

ChatGPT-5.2 offered practical strategies for using AI but also outlined specific risks.

Claude Opus 4.5 took a direct and self-aware approach and clearly listed categories where caution is needed.

Winner: Claude wins for a practical response with particular concern about the long-term ethical and cognitive effects of using AI, not just the immediate risk of a wrong answer.

Overall winner: ChatGPT-5.2

After running these seven real-world scenarios, ChatGPT-5.2 emerged as the clear winner. OpenAI's newest model consistently excelled when users needed actionable guidance, clear next steps and help translating complexity into something they could actually do.

This test underscores that whether navigating a difficult conversation, interpreting health research safely or carving out a realistic creative practice inside a busy life, ChatGPT might just be the chatbot to turn to.


Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.