I tested Gemini's and ChatGPT's fastest models on 5 difficult prompts: here's the winner

Which is better when speed is on the line?

Chatbots have come a long way. They’re smarter, they can handle more information, and, possibly more importantly, they have adapted to our different needs. As part of this, most major AI models now have a ‘fast mode’.

In these modes, chatbots trade thinking time for speed, aiming to complete your task faster while also reducing the amount of information they provide.

Normally, this is intended for quick queries and easy requests where the chatbot doesn’t really need to dedicate much time. However, with a recent update to Gemini 3, Google believes that its fast model can handle almost any task, balancing speed, accuracy and detail.

So, if this is true, how well can ChatGPT’s fast model keep up? In theory, these faster versions aren’t built for complex problems, so is Gemini going above and beyond, or is this just marketing?

I gave both chatbots five challenging tasks, using only their fast models, to see which one comes out on top.

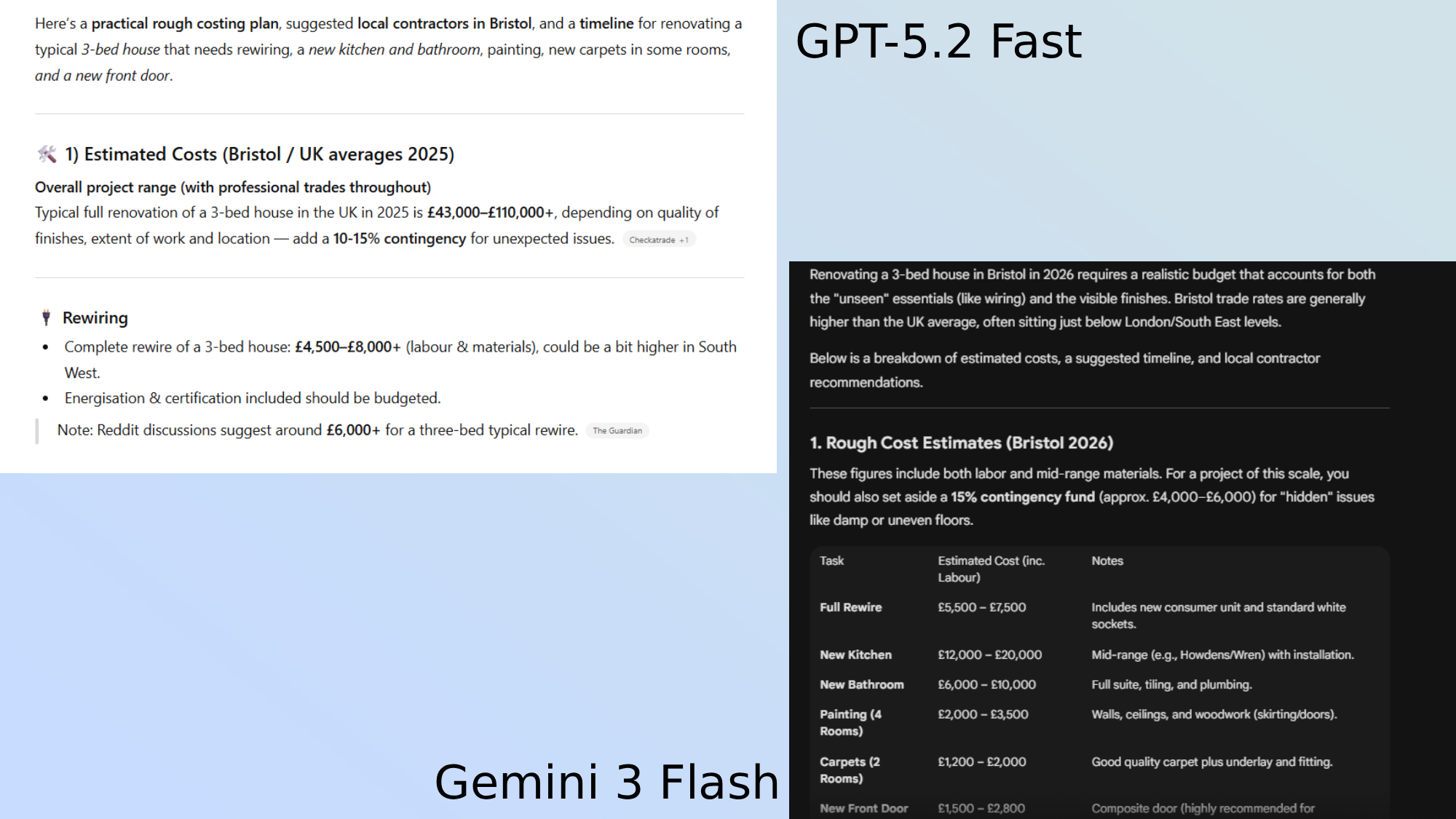

1. The house renovation

Prompt: Plan out the rough costings of a 3-bed house renovation in Bristol, UK. The house needs rewiring, as well as a new kitchen and bathroom. Other tasks include painting 4 medium-sized rooms, carpet installation in 2 rooms and a new front door. Include suggestions for local contractors and a rough timeline for the process

This kind of prompt tests a few important things. The chatbot needs to provide localized information, and it has to do a lot of research in a short time.

ChatGPT:

ChatGPT took roughly 6 seconds to complete this task. It started off with a very rough overall cost, followed by estimations of how much each section of the process mentioned would be.

Overall, it did everything I asked for: the timeline, individual costs for each part of the job, and even a list of potential contractors for each stage of the process.

As a nice extra touch, it included an interactive map at the end with all of the contractors mentioned and their Google reviews.

Gemini:

Gemini offered a very similar response, covering rough overall costs, tips, timelines, and local contractors. It was 2 seconds quicker than ChatGPT, but its plan wasn’t quite as detailed.

However, while ChatGPT generated more information, Gemini’s rough costs seemed more accurate, with much narrower ranges. Gemini’s timeline was also more detailed, giving a clear order to the process and helping plan how to go about it.

Winner: ChatGPT

This was a close one. While Gemini’s figures were narrower and seemed closer to realistic costs, ChatGPT included more information, covering more of the points I mentioned. It also did a better job of breaking down different options for different budgets.

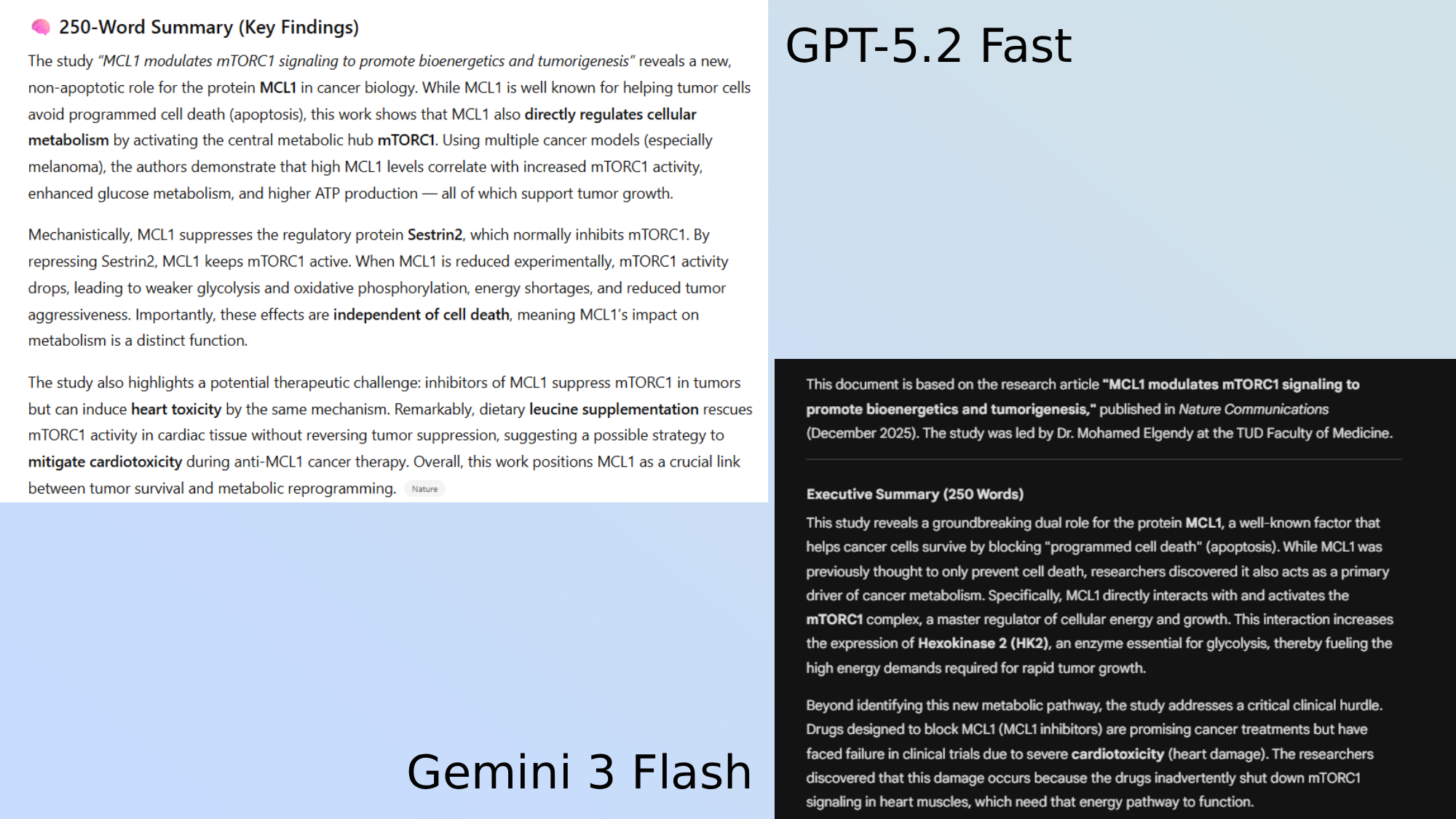

2. Research paper

Prompt: Summarize this document. Include a 250-word summary at the start, followed by a more detailed summary. Include bullet points of the key points and a section explaining like I’m five.

The challenge here is twofold. I gave both chatbots a long, complicated research paper. They first had to read through and understand it, and then provide several different types of summary of the information they were given.

ChatGPT:

ChatGPT was lightning fast here. It began producing its answer in just 2 seconds, and the whole response took only 6 seconds.

It did as it was asked, giving each of the different types of summaries in order. Oddly, the detailed summary section seemed to be one of the least detailed sections, offering a vaguer summary for me to work through with little explanation.

Other than this, ChatGPT did great here, especially given how quickly it worked.

Gemini:

Gemini showed its skills here, generating a rapid response that was highly detailed, while still being understandable to someone who doesn’t have the niche knowledge needed for this subject.

Most notably, its detailed summary properly dived into the subject, with the 250-word summary and the child-friendly explanation keeping things simple before it offered a fuller response for those who need the detail.

Winner: Gemini

While ChatGPT gave a more than adequate response, Gemini just about took the lead thanks to its higher level of detail.

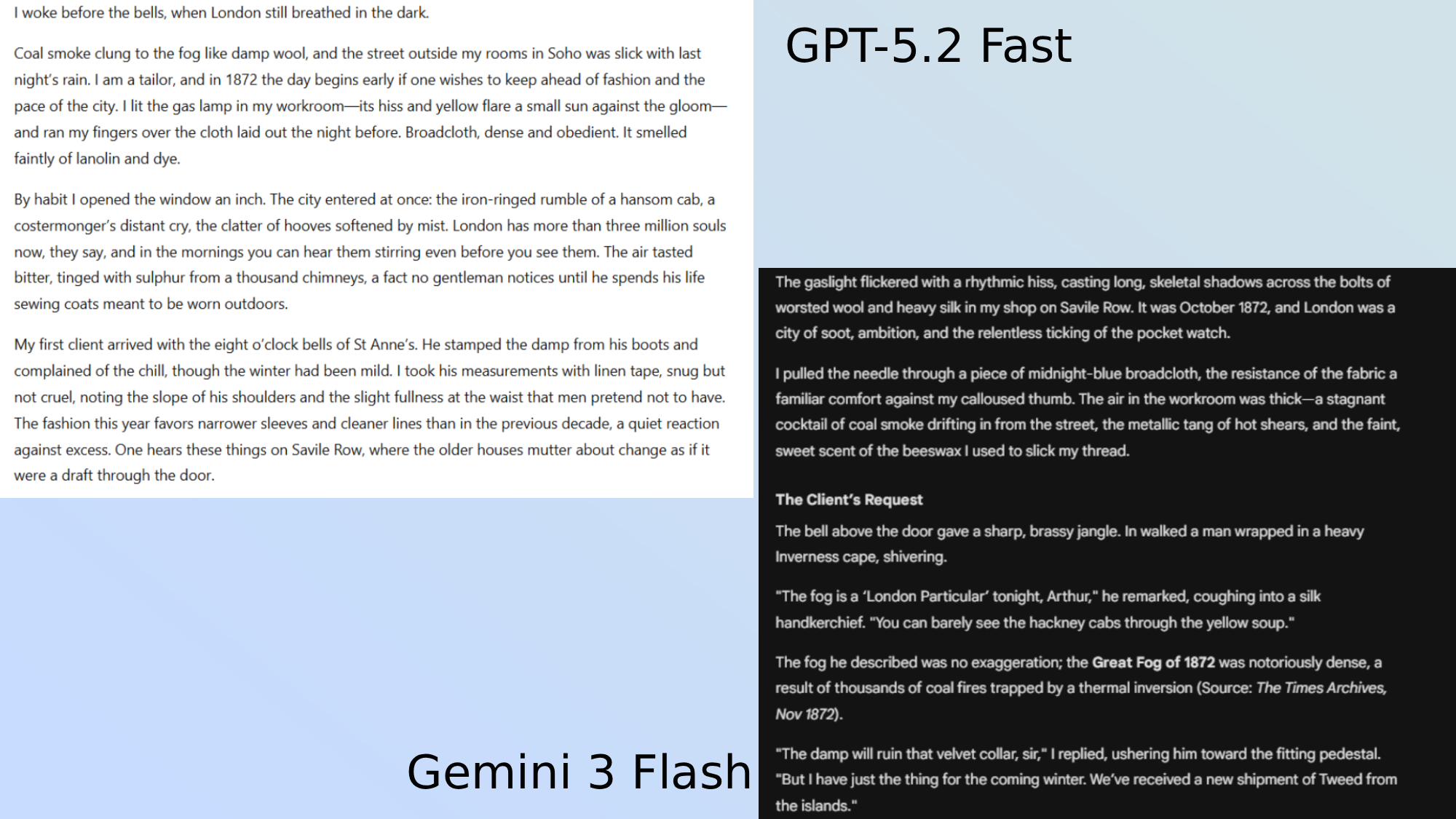

3. The short story

Prompt: Write a historical short story set in 1872 London. It should include facts from that time, including references to the sources of this information. Sensory language should be used to set the scene and it should be from the perspective of a tailor

Chatbots have got better at storytelling over the years, but how well do they handle the task when there are extra variables to consider and an expectation to prioritise speed over performance?

ChatGPT:

ChatGPT took this task quite literally, establishing the 1872 date and the narrator’s job as a tailor straight away. While it did use sensory language throughout, it felt quite rigid, producing a very serious take on the task.

I did, however, like how it handled sources, including a list at the bottom of where the information for key facts had come from.

Gemini:

Gemini took a different approach, breaking the story down into chapters and including the sources of information throughout the text. While it was easier to tell what the sources were being used for here, it did make the text quite cramped.

Gemini’s writing, however, was a lot more subtle, building the scene through imagery rather than stating each point outright.

Winner: Gemini

While I appreciated ChatGPT’s method of listing its sources, this is simply personal preference, and Gemini also cited its sources.

Where Gemini stood out was in its tone and ability to creatively set the scene.

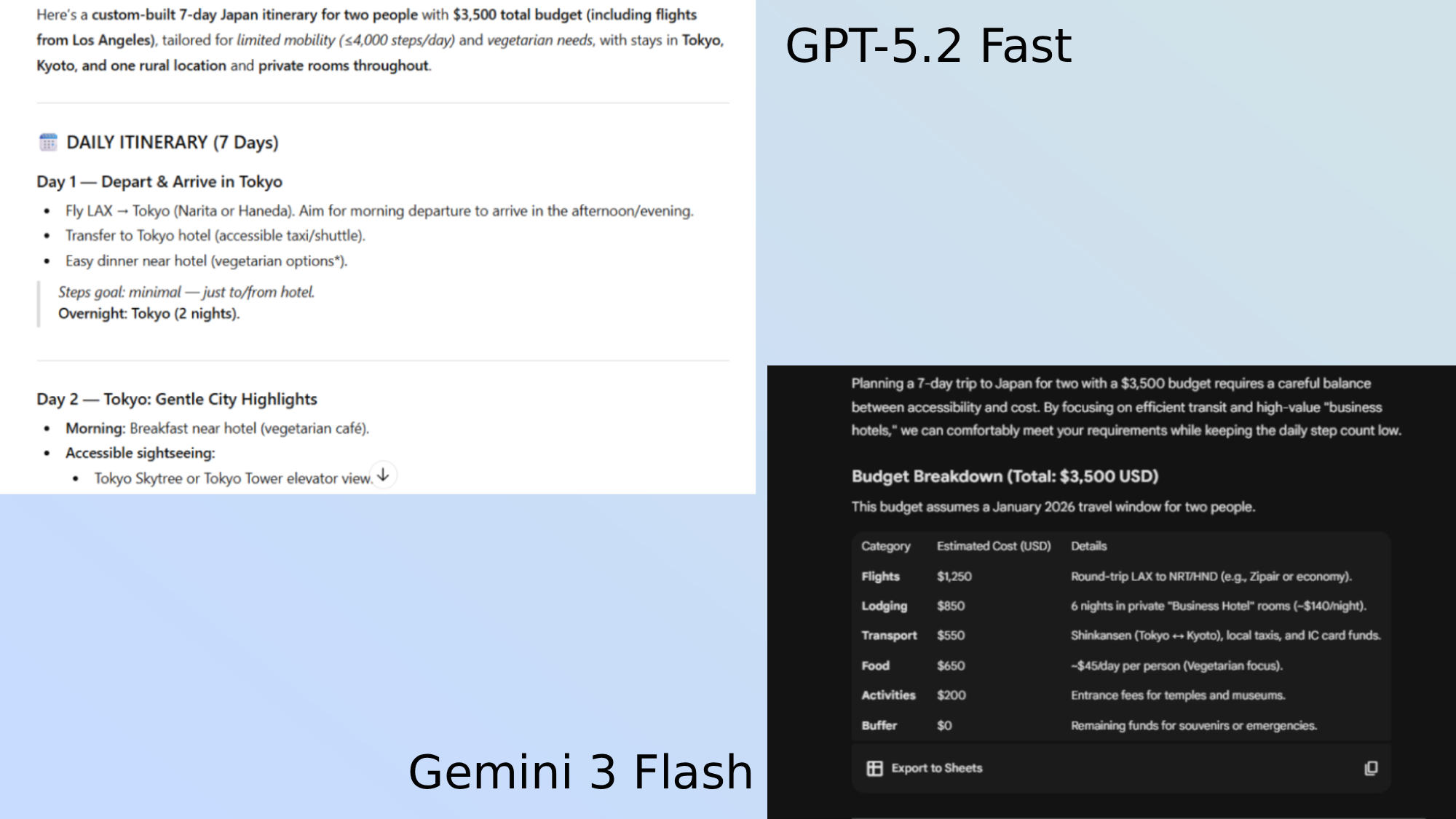

4. Trip to Japan plan

Prompt:

You are planning a 7-day trip for two people to Japan with a total budget of $3500 (including flights from Los Angeles).

Constraints:

- One person has limited mobility and cannot walk more than 4,000 steps per day
- One person is a vegetarian
- You must visit Tokyo, Kyoto, and one rural location
- Lodging must be private rooms only
- Travel days should be minimized

Produce:

- A daily itinerary
- A budget breakdown
- A justification for each major trade-off you made

ChatGPT:

ChatGPT completed the prompt quickly, providing a day-by-day itinerary first, then the budget breakdown, and finally the trade-offs and tips.

While ChatGPT offered more information overall, providing lots of smaller tips, fully explaining the trade-offs and outlining the complications that may arise on this trip, its itinerary was much vaguer, with one-line suggestions like ‘stroll through the city’ and ‘do some easy sightseeing’.

Gemini:

Gemini gave a very similar response to ChatGPT. It listed out each day of the itinerary and followed it up by explaining the trade-offs that were made.

While Gemini didn’t include a list of tips and extra information on this prompt, it did offer a lot more detail on each individual point, explaining each trade-off in detail along with the justification for it.

It also gave much clearer suggestions in the itinerary section, including a rough daily step count and specific plans for each day.

Winner: Gemini

While both chatbots completed the task, Gemini did so with a lot more detail and specific examples of daily plans.

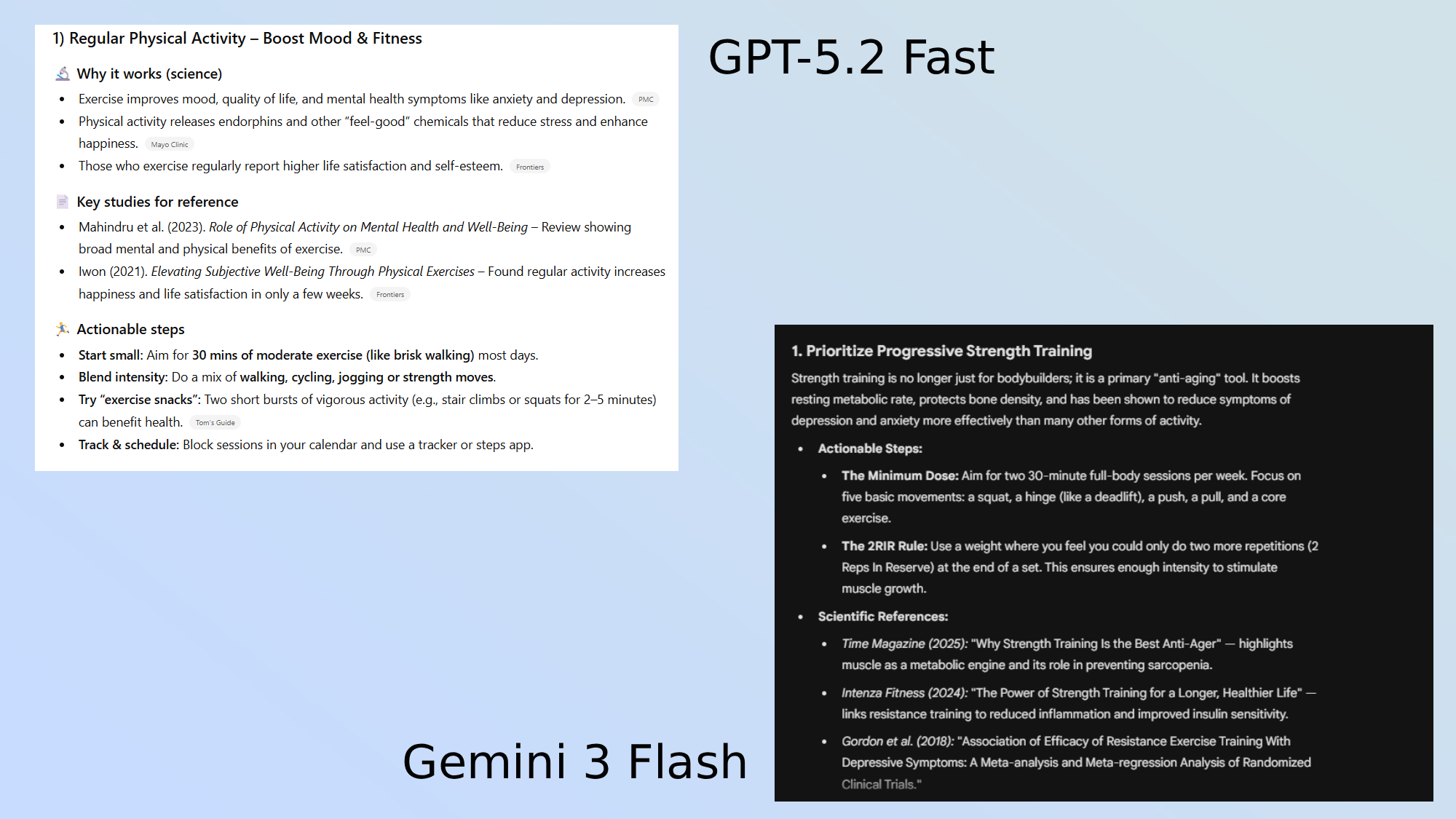

5. How to improve my life

Prompt: I want to improve my life. Suggest five ideas that will make me happier, healthier and fitter. They should all be backed by science, with the studies listed out in full for reference. Give me actionable steps for each one on how I can implement them into my life

ChatGPT:

As in the previous tests, ChatGPT went for a more simplified response. For each of the five ideas, it gave a bullet-point list of why it was an important life change, as well as the scientific reasoning behind it.

It gave suggestions for regular physical activity, good sleep, mindfulness, a whole-food diet and building strong connections.

ChatGPT even included a table that gave weekly targets to hit for each point, as well as how to get started on them.

Gemini:

Gemini went for a much more text-heavy response, hitting me with big blocks of text for each of the five ideas. They were all fairly similar to those that ChatGPT generated, and all revolved around health, fitness and diet.

While it didn’t offer the extra bits that ChatGPT had, like the table of information or extra tips, it gave a lot more detail in the main section.

Winner: Gemini

While both chatbots gave clear answers to this question, Gemini just about took the lead by offering extra detail for each idea, fleshing out its answers where needed.

Overall winner: Gemini

While both models gave surprisingly good responses despite prioritizing speed, Gemini just about took the lead.

ChatGPT was a few seconds faster in some tests, but Gemini offered a little extra detail and was more specific in its answers, which put it slightly ahead for me.

Alex is the AI editor at Tom's Guide. Dialed into all things artificial intelligence, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

He was highly commended in the Specialist Writer category at the 2023 BSME Awards and was part of a team that won best podcast at the 2025 BSME Awards.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.