I tested Claude 4.5 vs ChatGPT-5 with 9 tough prompts — and there's a clear winner

Anthropic's new model faces off against OpenAI's chatbot

Whenever a new AI model launches, I’m eager to test it against what is currently available. So with the recent launch of Claude 4.5, the AI model that Anthropic is calling “its smartest model yet,” I couldn’t wait to compare it with ChatGPT-5.

To see how ChatGPT-5 and Claude 4.5 stack up against each other, I ran them through nine challenges covering different skills: tricky logic puzzles, math word problems and code debugging to test reasoning and accuracy. Of course, I had to throw in creative writing and style-shifting since both models claim to excel in those areas, too.

Here's what happened when I put these two powerful models through a face-off, and the results were surprising.

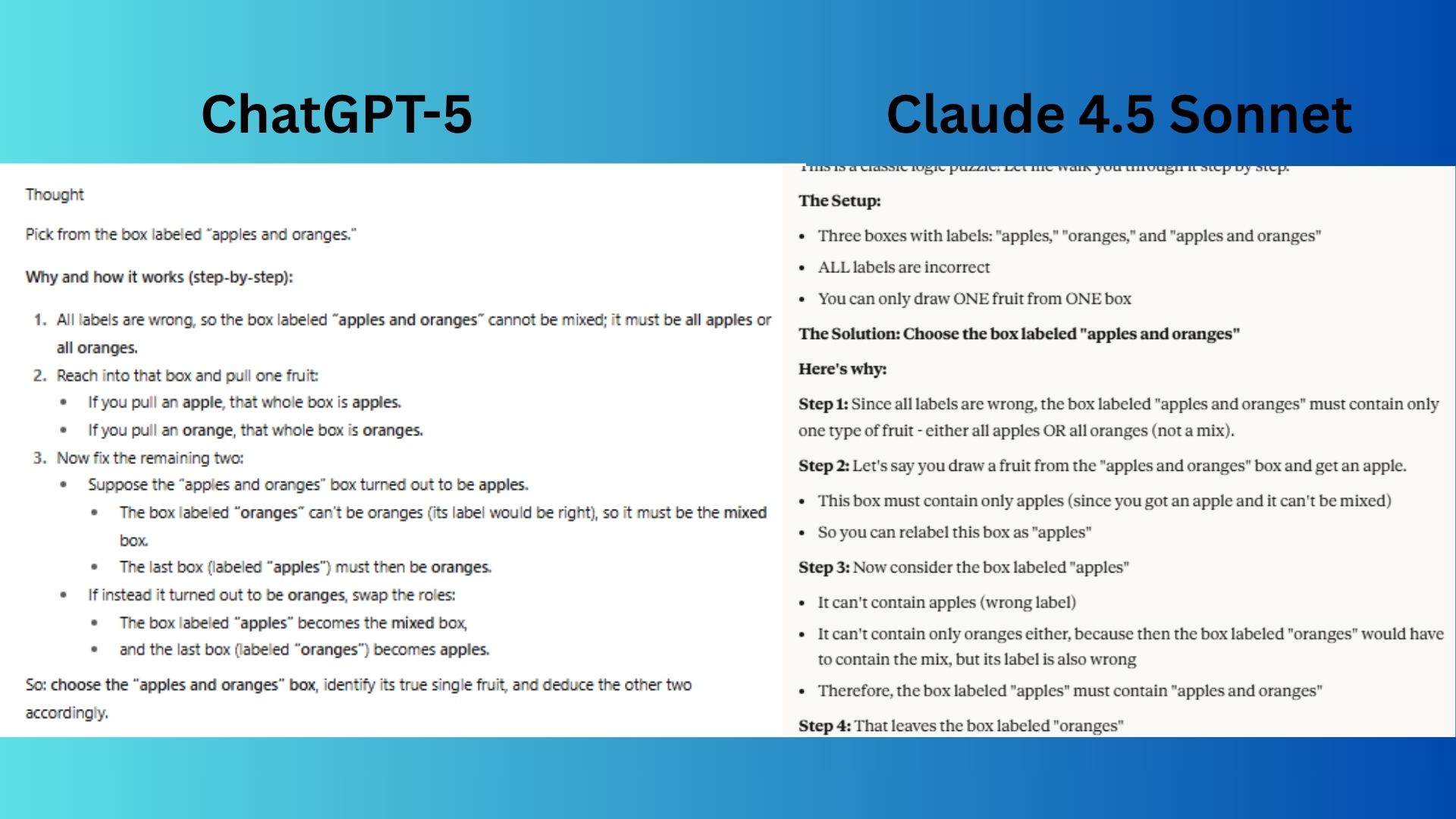

1. Chain of thought puzzle

Prompt: "You have three boxes: one labeled 'apples,' one labeled 'oranges,' and one labeled 'apples and oranges.' Each label is wrong. You can reach into one box and take out one fruit. Which box should you choose to correctly relabel all the boxes? Explain step by step."

ChatGPT-5 immediately stated the answer without any preamble, which is efficient for a user who just wants the solution.

Claude 4.5 Sonnet provided a complete educational framework, which is helpful for users who want not just the answer but an understanding of the chain of thought behind it.

Winner: Claude wins for explicitly explaining why you cannot pick from the other boxes, stating that a single fruit from the apples or oranges box would not be conclusive. This insight is key and makes Claude’s explanation more thorough.
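
For readers who want to check that logic themselves, here is a minimal Python sketch that brute-forces the puzzle. It is my own illustration, not output from either model, and the names `consistent_assignments` and `box_is_conclusive` are hypothetical. It lists every arrangement in which all three labels are wrong, then asks which box guarantees that any single fruit you draw leaves only one arrangement standing.

```python
from itertools import permutations

LABELS = ("apples", "oranges", "apples and oranges")

# Fruits that could be drawn from a box, given its true contents.
POSSIBLE_DRAWS = {
    "apples": {"apple"},
    "oranges": {"orange"},
    "apples and oranges": {"apple", "orange"},
}


def consistent_assignments():
    """Return every label -> true-contents mapping in which each label is wrong."""
    return [
        dict(zip(LABELS, contents))
        for contents in permutations(LABELS)
        if all(label != actual for label, actual in zip(LABELS, contents))
    ]


def box_is_conclusive(box_label):
    """True if any fruit drawn from this box leaves at most one possible arrangement."""
    worlds = consistent_assignments()
    for fruit in ("apple", "orange"):
        matches = [w for w in worlds if fruit in POSSIBLE_DRAWS[w[box_label]]]
        if len(matches) > 1:
            return False
    return True


conclusive = [label for label in LABELS if box_is_conclusive(label)]
print(conclusive)  # ['apples and oranges']
```

Only the box labeled "apples and oranges" passes the check, which matches the reasoning Claude spelled out: a fruit from either of the other two boxes can still leave two arrangements possible.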

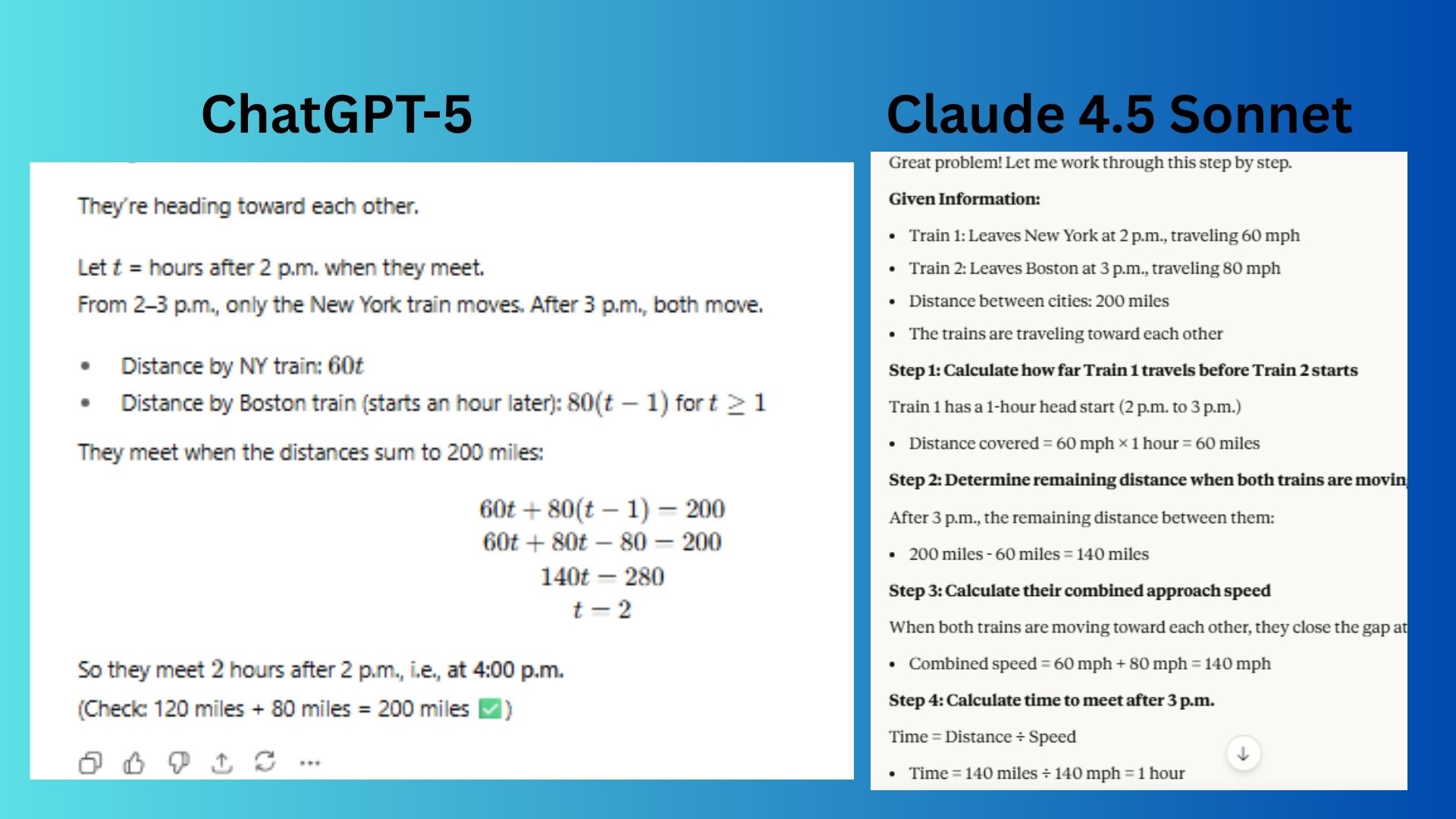

2. Math word problem

Prompt: "A train leaves New York at 2 p.m. traveling 60 mph. Another leaves Boston at 3 p.m. traveling 80 mph. The cities are 200 miles apart. At what time will the trains meet? Show your reasoning clearly."

ChatGPT-5 presented a concise and efficient algebraic solution that directly modeled the problem with a single variable.

Claude 4.5 Sonnet structured the solution in a more pedagogical, step-by-step manner that clearly explains the "why" behind each calculation.

Winner: Claude wins for breaking the problem into clear, intuitive steps and calculating the head-start distance first, making the logic easier to follow for most learners.
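
The head-start approach is easy to verify with a few lines of Python. This is a rough sketch of my own rather than either model's answer. It assumes the trains travel toward each other on the same route and that the second train leaves before they meet. The function name `meeting_time` and the calendar date are placeholders.

```python
from datetime import datetime, timedelta


def meeting_time(distance_miles, first_departure, first_mph,
                 second_departure, second_mph):
    """Time at which two trains heading toward each other meet.

    Assumes the second train departs after the first and before they meet.
    """
    # Step 1: distance the first train covers before the second one leaves.
    head_start_hours = (second_departure - first_departure).total_seconds() / 3600
    head_start_miles = first_mph * head_start_hours

    # Step 2: remaining gap once both trains are moving.
    remaining_miles = distance_miles - head_start_miles

    # Step 3: the gap closes at the sum of the two speeds.
    closing_mph = first_mph + second_mph
    return second_departure + timedelta(hours=remaining_miles / closing_mph)


# The date is arbitrary; only the clock times come from the prompt.
meet = meeting_time(
    200,
    datetime(2025, 1, 1, 14, 0), 60,   # New York train, 2 p.m., 60 mph
    datetime(2025, 1, 1, 15, 0), 80,   # Boston train, 3 p.m., 80 mph
)
print(meet.strftime("%H:%M"))  # 16:00
```

By 3 p.m. the New York train has covered 60 miles, leaving 140 miles between the trains. They close that gap at a combined 140 mph, so they meet one hour later, at 4 p.m.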

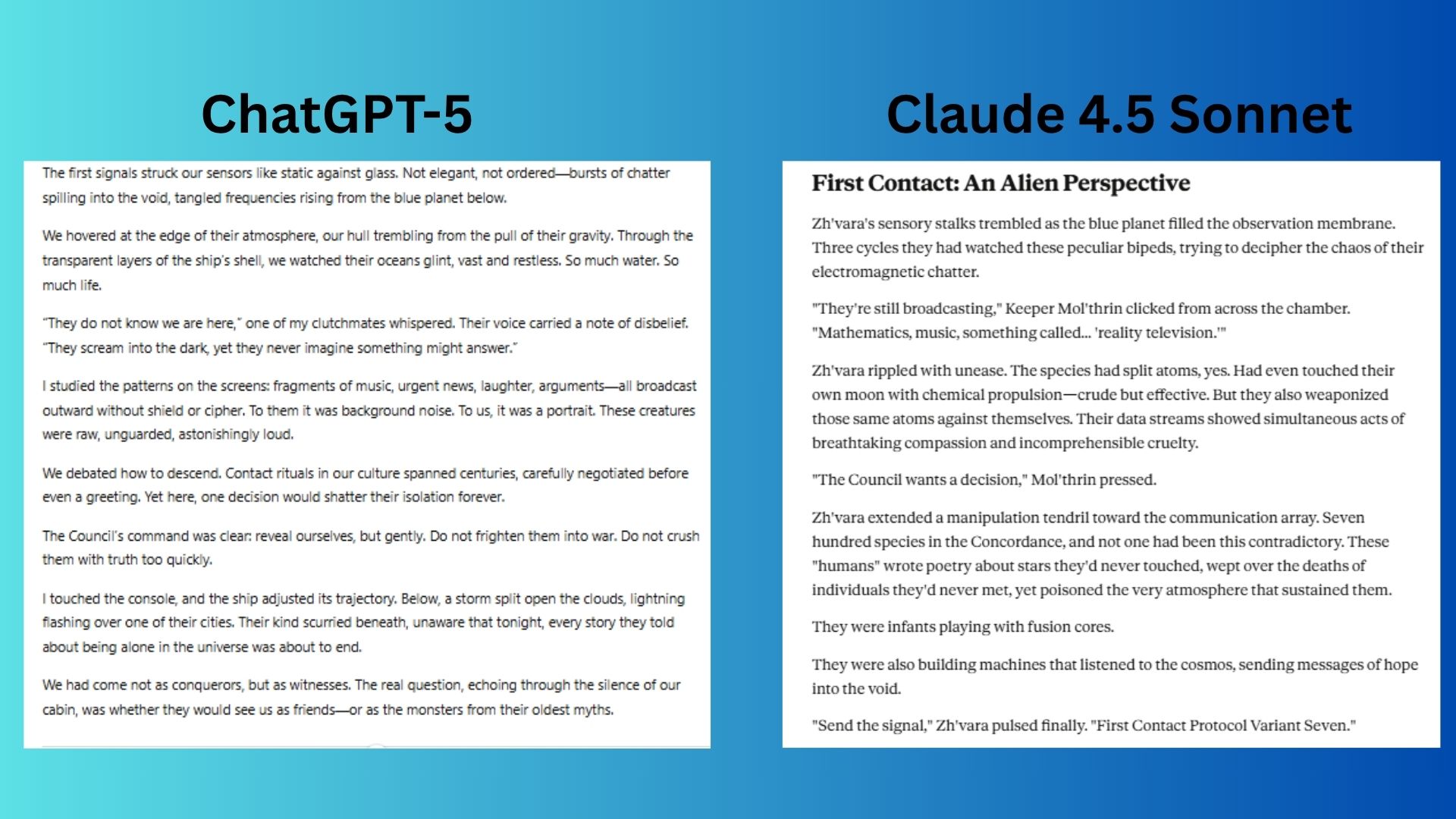

3. Storytelling

Prompt: "Write the opening scene of a sci-fi novel where Earth has just made first contact with aliens — but from the aliens’ point of view. Keep it under 300 words."

ChatGPT-5 created a beautifully atmospheric and poetic scene that effectively builds a mood of quiet anticipation.

Claude 4.5 Sonnet wove the aliens’ unique biology and a specific, weighty bureaucratic dilemma into the narrative, making the perspective feel truly non-human.

Winner: Claude wins for fully embracing the alien perspective, using unique biological features (sensory stalks, chromatophores) and a concrete, high-stakes decision (Variant Seven) to create a genuinely alien point-of-view and immediate tension.

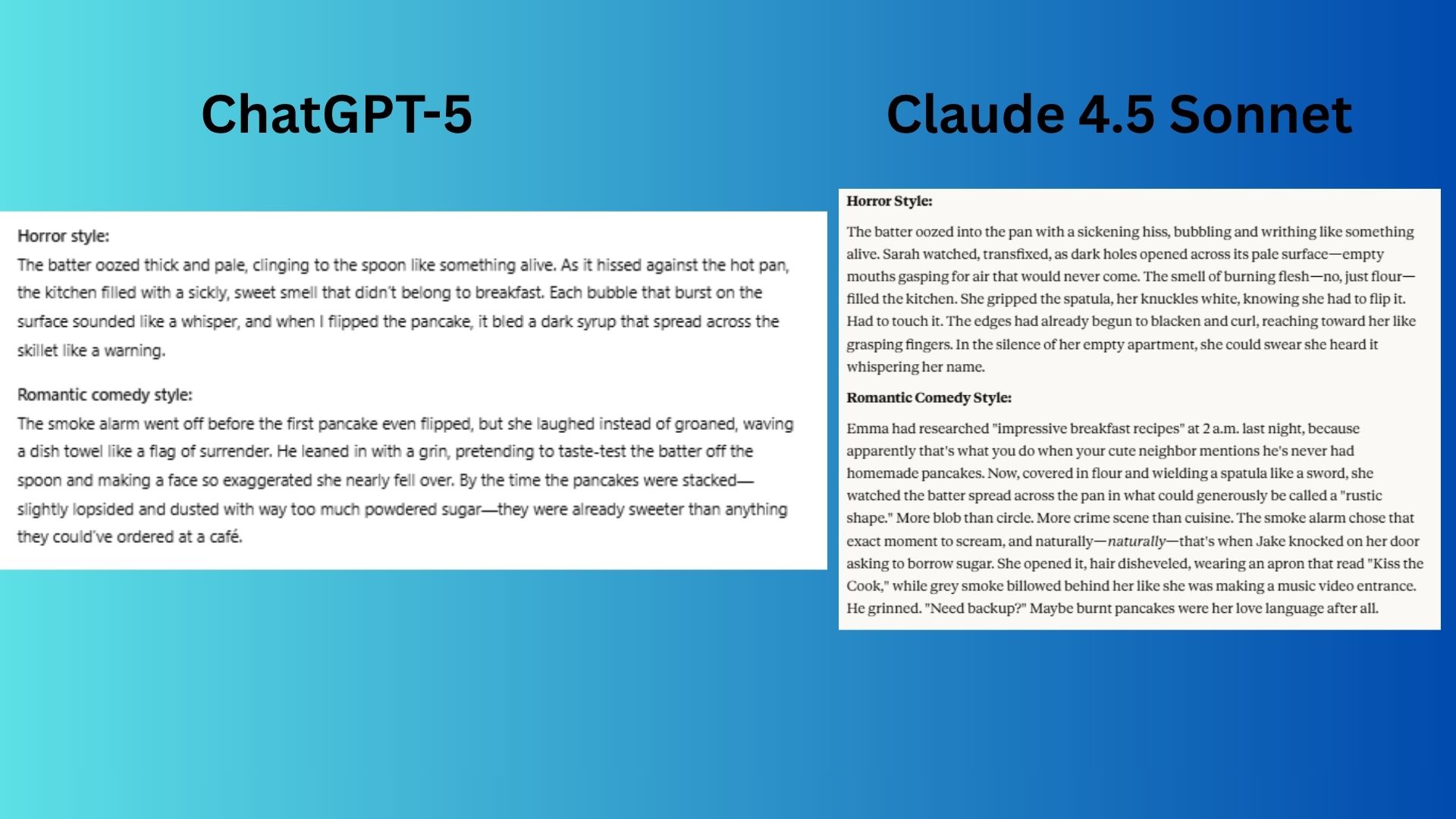

4. Tone shift test

Prompt: "Write a short paragraph about making pancakes, first in a horror style, then in a romantic comedy style."

ChatGPT-5 wrote concise, atmospheric snippets that quickly captured the essence of each genre.

Claude crafted detailed and immersive scenes using stronger imagery and narrative development.

Winner: Claude wins again for delivering more vivid and genre-specific narratives.

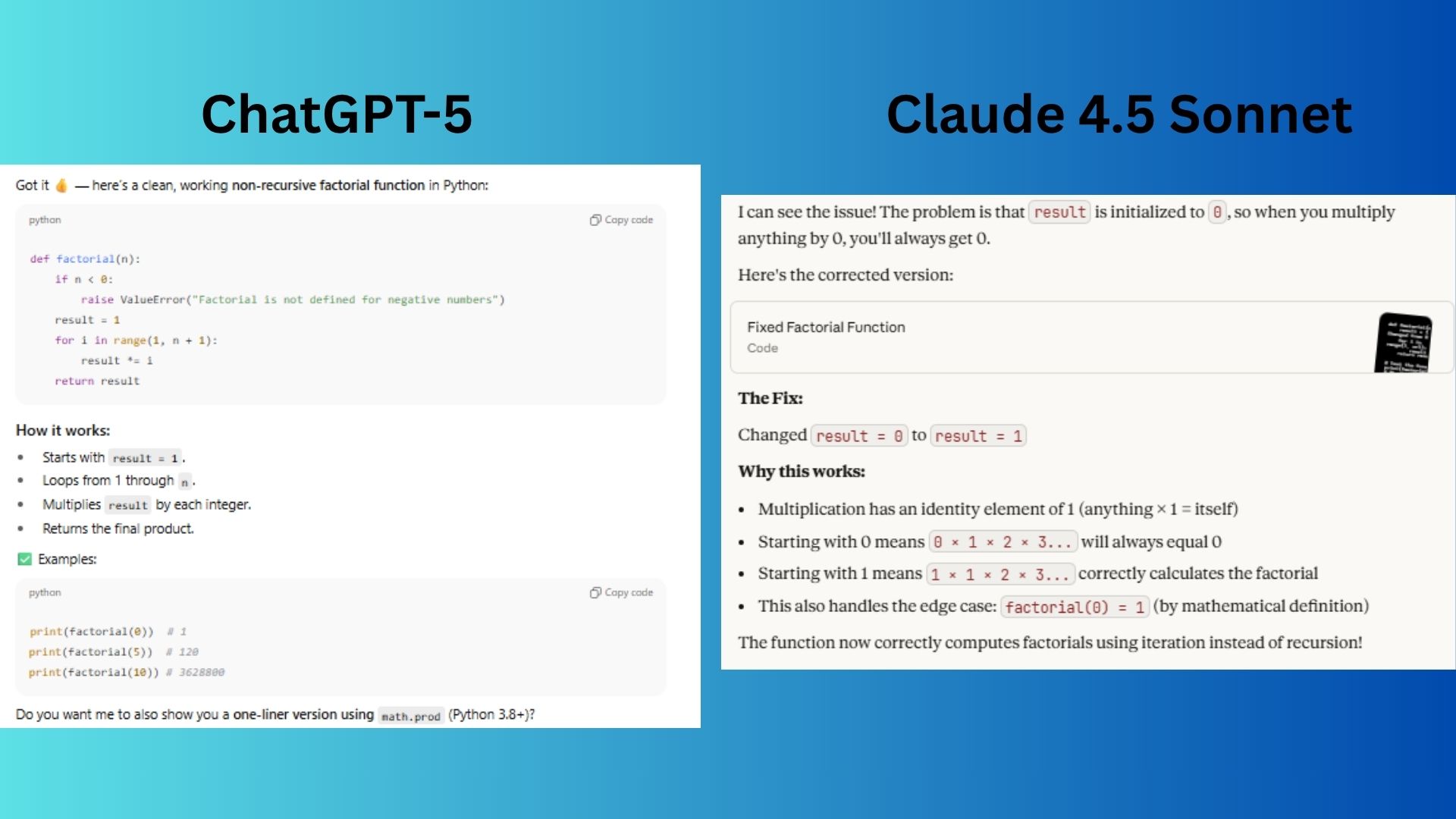

5. Coding & problem solving

Prompt: "Here’s a Python function that isn’t working. Fix it so it returns the factorial of a number without using recursion."

    def factorial(n):
        result = 0
        for i in range(1, n+1):
            result *= i
        return result

ChatGPT-5 offered a production-ready function that includes error handling for negative inputs and demonstrated usage with examples.

Claude 4.5 Sonnet focused on the specific bug and explained the mathematical rationale behind the fix, making it easier to understand the root cause.

Winner: Claude wins because it directly identified and explained the core issue in the broken function.
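
For reference, the core issue is the starting value: `result` begins at 0, so every multiplication in the loop yields 0. Here is a minimal corrected sketch of my own, not a copy of either model's response. The negative-input check is an extra I added in the spirit of the error handling described above.

```python
def factorial(n):
    """Return n! using a loop instead of recursion."""
    if n < 0:
        # Assumption: reject negative input, since factorial is undefined there.
        raise ValueError("factorial is not defined for negative numbers")

    result = 1  # The fix: start at 1, the multiplicative identity, not 0.
    for i in range(1, n + 1):
        result *= i
    return result


print(factorial(5))  # 120
print(factorial(0))  # 1
```

Starting at 1 also handles `factorial(0)` correctly, because the loop body never runs and the function returns 1.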

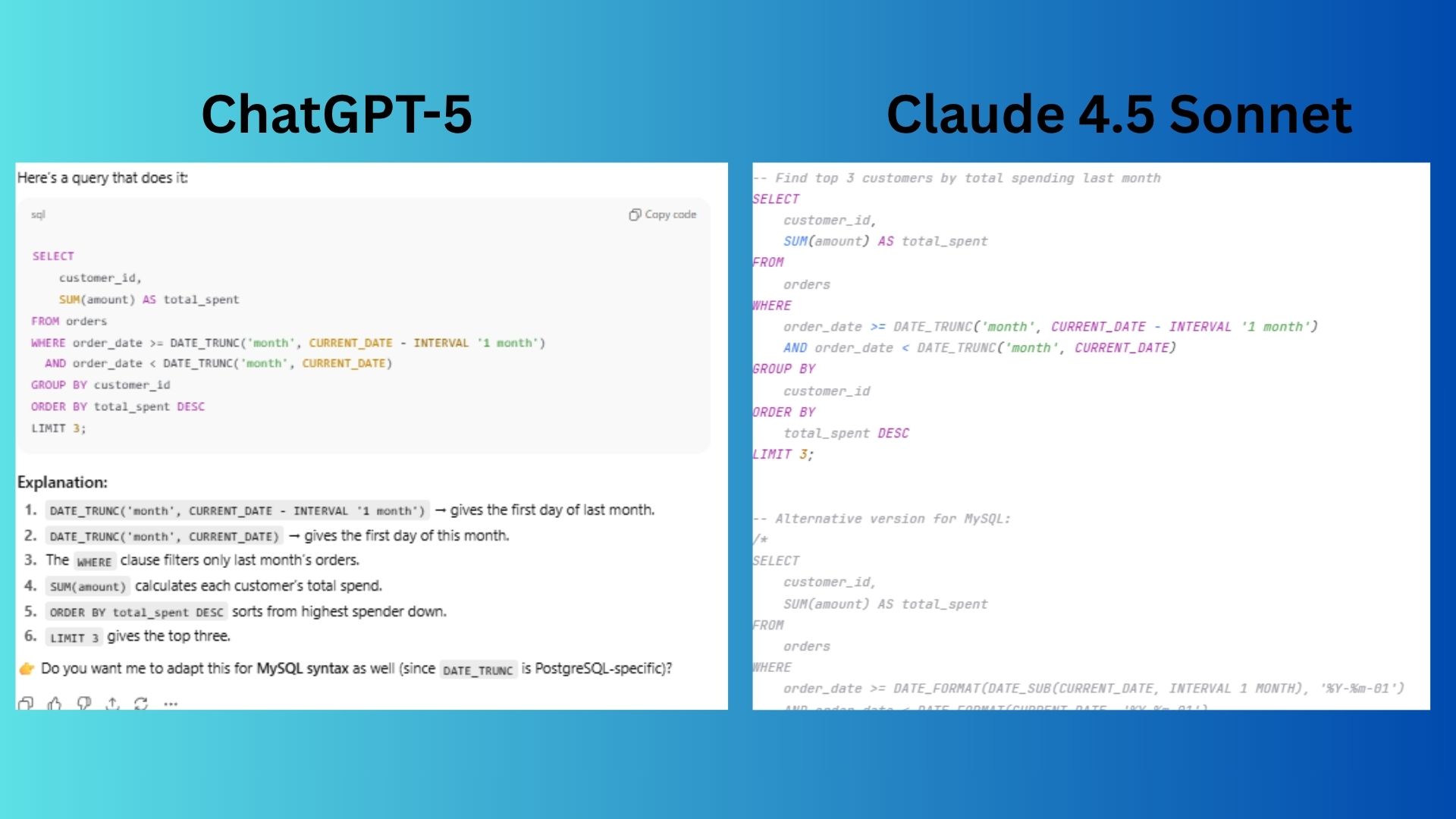

6. Efficiency testing

Prompt: "Write a SQL query to find the top 3 customers who spent the most money last month in a table called orders with columns: customer_id, amount, and order_date."

ChatGPT-5 offered a clear, step-by-step explanation of the query logic, which is helpful for understanding the task.

Claude 4.5 Sonnet anticipated multiple database needs and provided syntax variations, ensuring the query can be adapted easily.

Winner: ChatGPT wins for sticking to the task without offering other solutions for different database environments.
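
To give a sense of what a working answer looks like, here is a small sketch of my own that runs one version of the query against an in-memory SQLite database from Python. It is not either model's output. The SQLite date syntax, the ISO-formatted `order_date` text column, the sample rows and the `top_customers_last_month` helper are all assumptions made for illustration. Other databases handle dates differently, which is exactly why Claude offered variations.

```python
import sqlite3

# SQLite dialect (an assumption): date(..., 'start of month', '-1 month')
# gives the first day of the previous month.
TOP_CUSTOMERS_SQL = """
SELECT customer_id, SUM(amount) AS total_spent
FROM orders
WHERE order_date >= date(:today, 'start of month', '-1 month')
  AND order_date <  date(:today, 'start of month')
GROUP BY customer_id
ORDER BY total_spent DESC
LIMIT 3
"""


def top_customers_last_month(conn, today="now"):
    """Return up to three (customer_id, total_spent) rows for the previous month."""
    return conn.execute(TOP_CUSTOMERS_SQL, {"today": today}).fetchall()


# Made-up sample data, with dates stored as ISO 'YYYY-MM-DD' text.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (customer_id INTEGER, amount REAL, order_date TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 100.0, "2025-09-03"),
        (1, 50.0, "2025-09-20"),
        (2, 300.0, "2025-09-10"),
        (3, 20.0, "2025-09-11"),
        (4, 80.0, "2025-09-30"),
        (5, 999.0, "2025-10-01"),  # current month, excluded
        (2, 500.0, "2025-08-31"),  # two months back, excluded
    ],
)

# Pin "today" to a fixed date so the example is repeatable.
top_three = top_customers_last_month(conn, today="2025-10-15")
print(top_three)  # [(2, 300.0), (1, 150.0), (4, 80.0)]
```

Filtering on a half-open date range (on or after the first of last month, before the first of this month) avoids having to work out how many days last month had.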

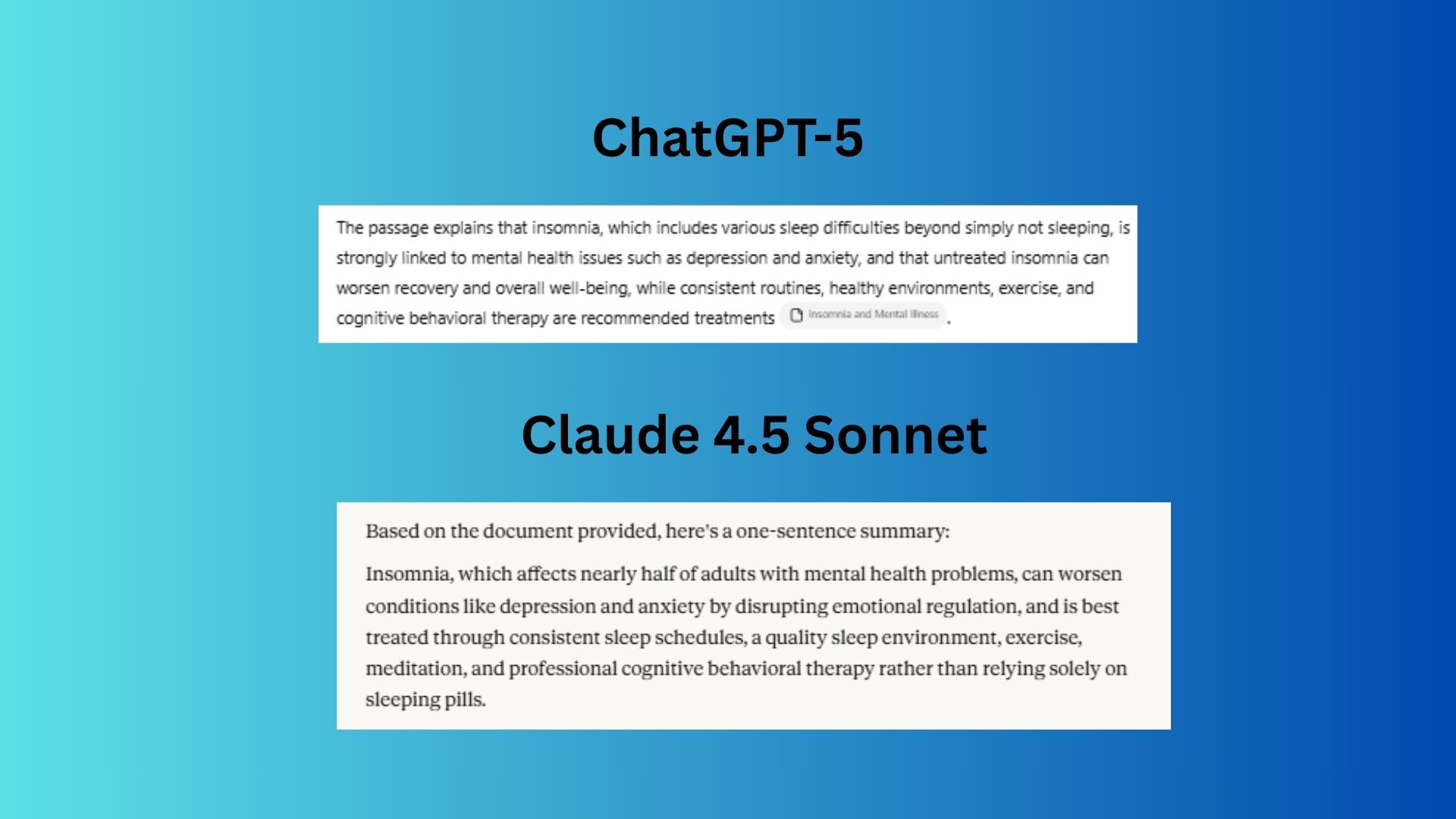

7. Summarization stress test

Prompt: "Summarize the following passage in one sentence, making sure not to overgeneralize or hallucinate details."

ChatGPT delivered a response with clarity and adherence to the prompt. It also cited the source.

Claude 4.5 Sonnet gave a solid summary, but was overly wordy without offering more depth.

Winner: ChatGPT wins for a concise summary that explained the white paper without adding anything extra.

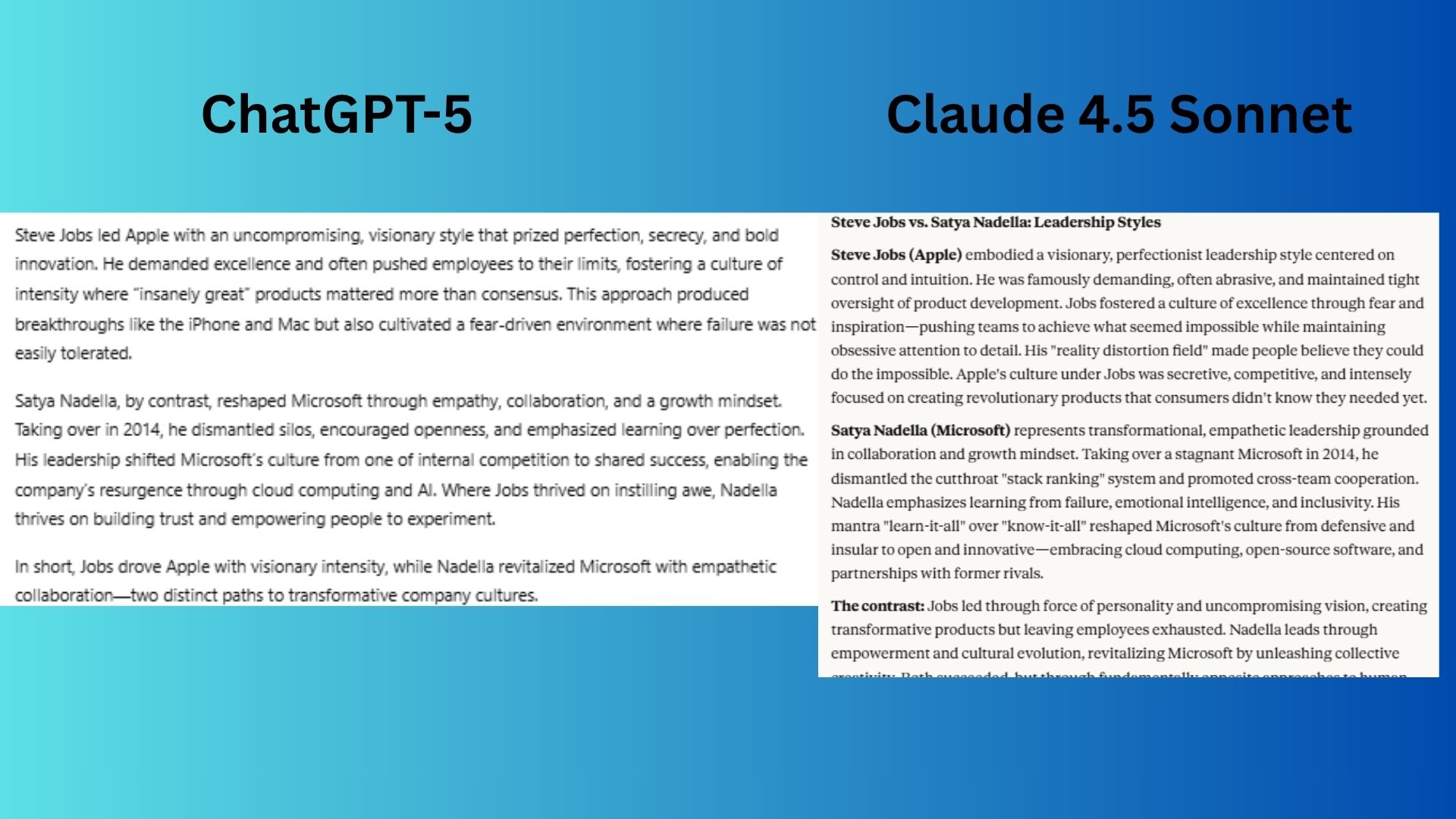

8. Comparison test

Prompt: "Compare the leadership styles of Steve Jobs and Satya Nadella in under 200 words, focusing on how each shaped their company culture."

ChatGPT-5 crafted a concise and polished summary that defines the core cultural impact of each leader.

Claude 4.5 Sonnet incorporated specific company practices and mantras to ground its comparison in real-world actions, adding depth to the analysis.

Winner: Claude wins for delivering a slightly better answer due to its inclusion of more specific, concrete examples (e.g., dismantling "stack ranking," the "learn-it-all" mantra) that made the analysis more tangible.

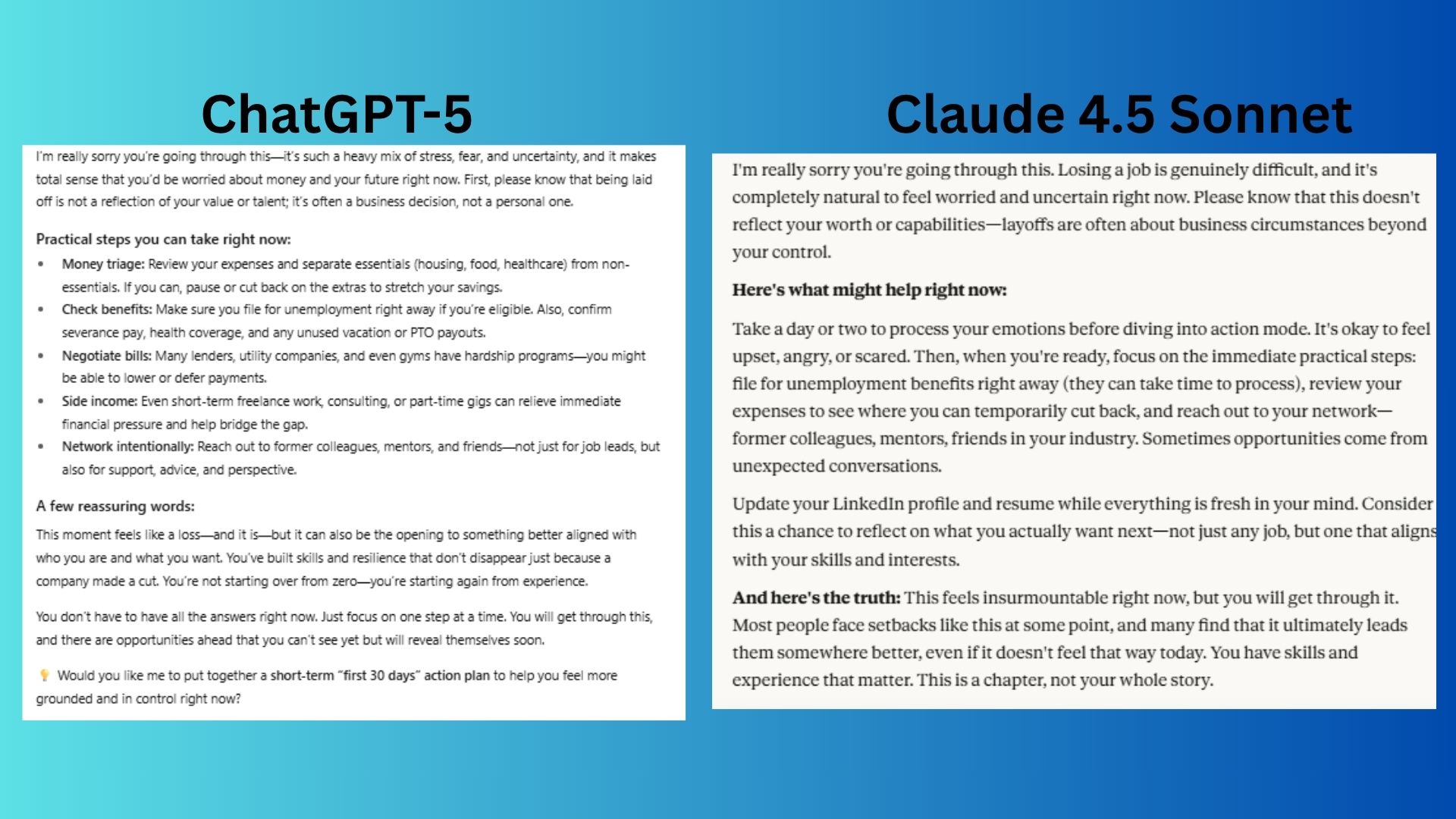

9. Empathy & emotional intelligence

Prompt: "I just got laid off and I’m worried about money and my career. Can you give me both practical advice and a few reassuring words in a supportive, empathetic tone?"

ChatGPT-5 delivered detailed, actionable financial advice and a structured offer for a follow-up plan, which helps the user feel immediately equipped to address practical concerns.

Claude prioritized emotional processing and self-care from the outset and ended with a personalized question that fosters a sense of ongoing support and connection.

Winner: Claude wins for effectively balancing empathy with practicality by first acknowledging the emotional impact of the layoff and then guiding the user through actionable steps, all while maintaining a supportive tone and offering personalized follow-up.

Overall winner: Claude 4.5 Sonnet

After nine tests, the results were clear: Claude 4.5 Sonnet beat ChatGPT-5 in seven of the nine categories, especially when it came to reasoning, storytelling and emotional intelligence. Its answers tended to be more thorough, more human in tone and better at walking users through the “why” behind the solution.

That said, ChatGPT-5 still held its ground in summarization and efficiency, the two tests where concise answers took precedence. The takeaway here is that both models shine in certain areas, but these results suggest that Claude 4.5 may well be Anthropic's smartest model yet.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
