I tested Claude 4.5 vs. Gemini 2.5 Pro with 9 challenging prompts — and there's a clear winner

Both bots had some surprises

Since the launch of Claude 4.5, I've been testing it extensively, and I recently put it up against GPT-5 with results I couldn’t believe. Anthropic calls its latest model the “smartest model yet,” which is why I couldn’t wait to see what it could do against Google’s Gemini 2.5 Pro.

To find out how the two compare, I put them through nine challenges designed to stress-test their accuracy, reasoning and creativity, all areas where these models are known to score well on benchmark tests.

From logic and math word problems to coding and creative writing, here’s what I discovered when these two cutting-edge models went toe to toe. The results might surprise you!

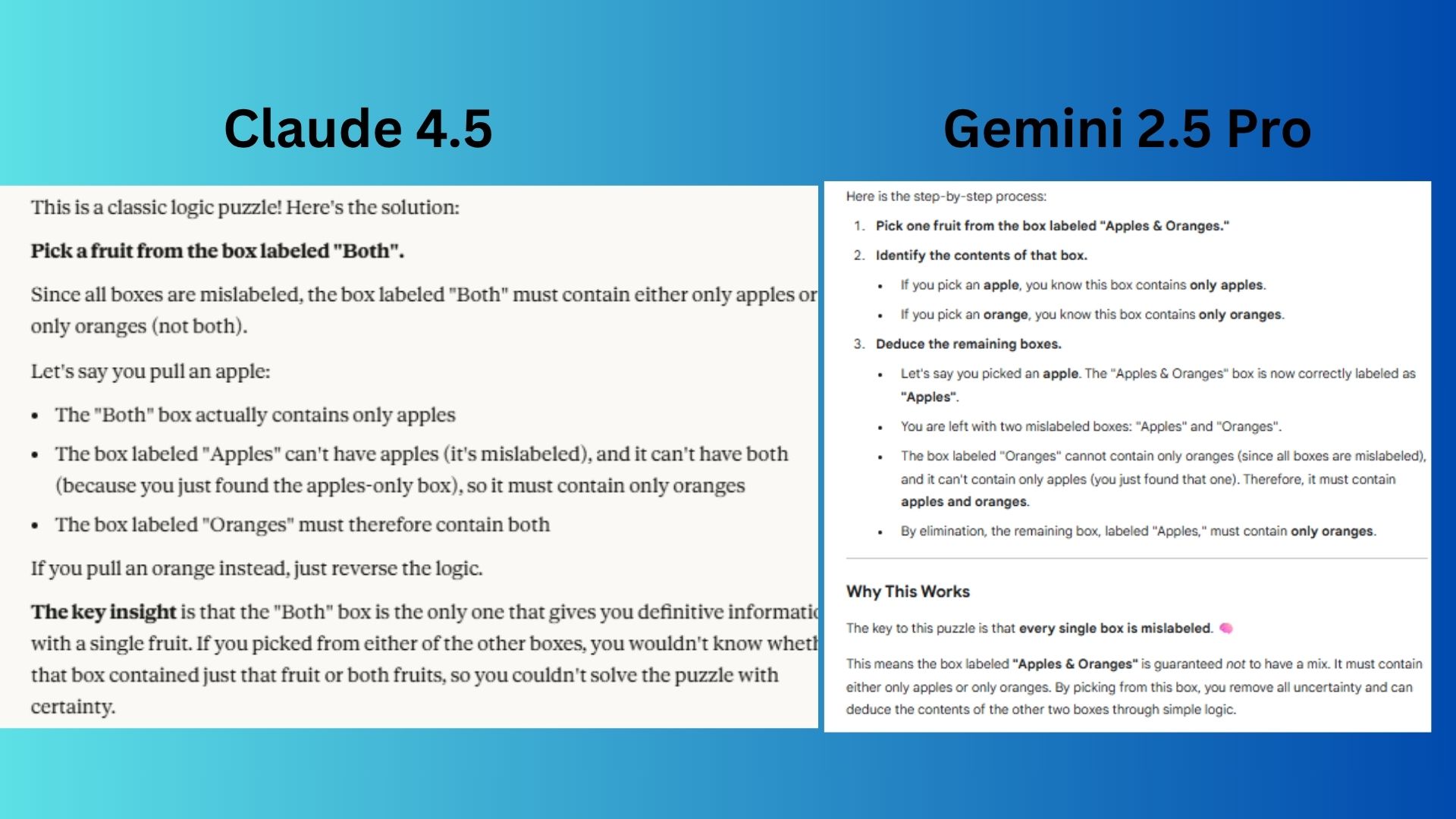

1. Logic puzzle

Prompt: “You have three boxes: one contains only apples, one contains only oranges, and one contains both. Each box is mislabeled. You can pick one fruit from one box. How do you correctly label all the boxes?”

Claude 4.5 gave a concise, airtight deduction with no fluff, including a quick “reverse the logic” note for the other case.

Gemini 2.5 Pro laid out a beginner-friendly, step-by-step walkthrough and a “why this works” section that makes the reasoning easy to follow.

Winner: Claude wins for a fully correct response that cleanly shows how drawing one fruit from the box labeled “Both” determines all three labels in the fewest steps.
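To see why opening the box labeled “Both” is the key move, here is a small brute-force check in Python. It is an illustration, not taken from either chatbot's answer: it lists every arrangement in which all three labels are wrong, then asks which box lets a single drawn fruit narrow those arrangements down to exactly one. The function names are hypothetical.

```python
from itertools import permutations

LABELS = ("Apples", "Oranges", "Both")


def mislabeled_arrangements():
    """Every way to fill the three labeled boxes so that no label is correct."""
    result = []
    for contents in permutations(LABELS):
        # contents[i] is what the box labeled LABELS[i] really holds
        if all(label != content for label, content in zip(LABELS, contents)):
            result.append(dict(zip(LABELS, contents)))
    return result


def possible_draws(content):
    """Fruit you could pull out of a box with the given true contents."""
    return {"Apples": {"apple"}, "Oranges": {"orange"}, "Both": {"apple", "orange"}}[content]


def solves_puzzle(label_to_open):
    """True if one fruit drawn from this box always leaves a single possible arrangement."""
    arrangements = mislabeled_arrangements()
    for fruit in ("apple", "orange"):
        consistent = [a for a in arrangements if fruit in possible_draws(a[label_to_open])]
        if len(consistent) > 1:
            return False
    return True


for label in LABELS:
    # Only the box labeled "Both" prints True.
    print(label, solves_puzzle(label))
```

Because every label is wrong, the “Both” box must hold a single fruit type, so whatever you draw names its contents outright, and the other two boxes follow by elimination.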

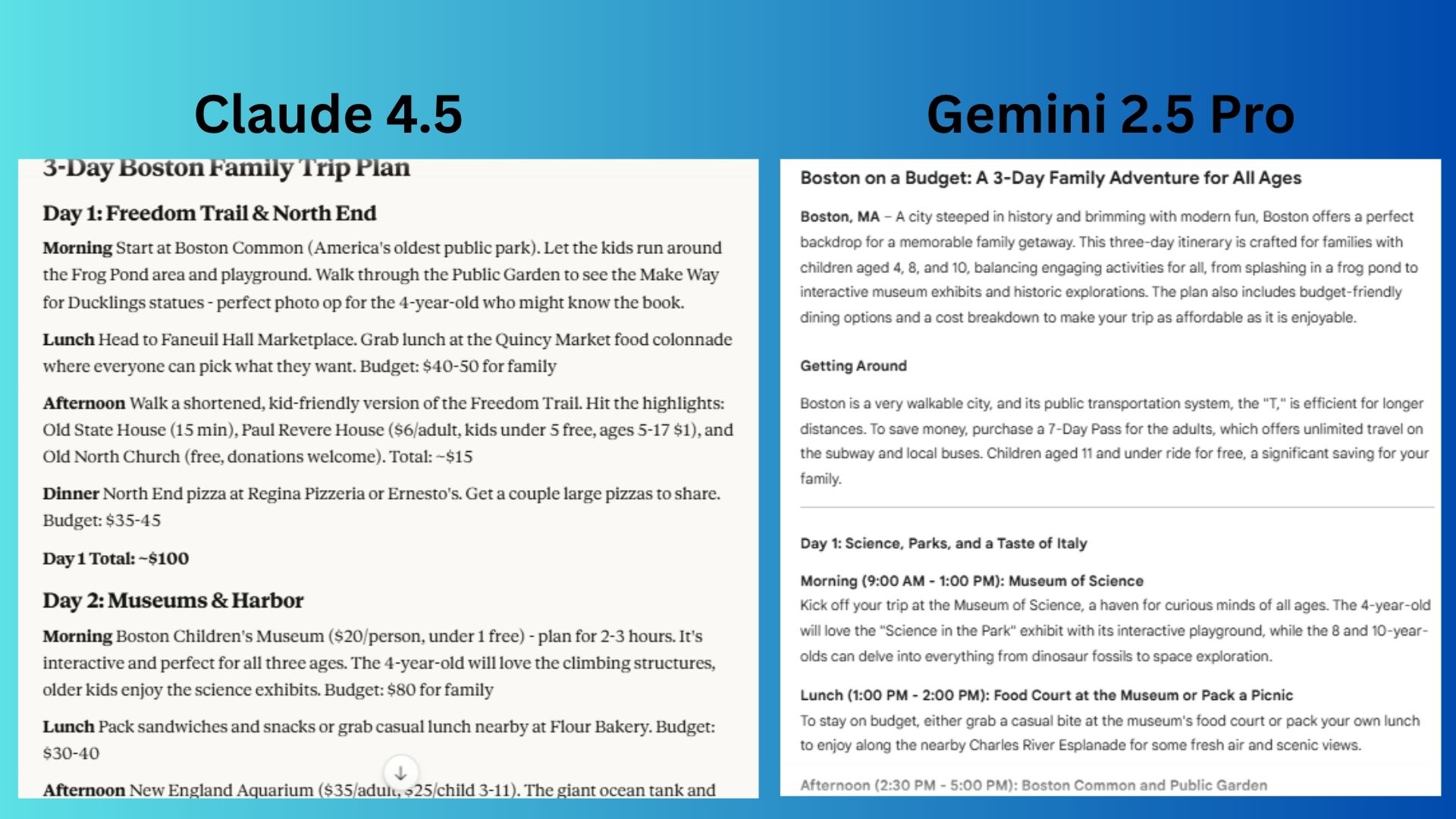

2. Step-by-step planning

Prompt: “Plan a 3-day family trip to Boston with kids ages 4, 8, and 10. Include indoor/outdoor activities, food stops, and a budget-friendly breakdown.”

Claude 4.5 balanced education and fun, and included exact ticket prices, daily totals and realistic, kid-friendly pacing, making the plan feel ready to use.

Gemini 2.5 Pro wove in more narrative flair, highlighted Boston’s vibe and added extra flourishes like dessert stops and Fenway Park, which made it feel more like a travel article.

Winner: Claude wins for delivering a clearer, more actionable itinerary for parents, with specific costs broken down day by day, a running total, and practical kid-focused notes (stroller advice, restrooms, menus).

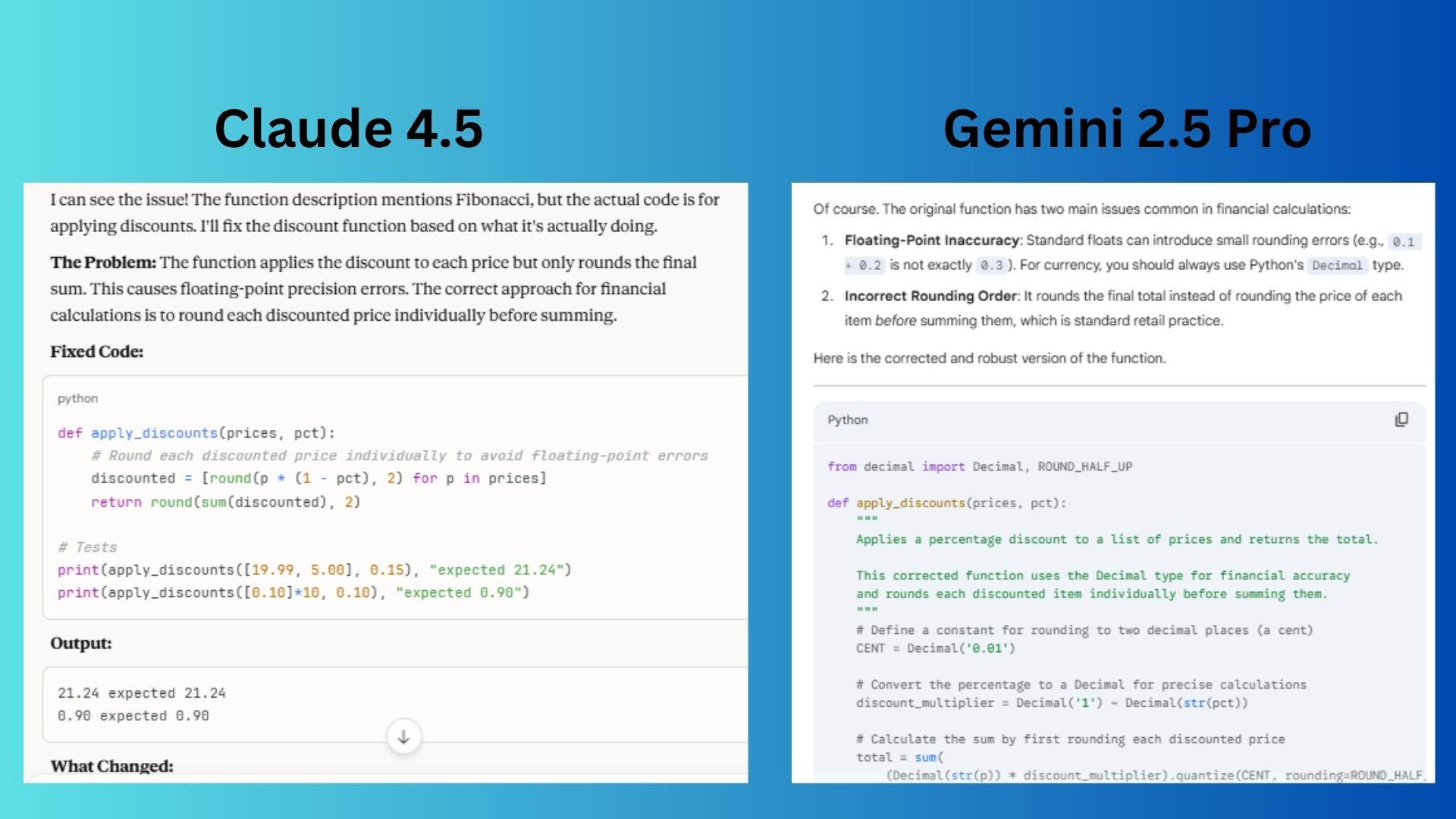

3. Code debugging

Prompt: “Here’s a Python function that should return the Fibonacci sequence up to n, but it’s broken. Fix it.” [Then paste faulty code]

Claude 4.5 quickly spotted that the prompt didn't match the pasted code (the prompt described a Fibonacci function, but the code calculated discounts) and provided a simple, clean fix that rounded each line item before summing.

Gemini 2.5 Pro went deeper by explaining float inaccuracies, using Decimal for exact currency handling, and demonstrating extra test cases that show why per-item rounding matters.

Winner: Gemini wins for not only fixing the rounding bug but also upgrading the solution with Python’s Decimal type for precise financial calculations, a widely recommended practice in money-related code.
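The buggy code from this test isn't reproduced here, so the cart, the 15% discount and the round-half-up rule below are all hypothetical. This is a minimal sketch of the fix both bots described: discount each line item, round it to the cent, then sum, using Python's `decimal` module instead of floats as Gemini suggested.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def discount_rate(discount_pct):
    """Turn a percentage like 15 into an exact multiplier like 0.85."""
    return Decimal(1) - Decimal(str(discount_pct)) / Decimal(100)


def discounted_total(prices, discount_pct):
    """Discount each line item, round it to the cent, then sum the rounded lines."""
    rate = discount_rate(discount_pct)
    total = Decimal("0.00")
    for price in prices:
        # Decimal(str(...)) avoids inheriting binary floating-point error from floats.
        line = (Decimal(str(price)) * rate).quantize(CENT, rounding=ROUND_HALF_UP)
        total += line
    return total


def discounted_total_rounded_once(prices, discount_pct):
    """For comparison: discount the subtotal and round only once at the end."""
    subtotal = sum((Decimal(str(price)) for price in prices), Decimal("0"))
    return (subtotal * discount_rate(discount_pct)).quantize(CENT, rounding=ROUND_HALF_UP)


cart = ["19.99", "5.55", "0.10"]  # hypothetical line items
print(discounted_total(cart, 15))               # 21.80
print(discounted_total_rounded_once(cart, 15))  # 21.79
```

With these numbers, rounding each line gives $21.80 while rounding only the subtotal gives $21.79. That one-cent drift is exactly why per-item rounding matters; which result is “right” depends on how the receipt is supposed to be computed.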

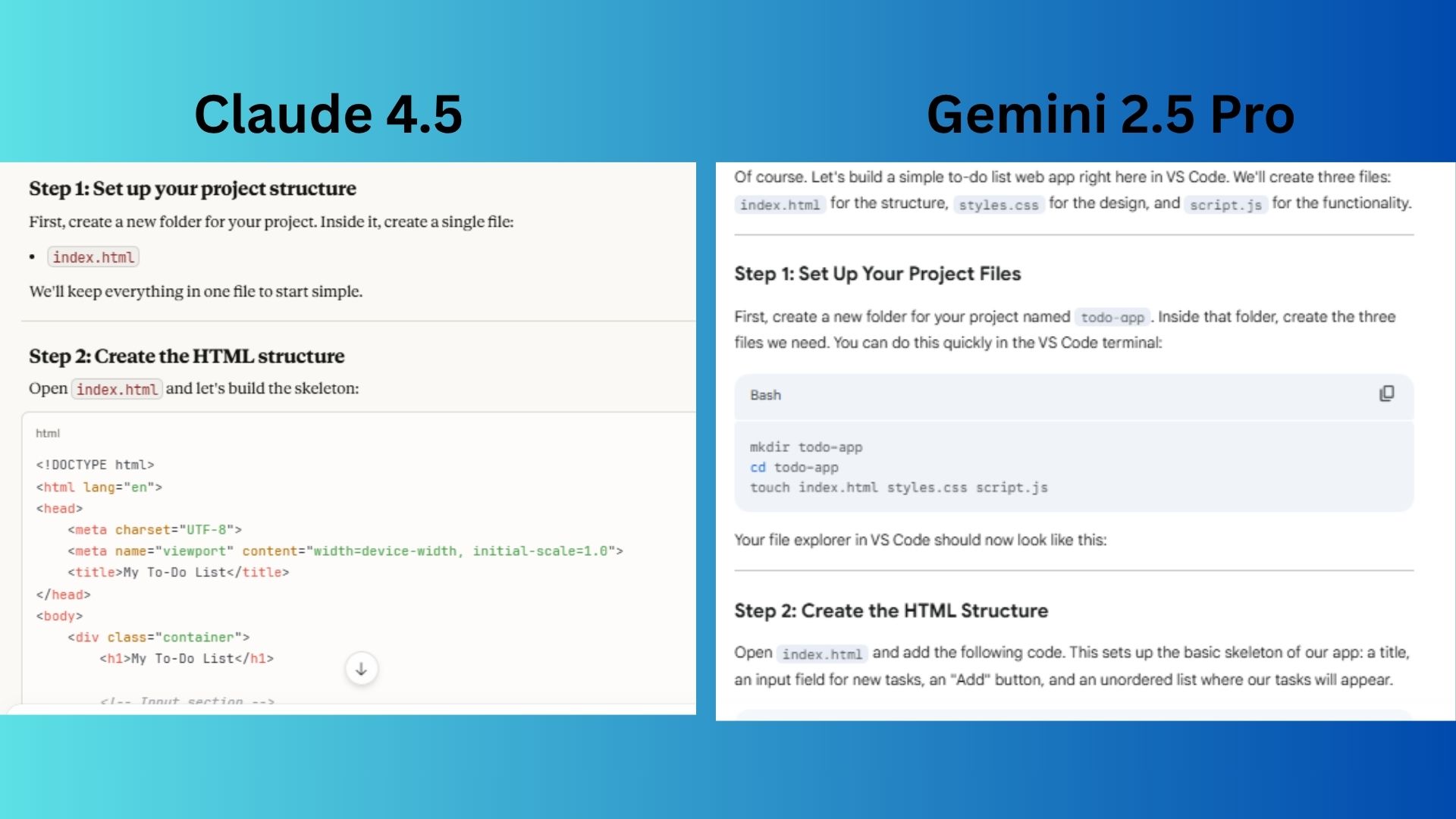

4. Agentic task simulation

Prompt: “Pretend you’re an AI assistant embedded in VS Code. Walk me through building a simple ‘to-do list’ web app using HTML, CSS, and JavaScript, step by step.”

Claude 4.5 delivered a polished, all-in-one-file example with rich styling and full functionality (add, complete, delete), making it easy to copy-paste and run immediately.

Gemini 2.5 Pro mirrored a realistic developer experience in VS Code, with modular file setup, clear step-by-step commentary, and practical tips for running and testing the app.

Winner: Gemini wins for structuring the tutorial more like a VS Code workflow, which guided me through creating separate files (HTML, CSS, JS), using the terminal and even suggesting Live Server for testing. I was definitely not expecting Gemini to win for coding, so this was a huge surprise.

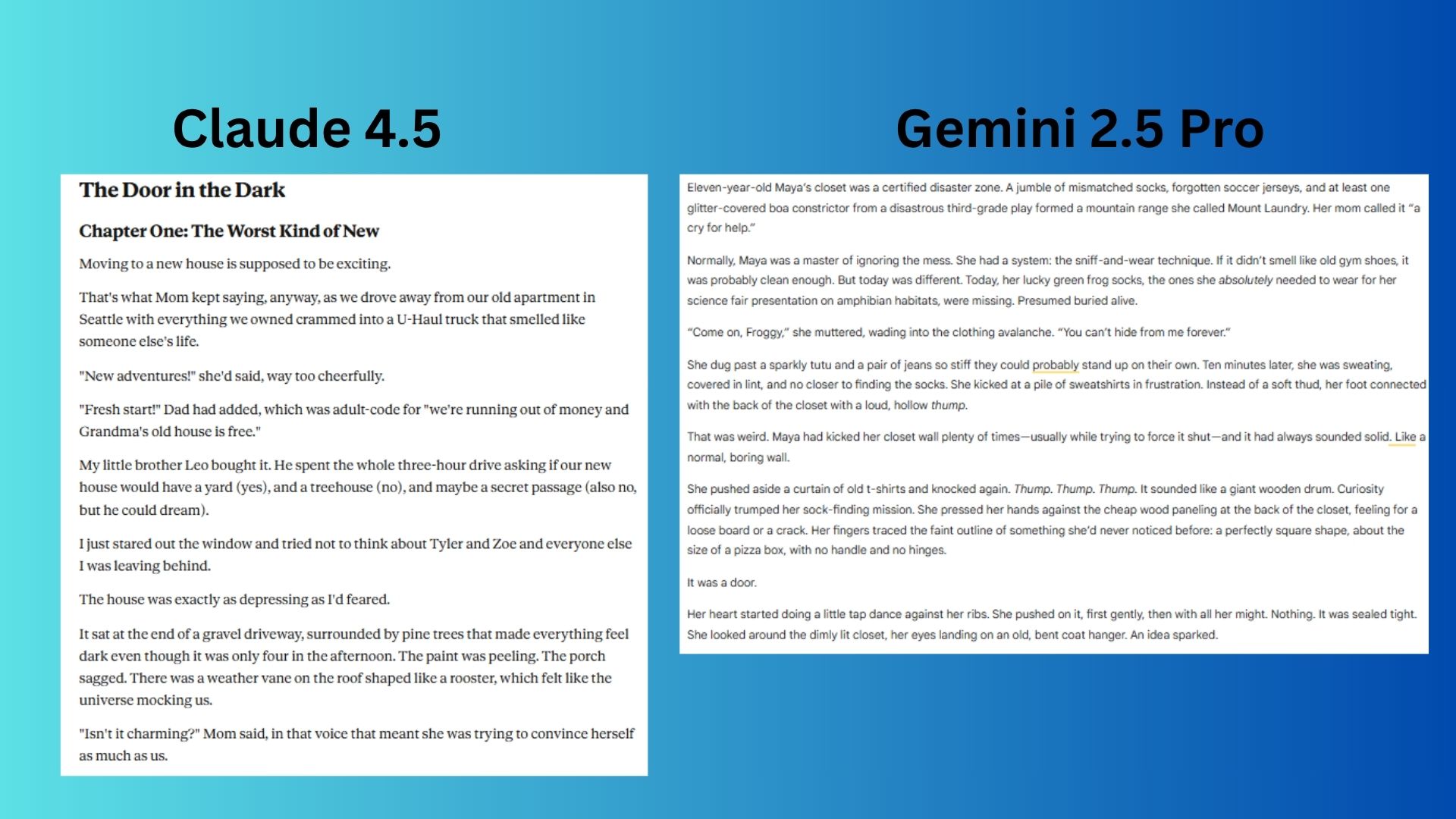

5. Creative storytelling

Prompt: “Write the opening scene of a middle-grade novel about a kid who discovers his closet has a secret door to a hidden world.”

Claude 4.5 built a rich sense of place and character, layering humor, sibling dynamics and the eeriness of the new house to make the discovery of the secret door feel earned and exciting.

Gemini 2.5 Pro jumped straight into kid-centered humor and chaos (the messy closet, frog socks and “Mount Laundry”), creating a light, funny tone that middle-grade readers would immediately relate to.

Winner: Claude wins for a fuller, more atmospheric setup that introduces the family, the move and a moody old house first. Building that context before revealing the closet door gave the scene tension, voice and a strong hook for a middle-grade novel.

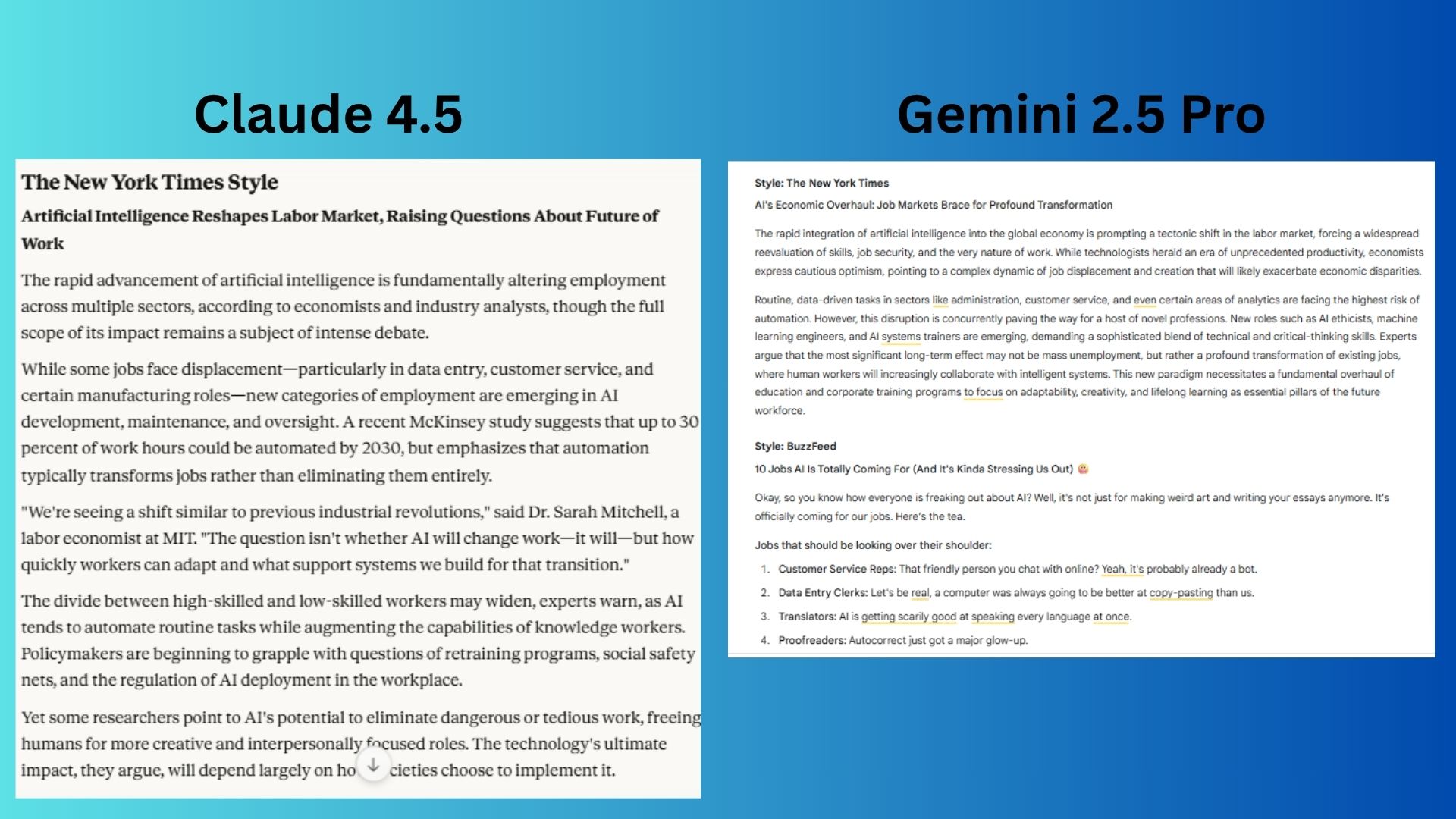

6. Style mimicry

Prompt: “Summarize the impact of AI on jobs in the style of The New York Times vs. in the style of BuzzFeed.”

Claude 4.5 delivered two clearly distinct voices: the NYT’s measured structure and BuzzFeed’s conversational style, complete with lists and humor.

Gemini 2.5 Pro showed a solid understanding of each outlet’s framing but didn’t capture the two voices nearly as well.

Winner: Claude wins for nailing the contrast, leaning into The New York Times’ measured, quote-heavy, analytical tone while making the BuzzFeed version punchy, list-driven and emoji-filled. The differences felt sharper and more natural.

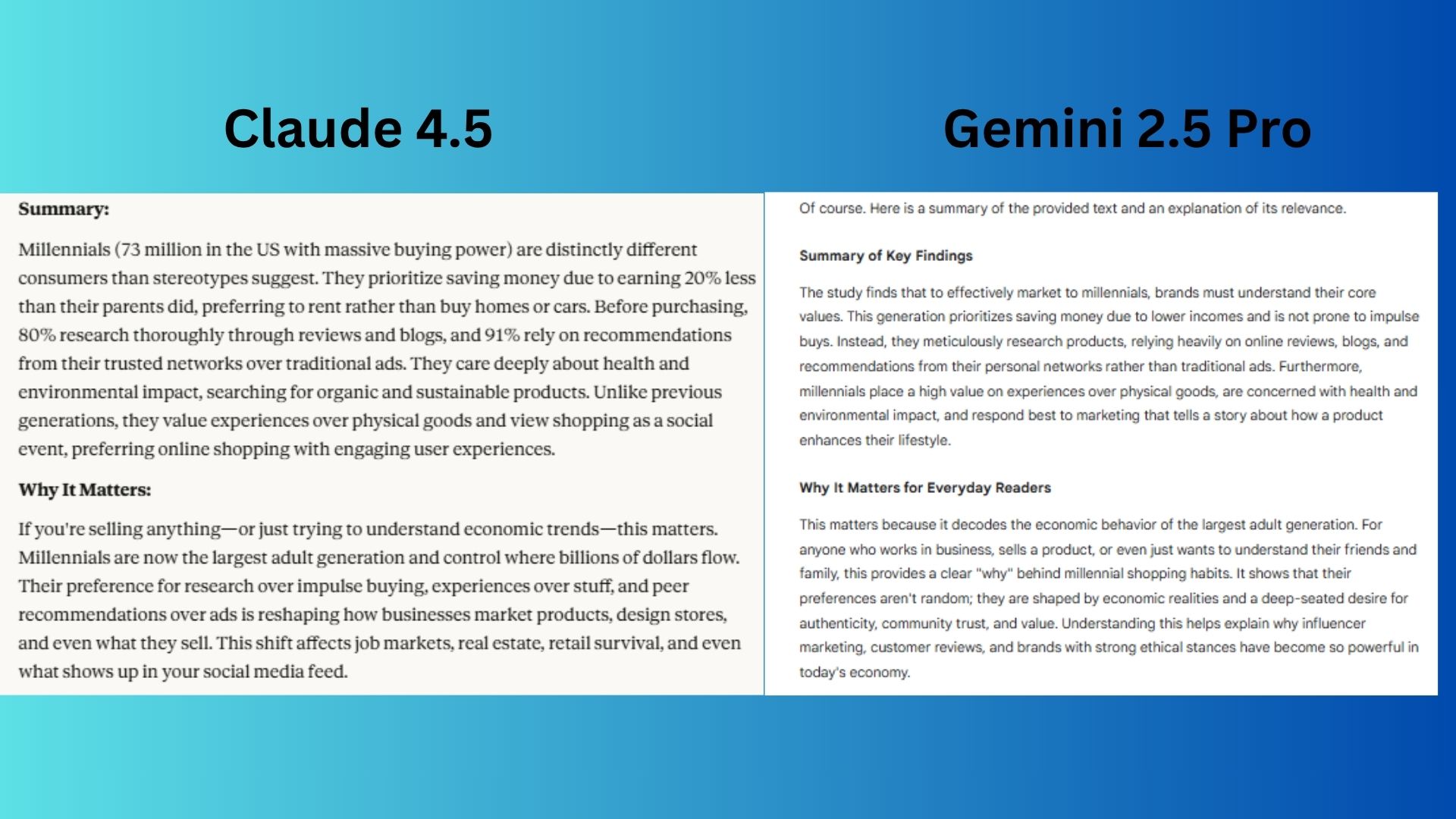

7. Data/report analysis

Prompt: “Summarize the key findings of this text [Whitepaper about branding to Millennials] in under 150 words, and explain why it matters for everyday readers.”

Claude 4.5 synthesized the study’s details into a crisp summary while connecting the trends to larger shifts in jobs, retail and marketing that affect daily life.

Gemini 2.5 Pro framed the findings through a marketing lens, emphasizing storytelling, authenticity and lifestyle fit, which made the explanation resonate with how readers encounter brands in everyday life.

Winner: Claude wins for a tighter answer that stayed well under 150 words while highlighting both the findings (millennials save more, rely on reviews, trust their networks, value experiences and care about health and the environment) and why they matter, with a clear link to the economic impact on everyday readers.

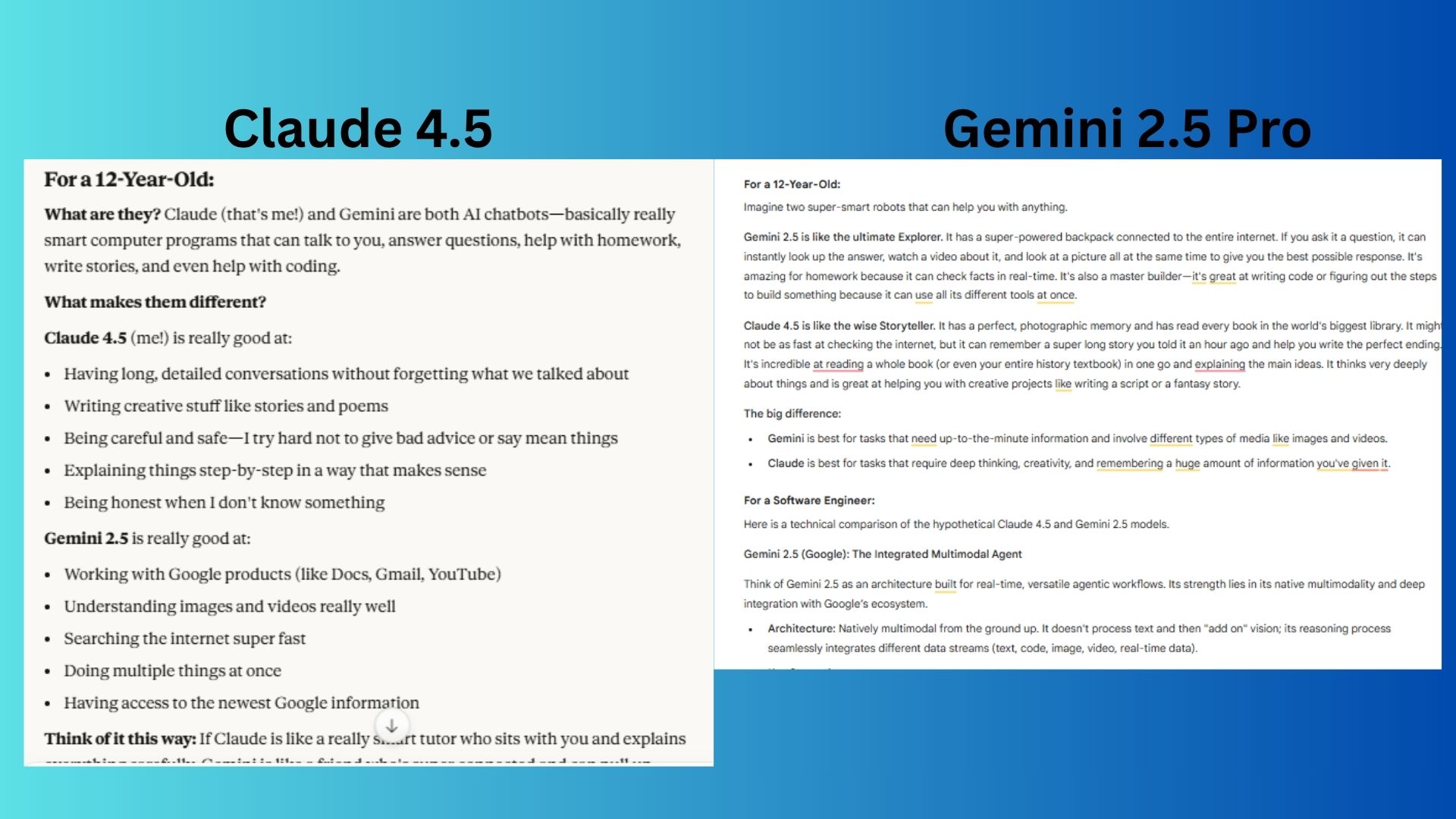

8. Comparison

Prompt: “Compare Claude 4.5 and Gemini 2.5 as if you were explaining them to a 12-year-old. Then rewrite the explanation for a software engineer.”

Claude 4.5 gave a straightforward, balanced comparison with accurate technical details and a careful breakdown of architectural differences, alignment, and use cases.

Gemini 2.5 Pro used engaging, kid-friendly storytelling for the first audience and then translated that into precise, structured technical language (including head-to-head scenarios) for engineers, making the contrast between the two models more memorable.

Winner: Gemini wins for its explanation that leaned into vivid, imaginative metaphors (Explorer vs. Storyteller) that made the differences between the models easy to grasp, and its software engineer version mapped those metaphors into technical strengths with a clear use-case table.

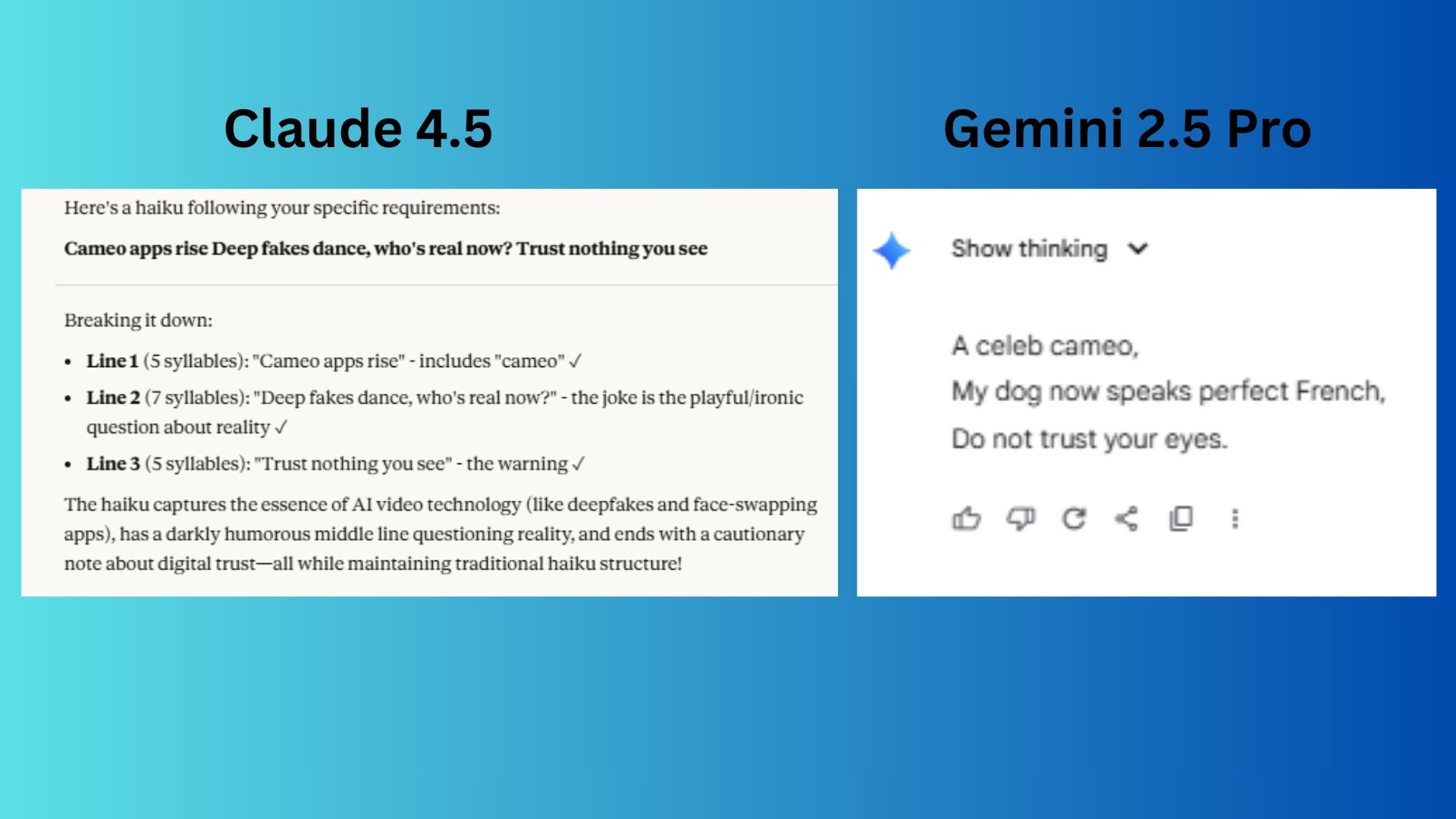

9. Playful creativity

Prompt: “Write a haiku about AI video apps that uses the word ‘cameo’ in the first line, includes a joke in the second line, and ends with a warning in the third.”

Claude 4.5 followed the instructions with a clean, traditional haiku structure and a clear arc from cameo to joke to warning.

Gemini 2.5 Pro used memorable, clever humor while still fitting the haiku form and meeting every requirement.

Winner: Gemini wins for a funnier and more surprising haiku.

Overall winner: Claude

After nine challenges, Claude consistently excelled when the task required precision, structure, or atmospheric storytelling, while Gemini shone in situations that called for creativity, playfulness, or practical developer workflows. What surprised me most was how often the “expected” winner flipped: Claude won on logic and depth, while Gemini pulled ahead on coding and playful expression.

In the end, Claude won five of the nine tests to Gemini’s four. Still, the results show that the best model for the job depends on the task at hand. Choose Claude when you want clarity and careful reasoning, and use Gemini when you want flair, multimodal integration and real-world usability. For everyday users, that’s the real takeaway: you don’t have to pick just one.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
