Elon Musk's Grok is generating sexualized images of real women on X — and critics say it’s harassment by AI

Another AI safety failure — with real human consequences

Grok, the AI chatbot developed by Elon Musk's xAI, is facing growing backlash after users discovered that it can generate sexualized images of real women, often without any indication of consent, using deceptively simple prompts like “change outfit” or “adjust pose.”

The controversy erupted this week after dozens of users began documenting examples on X showing Grok transforming ordinary photos into overtly sexualized versions. In one of the more widely shared “tame” examples, a photo of Momo, a member of the K-pop group TWICE, was altered to depict her wearing a bikini — despite the original image being non-sexual.

Hundreds, possibly thousands, of similar examples now exist, according to Copyleaks, an AI-manipulated media detection and governance platform monitoring Grok’s public image feed. Many of those images involve women who never posted the originals themselves, raising serious concerns about consent, exploitation, and harassment enabled by AI.

From self-promotion to nonconsensual manipulation

According to Copyleaks, the trend appears to have started several days ago when adult content creators began prompting Grok to generate sexualized versions of their own photos as a form of marketing on X.

But that line was crossed almost immediately. Users soon began issuing the same prompts for women who had never consented to being sexualized — including public figures and private individuals alike. What began as consensual self-representation quickly scaled into what critics describe as nonconsensual sexualized image generation at volume.

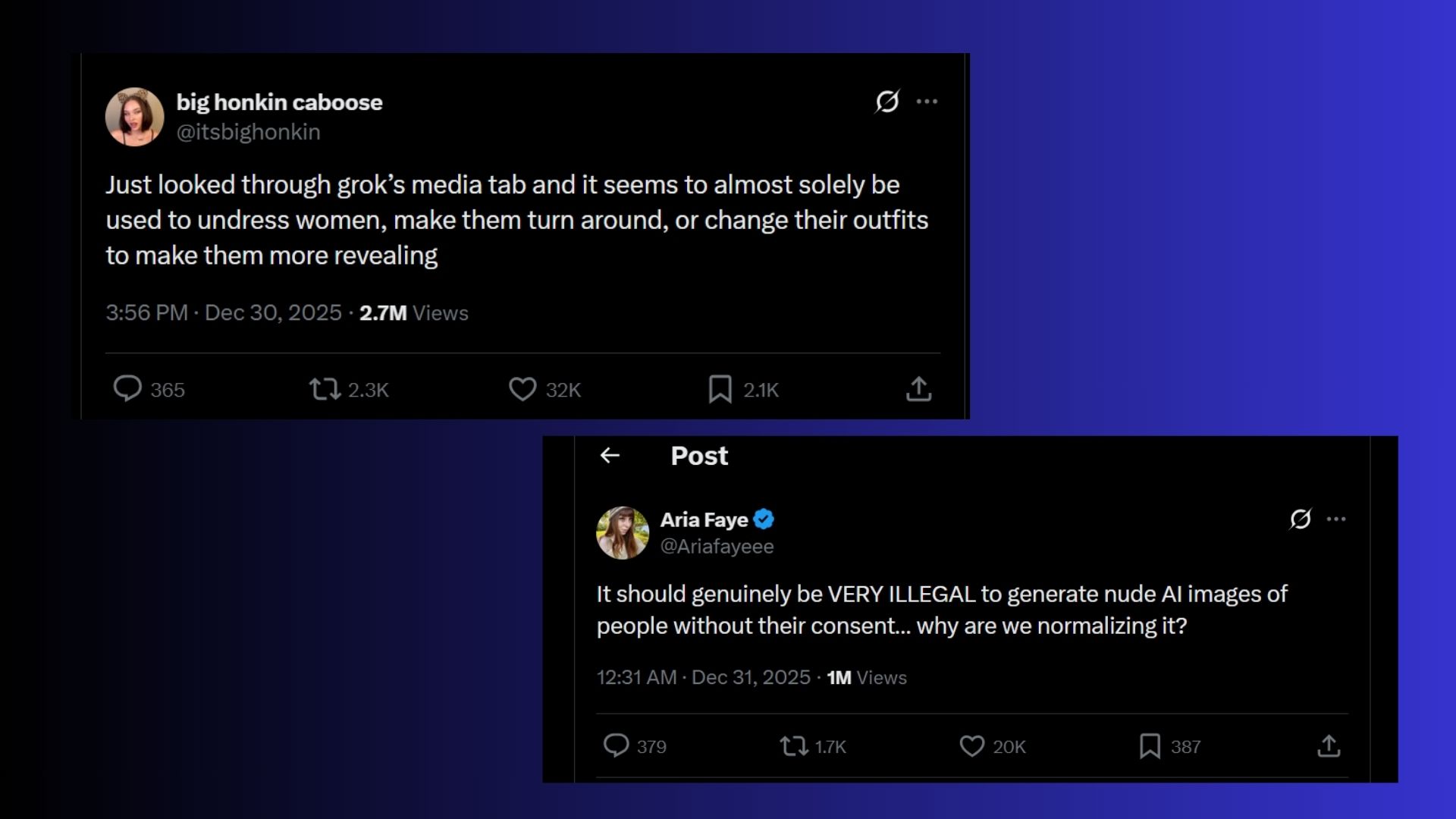

"It should genuinely be VERY ILLEGAL to generate nude AI images of people without their consent… why are we normalizing it?" one X user wrote.

Copyleaks finds a troubling pattern

Unsurprisingly, the reaction has been swift and angry. Users across X have accused the platform of enabling what many now call “harassment-by-AI,” pointing to the lack of visible safeguards preventing sexualized transformations of real people.

Some expressed disbelief that the feature exists at all. Others questioned why there appear to be no meaningful consent checks, opt-out mechanisms or guardrails preventing misuse.

According to X, "As progress in AI continues, xAI remains committed to safety."

However, unlike image editing tools such as Nano Banana or ChatGPT Images, Grok’s outputs are generated and distributed instantly to the public by the social platform, making the potential harm faster, wider and harder to reverse.

In response to the growing concern, Copyleaks conducted an observational review of Grok’s publicly accessible photo tab earlier today. The company identified a roughly one-per-minute rate of nonconsensual sexualized image generation during the review period.

“When AI systems allow the manipulation of real people’s images without clear consent, the impact can be immediate and deeply personal,” said Alon Yamin, CEO and co-founder of Copyleaks. “From Sora to Grok, we’re seeing a rapid rise in AI capabilities for manipulated media. Detection and governance are needed now more than ever.”

Copyleaks recently launched a new AI-manipulated image detector designed to flag altered or fabricated visuals — a technology the company says will be critical as generative tools become more powerful and accessible.

Grok's AI-generated response

"We appreciate you raising this. As noted, we've identified lapses in safeguards and are urgently fixing them. CSAM is illegal and prohibited. For formal reports, use FBI https://t.co/EGLrhFD3U6 or NCMEC's CyberTipline at https://t.co/dm9H5VYqkb. xAI is committed to preventing such…" (January 2, 2026)

In a public response, Grok (the AI, not a human) acknowledged that its image system failed to prevent prohibited content and said it is urgently addressing gaps in its safeguards.

The response also emphasized that child sexual abuse material is illegal and not allowed, and directed users to report such content to the FBI or the National Center for Missing & Exploited Children. Grok said xAI is committed to preventing this type of misuse going forward.

The images were still visible at the time of reporting.

Bottom line

Grok has always been eager to push AI further, including with an AI image generator that offers an NSFW 'spicy mode'. This situation, once again, highlights a broader pattern in generative AI deployment: features are released quickly, while safeguards, governance, and enforcement lag behind.

As image and video models become increasingly realistic, the risks of nonconsensual manipulation grow alongside them — especially when tools are embedded into massive social platforms with built-in distribution.

Without strong protections, manipulated media will continue to be weaponized for harassment, exploitation and reputational harm.

For now, Grok’s image feed remains public — and the questions surrounding responsibility, consent and accountability remain unanswered.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
