Neon app that sold recorded phone calls for AI training is now offline after a security flaw exposed all of its users' data

You definitely don't want to willingly give up your personal data for a few bucks

Editor's note: After yesterday's story was published, TechCrunch received additional information about the Neon app indicating a security flaw that allowed any user to access the phone numbers, call recordings, and transcripts of any other Neon user. After being alerted to the issue, Neon founder Alex Kiam took the app's servers offline and notified users that service was paused.

The original story follows below.

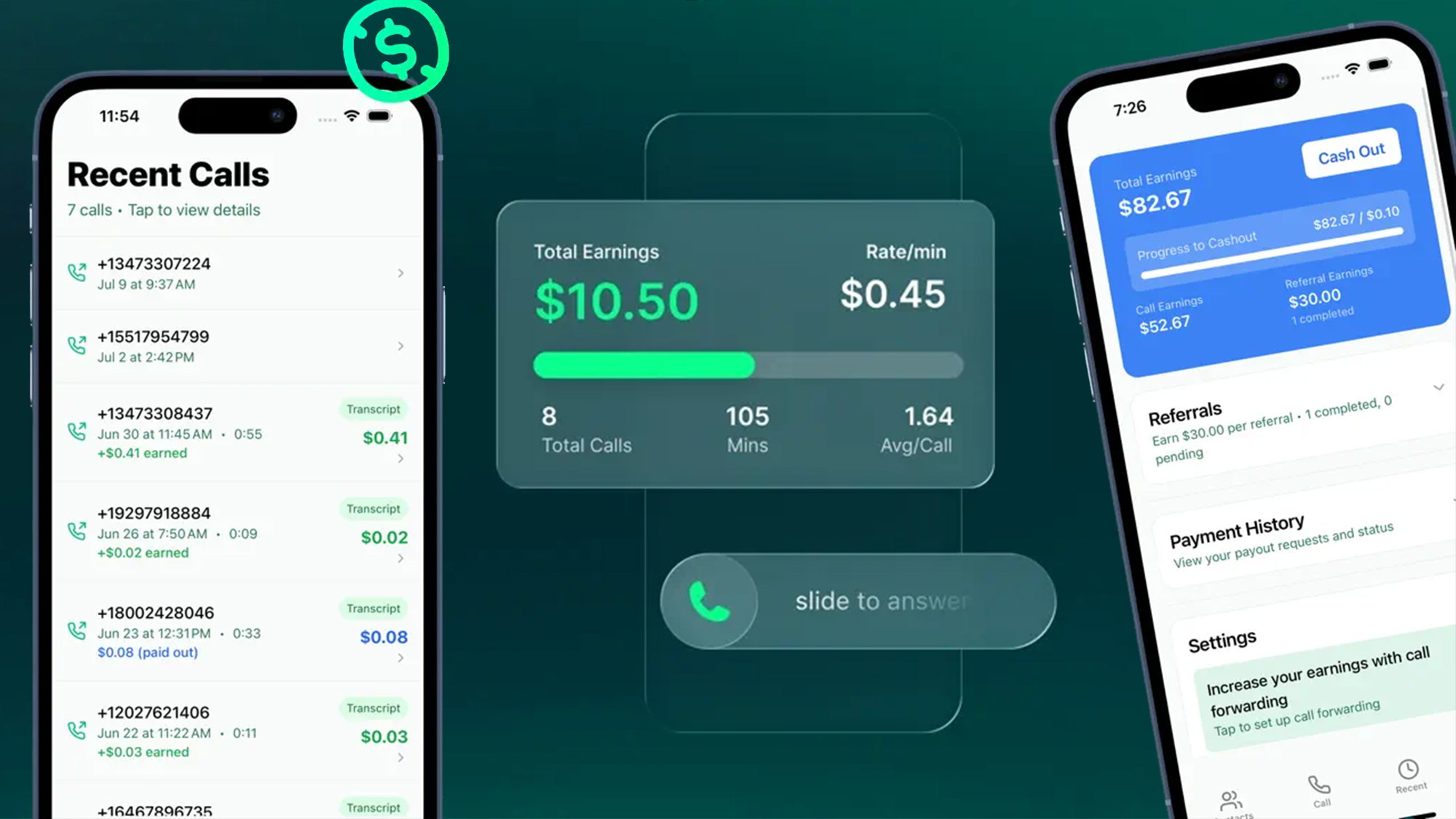

Neon Mobile, a new app that pays users for recordings of their phone calls, has skyrocketed to the number two spot in the App Store's social networking category.

As reported by TechCrunch, the app refers to itself as a “moneymaking tool” and offers to pay users for access to their audio conversations, which it then turns around and sells to AI companies.

The app pays 30 cents a minute for phone calls to other Neon users, or up to $30 a day for calls to non-Neon users, and it pays for referrals as well. It captures inbound and outbound calls on the best iPhones and best Android phones, but records only your side of the call unless the other party is also a Neon user. This collected data is then sold to AI companies, according to Neon, “for the purpose of developing, training, testing, and improving machine learning models, artificial intelligence tools and systems, and related technologies.”

Neon says that it removes personal data like names, emails and phone numbers before the recordings are handed over, but it doesn’t specify how the AI companies that buy them will use them. That’s troubling in an era of deepfakes, phishing and vishing, especially when Neon’s vague and broad license statement gives it so much latitude for further use. Those terms also cover beta features offered without any warranty, and a data set like this is a gold mine for attackers in the event of a breach.

TechCrunch reports that when it tested the app, Neon did not properly notify the user that the call was being recorded, and the recipient wasn't alerted about possible recording either. Given the app's popularity, many users seem unconcerned about selling off their privacy. And given the number of data breaches that occur each year, many people may figure that data privacy is already a lost cause. However, there’s a stark difference between having your car broken into and leaving your keys in it.

How AI training puts you and your data at risk

Just like with a data leak, willingly giving away huge chunks of personal information can leave you open to serious consequences. Once you hand your information over to someone else, it's out of your control: it can be sold, resold, exposed in a breach, leaked to the dark web and more.

AI models can reveal sensitive information, which may lead to problems with employment, lending or housing. Additionally, there are few consistent safeguards or standards across AI companies, and data breaches are becoming more and more common. Privacy issues can arise too: if a company is sold or goes out of business, there could be legal complications over what happens to the data it holds.

Your data is like currency, which is why I recommend going to great lengths to protect it by investing in one of the best identity theft protection services and by installing one of the best antivirus software suites on all of your devices. You also want to stay alert to phishing attacks and scams, and avoid clicking on unexpected links. Why willingly hand over this information for such a cheap price?

The Neon Mobile app is climbing the rankings now, but we'll see if it can hold a top spot in Apple's App Store for long. Just like with the other security stories I cover, if something seems too good to be true, it probably is, and that's the feeling I get with this new app. However, I could be wrong, and Neon Mobile could be the first of many apps that pay you for your data for AI training. Only time will tell, but based on what I've seen and learned so far, I'd recommend avoiding it for now.

Amber Bouman is the senior security editor at Tom's Guide, where she writes about antivirus software, home security, identity theft and more. She has long had an interest in personal security, both online and off, and also has an appreciation for martial arts and edged weapons. With over two decades of experience working in tech journalism, Amber has written for a number of publications including PC World, Maximum PC, TechHive, and Engadget, covering everything from smartphones to smart breast pumps.