Google's adding more accessibility features to Chrome and Android — and they're powered by Gemini

There are some helpful changes incoming for phones and laptops

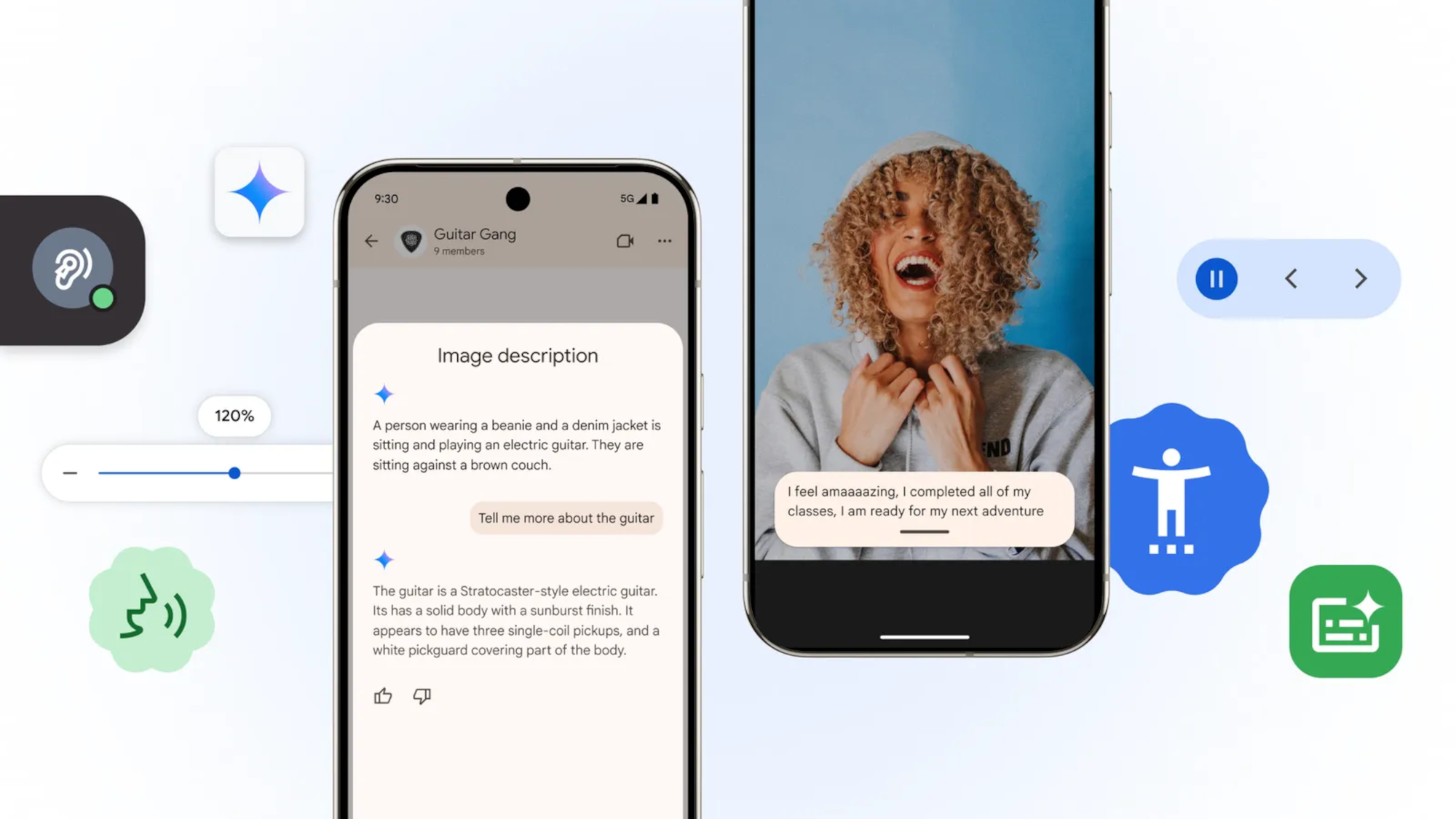

Google has revealed new Gemini-powered accessibility updates for Android and Chrome ahead of Google I/O, in honor of Global Accessibility Awareness Day.

The updates were announced in a Google blog post. The first set uses Google Gemini to help people better understand what is on their screens. Last year, Google brought Gemini to TalkBack, Android's screen reader, to generate descriptions of images even when they have no alt text. Thanks to the latest update, users will soon be able to ask questions about images and receive generated responses. For instance, you could ask about the color of a car in an image that a friend sent you.

On top of that, Google Gemini can be asked questions about your whole screen, making it much faster to search through a webpage. For example, you could ask Gemini if there are any deals on a store page, or if there are any critical updates on a news site.

For those who are hard of hearing, Google is rolling out its Expressive Captions feature for its devices. This allows the captions on a video to better match the tone and cadence of the person speaking. For instance, if the speaker stretches out their vowels in excitement, the text will reflect that. Google is also adding more labels for specific sounds, making it easier to tell if someone is coughing, whistling or clearing their throat.

Chrome is also becoming more accessible

The blog post includes several new additions to Chrome on both desktops/laptops and mobile. One is Optical Character Recognition, which lets the desktop Chrome browser recognise the text in a scanned PDF. This is especially useful for people who rely on screen readers, which previously struggled with these file types.

Google is also adding page zoom functionality to Google Chrome on Android, making it much easier to change the text size to fit your preference. To use the feature, tap the three-dot menu in the top-right corner of the screen, then select the zoom preferences option to change the size of the text.

Special needs students are getting extra help

Google is building upon features like Face Control and Reading mode by allowing Chromebooks to work with the College Board's Bluebook testing app. This means that when students take the SAT and most Advanced Placement exams, they’ll have access to all of Google’s accessibility features, including the ChromeVox screen reader and Dictation, as well as the College Board's own tools.

Finally, app developers will be getting access to Google’s open-source repositories via Project Euphonia’s GitHub page. This will allow them to better train their models and develop personalized audio tools using diverse speech patterns.

Overall, these are some great-sounding additions for anyone with special needs, and will help to make some of the best Android phones and best laptops that much easier to use. We expect more features to be announced during Google I/O next week, so keep an eye out for all the news as we hear it.

Josh is a staff writer for Tom's Guide and is based in the UK. He has worked for several publications but now works primarily on mobile phones. Outside of phones, he has a passion for video games, novels, and Warhammer.
