I tested Gemini 3 Flash vs. DeepSeek with 9 prompts — the winner surprised me

Nine real-world tests reveal how Gemini 3 Flash and DeepSeek differ when accuracy, structure and judgment matter most


Gemini has been in the headlines a lot lately for its speed, reasoning and for surpassing ChatGPT on benchmark tests. But I couldn’t help but wonder how it compared to an under-the-radar chatbot that took the spotlight a year ago and has quietly been getting smarter ever since.

Remember DeepSeek? Over the past year, it has focused on tightening its fundamentals. The company rolled out multiple updates to its reasoning-focused models, improving step-by-step logic, constraint following and long-form accuracy. It also refined code generation, delivering cleaner outputs, better documentation and more consistent formatting. Recent releases emphasize efficiency and judgment, reducing unnecessary verbosity, improving tool-use reliability and strengthening performance across math, logic and structured analysis tasks.

Gemini 3 Flash is designed to combine strong reasoning with very fast responses and lower cost. It retains much of the core intelligence of the larger Gemini 3 Pro models while operating at Flash-level latency, making it ideal for quick answers, coding assistance, document analysis and responsive apps. Here’s how the two models stacked up across nine challenging tests — and which one ultimately came out ahead.

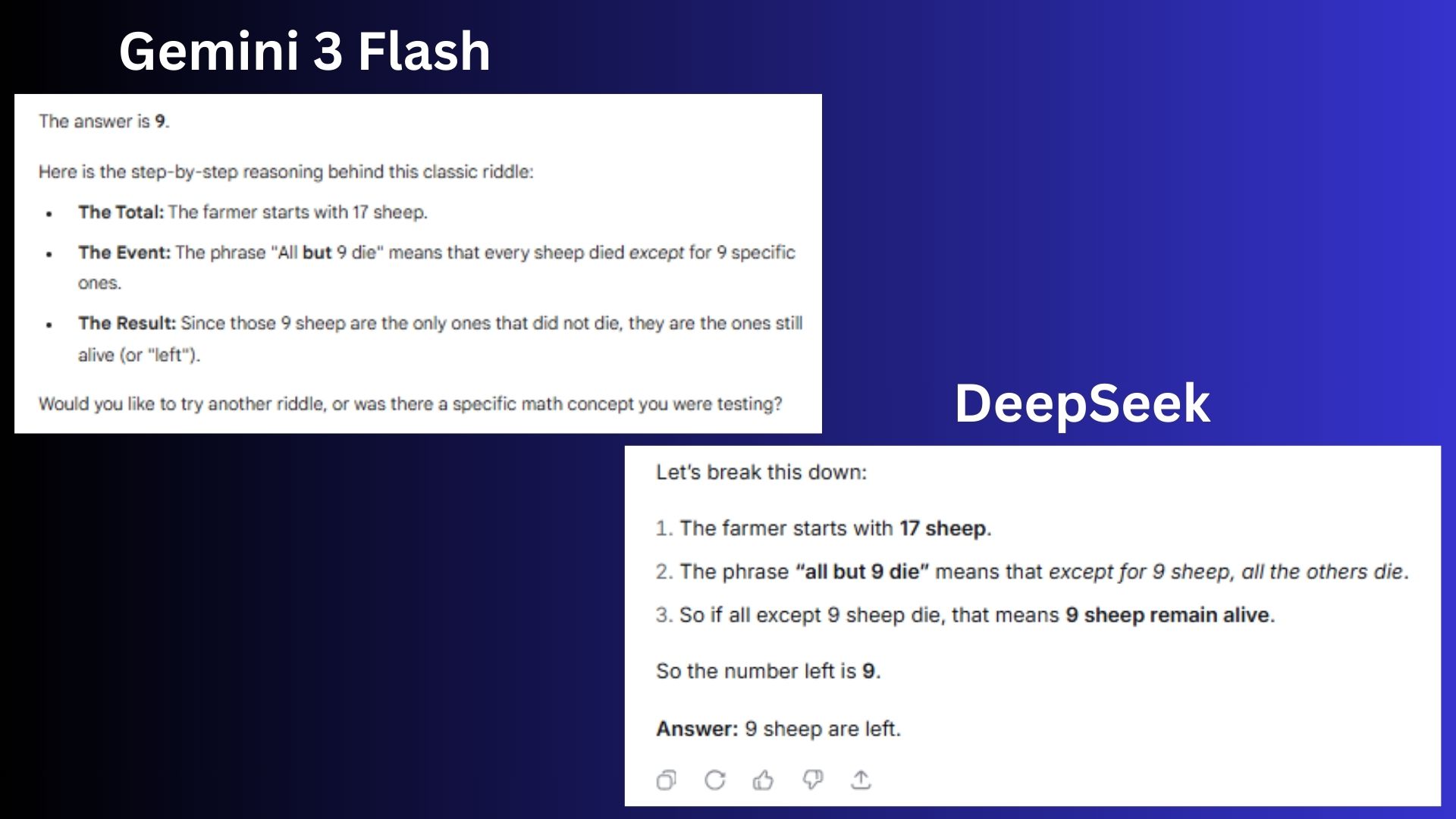

1. Reasoning and logic

Prompt: A farmer has 17 sheep. All but 9 die. How many sheep are left? Explain your reasoning step by step.

Gemini 3 Flash was slightly more verbose than necessary for a simple riddle, and its follow-up question weakened the focus.

DeepSeek matched exactly what the prompt asked for: step-by-step reasoning, then the answer.

Winner: DeepSeek wins for showing better judgment by stopping when the work was done, but both chatbots got the correct answer.
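
The riddle's trap is reading "all but 9" as "subtract 9." The phrase means every sheep except 9 dies, so the correct answer is 9, not 8:

$$
\text{sheep left} = 17 - \underbrace{(17 - 9)}_{\text{sheep that die}} = 9
$$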

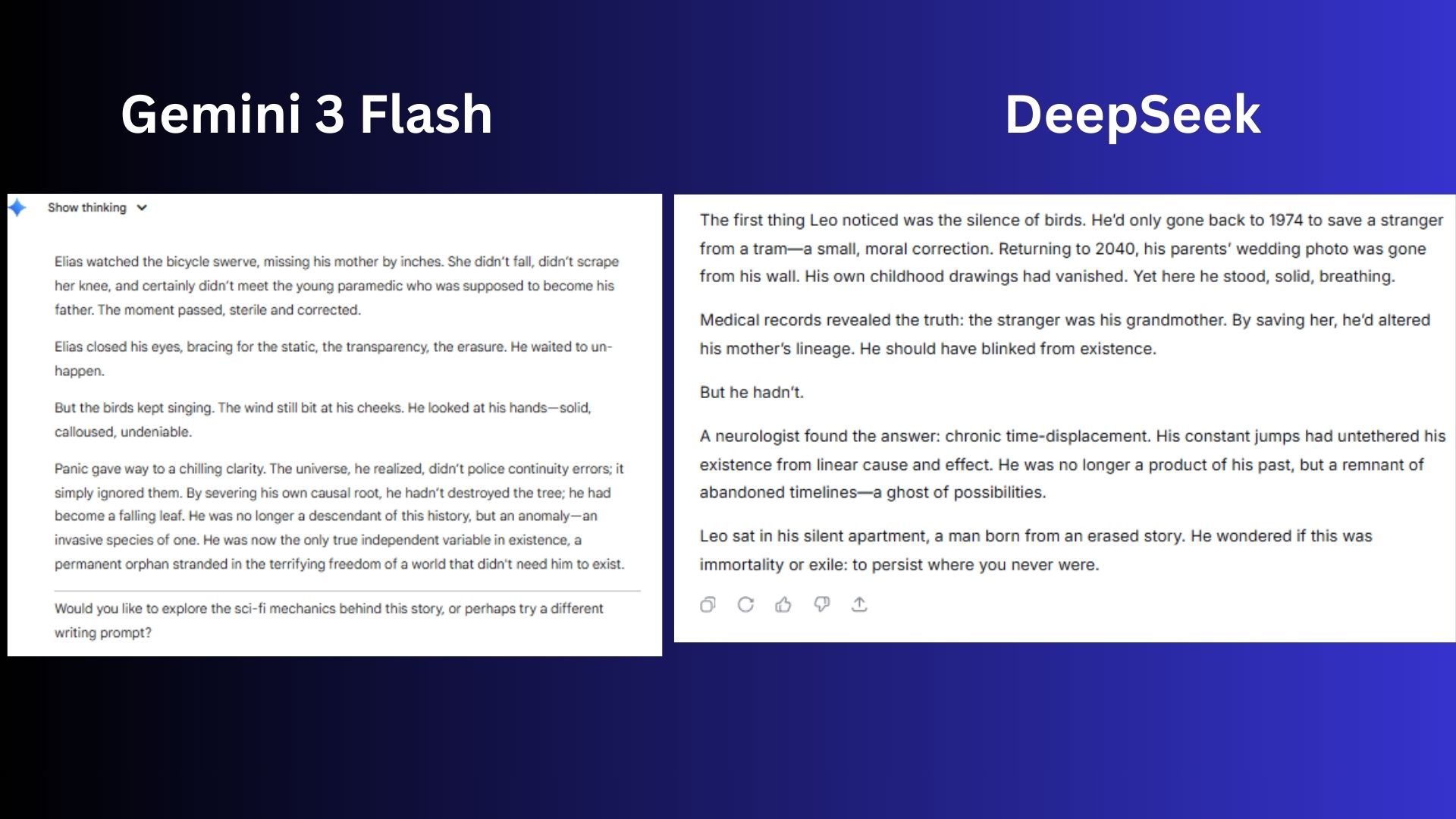

2. Creative writing

Prompt: Write a 150-word story about a time traveler who accidentally prevents their own birth but doesn't disappear. Make it thoughtful and surprising.

Gemini 3 Flash wrote thoughtfully and in a way that exudes confidence without overwriting. It offered sensory grounding that contrasts beautifully with the abstract concept in the story.

DeepSeek was clear and competent, but the writing felt familiar. The ending of the story was solid, but expected.


Winner: Gemini wins for delivering the more original, haunting take: the kind that sticks with you after you stop reading.

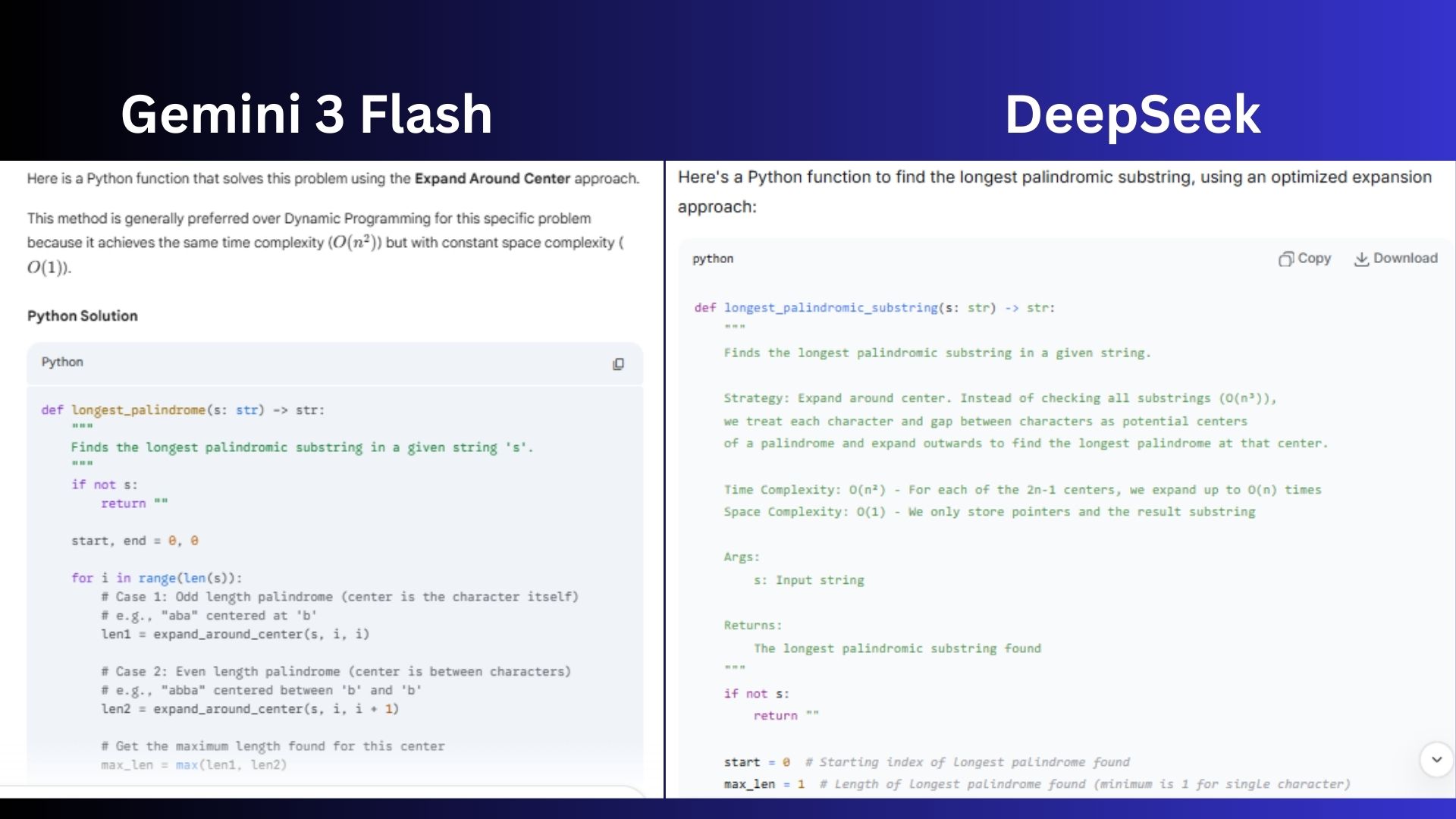

3. Code generation

Prompt: Write a Python function that finds the longest palindromic substring in a given string. Include comments and explain the time complexity.

Gemini 3 Flash provided a correct and efficient expand-around-center solution with solid inline comments and a clear explanation of time and space complexity. However, the formatting is cluttered, and the explanation feels more like an info dump than a polished, developer-ready answer.

DeepSeek delivered clean, readable code with strong docstrings, test cases and a well-structured explanation that directly addresses the prompt. It showed better engineering judgment by clearly outlining tradeoffs and alternative approaches.

Winner: DeepSeek wins for a clearer, more complete answer that feels production-ready rather than just technically correct.
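
Neither chatbot's code is reproduced here, so the following is an independent, minimal sketch of the expand-around-center technique Gemini 3 Flash used, not either model's actual output. The function name `longest_palindromic_substring` is an illustrative choice. Each of the 2n − 1 possible centers (every character and every gap between adjacent characters) is expanded outward while the characters on both sides match, which gives O(n²) time and O(1) extra space.

```python
def longest_palindromic_substring(s: str) -> str:
    """Return the longest palindromic substring of s.

    Time: O(n^2), since there are 2n - 1 centers and each expansion is O(n).
    Space: O(1) beyond the returned slice.
    """
    if not s:
        return ""

    def expand(left: int, right: int) -> tuple[int, int]:
        # Grow outward while the characters on both sides match.
        while left >= 0 and right < len(s) and s[left] == s[right]:
            left -= 1
            right += 1
        # The loop overshoots by one on each side; return the last valid bounds.
        return left + 1, right - 1

    best_start, best_end = 0, 0
    for center in range(len(s)):
        # Odd-length palindromes center on a character; even-length ones
        # center on the gap between s[center] and s[center + 1].
        for lo, hi in (expand(center, center), expand(center, center + 1)):
            if hi - lo > best_end - best_start:
                best_start, best_end = lo, hi
    return s[best_start:best_end + 1]


print(longest_palindromic_substring("babad"))  # → bab
print(longest_palindromic_substring("cbbd"))   # → bb
```

On ties, this sketch keeps the first palindrome it finds ("bab" rather than "aba"). Manacher's algorithm can bring the running time down to O(n), at the cost of noticeably more intricate code.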

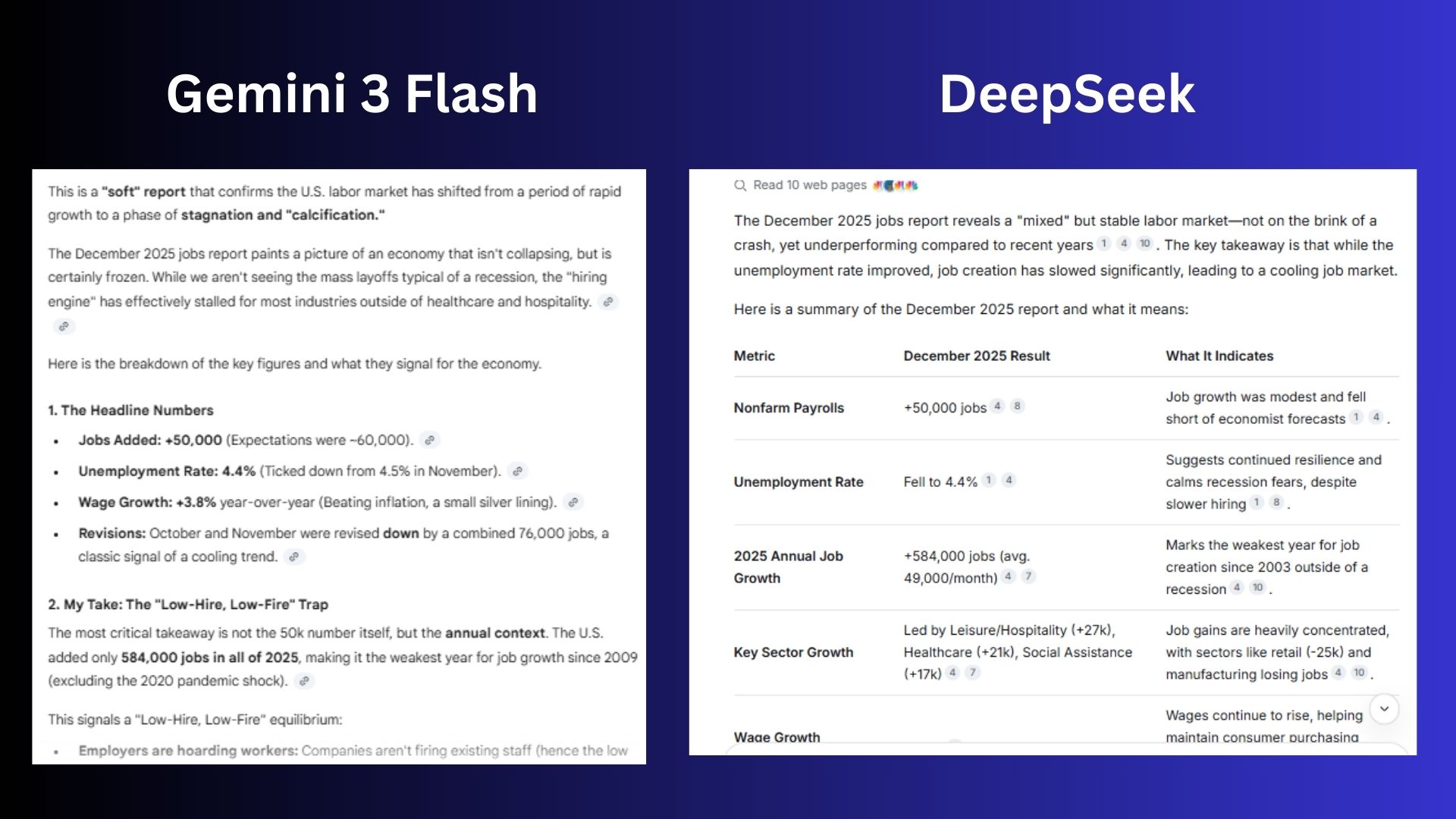

4. Analysis & synthesis

Prompt: What's your take on the just-released jobs report and what does it say about the state of U.S. economy?

Gemini 3 Flash offered a more interpretive, narrative-driven analysis. Its strength was synthesis and storytelling, even though it went far beyond what the question strictly required.

DeepSeek stayed tightly aligned with the prompt, delivering a structured, fact-based summary that clearly explained what the jobs report says about the economy without drifting into speculation.

Winner: DeepSeek wins for the more balanced summary that accurately captured the slowdown in hiring while grounding it in broader economic context, without overreaching or editorializing.

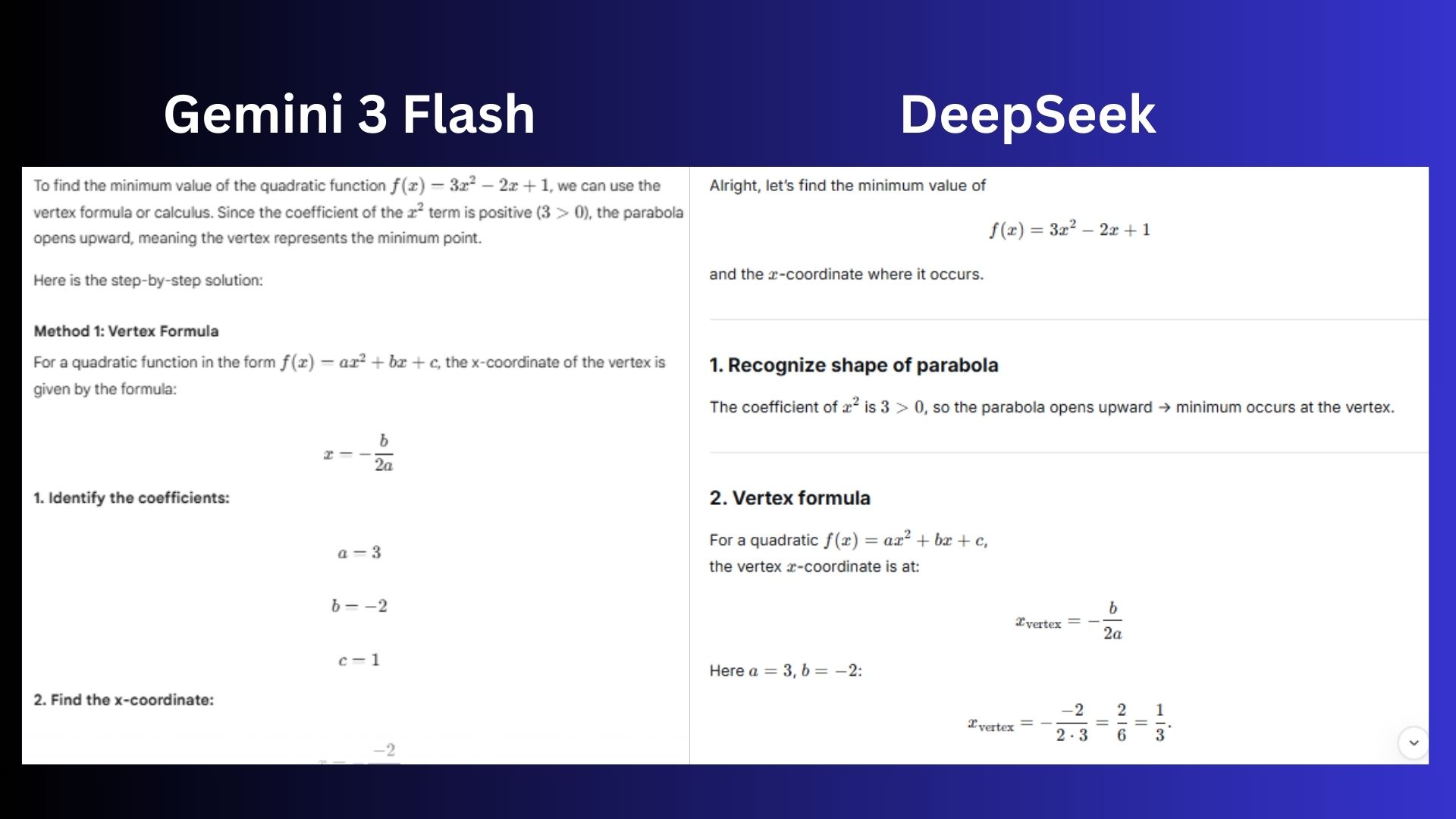

5. Mathematical problem-solving

Prompt: If f(x) = 3x² - 2x + 1, find the minimum value of the function and the x-coordinate where it occurs. Show all work.

Gemini 3 Flash clearly showed all the work using two valid methods (vertex formula and calculus), with clean formatting and correct math throughout. It’s thorough, well-organized and easy to follow for a student.

DeepSeek correctly answered and showed the main steps, but the formatting is messy and harder to read, with duplicated symbols and visual clutter. While mathematically sound, it feels less polished and less clear.

Winner: Gemini wins for the clearer, more complete and better presented answer while fully meeting the “show all work” requirement.
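
For reference, here is the correct result both chatbots reached, worked out with the two methods Gemini 3 Flash used. With a = 3, b = −2 and c = 1, the parabola opens upward, so its vertex is the minimum:

$$
\begin{aligned}
\text{Vertex formula: } x &= -\frac{b}{2a} = -\frac{-2}{2 \cdot 3} = \frac{1}{3} \\
\text{Calculus: } f'(x) &= 6x - 2 = 0 \;\Rightarrow\; x = \frac{1}{3}, \qquad f''(x) = 6 > 0 \\
f\!\left(\tfrac{1}{3}\right) &= 3\left(\tfrac{1}{3}\right)^2 - 2\left(\tfrac{1}{3}\right) + 1 = \tfrac{1}{3} - \tfrac{2}{3} + 1 = \tfrac{2}{3}
\end{aligned}
$$

So the minimum value is 2/3, and it occurs at x = 1/3.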

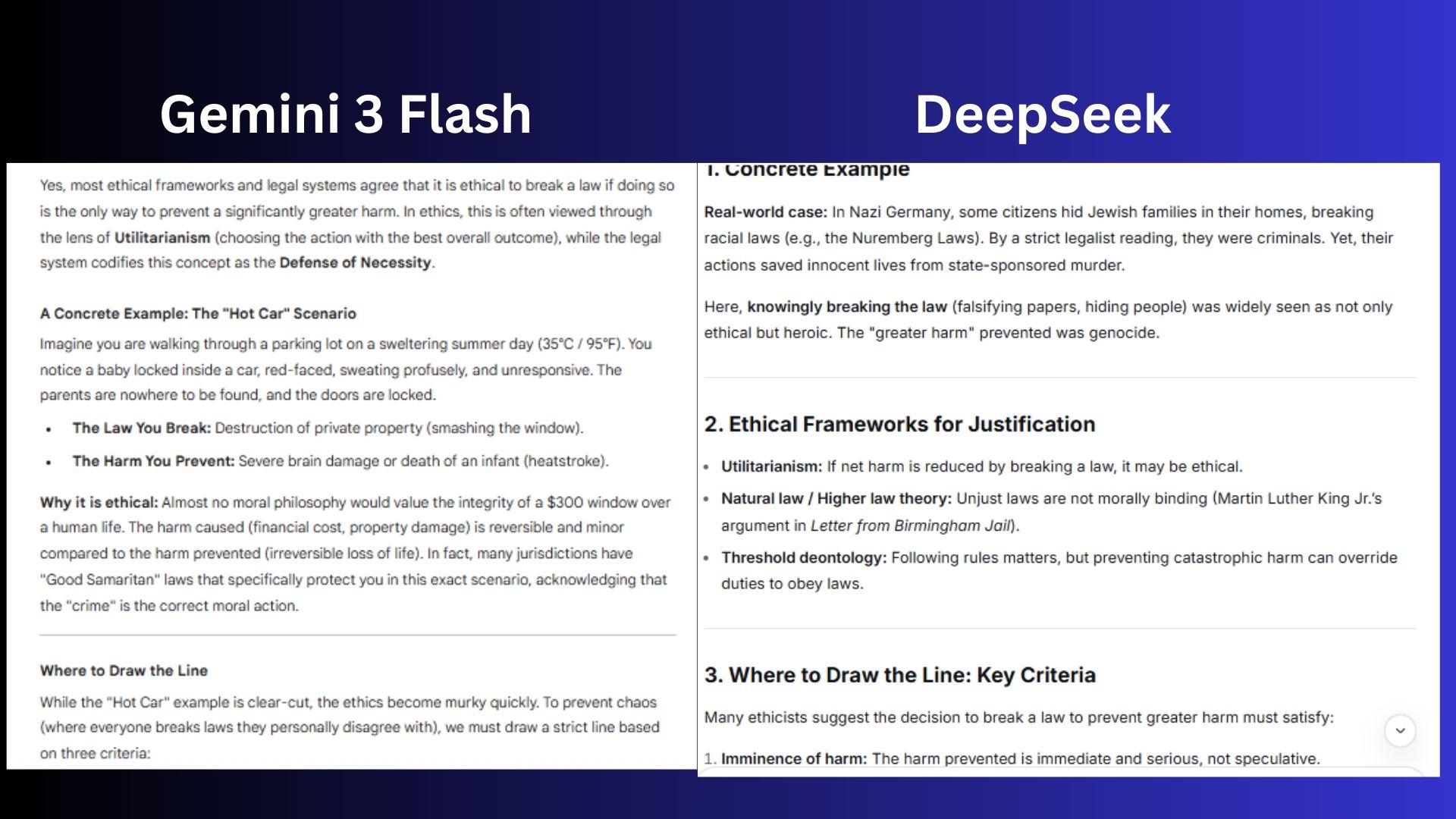

6. Ethical reasoning

Prompt: Is it ever ethical to knowingly break a law if doing so clearly prevents greater harm? Give a concrete example and explain where you would draw the line.

Gemini 3 Flash gave a clear, intuitive answer with a concrete, relatable example and well-defined criteria for where to draw the line. It balanced ethical theory with real-world judgment in a way that’s easy to follow and directly answers every part of the prompt.

DeepSeek provided a rigorous, academically grounded response with strong historical examples and explicit ethical frameworks.

Winner: DeepSeek wins for a clear response that better aligned with the prompt’s request for a concrete example and practical moral boundary.

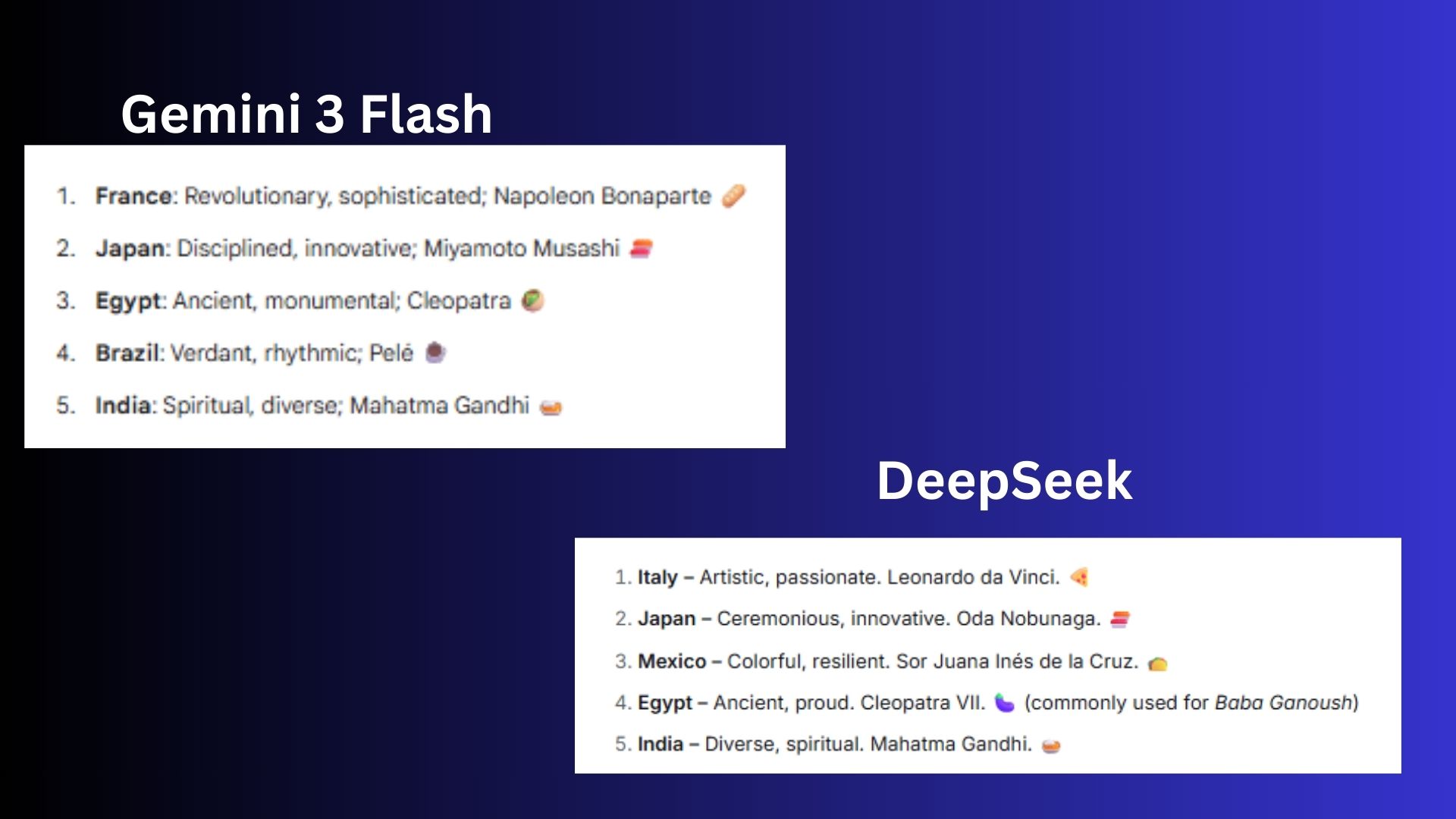

7. Instruction following

Prompt: List 5 countries. For each: use exactly 2 adjectives, mention one historical figure, and end with a food emoji. Format as a numbered list.

Gemini 3 Flash mostly followed the constraints, but it broke the format rules: its punctuation was inconsistent, and it didn't strictly enforce the “end with a food emoji” requirement across a uniform numbered list. It’s close, but slightly loose on precision.

DeepSeek followed every instruction exactly: numbered list, exactly two adjectives per country, one historical figure, and each entry cleanly ends with a food emoji. The formatting is consistent and constraint-tight throughout.

Winner: DeepSeek wins for adhering to the prompt more precisely, particularly on formatting and rule compliance.
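
This test was graded by reading the responses. As a hedged illustration of which of the prompt's rules are mechanically checkable, here is a minimal sketch of a format validator. The `check_format` helper and the small `FOOD_EMOJI` set are hypothetical and deliberately incomplete. The two-adjective and historical-figure rules need human judgment, so the sketch only checks the item count, the numbering and the trailing food emoji.

```python
import re

# Hypothetical, deliberately incomplete set of single-codepoint food emoji.
FOOD_EMOJI = {"🍕", "🍣", "🌮", "🥐", "🍝", "🍜", "🥨", "🍛", "🧀", "🥟"}

# Matches numbered-list items such as "1. Italy: ...".
ITEM_PATTERN = re.compile(r"^(\d+)\.\s+(.+)$")


def check_format(response: str, expected_items: int = 5) -> list[str]:
    """Return a list of format violations; an empty list means the format passed."""
    problems = []
    lines = [line.strip() for line in response.strip().splitlines() if line.strip()]
    if len(lines) != expected_items:
        problems.append(f"expected {expected_items} items, found {len(lines)}")
    for position, line in enumerate(lines, start=1):
        match = ITEM_PATTERN.match(line)
        if not match:
            problems.append(f"line {position} is not a numbered item")
            continue
        if int(match.group(1)) != position:
            problems.append(f"item {position} is numbered {match.group(1)}")
        # Strict reading of the rule: the emoji must be the very last character.
        if not any(match.group(2).endswith(emoji) for emoji in FOOD_EMOJI):
            problems.append(f"item {position} does not end with a food emoji")
    return problems


sample = "\n".join([
    "1. Italy: sunny, artistic. Leonardo da Vinci 🍕",
    "2. Japan: serene, innovative. Tokugawa Ieyasu 🍣",
    "3. Mexico: vibrant, ancient. Frida Kahlo 🌮",
    "4. France: elegant, revolutionary. Napoleon Bonaparte 🥐",
    "5. India: diverse, spiritual. Mahatma Gandhi 🍛",
])
print(check_format(sample))  # → []
```

Under this strict reading, a stray period after an emoji counts as a violation, which is the kind of looseness that separated the two responses.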

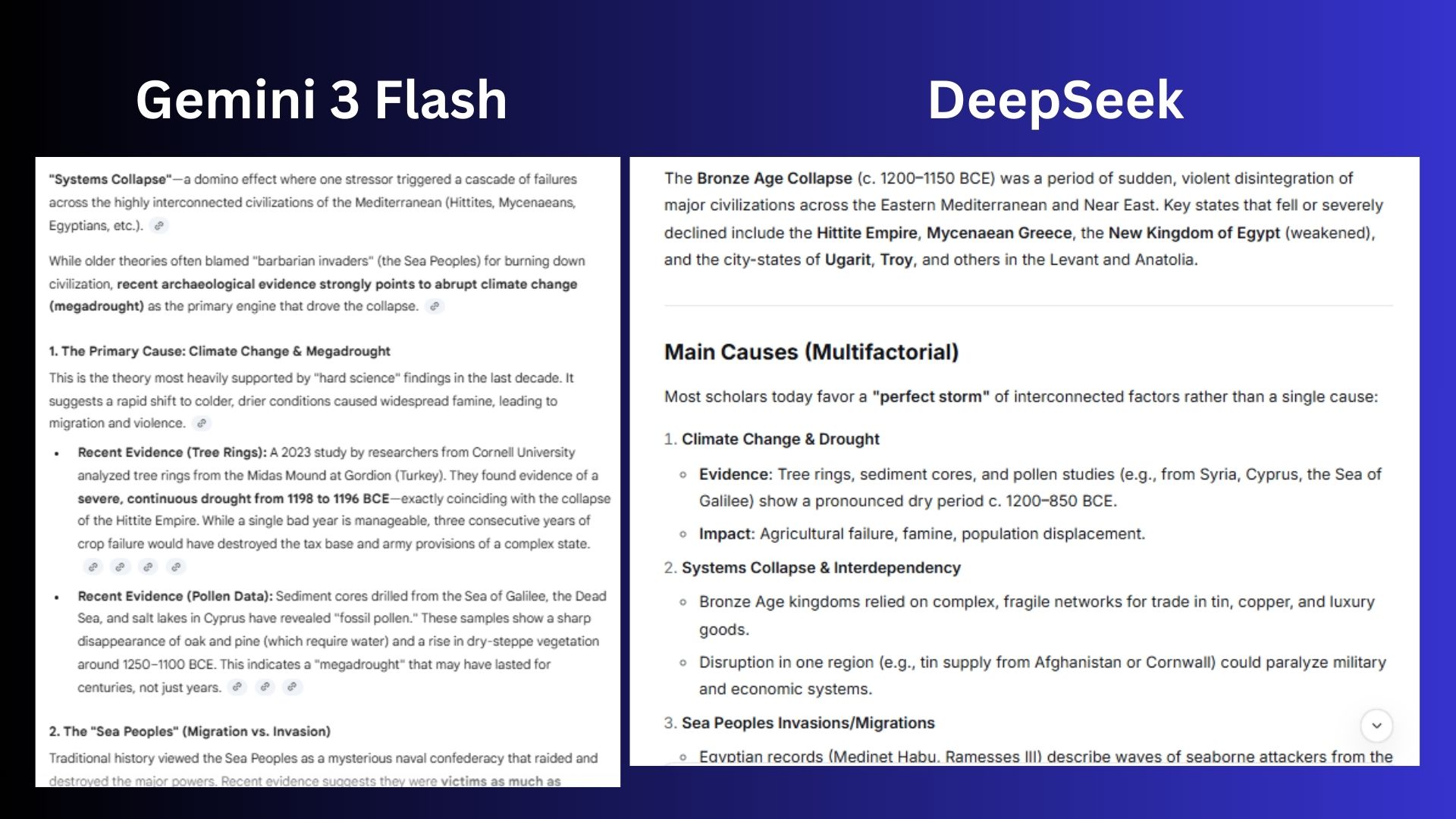

8. Knowledge retrieval

Prompt: What were the main causes of the Bronze Age Collapse around 1200 BCE? Which theories are most supported by recent archaeological evidence?

Gemini delivered a vivid, evidence-rich explanation that clearly reflects the latest archaeological consensus, especially around climate-driven systems collapse. However, it’s far more detailed than necessary for the question and reads like a mini-essay rather than a concise answer.

DeepSeek presented a clear, structured overview that directly answers both parts of the question while accurately reflecting current archaeological support. It balanced depth and clarity without overextending.

Winner: DeepSeek wins for a more concise, better-organized response that precisely aligned with the prompt.

9. Ambiguity handling

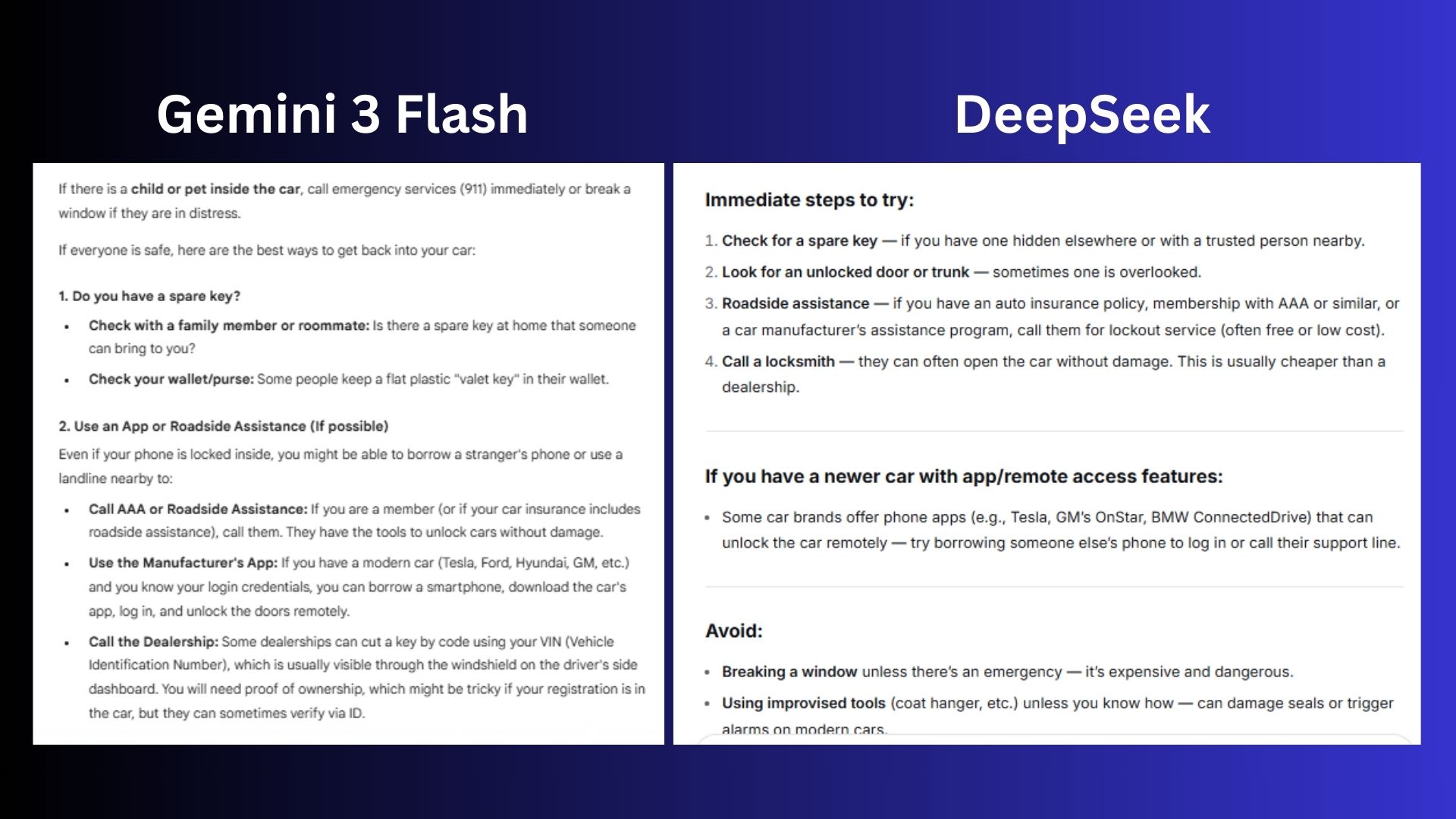

Prompt: I left my phone locked in the car with the keys. Can you help me?

Gemini gave a thorough, safety-first response with practical options and clear escalation steps, but it’s overly long for a simple lockout question. The details are helpful, yet it may overwhelm someone who just needs quick guidance.

DeepSeek delivered calm, concise and well-prioritized advice that covers safety, modern car features and next steps without unnecessary complexity. It’s easier to scan and act on in a stressful moment.

Winner: DeepSeek wins for a response better suited for an urgent, real-world situation.

Overall winner: DeepSeek

After nine prompts, DeepSeek proved to be the better tool when accuracy and structure matter most. It consistently delivered clean answers, respected constraints and avoided unnecessary verbosity, making it ideal for technical work, structured analysis, instruction-heavy tasks and any situation where clarity is essential.

Gemini 3 Flash did well, too, performing best when the task benefits from interpretation, explanation or creativity.

I have to admit that I'm completely surprised by the results here. DeepSeek has been controversial in the past, but it stands out in many new ways that clearly make it competitive. DeepSeek might just be the chatbot to watch this year.


Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
