Gemini 3 Flash launches worldwide — here’s everything to know about Google's smartest model yet

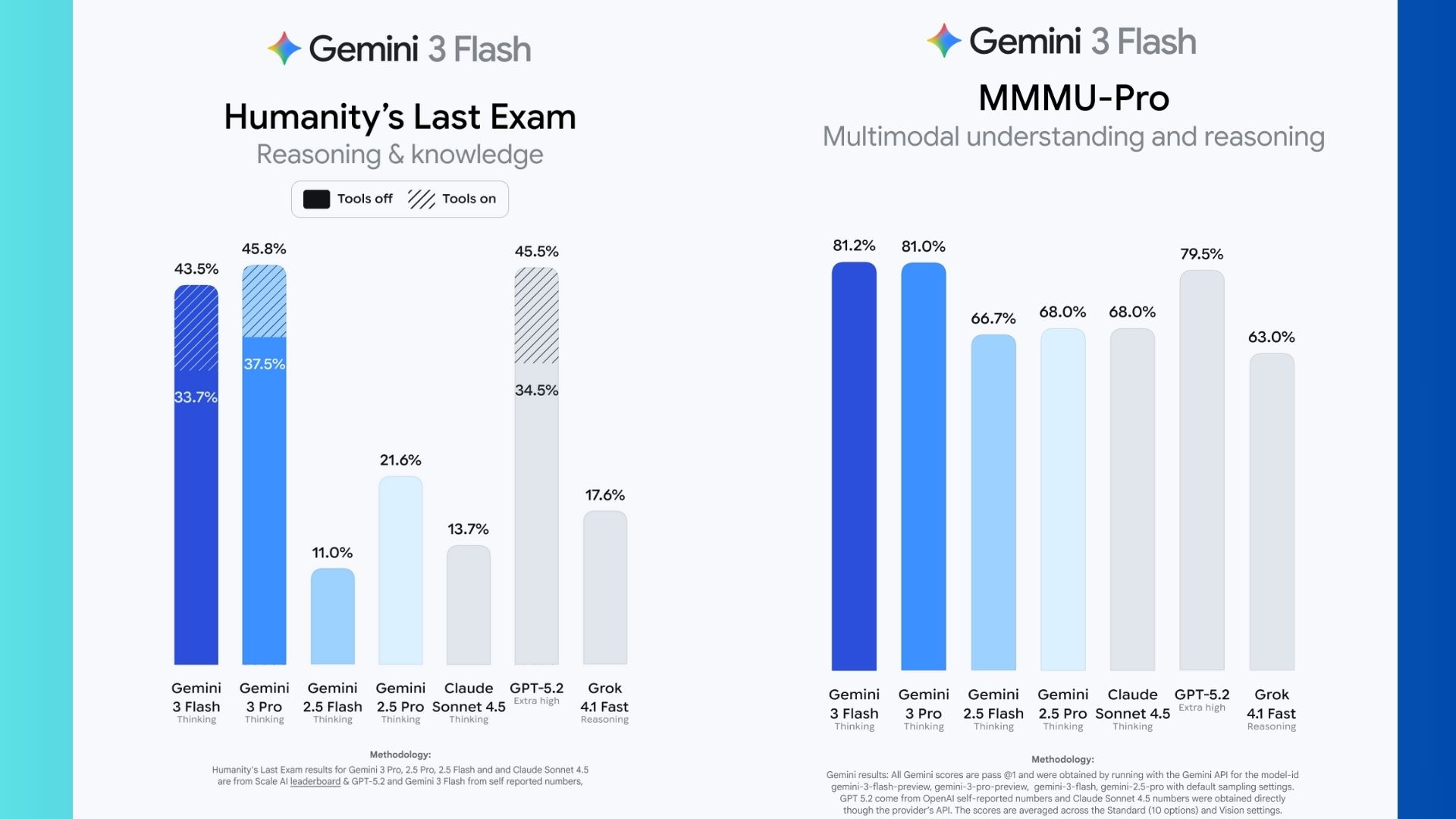

Other models don't even come close in benchmark tests

Google’s Gemini just got a major speed upgrade. Gemini 3 Flash, the company’s fastest AI model yet, rolled out worldwide today (December 17). But Google is betting that raw speed isn’t enough anymore — modern AI has to think well and respond instantly.

As the newest model in the Gemini 3 family, Flash is designed to blur the line between lightweight assistants and high-end reasoning models. It is Google’s attempt to make advanced AI feel effortless and, judging by the benchmarks Google has shared, it could keep OpenAI in "Code Red" mode.

Here’s what Gemini 3 Flash actually does, why it’s a bigger deal than it sounds and why it’s worth downloading the app on your iPhone to get the most out of it.

What is Gemini 3 Flash?

Gemini 3 Flash is a new AI model built to deliver high-level reasoning at Flash-tier speeds. Google describes it as combining “frontier intelligence” with low latency, making it suitable for real-time use across apps, tools and developer workflows.

This matters because users traditionally had to choose between fast models that respond quickly but reason shallowly and smart models that take longer to respond. Gemini 3 Flash aims to eliminate that trade-off.

Previous “Flash” models prioritized speed over depth. Gemini 3 Flash changes that: according to Google, the model delivers Pro-level reasoning performance with faster inference times and lower compute costs. That makes it more efficient than earlier Pro models and significantly more capable than earlier Flash versions.

In practical terms, users should get faster answers to complex questions, better step-by-step reasoning and more consistent performance across longer prompts.


The difference is most noticeable in tasks like planning, summarization and coding, where slow responses usually hold things up.

Where you’ll actually see Gemini 3 Flash

Like other Gemini 3 models, Flash is natively multimodal. It can work with text, images, audio and video.

That means you can upload a photo, ask a question about it and follow up with a related task — without switching tools or models. Google is positioning Gemini 3 Flash as an everyday assistant that understands context across formats, not just words on a screen.

And here's the kicker: you may already be using the latest Gemini Flash model without realizing it. Google says Gemini 3 Flash is rolling out in the Gemini app, where it becomes the default model for many users, and in AI Mode in Google Search, where it powers faster, more nuanced answers. It's also available to developers through the Gemini API, AI Studio, Vertex AI, Android Studio and the Gemini CLI.
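For developers curious what the "upload a photo, ask a question" flow might look like through the Gemini API, here is a minimal sketch in Python. It only builds the request for the API's public REST `generateContent` endpoint and does not send anything. The model ID `gemini-3-flash-preview`, the `build_request` helper and the `YOUR_API_KEY` placeholder are assumptions for illustration, not details from Google's announcement, so check the current model list before relying on them.

```python
import base64
import json

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumed model ID, used here only as a placeholder; verify the real one.
MODEL_ID = "gemini-3-flash-preview"


def build_request(question: str, image_bytes: bytes, mime_type: str = "image/png"):
    """Return (url, headers, body) for a generateContent call. Nothing is sent."""
    url = f"{API_ROOT}/models/{MODEL_ID}:generateContent"
    headers = {
        "Content-Type": "application/json",
        "x-goog-api-key": "YOUR_API_KEY",  # placeholder, not a real key
    }
    payload = {
        "contents": [{
            "role": "user",
            "parts": [
                # The image travels inline as base64 next to the text question.
                {"inline_data": {
                    "mime_type": mime_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
                {"text": question},
            ],
        }]
    }
    return url, headers, json.dumps(payload)
```

The image and the question sit in a single request as two parts of one message, which is how the "no switching tools or models" idea shows up at the API level. To actually send it, you would POST the body to the returned URL with any HTTP client and a valid API key.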

Bottom line

This model is clearly designed for three groups: everyday users who want fast, thoughtful answers; developers building interactive, agent-style apps; and power users who need quick iteration without sacrificing reasoning quality.

Gemini 3 Flash is also especially well-suited for real-time workflows such as live coding assistance, document analysis or multimodal Q&A — where slow responses break momentum.

If it works as promised, this model could become the default experience for millions of users.



Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
