9 things to try first with your Ray-Ban Meta smart glasses

Follow these tips to familiarize yourself with your brand new gadget

Unboxing your Ray-Ban Meta smart glasses is only the beginning. Whether you picked up the classic Wayfarers or the newer Headliners, you’re holding one of the most versatile (and surprisingly stylish) pieces of AI tech available today. And, with the recent update, there's never been a better time to start using them.

So much more than stylish sunglasses with speakers, these voice-controlled, camera-equipped glasses powered by Meta AI can describe your surroundings, translate languages and capture life as it happens.

After using my Ray-Ban Meta glasses daily for more than a year, here are my suggestions for the first 9 things you should try to unlock their full potential. Once you download the accompanying Meta AI app, these tips work for both the first and second generations of these smart glasses.

1. Customize your settings in the Meta app

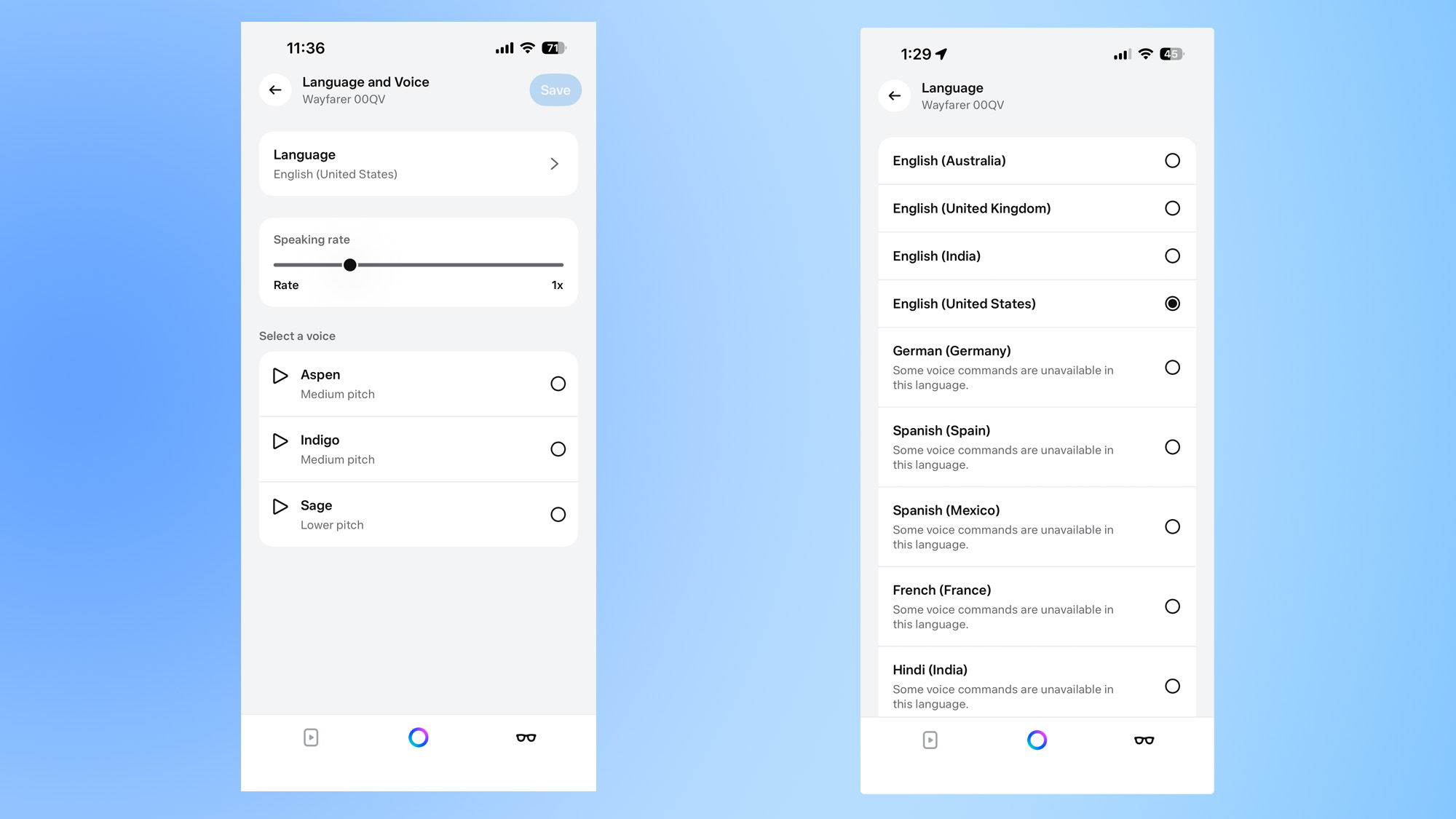

I know you're itching to get out there and start seeing the world with the power of AI, but hold on just a second. Before you go full smart-glasses power user, open the Meta AI mobile app and set:

- Video length defaults

- Wake word preferences

- AI sensitivity

- Shortcuts and gestures

This makes the Ray-Ban Meta experience more seamless and tailored to your lifestyle. I set mine to auto-save the last 60 seconds of video just in case something unexpected happens, and it’s already come in handy, like the time my daughter nailed her first back handspring. I captured it without even trying!

2. Take hands-free photos and videos

I'll start with my absolute favorite Ray-Ban Meta feature, which is taking hands-free photos and videos. The wide-angle lens is perfect for POV shots during hikes, travel or even walking the dog.

Just look and capture. Press the capture button on the right temple to snap a photo, or hold it to record up to three minutes of video. To record clips that long, be sure you've enabled extended capture in settings.

But my preference is to simply say:

“Hey Meta, take a photo”

“Hey Meta, take a video”

3. Try these voice commands first

When I first tried Ray-Ban Meta smart glasses, I wasn't exactly sure what they could do. If you're in this situation, the easiest way to get started using Meta AI is to try saying the following:

“Hey Meta, what’s the weather?”

“Hey Meta, play music”

“Hey Meta, what am I looking at?”

For the first question, Meta AI will bring up the weather in your location, while the second will use the music service you typically use. In my case, it's Apple Music and my glasses started playing the last song I had in my queue.

Be careful with the last prompt: Meta AI told me my desk was messy. At least it's honest.

Best of all, the open-ear speakers give you clear audio without blocking the world around you. I use them all the time when I'm out for a run.

4. Use AI vision to identify what you’re looking at

Here’s where things get magical. With Vision Mode enabled, say:

“Hey Meta, tell me about this”

The glasses will analyze your surroundings and describe what they see, such as buildings, plants, objects, signs or even food.

I’ve used this to identify flowers on walks, get info on artwork at museums and even decode confusing packaging at the grocery store.

5. Translate conversations in real time

Going abroad? Meeting someone who speaks another language? Say:

“Hey Meta, start live translation”

Meta AI can interpret speech and translate it live, and it’s surprisingly good in noisy environments such as a commuter train or a busy shopping mall. Just make sure your language packs are downloaded ahead of time in the Meta AI app.

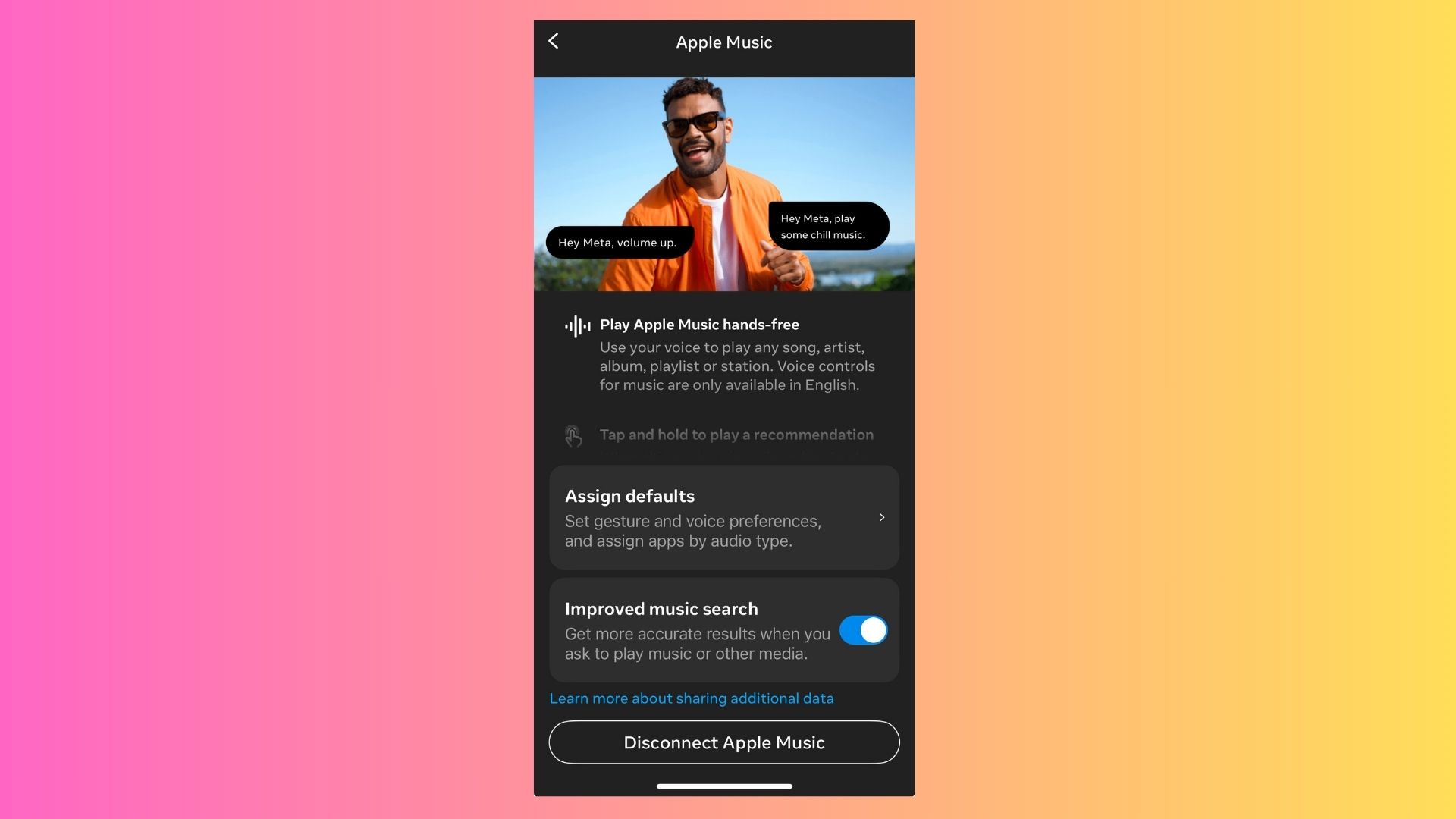

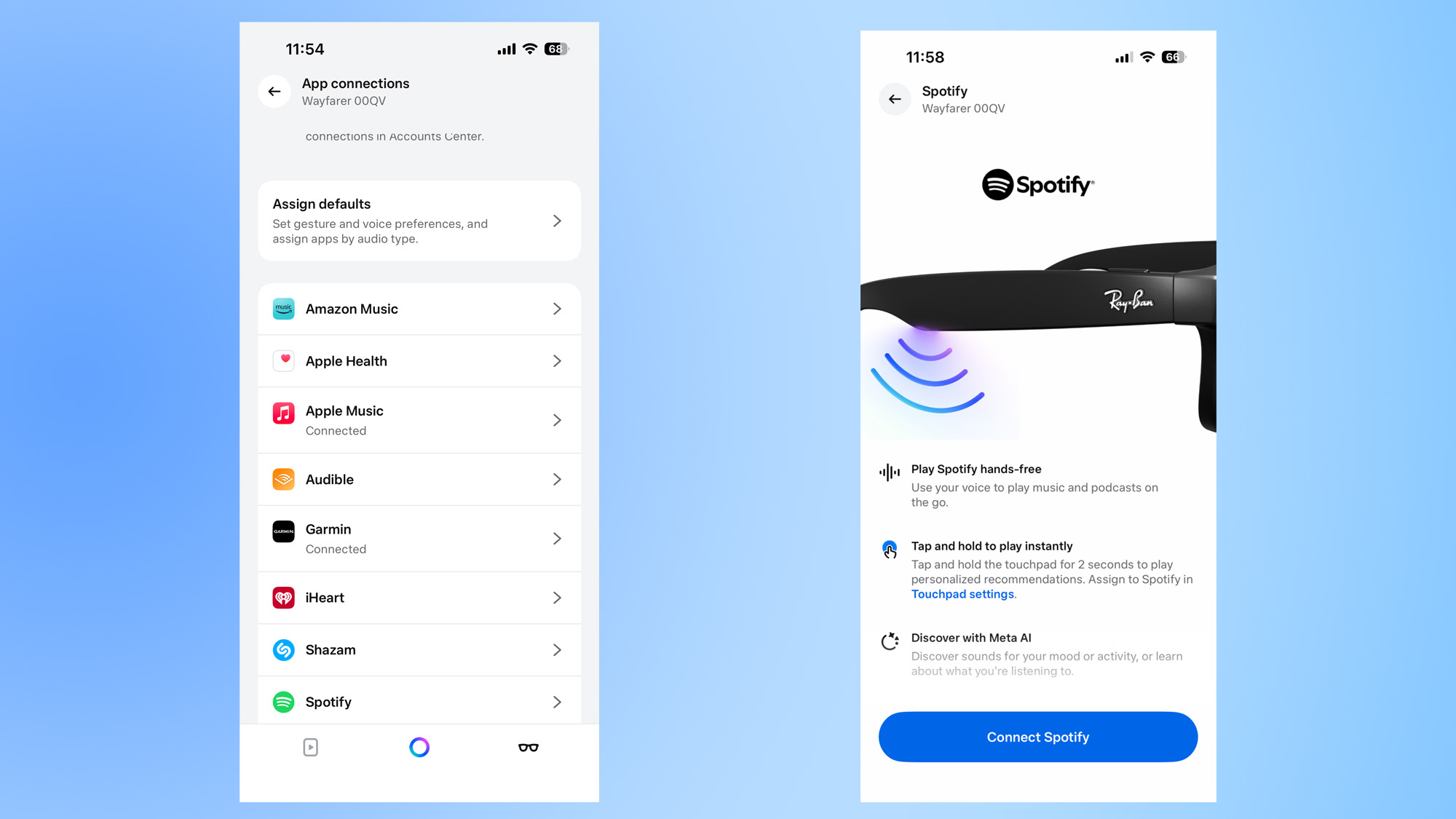

6. Play music that fits your vibe

The new Spotify integration lets your glasses act as a mood-based DJ. Say:

“Hey Meta, play music for this view”

Meta AI will pick songs based on your environment. Whether you’re walking through a park or sitting by a café window, it’ll set the tone.

Bonus: You can control playback hands-free — pause, skip or turn the volume up without reaching for your phone.

As mentioned, I use Apple Music, and that service integrates too, just without some of the extra features available with Spotify. It's safe to say that if you're an Apple Music user, you'll get much the same listening experience.

7. Ask Meta for smart suggestions

Because Meta AI is context aware, it can see and hear your environment before responding. For that reason, you'll want to try saying things like:

“Hey Meta, what’s this building?”

“Hey Meta, recommend a lunch spot nearby”

“Hey Meta, help me plan dinner”

The results are tailored and conversational, not to mention surprisingly helpful when you’re on the go.

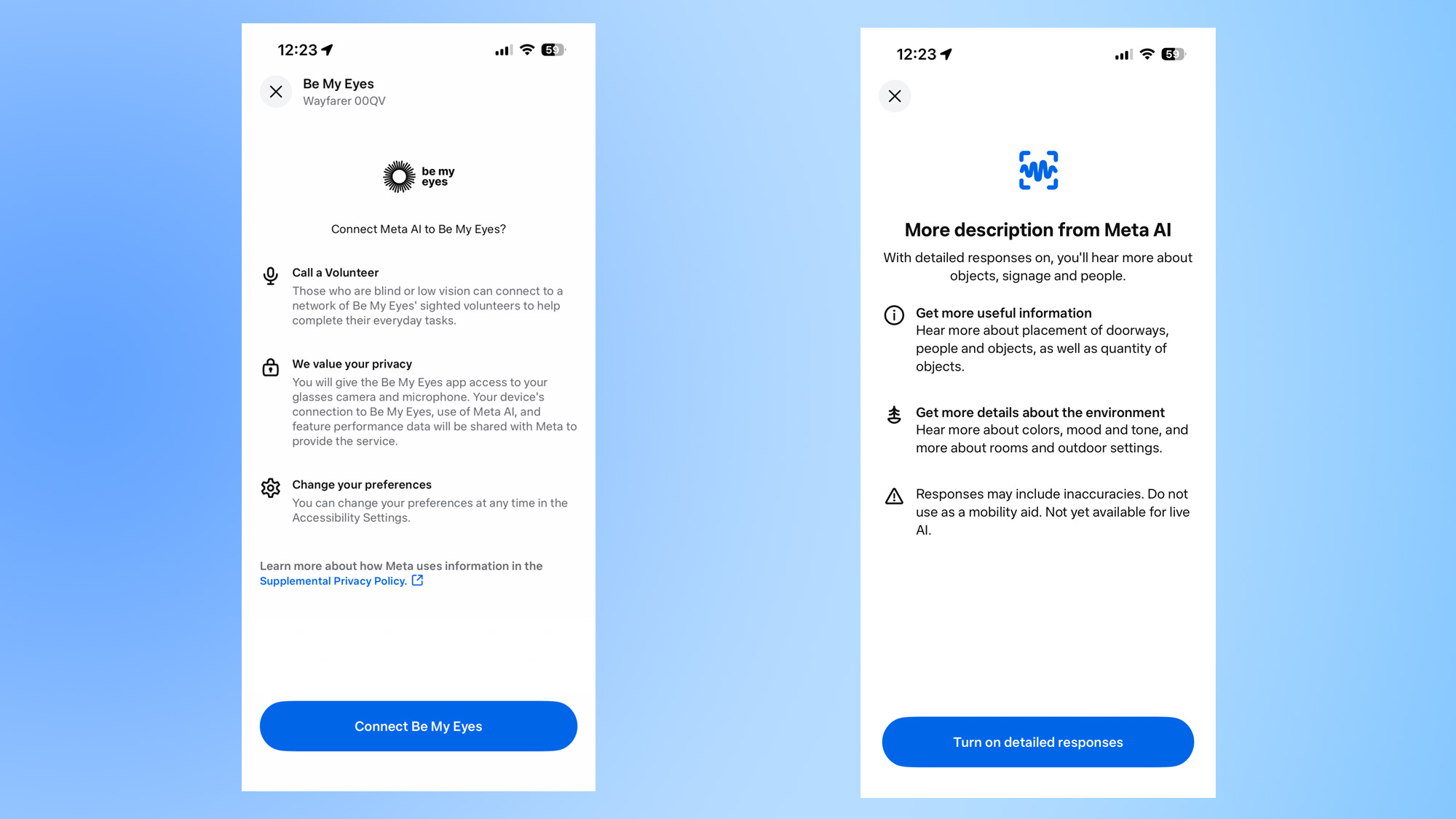

8. Use them for visual assistance

If you've ever been at the grocery store and tried to read the tiny expiration dates, you're going to love this trick. If you need help reading small text or identifying something tricky, just say:

“Hey Meta, read this to me”

The next thing you know, you'll get the assistance you need in real time. It's perfect for accessibility, seniors or just decoding the ingredients on that sauce label.

9. Share your POV to social media

You're not going to believe this, but I never went live on Instagram until I had Ray-Ban Meta glasses. They just make it so much easier to capture moments, review and trim clips, and export them to Instagram, Reels or TikTok. Right from your glasses, you can apply filters or overlay captions.

It’s a lot like having a head-mounted content camera, but minus the bulky GoPro look. Just remember to center your gaze for the best framing.

Bottom line

I think you're going to love your Ray-Ban Meta smart glasses — especially once you know how to get the most out of them. Start with these 9 tricks, and you’ll quickly see how smart (and surprisingly human) these glasses can be.

Make sure to keep the software updated, too — Meta regularly rolls out new features like smarter music controls and improved visual recognition.

With AI built in, a stealthy camera and all-day battery life, these glasses offer a whole new way to interact with your surroundings. They’ve become one of the few wearables I actually want to use every day.

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
