"We're building chips that think like the brain" — I got a front row seat to see how neuromorphic computing will transform your next smart device

“Think of it as AI that sleeps until it needs to wake up”

For all the power of today’s “smart” devices, they’re not that good at working smarter rather than harder. AI that is constantly connected to the cloud, and chips that keep processing tasks even when the device is asleep, lead to high power consumption, limited privacy, and a constant need for connectivity.

Neuromorphic computing offers a radical alternative, but what is it? I understand that for many of you reading this, it could be the first time you’ve heard this phrase. Simply put, it’s a whole new breed of computer chip that thinks and functions like a human brain — spiking in activity only when needed.

By taking inspiration from the way the brain works, these chips let devices interpret the world around them in real time and complete key tasks while using a fraction of the power, without needing to send data to the cloud.

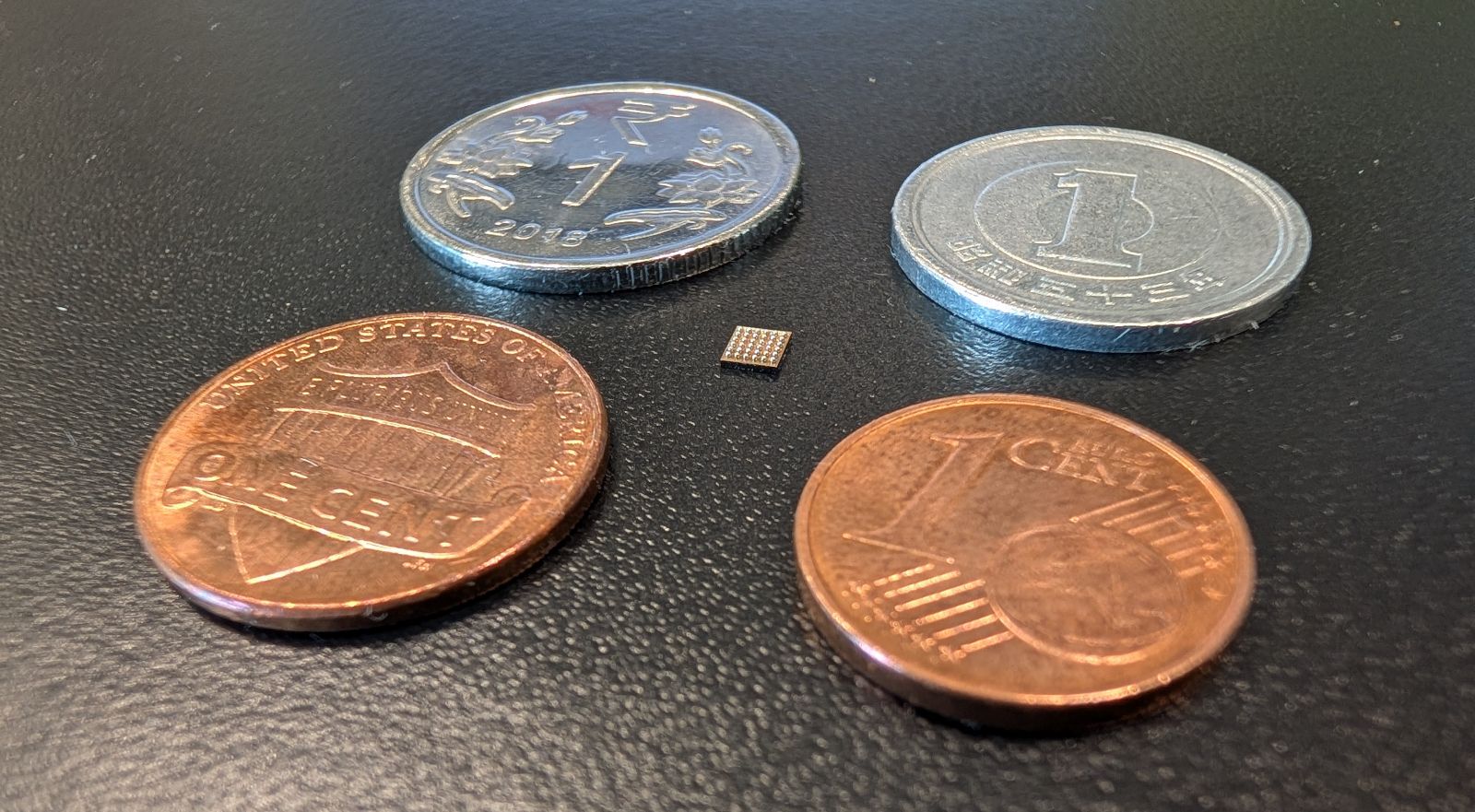

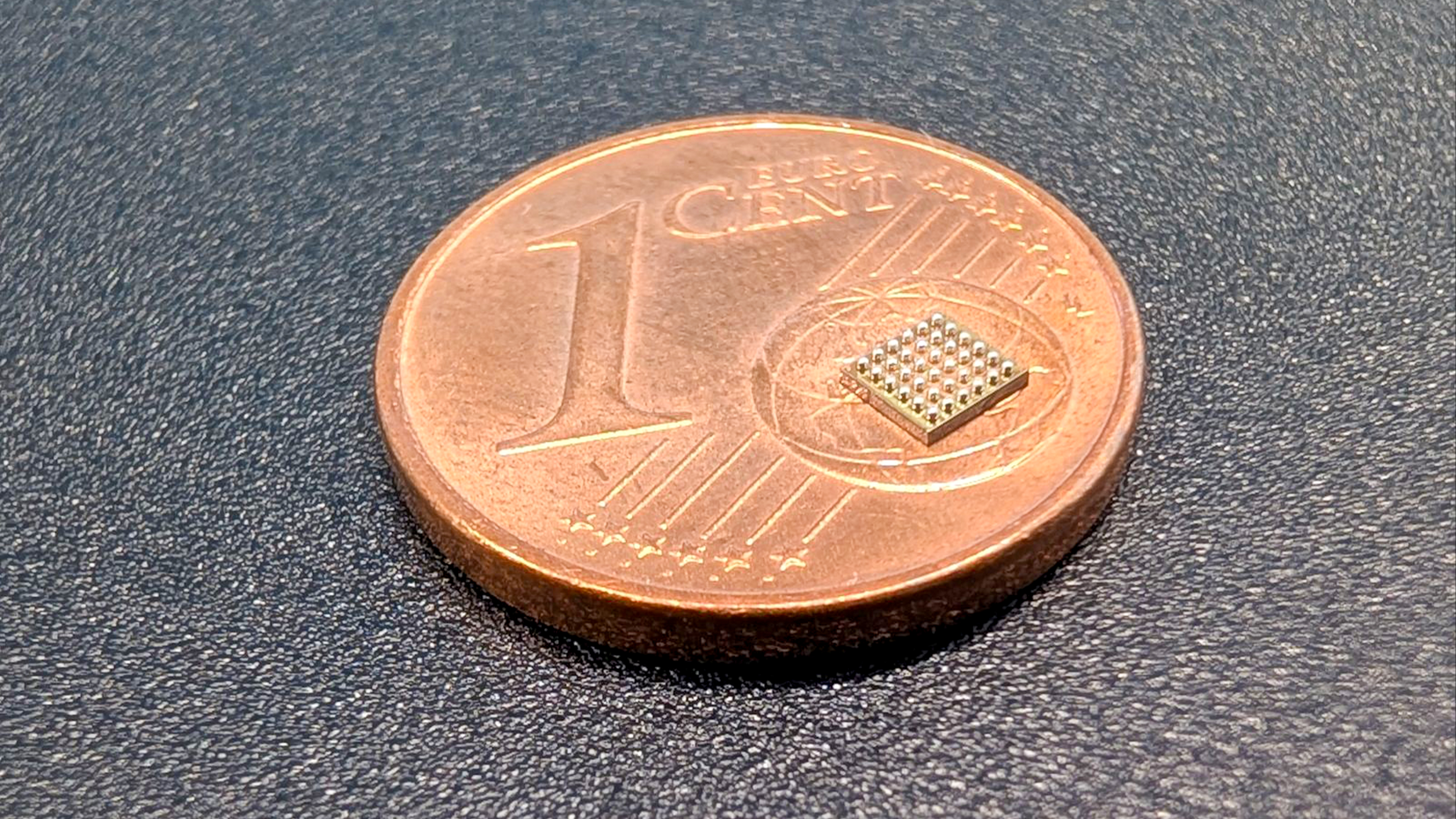

One of the startups leading this brain-inspired shift is Innatera, whose new Pulsar chip is one of the first neuromorphic controllers built for real-world use. The chip, which is 30-40x smaller than a one-cent piece, aims to bring smarter sensing and longer battery life to everything from smart doorbells to fitness trackers.

But that’s just the beginning. I envision a time when neuromorphic chips could work alongside beefier chips like the ones you'd find in the best laptops and smartphones for fast, efficient, ultra-low-power intelligence. Think of it as the next-generation NPU or Neural Engine.

To learn more about what neuromorphic computing actually is, how it works, and what it could mean for your next smart device, I spoke with Sumeet Kumar, co-founder and CEO of Innatera.

For readers who’ve never heard of neuromorphic computing — what is it, and how is it different from the AI chips in our phones or smart home devices today?

Neuromorphic computing is a class of AI inspired by the way the human brain processes information. Instead of continuously processing input data and draining power like traditional AI chips, neuromorphic processors use Spiking Neural Networks (SNNs) that mimic the way biological brains work — continuously receiving sensory data, but only spending energy on processing the parts of the data that are relevant.

SNNs have an in-built notion of time, which makes them good at finding both spatial and temporal patterns within data quickly and without the need for large, complicated neural networks.

Neuromorphic processors like Innatera’s Pulsar use an array of energy-efficient silicon neurons, interconnected through programmable synapses to run SNNs in hardware. The neurons and synapses operate in an asynchronous manner from one another, consuming a tiny amount of energy for each operation.
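To make the idea of a spiking neuron with a built-in sense of time more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of spiking behavior. It is an illustration only: the function name `lif_spikes` and the `leak` and `threshold` values are assumptions chosen for the example, and it does not describe how Pulsar's silicon neurons are actually implemented.

```python
def lif_spikes(inputs, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 values, with 1 marking the steps where it spiked.
    The leak and threshold values are illustrative assumptions.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Part of the stored potential leaks away, then new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


quiet = [0.0, 0.05, 0.0, 0.05, 0.0]   # weak background input
burst = [0.0, 0.6, 0.6, 0.0, 0.0]     # two strong inputs close together
spread = [0.6, 0.0, 0.0, 0.0, 0.6]    # the same two inputs, far apart

print(lif_spikes(quiet))
print(lif_spikes(burst))
print(lif_spikes(spread))
```

In this toy model the neuron stays silent on weak input and fires only when strong inputs arrive close together in time. The same two inputs spread further apart leak away before they can add up, which is one simple way timing can carry information.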

Importantly, they operate in an event-driven manner, i.e., they compute only when presented with relevant data. This allows Pulsar to process sensor data quickly, with very little power, completely local to the device. These capabilities are pivotal for smart sensing applications, especially in smart home devices.

Take, for example, a smart doorbell. Traditionally, the camera would send a notification every single time there is movement on your front porch — regardless of whether it’s a human presence, a leaf in the wind, or a bird.

By integrating a neuromorphic processor in this device, you’d be able to not only continuously sense the surroundings without turning on the camera through a technology like radar, but you could also interpret the data from said radar to only turn on the camera and notify you when there is a human presence detected. All of this with very little energy.
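The doorbell flow described above can be sketched as a simple gating loop. This is a hypothetical illustration: `RadarEvent`, `should_wake_camera`, `run_doorbell`, and the 0.8 confidence cutoff are names and values invented for the example, not part of any Innatera product or API. The classification step is assumed to have already happened on the low-power chip.

```python
from dataclasses import dataclass


@dataclass
class RadarEvent:
    """Hypothetical output of an always-on, low-power radar classifier."""
    label: str         # e.g. "human", "leaf", "bird"
    confidence: float  # 0.0 to 1.0


def should_wake_camera(event, min_confidence=0.8):
    # Only a confident human detection justifies powering up the camera.
    return event.label == "human" and event.confidence >= min_confidence


def run_doorbell(events):
    """Return how many times the camera is woken and the user notified."""
    notifications = 0
    for event in events:
        if should_wake_camera(event):
            # In a real device, this is where the camera and radio would
            # be switched on; everything else stays asleep.
            notifications += 1
    return notifications


events = [
    RadarEvent("leaf", 0.95),
    RadarEvent("bird", 0.90),
    RadarEvent("human", 0.92),
    RadarEvent("human", 0.40),  # too uncertain to act on
]
print(run_doorbell(events))
```

Of the four events here, only the confident human detection wakes the camera. The design point is that the power-hungry parts sit behind a cheap, always-on filter.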

Innatera’s approach will drastically reduce energy use for real-time processing and make possible a new breed of sensing applications even in small battery-powered devices, all without relying on power-hungry application processors or the cloud. Over time, these processors can even adapt and learn on the fly to create systems that are more intelligent and responsive than ever before.

What kinds of everyday devices could benefit most from neuromorphic chips — and how would that actually change a user’s experience?

Devices that deploy sensors in always-on contexts stand to benefit the most from neuromorphic technologies. These are most prevalent in consumer electronics, such as smart home devices and wearables, as well as in industrial IoT and building automation. Neuromorphic technologies will allow more intelligent application of sensors across use-cases.

For a user, this will translate to automation that is more responsive and reliable, that doesn’t come with a privacy risk of user data being sent to the cloud, and importantly, doesn’t drain the device battery.

For businesses, neuromorphic technology will enable high-performance intelligence with a tiny bill of materials, ultra-low power consumption that enables it to be integrated anywhere, and programmability that allows the intelligence to be adapted to a diverse range of application use-cases. This will effectively translate to smarter products with robust always-on functionalities, with a fast time-to-market.

Imagine a fitness wearable that tracks your gestures and recognizes your voice instantly without draining its battery in a day. Or a smart home sensor that detects movement and sound changes in real time, without false alarms, adjusting lighting, temperature, and even pausing your favorite show on TV while you go to answer your front door for a delivery.

Neuromorphic chips, such as Innatera’s new microcontroller Pulsar, make this possible by enabling always-on sensing at a fraction of the power traditional processors need, delivering longer battery life, near-instant responsiveness, and room for richer features in smaller and sleeker devices.

You’ve said neuromorphic processors are modeled after the human brain. What does that mean in practice, and what are the real advantages of that approach?

In practice, it means the processor uses spiking neurons and synapses to mimic how the brain processes information – operating sparsely and reacting only to significant events.

For example, Innatera’s Pulsar chip delivers up to 500x lower energy consumption and 100x lower latency than traditional AI processors.

The best smart doorbells you can buy can consume roughly 6 watts of power when streaming video and detecting motion, on top of the AI inference and offloading to the cloud to decide whether to notify you about the motion. Using the cloud also adds latency to the decision-making. Swapping the traditional silicon for a neuromorphic chip will help reduce AI energy cost by over 100x.

For many applications, this means achieving sub-1 mW power dissipation and millisecond-scale latencies on AI tasks, with accuracies above 90%. By enabling always-on intelligence without sacrificing power or responsiveness, this brain-inspired efficiency makes real-time, on-device AI both practical and transformative for wearables, smart sensors, and other ultra-low-power devices where every microwatt counts.
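As a rough back-of-the-envelope illustration of what a sub-1 mW budget could mean for battery life, the sketch below compares two always-on AI loads. The 1 mW figure and the 100x ratio come from the interview. The 1 Wh battery capacity is an assumption picked for round numbers, and the calculation ignores sensors, radios, and everything else in a real device.

```python
def battery_life_hours(capacity_wh, load_w):
    """Hours a battery lasts under a constant load (idealized)."""
    return capacity_wh / load_w


CAPACITY_WH = 1.0              # assumption: a small 1 Wh battery
NEUROMORPHIC_LOAD_W = 0.001    # 1 mW, the upper end of "sub-1 mW"
# Assumption: a conventional always-on AI load 100x higher, per the
# "over 100x" energy claim in the interview.
CONVENTIONAL_LOAD_W = NEUROMORPHIC_LOAD_W * 100

neuro_hours = battery_life_hours(CAPACITY_WH, NEUROMORPHIC_LOAD_W)
conv_hours = battery_life_hours(CAPACITY_WH, CONVENTIONAL_LOAD_W)

print(f"Conventional AI load: about {conv_hours:.0f} hours")
print(f"Neuromorphic AI load: about {neuro_hours:.0f} hours "
      f"(roughly {neuro_hours / 24:.0f} days)")
```

Under these assumptions, the AI workload alone would drain the battery in about 10 hours in the conventional case, compared with about 1,000 hours at 1 mW. Real-world figures will depend heavily on the rest of the system.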

In many existing edge AI deployments, developers often have to trade off between application complexity, accuracy, power dissipation, and latency. Often, edge deployments choose low power dissipation over everything else, opting to move all high-performance AI to power-intensive processors or the cloud. Innatera unlocks this tradeoff with Pulsar, enabling high-performance AI functionalities within an ultra-low power envelope and short latency.

And there’s a lot more to come. There are many more facets of neuromorphic technology that can be leveraged in sensing applications to make them better, faster, and more efficient. Innatera’s technology roadmap for the future is exciting and will change the notion of computing at the sensor edge.

Could neuromorphic computing help solve the battery life and privacy trade-offs we see in wearables and voice assistants today?

Absolutely. Most traditional devices depend on the cloud or keep their main processors running constantly, which drains battery life and sends sensitive data over the internet, creating privacy risks.

However, neuromorphic computing can process intelligence locally, at the sensor itself, so data never needs to leave the device. Only necessary insights are passed along, and higher-power components wake up only when required.

This approach delivers major advantages: dramatically longer battery life, far less data transmission, and enhanced privacy protections, which are crucial for always-on features like sound classification or vitals monitoring, where streaming raw data to the cloud is no longer acceptable.

How close are we to seeing neuromorphic chips in consumer products — and what’s holding back wider adoption right now?

We’re already at the threshold of mainstream adoption. Innatera’s Pulsar is the world’s first mass-market neuromorphic microcontroller, purpose-built to bring brain-inspired intelligence to real-world consumer and industrial products. And it’s available now.

Unlike previous neuromorphic solutions limited to research or niche applications, Pulsar is packaged as a full-featured microcontroller, complete with a RISC-V CPU, dedicated accelerators, and a spiking neural network engine that makes it practical for integration into compact battery-powered devices.

So, it’s not just theoretical; Pulsar is in the process of being integrated into next-generation products by partners in radar, ultra-wideband (UWB), and sensing technologies, where ultra-low-power, always-on intelligence is critical. These collaborations highlight how neuromorphic processing is moving far beyond the lab into real-world markets like smart home systems, wearables, and industrial Internet of Things (IoT).

Historically, one of the biggest obstacles to neuromorphic adoption has been software support and developer accessibility, as the steep learning curve and lack of tools slowed innovation.

Innatera has solved this by introducing a developer-friendly Talamo SDK with native PyTorch integration, enabling engineers to build and deploy spiking neural network models using familiar workflows. No neuromorphic PhD required.

Combined with compact model sizes (as small as 5KB) and simplified integration into existing sensor architectures, this approach dramatically lowers the barrier to entry, accelerating time to market for neuromorphic-powered products.

Looking ahead five years, what’s one thing you think neuromorphic computing will make possible that traditional chips can’t?

We’ve only just begun to scratch the surface of its capabilities. Neuromorphic computing is set to enable a new generation of adaptive and autonomous edge devices: systems that don’t just detect and respond, but can also learn, self-calibrate, and optimize in real time, all while running on tiny batteries.

This shift could unlock a plethora of exciting applications, from wearables that adjust to your behavior on the fly to industrial systems that predict and prevent failures with minimal energy use.

Jason brings a decade of tech and gaming journalism experience to his role as a Managing Editor of Computing at Tom's Guide. He has previously written for Laptop Mag, Tom's Hardware, Kotaku, Stuff and BBC Science Focus. In his spare time, you'll find Jason looking for good dogs to pet or thinking about eating pizza if he isn't already.
