With iOS 8, iPhone Camera May Be Ready for Photographers

Apple's new mobile operating system, coming this fall, might allow people to use the full capability of the iPhone's powerful camera.

Currently there is a huge disconnect between the iPhone camera's capabilities and how much users can take advantage of them. According to Tom's Guide tests, the latest iSight camera in the iPhone 5s is very faithful in capturing colors and adept at judging the white balance (color of light) in a scene. No camera is perfect, however, which is why even the best-quality DSLRs have scads of manual controls to override the camera's bad choices. Now the iPhone camera may, too.

Buried in the for-programmers weeds of its Worldwide Developers Conference today was word that Apple will open up the iPhone camera in its new operating system, iOS 8 (due in the fall), to provide camera apps with full control over exposure, white balance and shutter speed. Apple isn't the first phone maker to enable such control, but as the maker of one of the most popular phones and phone cameras, Apple's announcement could be a big deal, depending on the details.

Currently, your only options with the iSight camera under iOS 7 are to turn the flash or high dynamic range (HDR) mode on or off, or to choose among a few Instagram-copycat color filters. If you want to capture the silhouette of buildings against a sunset sky, for example, the camera is likely to light up the buildings instead and completely overexpose the sunset. If the camera misjudges the white balance in a night photo and turns everything orange, you have to fix it when you edit the photo. Oh, but the current camera app doesn't allow that kind of editing.

Making a dumb camera smarter

The new iOS 8 promises to fix those problems, although Apple hasn't said exactly how. Controlling exposure could mean any number of things. Does it allow control of shutter speed or aperture, or both at the same time (manual mode)? If the camera wants to overexpose that skyline, selecting a faster shutter speed would produce a photo that's darker and also less likely to show blur from camera shake. If Apple allows control of metering mode (deciding which part of the image to optimize exposure for), you could select center-weighted or spot metering to get the sun right and render everything else black.
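
To make the skyline example concrete, here is a rough Python sketch of how the choice of metering area changes exposure. It is not Apple's API: the 4x4 "scene," the 0.18 middle-gray target, the 1/60-second starting shutter time and all function names are illustrative assumptions. The math is the standard photographic rule that each stop doubles or halves the light, so the correction in stops is log2(target / metered brightness), and with aperture and ISO held fixed that maps directly onto a shutter time.

```python
import math

# Assumed meter target: linear luminance 0.18 ("middle gray"), a common
# photographic convention, not a value Apple has specified.
MIDDLE_GRAY = 0.18


def _mean(values):
    values = list(values)
    return sum(values) / len(values)


def average_meter(frame):
    """Average metering: every pixel in the frame counts equally."""
    return _mean(p for row in frame for p in row)


def spot_meter(frame, cx, cy, radius=0):
    """Spot metering: only pixels in a square window around (cx, cy) count."""
    return _mean(
        frame[y][x]
        for y in range(len(frame))
        for x in range(len(frame[0]))
        if abs(x - cx) <= radius and abs(y - cy) <= radius
    )


def correction_stops(metered_luminance):
    """Stops of exposure change that would render the metered area as middle gray.
    Negative means a darker exposure; each stop halves the light."""
    return math.log2(MIDDLE_GRAY / metered_luminance)


def shutter_for(base_shutter_s, stops):
    """Shutter time after applying `stops` of correction, aperture and ISO fixed."""
    return base_shutter_s * 2 ** stops


# Hypothetical 4x4 sunset scene: two rows of bright sky over two rows of dark buildings.
SKY, BUILDINGS = 0.72, 0.045
frame = [[SKY] * 4, [SKY] * 4, [BUILDINGS] * 4, [BUILDINGS] * 4]

if __name__ == "__main__":
    base = 1 / 60  # hypothetical starting shutter time, in seconds
    readings = [
        ("spot on buildings", spot_meter(frame, 1, 3)),
        ("average", average_meter(frame)),
        ("spot on sky", spot_meter(frame, 1, 0)),
    ]
    for name, reading in readings:
        stops = correction_stops(reading)
        print(f"{name}: {stops:+.2f} stops, shutter 1/{1 / shutter_for(base, stops):.0f} s")
```

Metering on the buildings asks for about two stops more light, which blows out the sky; metering on the sky asks for about two stops less, a shutter time of roughly 1/240 second instead of 1/60, which is the faster setting that turns the buildings into a silhouette.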

Likewise, it's not clear what control of white balance means. Does it mean selecting preset modes, like daylight or incandescent light, or does it include the ability to custom-tune how the camera interprets colors? Or is everything possible, and up to the developers of each app?
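
One common way to model white balance, whether it comes from a preset or a custom setting, is as a separate multiplier (gain) for the red, green and blue channels. The Python sketch below assumes that model; it says nothing about how Apple will expose the setting, and the function names and pixel values are hypothetical. A custom white balance derives the gains from something the photographer knows should be gray, while a naive automatic mode (here the simple "gray world" assumption) has to guess.

```python
def gains_from_neutral(r, g, b):
    """Custom white balance: per-channel multipliers that make a patch known to be
    neutral (a gray card, a white wall) come out gray. Green is kept as the
    reference channel. Assumes r and b are nonzero."""
    return (g / r, 1.0, g / b)


def gray_world_gains(pixels):
    """A simple automatic guess: assume the whole scene averages out to gray."""
    n = len(pixels)
    avg = [sum(p[i] for p in pixels) / n for i in range(3)]
    return gains_from_neutral(*avg)


def apply_gains(pixel, gains):
    """Scale each channel by its gain and clip to the 0..1 range."""
    return tuple(min(1.0, c * k) for c, k in zip(pixel, gains))


if __name__ == "__main__":
    # Hypothetical night shot under orange streetlights: a gray wall reads as orange.
    wall = (0.60, 0.40, 0.20)
    gains = gains_from_neutral(*wall)
    print(gains)
    print(apply_gains(wall, gains))  # the three channels come out (nearly) equal
```

The gray-world guess shows why automatic white balance can go wrong: in a sunset frame that really is mostly orange, it pushes the whole picture toward blue, which is the kind of mistake manual control lets you override.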

Not everyone may want to know what things like aperture priority, exposure compensation or custom white balance are. But they know the difference between a good and a bad photo and will want to make their pictures better.

That's where app developers come in. Apple isn't building all the advanced capabilities into its default camera app, but it is allowing app makers to take advantage of those features, either in standalone apps or in extensions to the iOS camera app. Some of those apps may be aimed at photo geeks, with an intimidating layout of settings resembling the screen of a pro DSLR. But developers also have the opportunity to make powerful settings easy to use. How about an app with a silhouette mode for that skyline shot, or sliders to adjust color while you preview a photo under bad lighting?

Making control intuitive

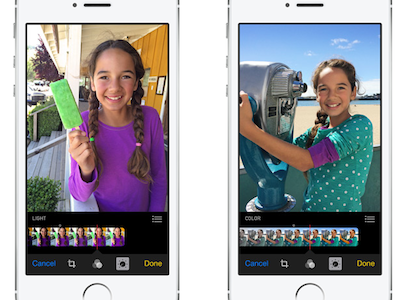

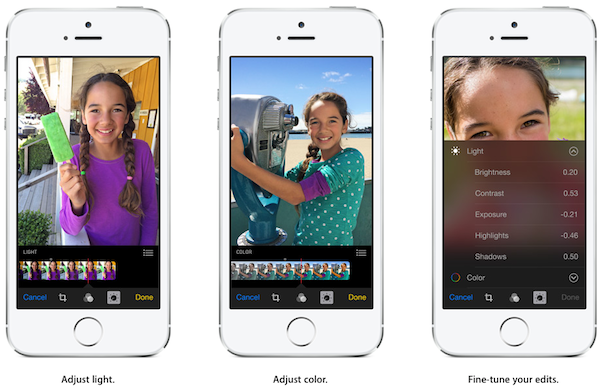

Apple gave a hint of how sophisticated controls can be easy to use while previewing the photo-editing side of its upcoming iOS 8 camera app. Instead of tapping a magic-wand icon and hoping for the best, you'll soon be able to drag smart sliders to change exposure or color in complex ways. Moving one of those sliders actually tells the app to make several adjustments, such as to brightness, contrast and shadows, that work together to produce a better photo. And if you think you can do better by tweaking those individual settings yourself, you'll be able to do that as well.
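
Apple hasn't said how its smart sliders map onto the underlying settings, so the following Python sketch is purely hypothetical: one master slider value drives brightness, contrast and shadow lift together using made-up weights, and the user can still override any single setting afterward. The class, constants and function names are illustrative assumptions, not Apple's design.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Adjustments:
    brightness: float = 0.0  # added to every tone
    contrast: float = 1.0    # multiplier around the mid-tone 0.5
    shadows: float = 0.0     # lift applied mostly to dark tones


# Hypothetical weights: how far each setting moves per unit of the master slider.
BRIGHTNESS_PER_UNIT = 0.10
CONTRAST_PER_UNIT = 0.15
SHADOWS_PER_UNIT = 0.20


def from_master_slider(s):
    """Translate one slider position in [-1, 1] into several coordinated settings."""
    s = max(-1.0, min(1.0, s))
    return Adjustments(
        brightness=BRIGHTNESS_PER_UNIT * s,
        contrast=1.0 + CONTRAST_PER_UNIT * s,
        shadows=SHADOWS_PER_UNIT * s,
    )


def apply_to_tone(tone, adj):
    """Apply the settings to one tone value in 0..1 (0 = black, 1 = white)."""
    t = tone + adj.brightness
    t = 0.5 + (t - 0.5) * adj.contrast
    t = t + adj.shadows * (1.0 - t) ** 2  # stronger lift for darker tones
    return max(0.0, min(1.0, t))


if __name__ == "__main__":
    auto = from_master_slider(0.8)
    manual = replace(auto, shadows=0.0)  # the user overrides one setting by hand
    for tone in (0.1, 0.5, 0.9):
        print(tone, round(apply_to_tone(tone, auto), 3), round(apply_to_tone(tone, manual), 3))
```

A single control keeps the common case simple, while overriding one field (here with `dataclasses.replace`) leaves room for anyone who wants to fine-tune an individual setting.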

It's unclear why Apple's own new camera app provides so much ability to fix a bad photo but so little control for taking a good one in the first place. Perhaps because it is so hard to strike the right balance between advanced capabilities and usability, Apple decided to hand the job to a mass of programmers, in the hope that some of them will get it right.

Follow Sean Captain @seancaptain and on Google+. Follow us @tomsguide, on Facebook and on Google+.

Sean Captain is a freelance technology and science writer, editor and photographer. At Tom's Guide, he has reviewed cameras, including most of Sony's Alpha A6000-series mirrorless cameras, as well as other photography-related content. He has also written for Fast Company, The New York Times, The Wall Street Journal, and Wired.