The RTX 5090 is the best graphics card I've ever owned — but there's a catch for living room PC gamers

Multi-frame gen is great, but I'd only use 2x for my living room

Just as Terminator 2: Judgment Day predicted back in ye olden days of 1991, the future belongs to AI. That could be a problem for Nvidia RTX 50-series GPUs, even when it comes to the best consumer graphics card money can buy.

I was ‘fortunate’ enough to pick up an Nvidia GeForce RTX 5090 a couple of months ago. I use those semi-joking quotation marks because I merely had to pay $650 over MSRP for the new overlord of GPUs. Lucky me.

Before you factor in the 5090’s frame-generating AI voodoo (which I’ll get to), it’s important to give Team Green credit for assembling an utterly beastly piece of silicon. At around 30% more powerful than the RTX 4090, the previous graphics card champ, there’s no denying it’s an astonishing piece of kit.

Whether you’re gaming on one of the best TVs at 120 FPS or one of the best gaming monitors at 240 FPS and above, the RTX 5090 has been designed for the most ludicrously committed hardcore gamers. And wouldn't you know it? I just happen to fall into this horribly clichéd category.

My setup

So I have a PC similar to the build our lab tester Matt Murray constructed (he even posted a handy how-to on building a PC) — packing the 5090, AMD Ryzen 7 9800X3D, and 64GB DDR5 RAM on a Gigabyte X870 Aorus motherboard.

In terms of the screens I play on, I have two. For the desk, I've got a Samsung Odyssey G9 OLED with a max 240Hz refresh rate, but most of the time, I'll be in living room mode with my LG G3 OLED’s max 120Hz refresh rate.

Frame game

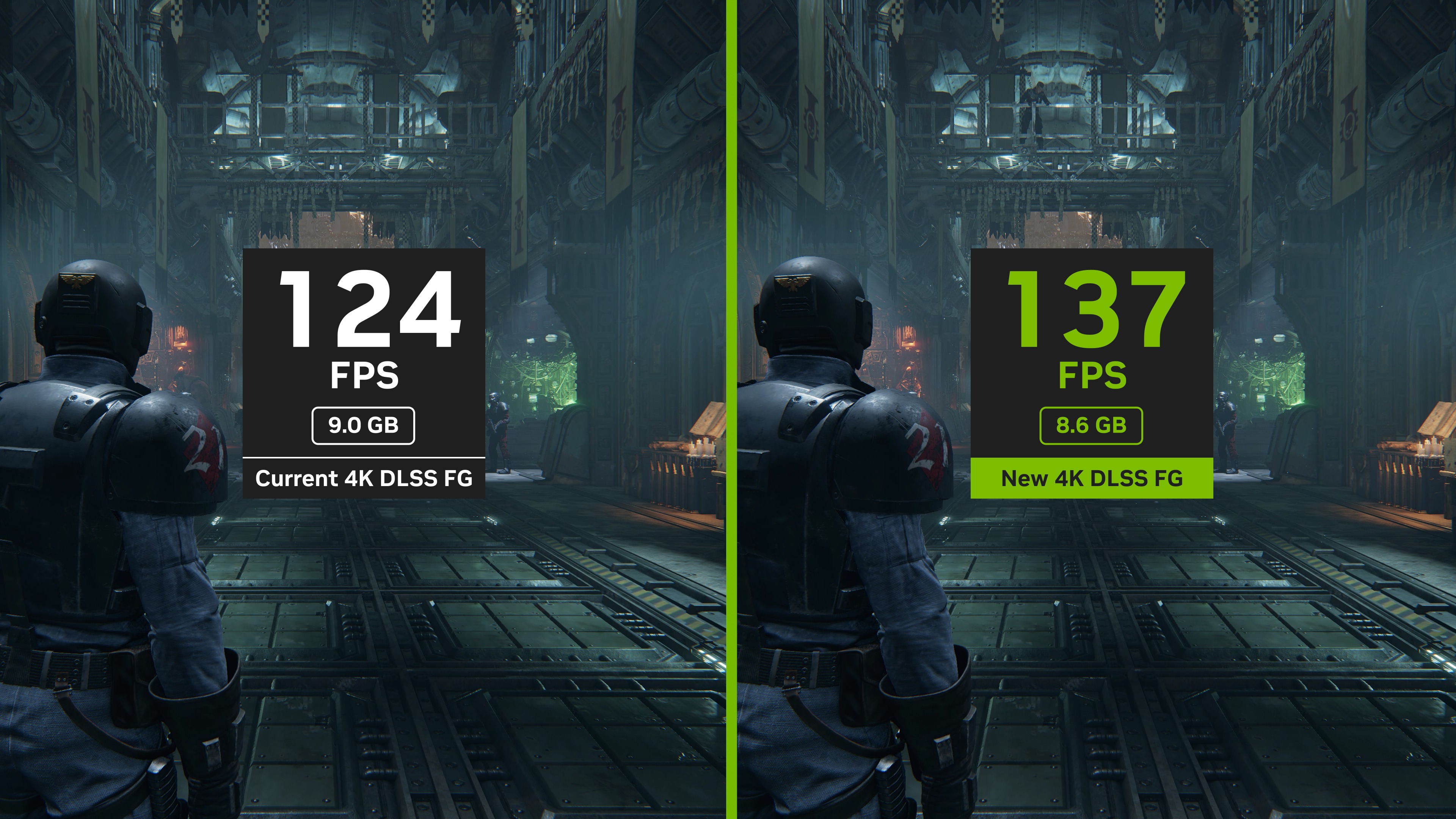

The main selling point of Nvidia’s latest flagship product is DLSS 4’s Multi Frame Generation tech. Taking advantage of sophisticated AI features, Nvidia’s RTX 50 cards are capable of serving up blistering frame rates that simply can’t be achieved through brute force hardware horsepower.


Multi Frame Generation — and I promise that’s the last time I capitalize Team Green’s latest buzz phrase — feels like the biggest (and most contentious) development to hit the PC gaming scene in ages. The tech has only been out for a few months and there are already over 100 titles that support Nvidia’s ambitious AI wizardry.

How does it work? Depending on the setting you choose (x2, x3 or x4), one to three additional AI-generated frames are inserted for every native frame your GPU renders. This can lead to colossal onscreen FPS counts, even in the most demanding games.
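To make that arithmetic concrete, here is a rough Python sketch. It assumes an idealized model where generating frames costs nothing, so output FPS scales perfectly with the multiplier; the function name and the 60 FPS input are hypothetical, chosen purely for illustration. In practice, frame gen eats into the native frame rate, which is why the measured numbers in my testing don't scale this neatly.

```python
# Idealized sketch of multi frame generation arithmetic.
# Assumption: each rendered frame is followed by (multiplier - 1) generated
# frames and generation is free, so output FPS scales linearly.


def frame_gen_breakdown(rendered_fps: float, multiplier: int) -> dict:
    """Return idealized output FPS and the share of AI-generated frames."""
    if multiplier not in (1, 2, 3, 4):
        raise ValueError("multiplier must be 1 (off), 2, 3 or 4")
    generated_per_native = multiplier - 1
    return {
        "generated_per_native": generated_per_native,
        "output_fps": rendered_fps * multiplier,
        # Fraction of displayed frames that the GPU did not render natively
        "ai_share": generated_per_native / multiplier,
    }


if __name__ == "__main__":
    for m in (1, 2, 3, 4):
        stats = frame_gen_breakdown(60, m)
        print(
            f"{m}x: {stats['generated_per_native']} generated per native frame, "
            f"~{stats['output_fps']:.0f} FPS, {stats['ai_share']:.0%} AI frames"
        )
```

The takeaway: at the x4 setting, three out of every four frames you see are generated rather than rendered.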

Doom: The Dark Ages, Cyberpunk 2077, Indiana Jones and the Great Circle and Half-Life 2 RTX — some of the most graphically intense titles around can now be played at incredibly high frame rates with full ray tracing engaged. That’s mainly thanks to multi frame generation.

So I got into the games (tip: turn off Vsync for the best results). For context, these figures were taken from Doom’s Forsaken Plain level, Indy’s Marshall College section during a particularly demanding path-traced scene, a drive around downtown Night City in Cyberpunk, and Gordon’s mesmerizing new take on Ravenholm.

| All games tested at 4K (max settings, DLSS Balanced) | Cyberpunk 2077 | Doom: The Dark Ages | Indiana Jones and the Great Circle | Half-Life 2 RTX demo |
|---|---|---|---|---|
| Frame gen off (average frame rate / latency) | 58 FPS / 36-47 ms | 95 FPS / 37-48 ms | 85 FPS / 33-40 ms | 75 FPS / 26-3 ms |
| Frame gen x2 (average frame rate / latency) | 130 FPS / 29-42 ms | 160 FPS / 51-58 ms | 140 FPS / 35-46 ms | 130 FPS / 29-42 ms |
| Frame gen x3 (average frame rate / latency) | 195 FPS / 37-52 ms | 225 FPS / 54-78 ms | 197 FPS / 43-53 ms | 195 FPS / 37-52 ms |
| Frame gen x4 (average frame rate / latency) | 240 FPS / 41-60 ms | 270 FPS / 56-92 ms | 243 FPS / 44-57 ms | 240 FPS / 41-60 ms |

These are ludicrous frame rates, though how many of those frames you actually see is capped by the display: 120Hz on my LG G3 OLED, or the sky-high 240Hz of my Samsung Odyssey G9 OLED, which a couple of these results even push past.

There is a catch, though, which goes back to the ways that I play.

A mixed lag

Despite my frame rate counter showing seriously impressive numbers, the in-game experiences often don’t feel as smooth as I expected.

As much as I’ve tried to resist, I’ve become increasingly obsessed with the excellent Nvidia app (and, more specifically, its statistics overlay) while messing around with multi frame gen of late. These stats let you monitor FPS, GPU and CPU usage, and most crucially for me, latency.

Also known as input lag, latency is the time, measured in milliseconds, that it takes a game to register the press of a button on one of the best PC game controllers or the click of a key or mouse. If your latency is high, movement is going to feel sluggish, regardless of how lofty your frame rate is.
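Frame rate and latency are two different measurements, and this quick Python sketch shows the gap using the Doom: The Dark Ages figures from my testing. Frame time (the gap between displayed frames) is simply 1000 divided by FPS; using the midpoint of each measured latency range as a single number is my own simplification, and the names here are hypothetical.

```python
# Compare frame time (1000 / FPS) with measured input latency.
# Figures are the Doom: The Dark Ages results from my testing.
# Assumption: the midpoint of each latency range stands in as one number.

DOOM_RESULTS = {
    # mode: (average FPS, (min latency ms, max latency ms))
    "off": (95, (37, 48)),
    "x2": (160, (51, 58)),
    "x3": (225, (54, 78)),
    "x4": (270, (56, 92)),
}


def frame_time_ms(fps: float) -> float:
    """Milliseconds between displayed frames at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for mode, (fps, (lat_lo, lat_hi)) in DOOM_RESULTS.items():
        midpoint = (lat_lo + lat_hi) / 2
        print(
            f"{mode}: {fps} FPS -> {frame_time_ms(fps):.1f} ms per frame, "
            f"~{midpoint:.0f} ms input latency"
        )
```

Going from frame gen off to x4 cuts the time between frames from roughly 10.5 ms to 3.7 ms, yet the measured latency climbs. More frames on screen doesn't mean your inputs land any faster.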

And that situation is compounded on my TV. The high frame rate is glorious on my monitor, but when locked to 120Hz, you don't get the perceived smoother motion of those additional frames — creating a disconnect that makes that latency a bit more noticeable.

My advice to TV gamers

If you own one of the best gaming PCs and want to enjoy a rich ray-traced experience with acceptable input lag at responsive frame rates on your TV, my advice would be to aim for the frame gen level that gets you as close to your maximum refresh rate as possible.

For all the games I tested, that would be 2x. At this level, I find latency hovers around the mid-30s and never exceeds 60 ms, which feels snappy enough for that kind of living room gaming setup.
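One way to turn that advice into a rule of thumb is to pick the lowest frame gen setting whose measured frame rate reaches your display's refresh rate, since anything beyond that can't be shown but still adds latency. This Python sketch is my own hypothetical reading of the advice, not an Nvidia feature, and the helper's name is made up. Run against my test results, it lands on x2 for every game at 120Hz and x4 at 240Hz.

```python
# Hypothetical helper: choose the lowest frame gen setting whose measured
# FPS reaches the display's refresh rate. Figures are from my testing.
# Assumption: if no setting reaches the refresh rate, use the highest one.

MEASURED_FPS = {
    "Cyberpunk 2077": {"off": 58, "x2": 130, "x3": 195, "x4": 240},
    "Doom: The Dark Ages": {"off": 95, "x2": 160, "x3": 225, "x4": 270},
    "Indiana Jones and the Great Circle": {"off": 85, "x2": 140, "x3": 197, "x4": 243},
    "Half-Life 2 RTX demo": {"off": 75, "x2": 130, "x3": 195, "x4": 240},
}

ORDER = ("off", "x2", "x3", "x4")


def pick_setting(fps_by_mode: dict, refresh_hz: int) -> str:
    """Lowest setting that saturates the display, else the highest available."""
    for mode in ORDER:
        if fps_by_mode[mode] >= refresh_hz:
            return mode
    return ORDER[-1]


if __name__ == "__main__":
    for refresh in (120, 240):
        for game, fps_by_mode in MEASURED_FPS.items():
            print(f"{refresh}Hz | {game}: {pick_setting(fps_by_mode, refresh)}")
```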

Crank multi frame gen up to the x3 or x4 setting, however, and you hit diminishing returns: the extra frames can't be shown at the capped refresh rate, while the added latency becomes more noticeable, even with one of the best gaming mice.

Flip to a 240Hz monitor, however, and the difference is night and day, as the latency remains at a responsive level alongside those AI-injected frames for a buttery smooth experience.

Path life

And now, we've got to talk about path tracing. It's already blowing minds in Doom: The Dark Ages, and it's prevalent in the likes of Cyberpunk and Doctor Jones' enjoyable romp. Essentially the ‘pro-level’ form of ray tracing, this lighting algorithm can produce in-game scenes that look staggeringly authentic.

Given the demands of this tech on your GPU, the most graphically exciting development in PC gaming for years will most likely demand you use DLSS 4’s x4 or x3 AI frame-generating settings to maintain high frame rates in future implementations.

I wasn’t surprised that path tracing floored me in CD Projekt Red’s seedy yet sensational open-world — I was messing with its path traced photo mode long before DLSS 4 arrived. The quality of the effect cranked to the max in The Great Circle knocked my socks off, though.

That stunning screenshot a few paragraphs above is from the game’s second level, set in Indy’s Marshall College. During a segment where Jones and his vexed buddy Marcus search for clues following a robbery, path tracing really gets to flex its muscles in a sun-dappled room full of antiquities. When I dropped down to the highest setting of more traditional ray tracing, I was genuinely shocked at just how much more convincing the path-traced equivalent looked.

So while the technology matures, I hope Nvidia continues to work to reduce latency at these middle-of-the-road frame rates too, so that this AI trickery really hits the spot when maxed out.

To be clear to those on the fence about buying an RTX 5090: just as we've said in our reviews of the RTX 5060 Ti, 5070 and 5070 Ti, if you own the equivalent 40-series GPU, you should stick with your current card.

You may not get that multi-frame gen goodness, but with DLSS 4 running through its veins, you still get the benefits of Nvidia’s latest form of supersampling and its new Transformer model — delivering considerably better anti-aliasing while being less power-hungry than the existing Legacy edition.

I don’t want to end on a total downer, though, so I’ll give credit where it’s due: if you’re on a monitor with a blistering refresh rate, I’ll admit multi frame generation might be a good fit for your setup.

My fondness for the RTX 5090 is only matched by Hannibal Lecter’s delight in chowing down on human livers. But for those who hot-switch between the desk and the couch like I do, make sure you tweak those settings to reflect your refresh rate.


Dave is a computing editor at Tom’s Guide and covers everything from cutting edge laptops to ultrawide monitors. When he’s not worrying about dead pixels, Dave enjoys regularly rebuilding his PC for absolutely no reason at all. In a previous life, he worked as a video game journalist for 15 years, with bylines across GamesRadar+, PC Gamer and TechRadar. Despite owning a graphics card that costs roughly the same as your average used car, he still enjoys gaming on the go and is regularly glued to his Switch. Away from tech, most of Dave’s time is taken up by walking his husky, buying new TVs at an embarrassing rate and obsessing over his beloved Arsenal.
