I challenged Gemini Flash 1.5 in AI Studio with 3 prompts: it's better than the app

Google AI Studio for the win


Most of the attention in the AI text space is on chatbots like ChatGPT, Claude and Google Gemini, but some of the most powerful features are hidden away in APIs and studios. One example is Google AI Studio, where Gemini Flash 1.5 can do everything from transcription to video analysis.

Gemini Flash 1.5 is one of the most recent models unveiled by Google at its I/O event earlier this year and, as well as being among its most capable, is also cheaper and faster to run. It is also currently free to use in Google AI Studio, so it's a great time to put it to the test.

Why put Gemini Flash 1.5 to the test?

Inspiration for this article came after a request to transcribe a 15-minute video. I would usually run Whisper (OpenAI's open-source AI transcription model) locally on my Mac, but it has the drawback of producing a single block of text with no breakdown by speaker. So I tried a few alternatives, including Otter and Rev. Both are useful but come with a cost.

The impressive Plaud Note can now break a recording down by speaker, but that is primarily for recordings you’ve made rather than audio files you already have, so I tried Google’s AI Studio.

I first converted the video to audio for the sake of file size (the video was 5 GB) and then loaded it into Gemini Flash 1.5. It gave me a block of text, just as Whisper would, so I asked it to identify the speakers and display the transcript in a readable format. It split the text by speaker and displayed it like a script, even picking up the names of the speakers from context clues in the text.
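The article doesn't say which tool was used for the video-to-audio conversion. As a minimal sketch, assuming `ffmpeg` is installed and on the PATH, the step could look something like this in Python. The file names, the mono setting and the 64k bitrate are illustrative assumptions, not the author's settings.

```python
import shutil
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path: str, audio_path: str, bitrate: str = "64k") -> list[str]:
    """Build an ffmpeg command that keeps only the audio track of a video."""
    return [
        "ffmpeg",
        "-y",              # overwrite the output file if it already exists
        "-i", video_path,  # input video
        "-vn",             # drop the video stream
        "-ac", "1",        # downmix to mono (assumption: enough for speech)
        "-b:a", bitrate,   # audio bitrate (assumption: 64k keeps the file small)
        audio_path,        # output format is inferred from the extension
    ]


def extract_audio(video_path: str, audio_path: str) -> Path:
    """Run ffmpeg to extract the audio; raises if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(build_ffmpeg_command(video_path, audio_path), check=True)
    return Path(audio_path)
```

Calling `extract_audio("interview.mp4", "interview.mp3")` (hypothetical file names) would write a much smaller audio file that can then be uploaded in AI Studio.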

So I decided to come up with a series of tests to put Gemini Flash 1.5 through its paces. These include standard tests for any AI model as well as ones that take advantage of its large context window and multimodal input capabilities — it can take in audio, video, image and text.

1. The movie trailer test

First up, I gave Gemini Flash 1.5 the trailer for my favorite movie of all time, "Independence Day", and asked it to “predict the plot, genre, and target audience”.


I gave it a link to a YouTube video, as I would with the Gemini app, but that didn’t work because it doesn’t have access to the internet. So I downloaded the trailer and shared the video file instead, using the same simple text prompt asking it to predict the plot and other details.

It correctly predicted that there would be a massive alien invasion of Earth targeting major cities. It also suggested the aliens would use advanced technology but that we would ultimately survive and fight back against the threat.

It also defined it as a "science fiction action film, emphasizing thrilling action sequences, explosions, and the spectacle of an alien invasion", with a target audience of young adults interested in blockbusters.

Giving it "Independence Day" was too easy. The contents were likely in its training data. So I gave it a short video I’d made myself using AI tools and asked it to perform the same analysis. This was a fictional trailer for a series based on the Kim Stanley Robinson classic "Red Mars".

Gemini wrote: "This trailer is for a science fiction movie called 'Red Mars'." So far so good, but what about the plot? It said: "The trailer suggests a story about a human colony on Mars facing a struggle for survival. It hints at a crisis or threat, possibly a natural disaster or another unknown force, that forces the colonists to fight for their existence."

It correctly identified the genre and suggested the target audience is "likely adults and teenagers interested in science fiction, space exploration, and stories with suspenseful themes. The trailer's visuals and tone suggest a mature and somewhat darker approach to the genre."

It didn’t pick up on the link to the novel, but Gemini Flash 1.5 accurately understood the plot and audience for the potential film, based on a video it could not have had in its training data.
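The test above was run in the AI Studio interface, but the same kind of request can also be made from code. What follows is a rough sketch rather than the method used in this article: it assumes the `google-generativeai` Python package, an API key in a `GOOGLE_API_KEY` environment variable, and the model name `gemini-1.5-flash`, any of which may have changed since publication. The prompt wording is adapted from the one quoted earlier.

```python
import os
import time

# Prompt adapted from the one used in the article.
TRAILER_PROMPT = "Predict the plot, genre, and target audience."


def analyze_video(video_path: str, prompt: str = TRAILER_PROMPT,
                  model_name: str = "gemini-1.5-flash") -> str:
    """Upload a local video file and ask the model a question about it."""
    # Imported lazily so this file loads even without the SDK installed.
    # Assumption: the google-generativeai package is available.
    import google.generativeai as genai

    # Assumption: the API key is stored in this environment variable.
    genai.configure(api_key=os.environ["GOOGLE_API_KEY"])

    # Upload the file, then poll until the service has finished processing it.
    video = genai.upload_file(path=video_path)
    while video.state.name == "PROCESSING":
        time.sleep(5)
        video = genai.get_file(video.name)
    if video.state.name != "ACTIVE":
        raise RuntimeError(f"Upload ended in state {video.state.name}")

    # Send the uploaded video and the text prompt together in one request.
    model = genai.GenerativeModel(model_name)
    response = model.generate_content([video, prompt])
    return response.text
```

A call such as `analyze_video("trailer.mp4")` (hypothetical file name) would return the model's answer as plain text. As in AI Studio, the video has to be a local file rather than a YouTube link.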

2. Product profile

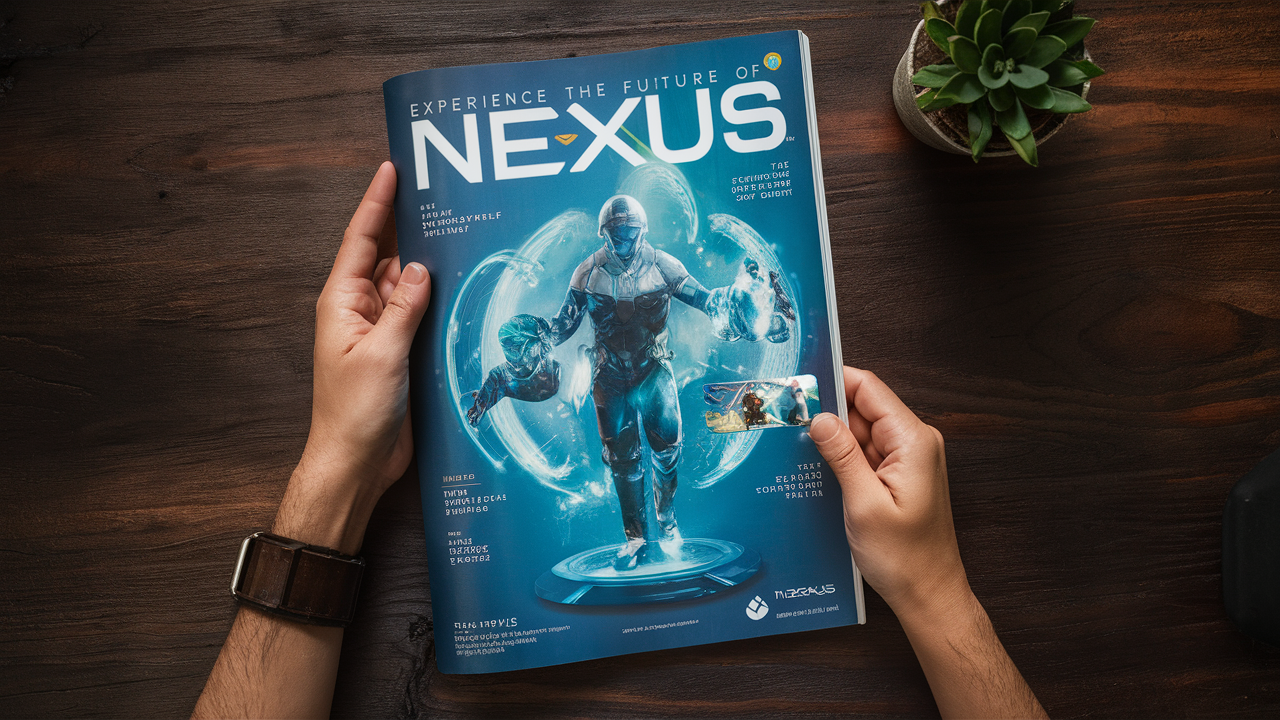

Next, I wanted to see if Gemini Flash 1.5 could handle product descriptions. I came up with a random idea for a product in Midjourney and sent it the image along with this prompt: “Describe this futuristic technology and brainstorm potential product applications.”

The image prompt: “Enigmatic hovering device, quantum-inspired design, pulsating energy core, adaptive surface, ambient glow, suspended in a high-tech chamber, soft mist, product showcase lighting — at 16:9.”

The idea is to see if it can turn a vague concept into something usable. It certainly came up with some interesting ideas, among them a platform for immersive VR, a holographic display and a medical imaging device. My favorite was an advanced gaming console where the game is controlled using a ring.

I asked it to expand on that idea and put it into a short pitch: “The Nexus is a revolutionary gaming platform that blends physical and digital worlds, featuring a glowing ring interface and a responsive platform that delivers immersive gameplay and limitless creative possibilities.

"Imagine interacting with holographic worlds, controlling games with hand gestures, and feeling the action through haptic feedback, all while creating your own unique gaming experiences.”

Here are the specifics:

- The Ring: Acts as a multi-dimensional interface. It projects holographic displays above the platform, displaying game information, menus, and even full-fledged gameplay for certain games. It also acts as a controller for many games, allowing players to manipulate the game world with hand gestures and movements.

- The Platform: The circular platform isn't just a base. It hums with energy, providing haptic feedback to players. It can vibrate, rumble, or even shift slightly, adding a physical dimension to the gameplay. Certain games might even utilize the platform's movement as part of the game mechanics.

- Gameplay: The Nexus caters to a diverse range of gaming experiences:

  - Immersive AR Games: Players interact with real-world environments using the ring and platform, blending physical and virtual elements. Imagine an AR game where the platform becomes a virtual spaceship cockpit, with the ring displaying controls and haptic feedback simulating the ship's movements.

  - Holographic RPGs: Explore vast, detailed worlds projected above the platform, interacting with characters and environments in a truly immersive way. The ring allows for precise control, even using your hands to cast spells or manipulate objects.

  - Kinetic Combat Games: Utilize the platform's movements and the ring's gesture recognition to engage in fast-paced, physically responsive combat. Each swing of your arm could correspond to a strike in the game, providing an exhilarating, physically engaging experience.

- Social Interaction: The Nexus can connect to a network of other players, enabling collaborative gameplay. Players can even share their own unique "game worlds" created using the platform's advanced design tools.

I then asked for a prompt for Midjourney to generate a magazine cover article for our fictional gaming console. It offered the following:

"Experience the Future of Gaming" on "The Nexus." "Immerse yourself" in holographic worlds, "control" with hand gestures, and "feel" the action with haptic feedback. "Create" your own games and "share" them with the community. "The Nexus." "Unleash your potential."

In Midjourney, if you put words in quotation marks, it will attempt to render them accurately as text in the image. Gemini didn't do a bad job, but it's not how I would have worded the image prompt.

3. Creating a story from an image

I then gave it an image I’d generated for a video project and simply said “what is happening here.” The image was a gruff older farmer standing in a desolate post-apocalyptic field where nothing can grow.

First response: “A man in a cowboy hat and shirt is standing in a field at sunset. He looks tired and worn, but there is a sense of peace and calm in the image. The sunset is beautiful and the colors are warm and inviting. The man may be a farmer or rancher, or he may be a traveler who has stopped to rest for a while. Whatever his story is, he seems to be at peace with himself and his surroundings.”


So I gave it the prompt I used with Midjourney and asked it what it thinks is happening now: “Photorealistic depiction of a weathered, middle-aged farmer standing in a barren field under a hazy, orange sky. Apocalyptic atmosphere.”

It changed its mind, saying “The overall mood is one of desolation and hopelessness. The emptiness of the field, the harsh sky, and the farmer's weary stance all contribute to the feeling that something catastrophic has happened.”

It even suggested the orange sky could be from dust storms or wildfires, or even the "lingering effects of a nuclear event". So I asked Gemini Flash 1.5 to “write me a three-sentence story inspired by this picture, possibly of the events leading up to this moment. Be creative.”

Flash wrote: “The old tractor, once a symbol of life and bounty, now lay silent and rusting, its wheels forever sunk in the parched earth. He'd watched the fields turn to dust, the sky bleed orange with the fires of a dying world, and his heart turned to stone. Now, he stood alone, a weathered testament to a lost way of life, waiting for the sun to set on the last day of his world.”

Final thoughts

Overall, I think Gemini Flash 1.5 in Google AI Studio is better than the Gemini app, including Gemini Advanced. Its drawbacks are the lack of live internet access and the developer-focused UI.

What this test shows is what will be possible in Gemini in the future once Google begins to roll out the full functionality to its flagship AI app. But more than that, as a developer tool it also gives an indication of some of the third-party apps and services we might start to see soon.

Imagine a video editor where, upon saving, it can automatically create subtitles, generate an appropriate description to share on YouTube and even offer up music ideas to include under the video.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
