I tested ChatGPT vs Gemini 2.5 Pro with these 3 prompts - and it shows what GPT-5 needs to do

It needs to mix reasoning with creation

OpenAI has been teasing GPT-5 throughout the summer, keeping the AI community on tenterhooks. Back in mid-July, Sam Altman couldn't resist sharing that an experimental internal OpenAI model had achieved gold-medal-level performance at this year's International Mathematical Olympiad.

His casual mention that "we are releasing GPT-5 soon" sent a clear signal that GPT-5 is production-ready, even if the full research system remains under wraps.

But here's the thing — Google's Gemini 2.5 Pro already sets a formidable benchmark. This "thinking" model dominates maths, science, and coding leaderboards while shipping with a staggering one-million-token context window (with two million on the horizon).

Gemini 2.5 Pro also boasts native ingestion of text, images, audio, video, and complete codebases, plus an experimental "Deep Think" mode that excels at Olympiad-level mathematics and competitive coding challenges.

What can we expect from GPT-5?

Core rumors around GPT-5 point to an agent-ready model capable of both reasoning and creativity: a blend of the o-series and GPT-series models.


Leaks point the same way: a config file labelled "GPT-5 Reasoning Alpha" surfaced in an engineer's screenshot, researchers discovered the model name embedded in OpenAI's own bio-security benchmarks, and a macOS ChatGPT build shipped with flags for "GPT-5 Auto" and "GPT-5 Reasoning", suggesting dynamic routing between general and heavy-duty reasoning engines. OpenAI researcher Xikun Zhang even reassured anxious users in an X post that "GPT-5 is coming".

Sources speaking to Tech Wire Asia paint an exciting picture of an early August release alongside lighter "mini" and "nano" variants designed for lower-latency applications. The approach reportedly merges the GPT-4 family with the o-series into a unified model architecture — reasoning plus knowledge similar to Gemini 2.5 Pro.


Rumors are buzzing about a 256,000-token context window, native video input capabilities, and agent-style autonomy for complex tasks. If accurate, that would double GPT-4o's 128,000-token context window and put GPT-5 squarely in competition with Google's latest.

What does GPT-5 need to compete with Gemini 2.5 Pro?

To understand exactly what GPT-5 needs to deliver, I put ChatGPT's 4o and o3 models head-to-head with Gemini 2.5 Pro across three demanding prompts that stress-test long-context analysis, full-stack code generation, and multi-step planning. The results reveal precisely where OpenAI needs to focus its efforts.

Prompt 1: Long-form Analysis and Synthesis

I challenged both systems to analyze a 3,000-word peer-reviewed article on renewable-energy policy, identify logical fallacies or unsupported claims, summarize findings in plain English, and propose three specific research avenues while highlighting biases and assumptions.

Specifically, I found an article in the journal PLOS One on the use of renewable energy as a solution to climate change. I fed each the PDF and the prompt outlined above. I didn’t use Deep Research from either Gemini or ChatGPT — just the native model.

ChatGPT's analysis

When I fed this renewable energy research paper to ChatGPT, the difference between 4o and o3 was striking.

The standard 4o model delivered a competent overview that correctly identified the correlation-causation problem and noted missing economic controls — solid analysis that would satisfy most readers.

But o3 operated on an entirely different level, systematically dissecting the paper using formatted tables that exposed five distinct types of bias, caught subtle methodological flaws like data imputation risks, and proposed specific research approaches including difference-in-differences and synthetic controls.

The standout feature was a comprehensive table that didn't just suggest future research directions, but explained precisely why each avenue mattered and how to pursue it — the kind of rigorous analysis you'd expect from a senior research analyst, not an AI.

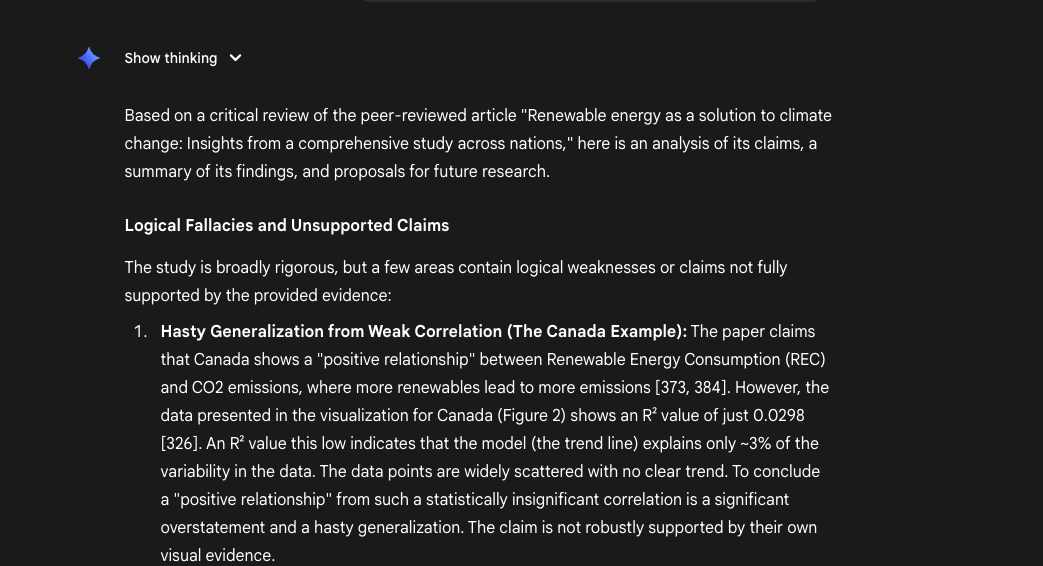

Gemini 2.5 Pro: The Statistical Detective

While both ChatGPT versions focused on broad methodological critiques, Gemini 2.5 Pro zeroed in on a critical statistical flaw that completely undermined one of the paper's key claims.

The smoking gun: an R-squared value of 0.0298 for Canada's data, meaning the statistical model explained only 3% of the variance — essentially random noise masquerading as a "positive relationship."

Gemini also identified a circular reasoning problem where researchers used linear regression to create missing data points, then analyzed that artificially smoothed dataset with more regression analysis.
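To see why filling gaps with regression and then regressing again is circular, here is a minimal Python sketch. The data points are hypothetical, not taken from the paper, and the helper names `fit_line` and `r_squared` are illustrative only. Imputed values sit exactly on the fitted line, so they add points with zero residual and the refit can only look as good or better.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys):
    """Share of the variance in ys explained by the fitted line."""
    slope, intercept = fit_line(xs, ys)
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical noisy observations with four "missing" years at the end.
observed_x = [0, 1, 2, 3, 4, 5]
observed_y = [2.0, 0.5, 3.0, 1.0, 3.5, 2.0]
missing_x = [6, 7, 8, 9]

# Step 1: impute the missing values from a line fitted to the observed data.
slope, intercept = fit_line(observed_x, observed_y)
imputed_y = [intercept + slope * x for x in missing_x]

# Step 2: run the regression again on observed plus imputed points.
r2_observed = r_squared(observed_x, observed_y)
r2_with_imputed = r_squared(observed_x + missing_x, observed_y + imputed_y)

print(f"R-squared, observed only: {r2_observed:.3f}")
print(f"R-squared, with imputed points: {r2_with_imputed:.3f}")
```

The second figure comes out higher even though no new information was added, which is the smoothing effect Gemini flagged.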

Though less comprehensive than o3's response, Gemini's laser focus on quantitative rigor caught fundamental errors that both ChatGPT models missed entirely.

What GPT-5 Needs to Deliver

The implications are clear: GPT-5 needs to combine o3's breadth of analysis with Gemini's precision in statistical reasoning. While o3 excels at comprehensive structural analysis and methodology critique, it completely missed basic statistical red flags that Gemini caught immediately.

The ideal system would automatically run sanity checks on correlation coefficients, sample sizes, and confidence intervals without prompting — when a paper claims a relationship exists with an R-squared near zero, the AI should flag it immediately.
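As a rough illustration of what such a sanity check might look like, here is a minimal Python sketch. The function names and the 0.10 cut-off are assumptions made for this example, not anything either model actually runs; the only figure from the test is the 0.0298 R-squared that Gemini flagged.

```python
def r_squared(xs, ys):
    """R-squared of a simple least-squares line (squared Pearson correlation)."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("R-squared is undefined when a variable is constant")
    return (sxy * sxy) / (sxx * syy)


def check_fit(r2, min_r2=0.10):
    """Flag a claimed relationship whose fit explains too little variance.

    min_r2 is an arbitrary illustrative threshold, not an established standard.
    """
    return {"explained_pct": round(r2 * 100, 1), "flag": r2 < min_r2}


# The value reported for Canada's data in the paper.
print(check_fit(0.0298))  # explained_pct is 3.0, flag is True
```

A real reviewer would also look at sample size and confidence intervals, but even this one-line threshold would have caught the problem in the paper.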

Think of it this way: o3 writes like a brilliant policy analyst who can contextualize research within broader frameworks, while Gemini reads like a sharp-eyed statistician who spots when the numbers don't add up.

For GPT-5 to truly compete with Gemini 2.5 Pro's analytical capabilities, OpenAI needs both skills seamlessly integrated — comprehensive analytical frameworks backed by rigorous quantitative verification.

Prompt 2: End-to-end code & app creation

For the coding challenge, the rule was 'one shot': no follow-ups. Each model had to build its complete response within a single message.

I specified a simple clicker game with tap-to-collect coins, an in-game shop, upgrade tree, and save-state functionality, requesting a complete, responsive web app in HTML/CSS/JavaScript with commented code explaining core mechanics.

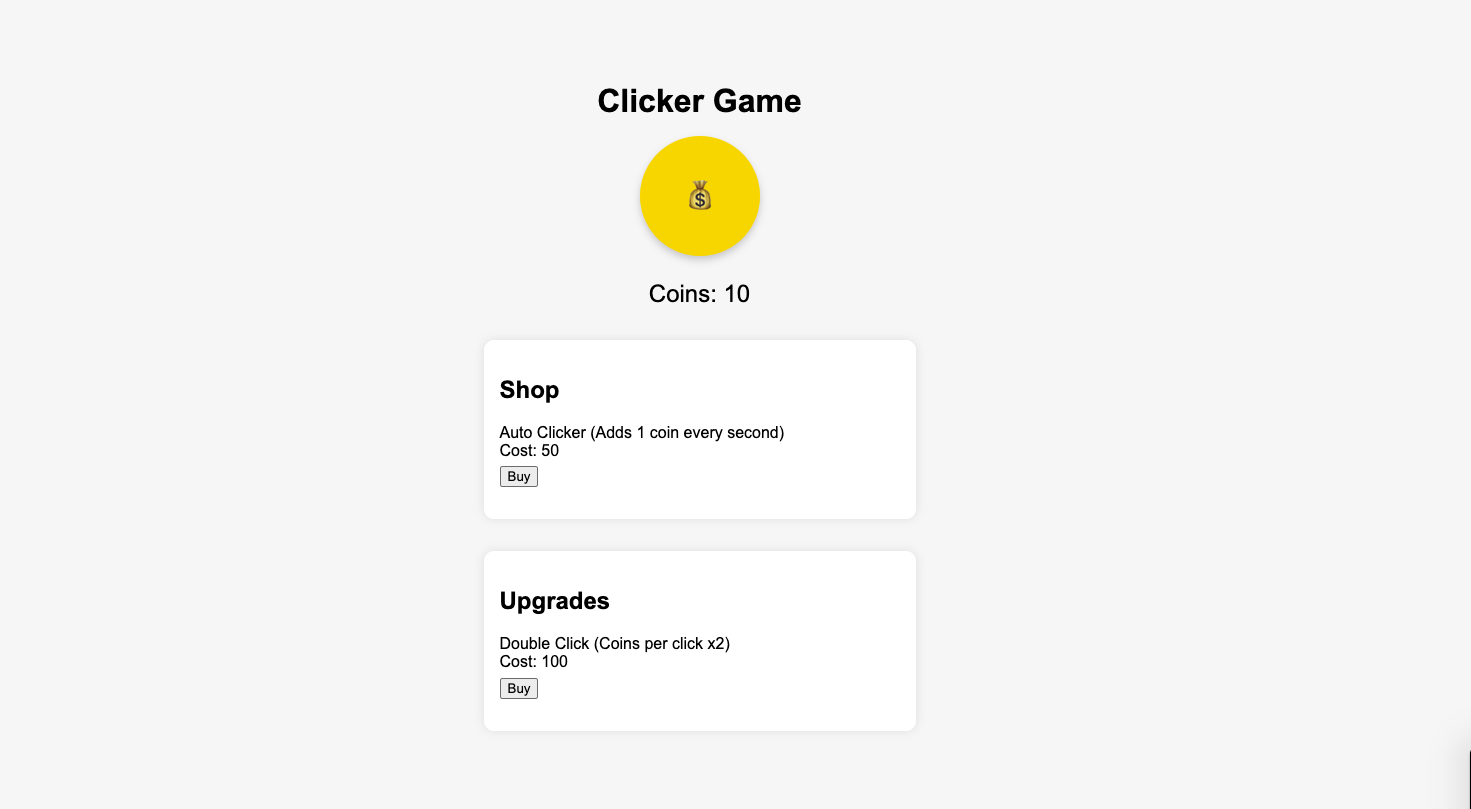

ChatGPT: Preview failure and incomplete

First, the preview feature from Canvas in ChatGPT failed on both tests, so I had to download the code and run it directly in the browser. No hardship, but Gemini's preview worked perfectly.

ChatGPT o3's implementation started strong with a sophisticated architecture that showed real programming expertise. The code featured clean separation of concerns, custom CSS variables for consistent theming, and a well-structured game state object.

The upgrade system used dynamic cost functions with exponential scaling, and it included a passive income system using intervals. However, there's a critical issue: the code cuts off mid-function. What we can see suggests o3 was building something elegant — but an incomplete game is no game at all and the rule was one shot.
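For readers unfamiliar with the pattern, exponential cost scaling and interval-based passive income can be sketched in a few lines. This is a Python illustration of the general technique, not o3's actual JavaScript; the function names, base cost, and growth rate are all assumptions.

```python
import math


def upgrade_cost(base_cost, growth_rate, level):
    """Price of the next purchase when each level multiplies cost by growth_rate."""
    return math.floor(base_cost * growth_rate ** level)


def apply_passive_income(coins, coins_per_second, elapsed_seconds):
    """Coins after one timer tick that covers elapsed_seconds of play."""
    return coins + coins_per_second * elapsed_seconds


# With a hypothetical base cost of 10 and a doubling growth rate,
# the first four purchases cost 10, 20, 40 and 80 coins.
prices = [upgrade_cost(10, 2, level) for level in range(4)]
print(prices)
```

In a browser game the second function would typically run inside a repeating timer; here it is a plain function so the arithmetic is easy to follow.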

In stark contrast, 4o delivered a complete, working game that does exactly what was asked — no more, no less. The implementation features a simple coin-clicking mechanic, localStorage for persistence, and two basic upgrades: an auto-clicker and a double-click multiplier.

The code is clean and functional, using straightforward loops for automation and JSON serialization for saving. While it lacks sophistication, it actually works. Sometimes shipping beats perfection, and 4o understood this assignment. The interface is minimal but responsive, and every promised feature functions as expected.
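The save-state approach described here, serializing the game state to JSON and reading it back, looks roughly like the Python sketch below. It is not 4o's code: in the browser the string would be written to localStorage, and the state keys and function names are hypothetical.

```python
import json


def save_state(state):
    """Serialize the game state to a JSON string ready for storage."""
    return json.dumps(state)


def load_state(raw, defaults):
    """Restore a saved state, falling back to defaults if the save is missing or corrupt."""
    if raw is None:
        return dict(defaults)
    try:
        saved = json.loads(raw)
    except json.JSONDecodeError:
        return dict(defaults)
    if not isinstance(saved, dict):
        return dict(defaults)
    # Keys absent from an older save keep their default values.
    return {**defaults, **saved}


DEFAULT_STATE = {"coins": 0, "auto_clickers": 0, "double_click": False}

raw_save = save_state({"coins": 42, "auto_clickers": 1})
restored = load_state(raw_save, DEFAULT_STATE)
print(restored)
```

Merging the save over a set of defaults is one simple way to keep old saves loadable when new upgrades are added later.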

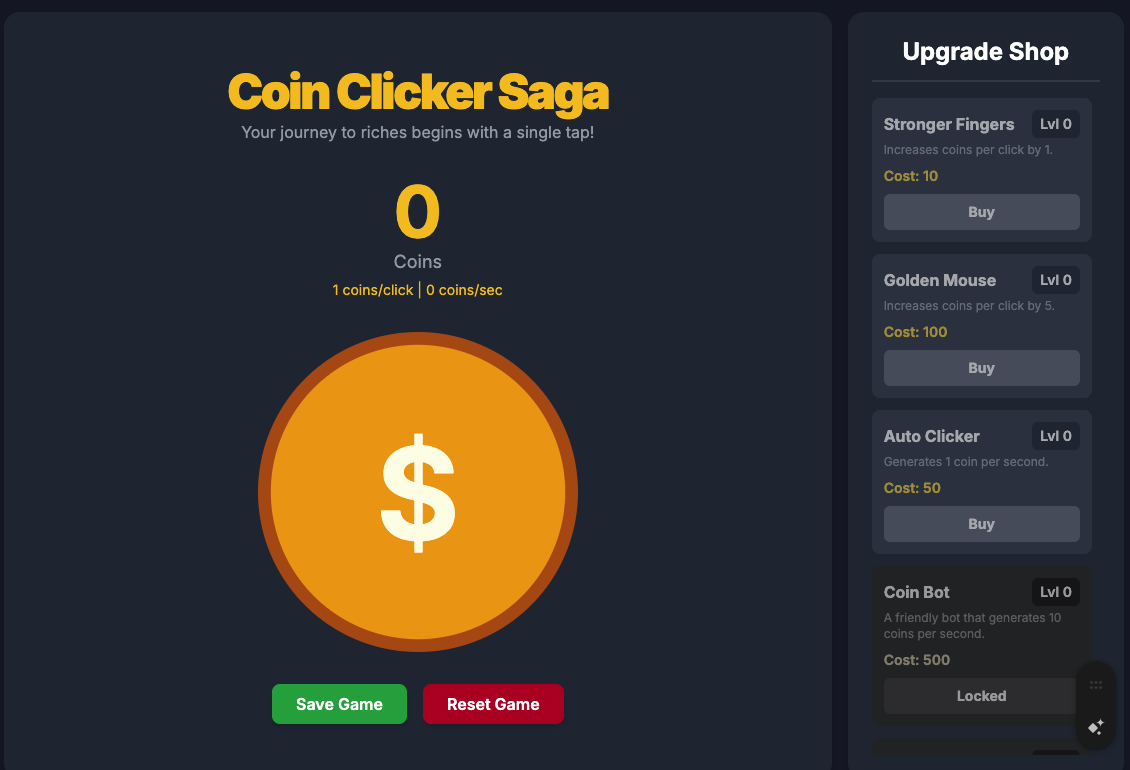

Gemini 2.5 Pro: The Complete Package

Gemini's implementation reads like production code from an experienced game developer. Beyond delivering all requested features, it adds polish that transforms a simple clicker into an engaging experience.

The upgrade tree includes unlock conditions (Coin Bot requires Auto Clicker level 5), creating actual progression mechanics. It even included an offline earnings function to calculate rewards for returning players based on their passive income rate.
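Both mechanics are simple to express. The Python sketch below is an illustration of the idea rather than Gemini's actual code: the only detail taken from its output is that Coin Bot requires Auto Clicker level 5, while the identifiers and the eight-hour cap on offline rewards are assumptions added for the example.

```python
# Each entry maps an upgrade to the (prerequisite, minimum level) that unlocks it.
UNLOCK_RULES = {"coin_bot": ("auto_clicker", 5)}

# Hypothetical cap so that a very long absence does not break the economy.
MAX_OFFLINE_SECONDS = 8 * 3600


def is_unlocked(upgrade, levels):
    """True if the upgrade has no prerequisite or the prerequisite level is met."""
    rule = UNLOCK_RULES.get(upgrade)
    if rule is None:
        return True
    required_upgrade, required_level = rule
    return levels.get(required_upgrade, 0) >= required_level


def offline_earnings(coins_per_second, last_seen, now):
    """Coins owed for time away, based on the passive income rate."""
    elapsed = max(0, now - last_seen)
    return coins_per_second * min(elapsed, MAX_OFFLINE_SECONDS)


print(is_unlocked("coin_bot", {"auto_clicker": 4}))  # False
print(is_unlocked("coin_bot", {"auto_clicker": 5}))  # True
print(offline_earnings(2, last_seen=100, now=160))   # 120
```

Timestamps are plain seconds here; a real game would read them from the saved state and the system clock.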

Visual feedback through floating damage numbers and toast notifications makes every action feel impactful. The code itself is exemplary — every function is thoroughly commented, explaining not just what it does but why design decisions were made.

Most impressively, it includes features I didn't even request, like auto-save every 30 seconds and a confirmation dialog for resets.

What GPT-5 Needs to Deliver

The contrast here is illuminating. While o3 showed sophisticated programming patterns, it failed the fundamental requirement: shipping working code. GPT-5 needs to combine o3's architectural elegance with reliability that ensures complete, functional output every time. More importantly, it needs to match Gemini's product thinking — anticipating user needs beyond the literal specification.

The difference between "build a clicker game" and building a good clicker game lies in understanding implicit requirements: players expect satisfying feedback, progression systems that create goals, and quality-of-life features like auto-save.

Gemini demonstrated this product sense by adding unlock conditions that create strategic depth and offline earnings that respect player time.

For GPT-5 to compete in code generation, it needs to deliver not just syntactically correct code, but thoughtfully designed products that show understanding of user experience, game design principles, and production-ready practices. The goal isn't just to write code — it's to create software people actually want to use.

Prompt 3: Multi-step travel planning

For the final test, I asked the AIs to help with some travel planning: specifically, a ten-day family trip through parts of Europe.

The prompt: Plan a 10-day family vacation (2 adults, 2 children ages 10 and 14) visiting London, Paris, Rome, and Barcelona with a total budget of €10,000. Include:

- Day-by-day itinerary with specific attractions, restaurants, and activities suitable for the family

- Transportation between cities (flights/trains) with booking recommendations and costs

- Hotel recommendations in each city with nightly rates

- Daily spending breakdown including meals, attractions, and local transport

- Essential local customs and etiquette for each city

- Practical tips for traveling with children in each location

- Currency/payment considerations and money-saving strategies

- Backup plans for rainy days or attraction closures

Ensure the budget covers all expenses including international travel, accommodation, food, activities, and incidentals. Present the information in an organized, easy-to-follow format.

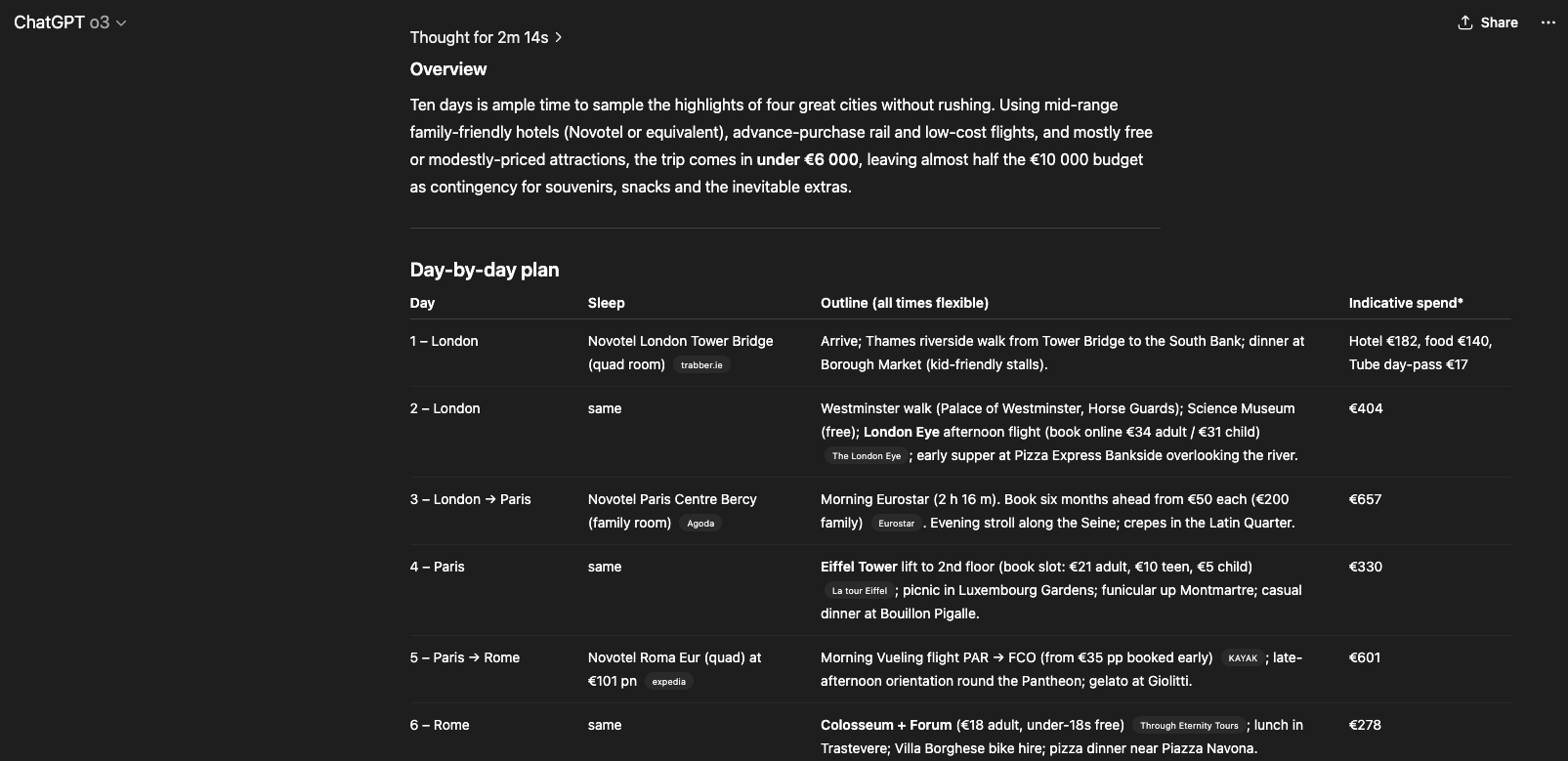

ChatGPT: Precision vs approximation

ChatGPT o3 delivered what I can only describe as a travel agent's masterpiece. We're talking specific hotel recommendations with direct booking links (Novotel London Tower Bridge at €182/night via trabber.ie), exact train times (morning Eurostar, 2h 16m), and granular cost breakdowns that show the entire trip coming in at €6,000 — leaving a realistic €4,000 buffer.

The customs advice reads like insider knowledge: order coffee at the bar in Rome for local prices, keep your voice down on the Paris Métro, and watch out for Roman drivers who won't stop at zebra crossings.

ChatGPT 4o took a more traditional approach, delivering a competent itinerary that hits all the major spots but relies heavily on approximations (~€180/night) and generic recommendations ("family-friendly pub").

While it covers all the required elements, the linked sources are mostly Wikipedia pages rather than actual booking sites. It's the difference between a travel blog post and an actionable planning document.

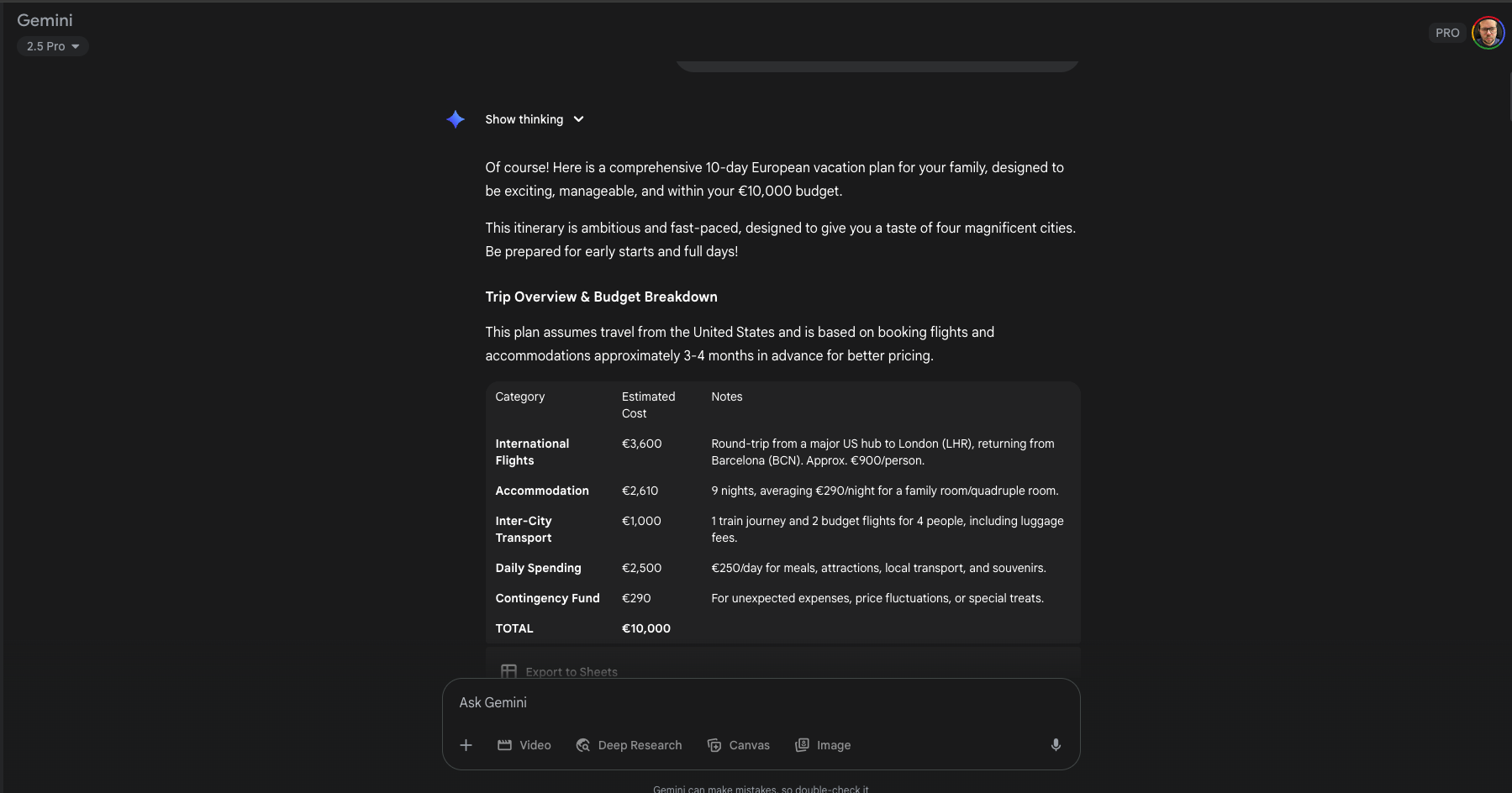

Gemini 2.5 Pro: The experience designer

Gemini transformed the assignment into something else entirely — a narrative journey that reads like a high-end travel magazine feature.

Beyond the expected attractions, it adds experiences I didn't even think to ask for: Gladiator School in Rome where kids learn sword-fighting techniques, specific times to catch the afternoon light through Sagrada Família's stained glass, and psychological tricks like using gelato as museum motivation.

The writing itself sparkles with personality ("Queuing is a national sport" in London) while maintaining practical precision. Each day includes specific departure times, walking routes, and restaurant recommendations with exact locations.

The budget hits exactly €10,000, suggesting careful calculation rather than rough estimates. Most impressively, it anticipates family dynamics — building in pool time at Hotel Jazz in Barcelona as a "reward for tired kids (and parents)."
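The budget outcomes reported in this test come down to simple arithmetic, sketched below in Python. The function name `budget_summary` is illustrative; the only inputs are the €10,000 limit from the prompt and the plan totals quoted above (€6,000 for o3, €10,000 for Gemini).

```python
def budget_summary(budget_eur, planned_total_eur):
    """Headroom left after the planned spend, in euros and as a share of the budget."""
    buffer_eur = budget_eur - planned_total_eur
    return {
        "buffer_eur": buffer_eur,
        "buffer_pct": round(100 * buffer_eur / budget_eur, 1),
        "over_budget": buffer_eur < 0,
    }


o3_plan = budget_summary(10_000, 6_000)
gemini_plan = budget_summary(10_000, 10_000)

print(o3_plan)      # buffer of 4000 euros, 40.0 percent of the budget
print(gemini_plan)  # buffer of 0 euros, 0.0 percent of the budget
```

Neither plan exceeds the limit; they differ in how much of it is left unallocated.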

What GPT-5 Needs to Deliver

This comparison exposes a fundamental challenge for OpenAI. While o3 excels at data precision and 4o provides reliable completeness, Gemini demonstrates something harder to quantify: contextual intelligence that understands the human experience behind the request.

GPT-5 needs to combine o3's factual accuracy with Gemini's emotional intelligence. When someone asks for a family vacation plan, they're not just requesting a spreadsheet of costs and times — they're asking for help creating memories. Gemini understood this implicitly, weaving practical logistics with experiential richness.

The technical requirements are clear: maintain o3's ability to provide specific, bookable recommendations with real links while adding Gemini's narrative flair and anticipation of unstated needs. But the deeper challenge is developing an AI that doesn't just answer the question asked, but understands the human story behind it.

For complex, multi-faceted tasks like travel planning, raw intelligence isn't enough. GPT-5 needs to demonstrate wisdom — knowing when to be a precise logistics coordinator and when to be an inspiring travel companion who understands that the best trips balance spreadsheets with serendipity.

Final analysis and thoughts

These tests exposed three critical gaps that GPT-5 must address to compete with Gemini 2.5 Pro.

- Statistical Blind Spots: The research paper analysis revealed ChatGPT's most surprising weakness. While o3 delivered comprehensive critiques, it missed a fundamental statistical error that essentially invalidated the paper's core claim. This represents a critical failure in quantitative reasoning that GPT-5 must fix through built-in statistical sanity checks.

- Reliability Over Architecture: o3's clicker game literally cut off mid-function, while Gemini delivered a complete product with features I never requested, including offline earnings, unlock conditions, and auto-save functionality. GPT-5 needs to prioritize shipping complete, production-ready outputs over architectural elegance. This is where a merger with the GPT family will help.

- Product Intelligence: The travel planning exposed the deepest divide. While o3 provided precise data and booking links, Gemini understood the human story behind the request, adding Gladiator School experiences and timing visits for optimal lighting. This contextual intelligence transforms functional responses into genuinely helpful ones.

OpenAI faces a nuanced challenge beyond raw capability metrics. These tests reveal that while ChatGPT excels at certain reasoning tasks, Gemini 2.5 Pro has redefined expectations around completeness and contextual understanding.

The leaked "GPT-5 Reasoning Alpha" configurations suggest OpenAI recognizes this shift. Their rumored approach — unified architecture, expanded context windows, native video understanding — addresses the right technical gaps. But the real challenge is developing judgment: knowing when statistical precision matters, when completion is non-negotiable, and when to read between the lines of user requests.

This competition benefits everyone. Gemini has raised the bar from "Can it reason?" to "Does it understand?" If GPT-5 combines OpenAI's analytical strengths with Google-level reliability and product thinking, we'll be witnessing the emergence of AI systems that genuinely grasp human needs. With this next-generation leap, we're near or at AGI.

The next few months will be pivotal. These tests show the gap isn't about pure performance — it's about thoughtful completeness. GPT-5 doesn't need to be universally better. It needs to be consistently whole.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
