Your Claude chats are being used to train AI — here's how to opt out

Disable data training in Claude's Privacy Settings to keep your conversations private

Anthropic now uses Claude conversations to train its AI models unless you manually opt out. The company changed its privacy policy last month, reversing its previous stance of not using chat data for training purposes.

Now that Claude 4.5 is here, the new policy affects all free and paid personal Claude accounts. Your chats and coding sessions could become training data for future models unless you turn off a specific setting. Anthropic enabled this by default, so you need to take action to protect your privacy.

The opt-out process is straightforward and takes less than a minute. Here's exactly where to find the setting and how to disable it.

What this policy covers

Both regular chats and coding sessions are included in the training policy. If you use Claude for programming help, those conversations become training data unless you opt out.

Old conversations become eligible for training if you reopen them after the policy change and haven't opted out. Your complete chat history isn't automatically included; only conversations you access after October 8, 2025 are.

Data retention has changed as well. If you allow training, Anthropic keeps your data for five years, up from the previous 30 days. If you opt out, the existing 30-day retention period continues to apply.

Who needs to opt out?

Free and paid personal Claude users must opt out manually if they want privacy. The setting applies to all standard accounts regardless of subscription level.

Commercial, government, and educational account users are automatically exempt. Enterprise accounts aren't affected by the policy change and conversations from these users won't be used for training.

New users see the choice during signup, but the training option is enabled by default. You need to actively disable it even when creating a new account.
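The rules in the two sections above can be summarized as a small decision function. This is only an illustrative sketch of the policy as this article describes it, not Anthropic's actual implementation: the `Conversation` class, its field names, the account-type strings, and `may_be_used_for_training` are all hypothetical, and the article doesn't say whether October 8 itself counts, so the sketch treats it as excluded.

```python
from dataclasses import dataclass
from datetime import date

# Date taken from this article; everything else here is a hypothetical model.
POLICY_DATE = date(2025, 10, 8)

# Account types the article describes as exempt (labels are assumptions).
EXEMPT_ACCOUNT_TYPES = {"commercial", "government", "education", "enterprise"}


@dataclass
class Conversation:
    account_type: str          # e.g. "free", "paid_personal", "enterprise"
    last_accessed: date        # last time the chat was opened or continued
    help_improve_claude: bool  # state of the "Help improve Claude" toggle


def may_be_used_for_training(conv: Conversation) -> bool:
    """Return True if, per the article's description, the chat could be used."""
    if conv.account_type in EXEMPT_ACCOUNT_TYPES:
        return False  # exempt accounts are never included
    if not conv.help_improve_claude:
        return False  # the user has opted out
    # Only conversations accessed after the policy date are covered.
    return conv.last_accessed > POLICY_DATE


if __name__ == "__main__":
    chat = Conversation("free", date(2025, 11, 1), help_improve_claude=True)
    print(may_be_used_for_training(chat))
```

In this model, flipping `help_improve_claude` to `False` is the only change a personal account holder can make to exclude new or reopened chats, which mirrors the opt-out steps that follow.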

How to opt out of Claude AI training on your chats

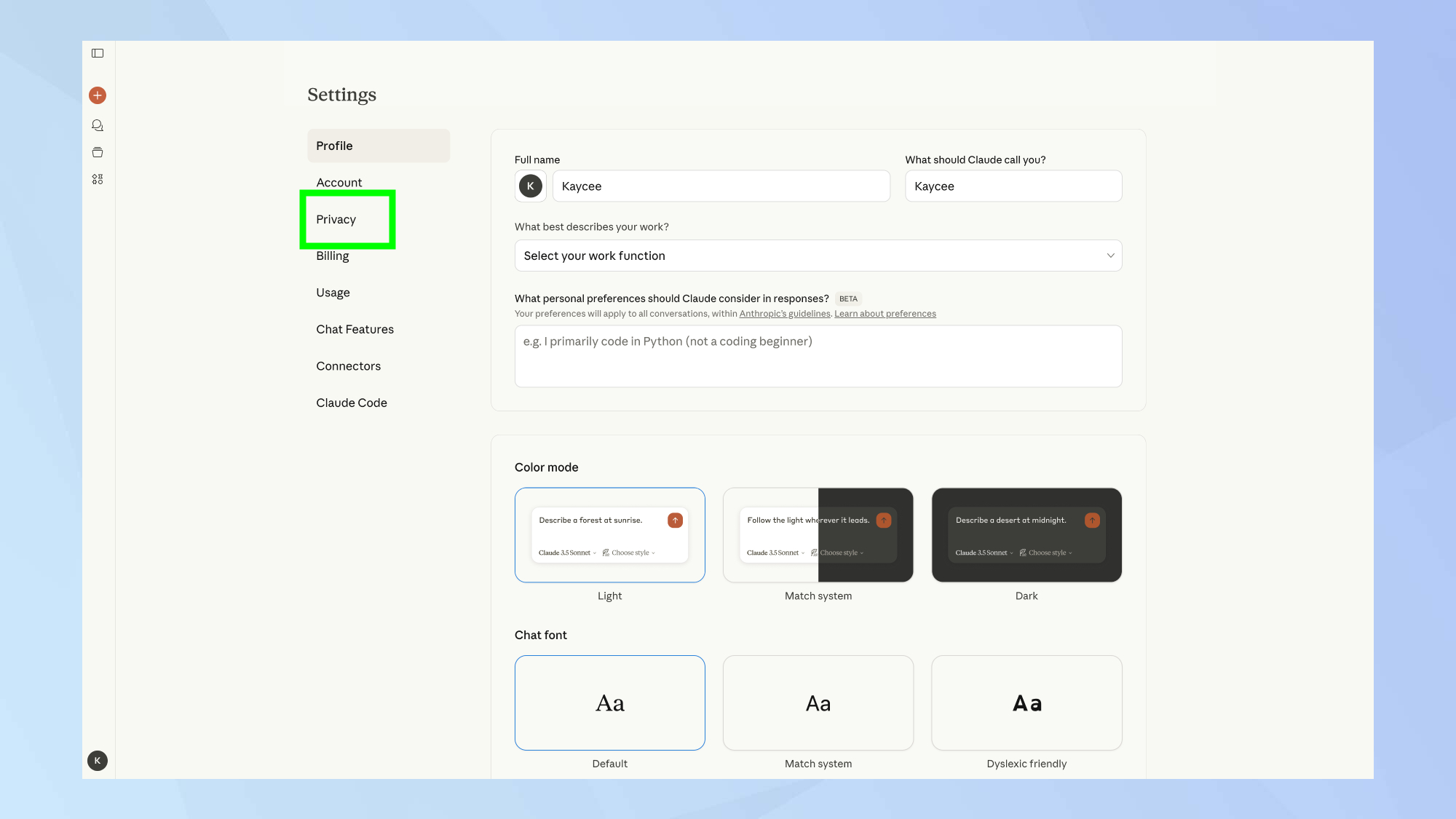

1. Access Privacy Settings

Open Claude and click your profile icon or name to access account settings. The Privacy Settings option appears in the dropdown menu or settings panel.

Then click Privacy in that menu. This section controls all privacy-related features, including data training permissions.

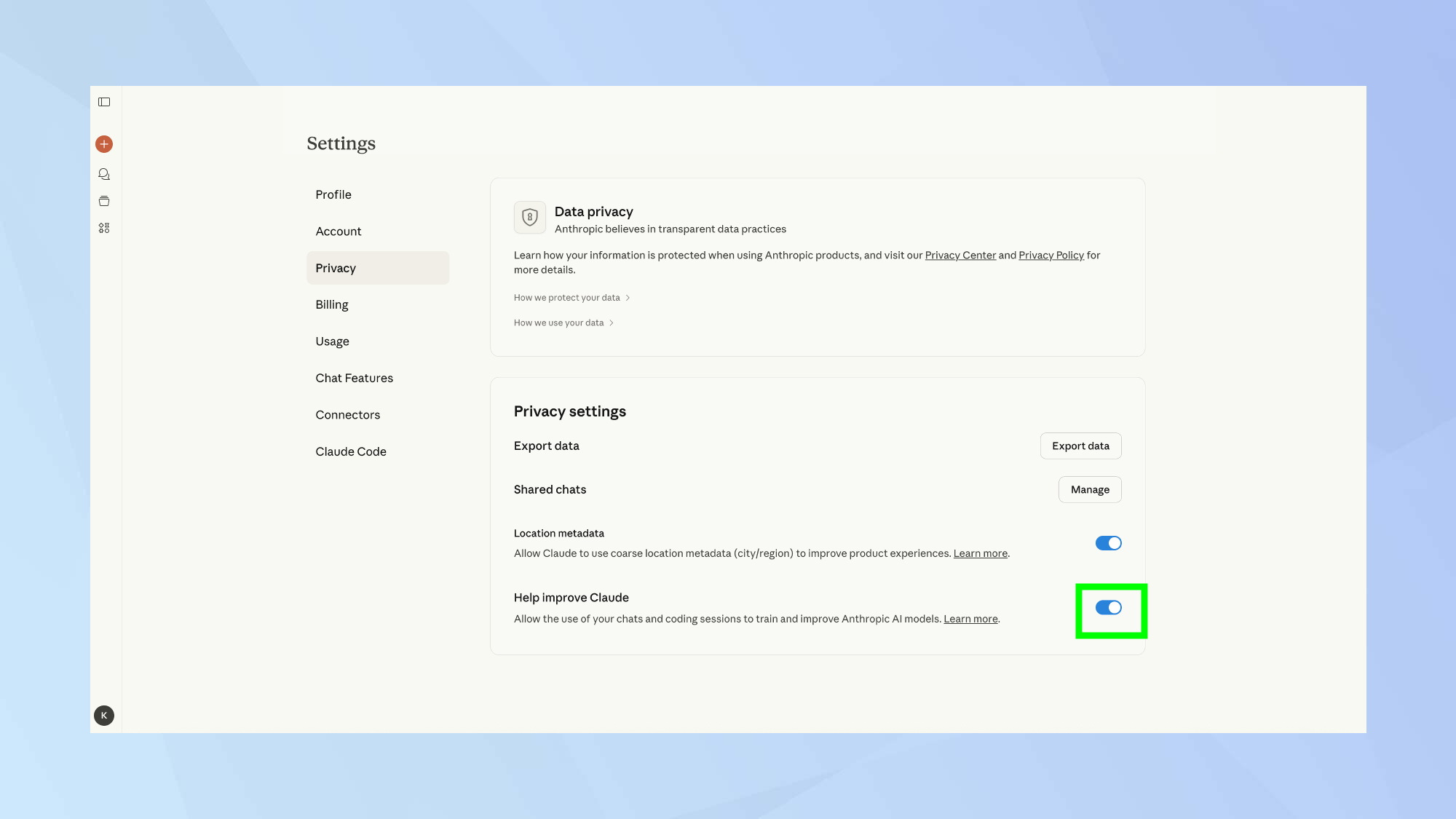

2. Find the training toggle

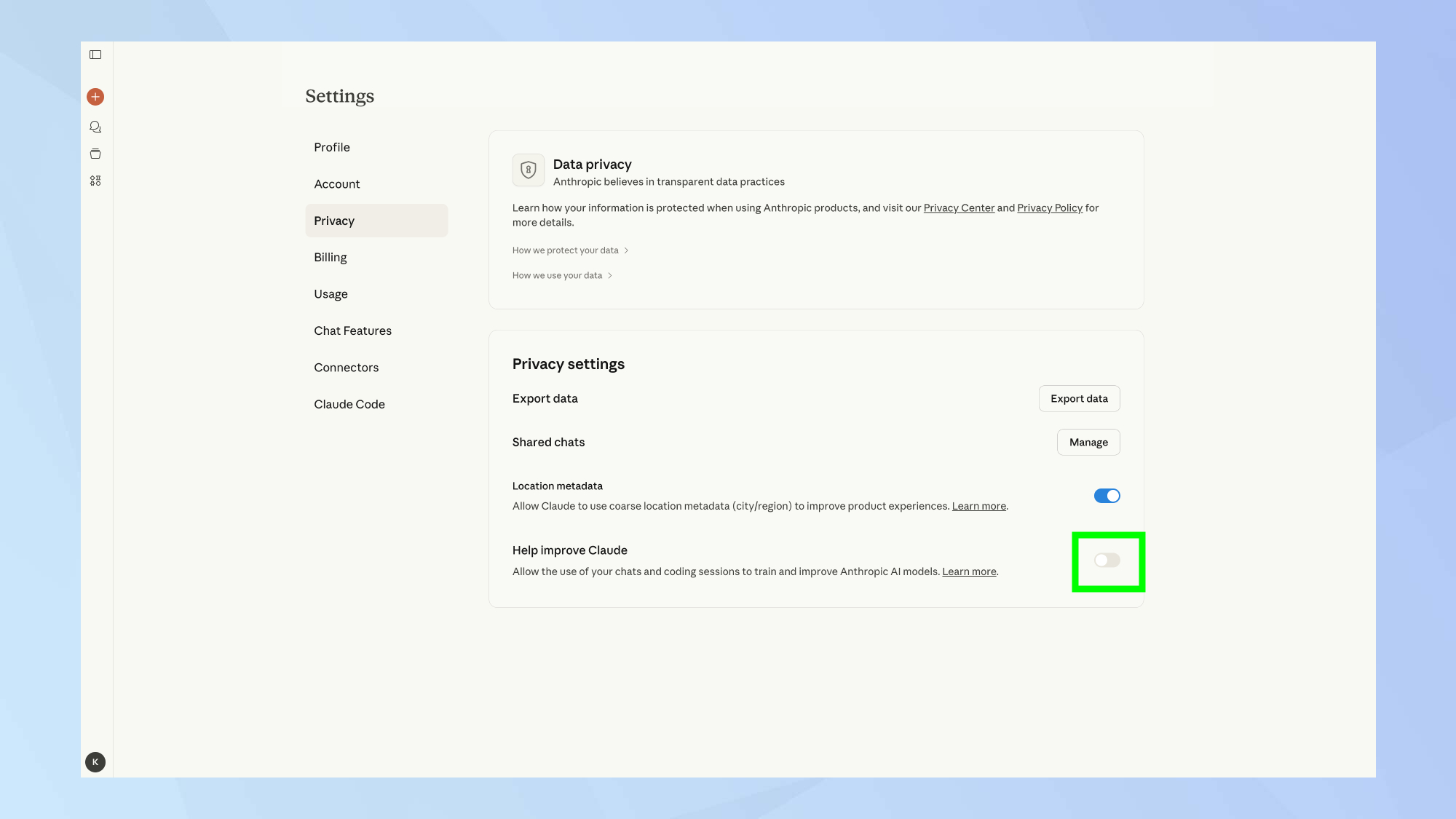

Locate the setting labeled "Help improve Claude" in Privacy Settings. This controls whether Anthropic uses your conversations to train AI models.

Check the current position of the toggle switch. If it's turned on (positioned to the right and highlighted), Anthropic is currently using your chats for training.

3. Turn off data training

Click the toggle to switch it off so it moves to the left and turns gray. This disables training on your conversations.

The change takes effect immediately once you turn off the toggle. New chats and any old conversations you revisit after this point won't be used for training.

Kaycee is Tom's Guide's How-To Editor, known for tutorials that get straight to what works. She writes across phones, homes, TVs and everything in between — because life doesn't stick to categories and neither should good advice. She's spent years in content creation doing one thing really well: making complicated things click. Kaycee is also an award-winning poet and co-editor at Fox and Star Books.
