Apple Intelligence's best feature gains new powers in iOS 26 — what's new with Visual Intelligence

Screenshots are now search tools

Visual Intelligence has proven to be one of Apple Intelligence's better additions, turning your iPhone's camera lens into a search tool. And in iOS 26, the feature gains new capabilities that promise to make Visual Intelligence even more useful.

It's tempting to dismiss Visual Intelligence as Apple's Google Lens knock-off, but that undersells what the AI-powered capability brings to the table. In the current iteration, you can use the Camera Control button on iPhone 16 models or a Control Center shortcut on the iPhone 15 Pro to launch the camera and snap a photo of whatever has caught your interest. From there, you can run an online search of an image, get more information via Apple Intelligence's ChatGPT tie-in, or even create a calendar entry when you photograph something with dates and times.

iOS 26 extends those capabilities to onscreen searches. All you have to do is take a screenshot, and the same Visual Intelligence commands you use with your iPhone's camera appear next to the screenshot, simplifying searches or calendar entry creation.

The restrictions on the current version of Visual Intelligence carry over to iOS 26's updated version: you'll need an iPhone that supports Apple Intelligence to use this tool. But if you have a compatible phone, you'll gain new search capabilities that are only a screenshot away.

Here's what you'll see when you try out the updated Visual Intelligence, whether you've downloaded the iOS 26 developer beta or you're waiting for the public beta to arrive this month to test out the latest iPhone software.

What's new in Visual Intelligence in iOS 26

You can still use your iPhone camera to look up things with Visual Intelligence in iOS 26. But the software update extends those features to onscreen images and information captured via screenshots.

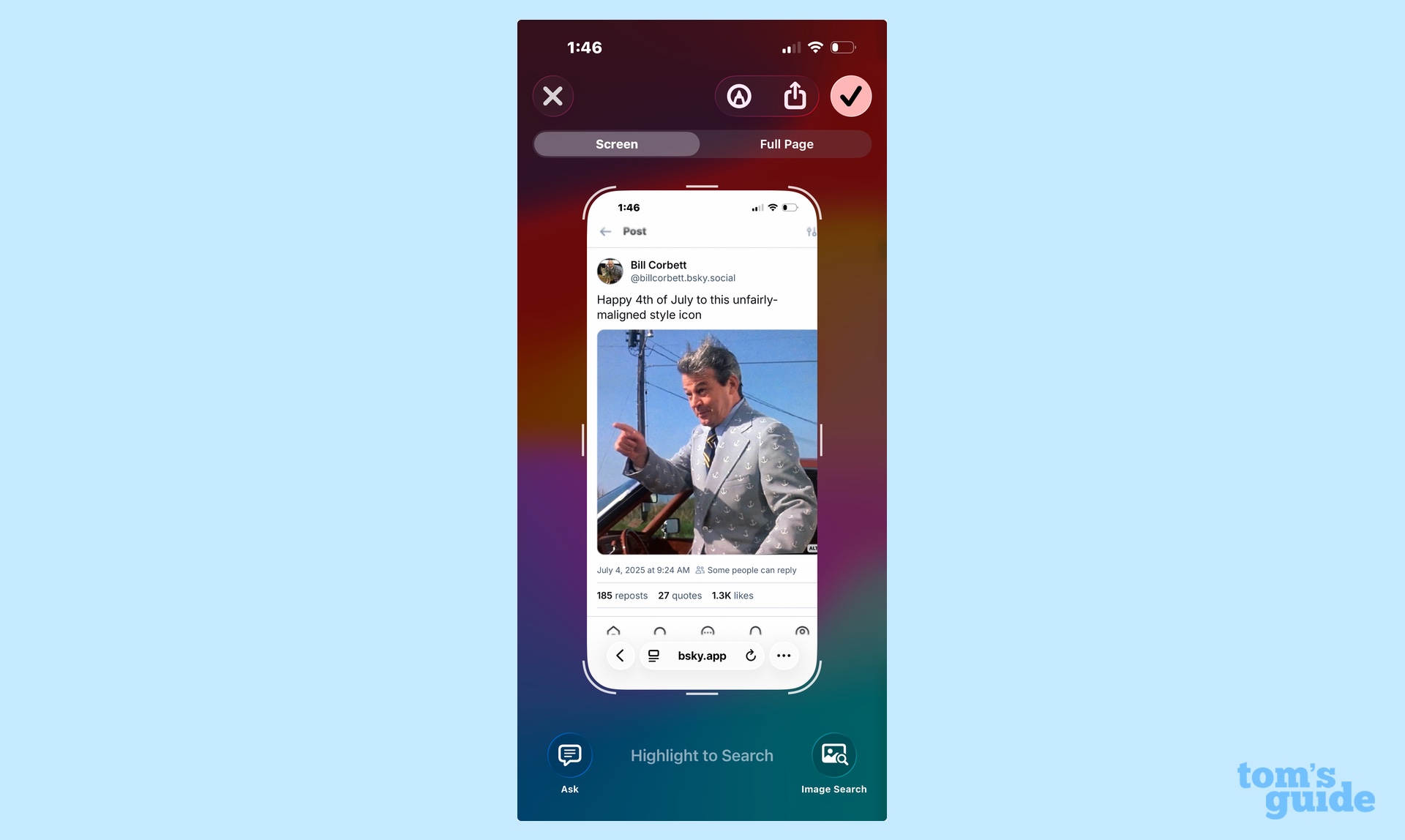

Simply take a screenshot of whatever grabs your interest on your iPhone screen — as you likely know, that means pressing the power button and top volume button simultaneously — and a screenshot will appear as always. You can save it as a regular screenshot by tapping the checkmark button in the upper right corner and saving the image to Photos, Files or a Quick Note. Next to that checkmark are tools for sharing the screenshot and marking it up.

But you'll also notice some other commands on the bottom of the screen. These are the new Visual Intelligence features. From left to right, your options are Ask, Add to Calendar and Image Search.

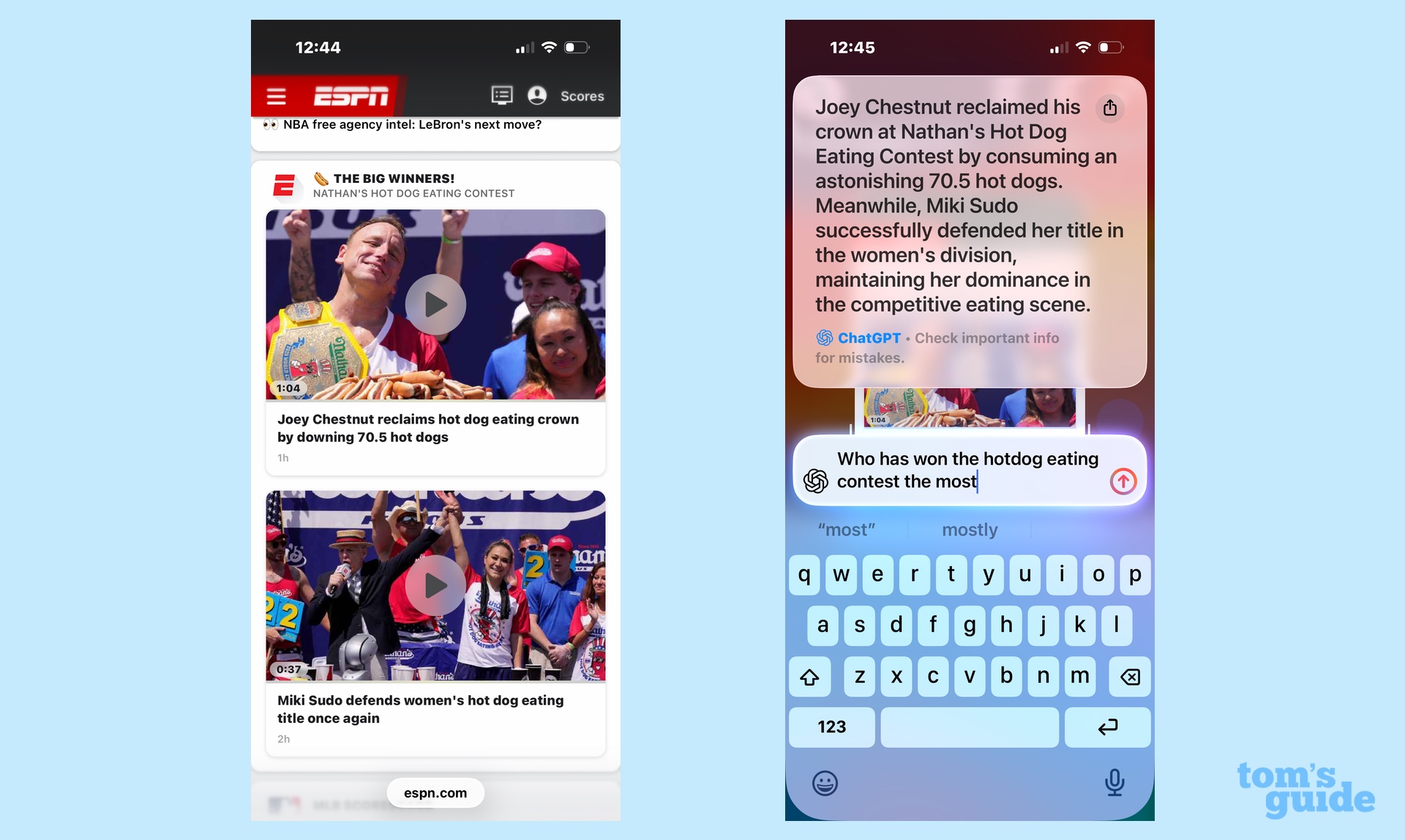

Ask taps into ChatGPT's knowledge base to summon up more information on whatever is on your screen. There's also a search field where you can enter a more specific question. For instance, I took a screenshot of the ESPN home page featuring a photo of the hot-dog eating contest that takes place on the Fourth of July and used the Ask button to find out who has won the contest the most times.

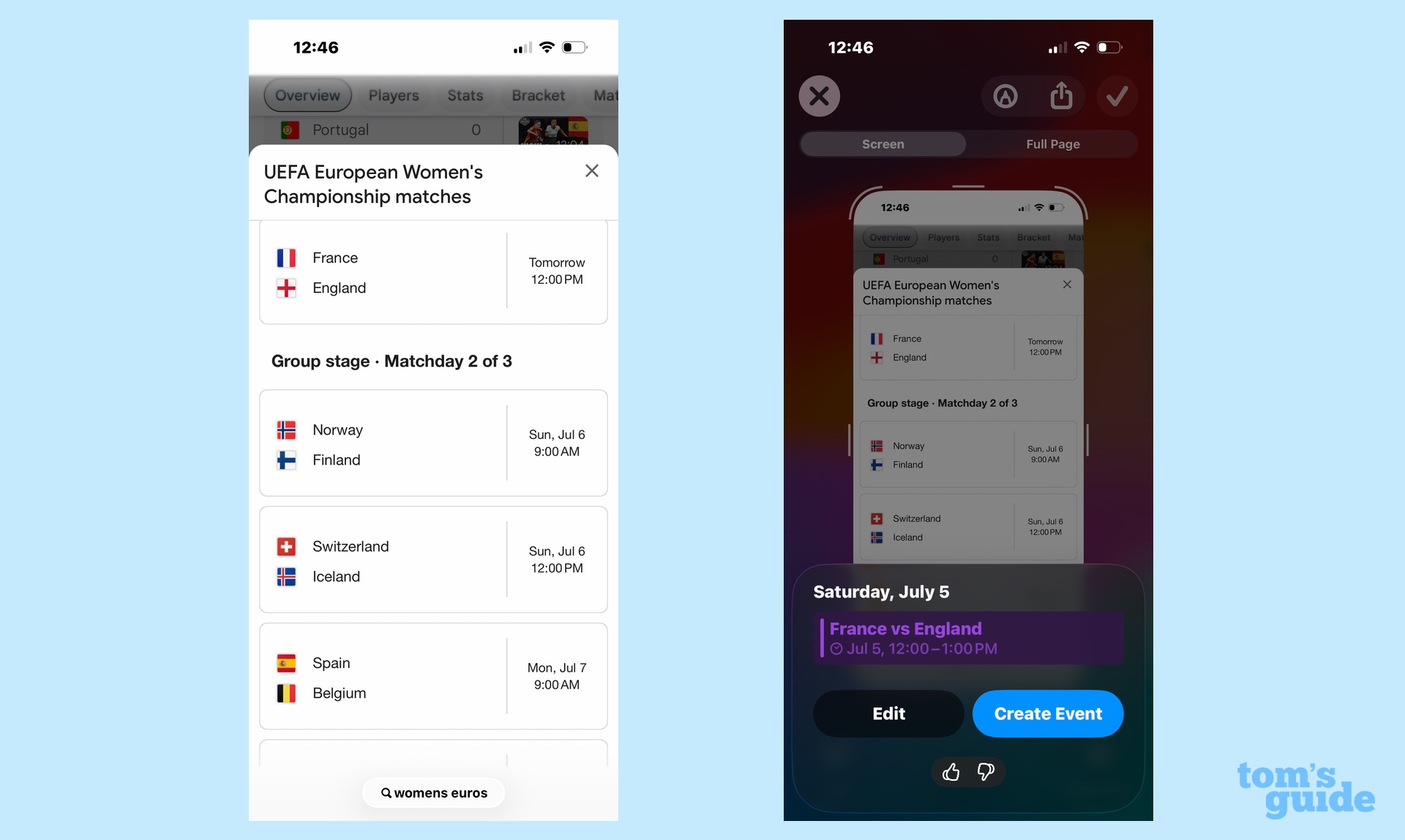

Add to Calendar pulls time and date information off of your iPhone screen and auto-generates an entry for the iOS Calendar app that you can edit before saving. (And a good thing, too, as Visual Intelligence doesn't always get things right. I'll discuss that in a bit.) With the Add to Calendar feature, I could look up schedules for the UEFA Women's European Championship and block out the matches that I wanted to watch on my calendar.

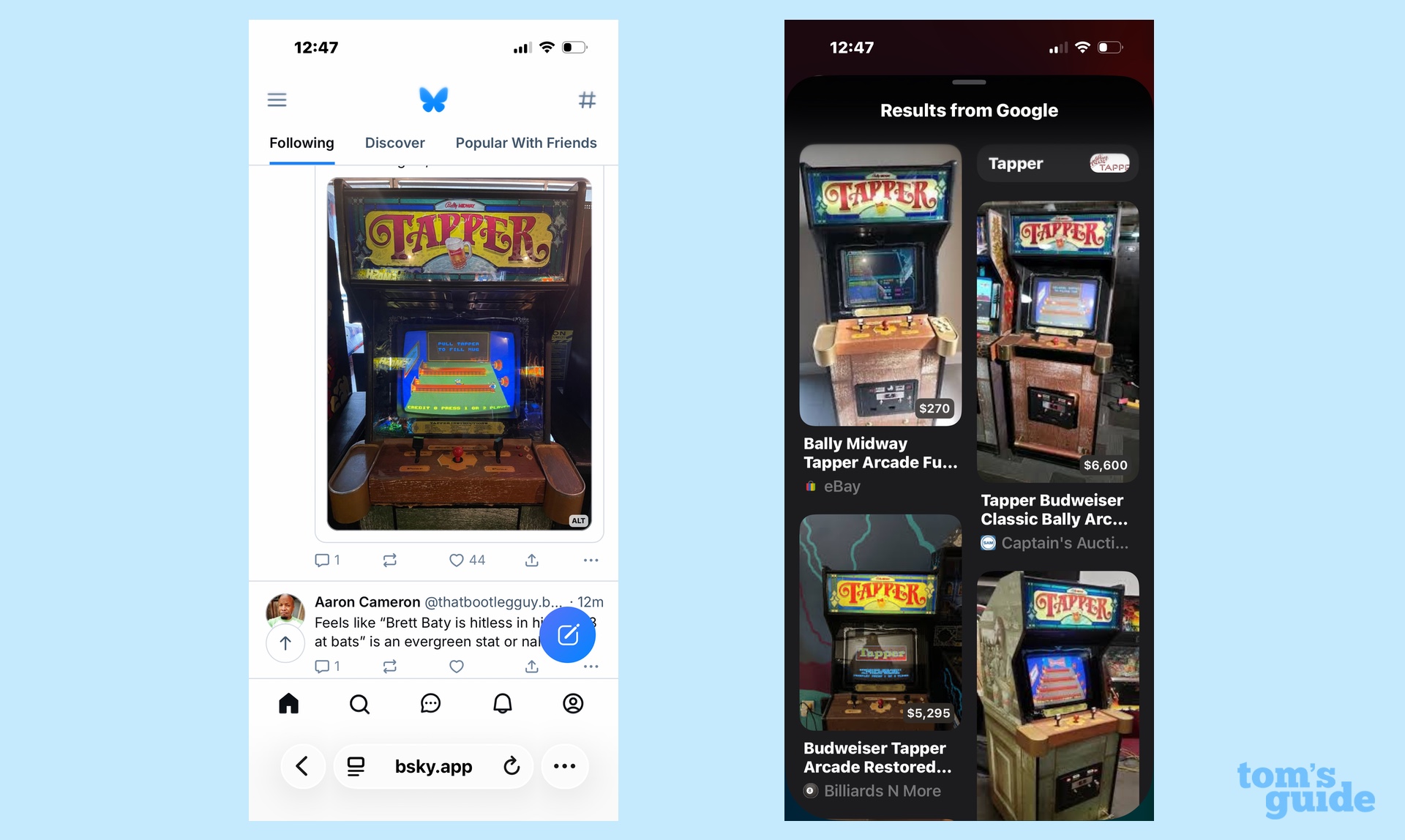

Image Search is pretty straightforward. Tap that command, and the AI will launch a Google search for whatever image is in your screenshot. In my case, that was an old Tapper arcade game console, just in case I've got more money and nostalgia than sense.

For the most part, the Visual Intelligence searches I've described above used the whole screen, but you can also highlight the specific thing you want to search for with your finger, much like you can with the Circle to Search feature now prevalent on Android devices. I highlighted the headline of a Spanish newspaper and got an English translation. Yes, Visual Intelligence can translate text in screenshots, too, just as it can when you use your camera as a translation tool.

iOS 26 Visual Intelligence impressions

It's important to remember that Visual Intelligence's new tools are in the beta phase, just like the rest of iOS 26. So you might run into some hiccups when using the feature.

For example, the first time I tried to create a calendar entry for a UEFA Women's European Championship match, Visual Intelligence tried to create an entry for the current day instead of the day the match was actually scheduled.

When this happens, make liberal use of the thumbs up/thumbs down icons that Apple uses to train its AI tools. I tapped thumbs down, selected the Date is Wrong option from a list provided on the feedback screen and sent it off to Apple. I don't know if my feedback had an immediate effect, but the next time I tried to create a calendar event, the date was auto-generated correctly.

You can capture screenshots of your Visual Intelligence results, but it's not immediately intuitive how to save those screens. Once you've taken your screenshot, swipe left to see the new shot, and then tap the checkmark in the upper corner to save everything. It's something I'm sure I'll get used to over time, but it feels a little clunky after years of taking screenshots that just automatically save to the Photos app.

It's an effort worth making, though. As helpful as the Visual Intelligence features have been, remembering to use your camera to access them isn't always the most natural thing to do. Taking a screenshot is more immediate, putting Visual Intelligence's capabilities literally at your fingertips.

Philip Michaels is a Managing Editor at Tom's Guide. He's been covering personal technology since 1999 and was in the building when Steve Jobs showed off the iPhone for the first time. He's been evaluating smartphones since that first iPhone debuted in 2007, and he's been following phone carriers and smartphone plans since 2015. He has strong opinions about Apple, the Oakland Athletics, old movies and proper butchery techniques. Follow him at @PhilipMichaels.
