20 million ChatGPT logs are to be shown in court – is there an answer to AI's vast data collection?

Using big-name AI chatbots can have devastating consequences for your data privacy

OpenAI, the company behind ChatGPT, has been ordered to turn over 20 million ChatGPT logs in a US court as part of an AI copyright case.

The logs are reportedly "de-identified," but serious questions are being raised about the privacy impact this has on ChatGPT users and the vital importance of clear logging policies.

For example, all the best VPNs operate strict no-logs policies. This means that even if they receive a court order, no sensitive personal data can be shared. This is essential for software that has access to such a large amount of your personal information and internet traffic.

AI chatbots like ChatGPT don't offer the same protections. Almost all AI companies store your chat conversations in some way, which compromises your data privacy and means your data could appear in court. Some companies may even use your chats to train their LLMs.

It's not just chat logs. AI chatbots collect vast amounts of information, including your device, location, and identifiers. Research has also shown that AI chatbots can infer information about you based on the prompts you enter, even if you haven't shared specifics.

The simplest way to protect your data from AI companies is not to use their products in the first place. However, with AI permeating many work sectors, avoiding it is near-impossible. In these cases, choosing a local LLM over a cloud LLM is a reliable way of protecting your information from data-hungry AI corporations. And, as always, be careful about what sensitive information you input into any online tool.

20 million logs to be handed over

According to reports, OpenAI has been ordered to hand over 20 million "de-identified" ChatGPT logs as part of an ongoing copyright case. It isn't clear how de-identified the logs are, but that's not the main issue. The problem is there are logs for OpenAI to hand over in the first place.

The figure initially stood at 120 million, showing just how much data ChatGPT collects and stores.

ChatGPT, along with other AI chatbots, collects vast amounts of personal information, including chat history. OpenAI's privacy policy states it collects log and usage data, as well as device information, cookies, and analytics.

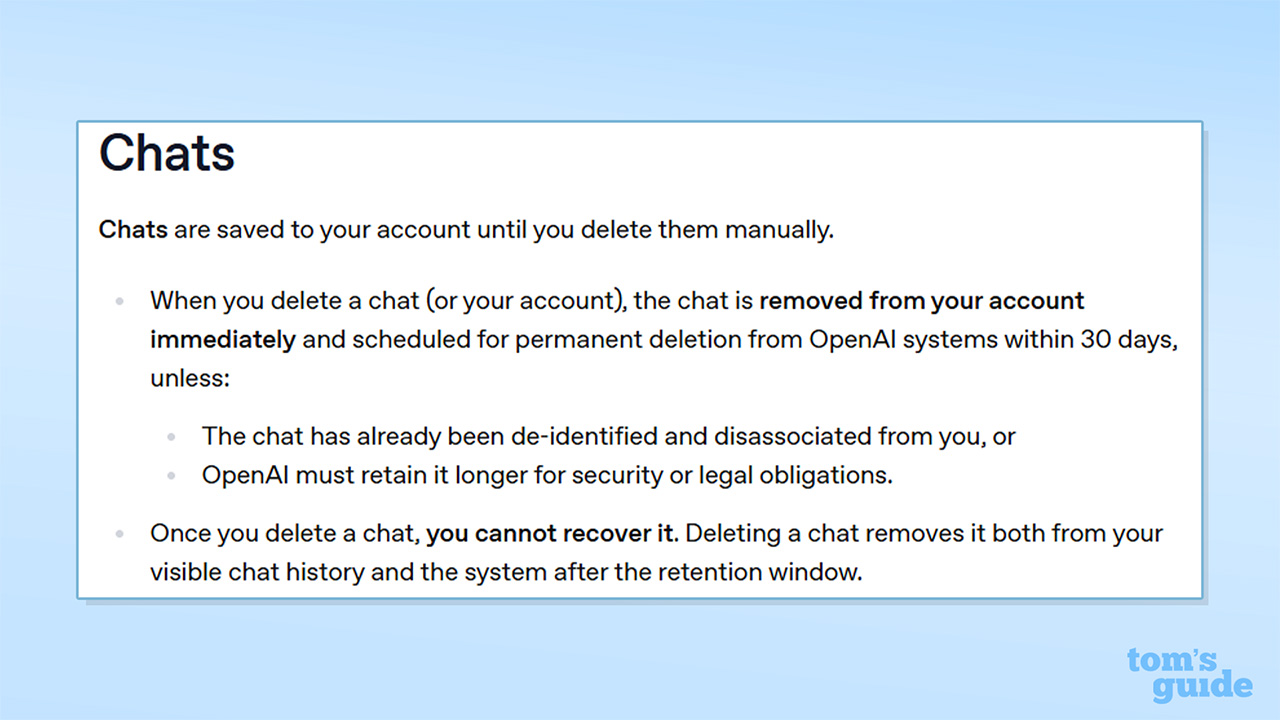

Its Chat and File retention policy states "chats are saved to your account until you delete them manually." Deleted chats, and temporary chats, are "automatically deleted" from OpenAI's systems after 30 days.

In a previous court case involving the New York Times, OpenAI had been ordered to retain "consumer ChatGPT content indefinitely" – these obligations ended in September 2025. OpenAI argued the order contradicted its privacy practices, saying trust and privacy are "at the core" of its products.

However, it isn't clear if the 30-day deletion period applies to chats users haven't manually deleted. The privacy policy also states the 30-day deletion period doesn't apply if "the chat has already been de-identified and disassociated from you" or if "OpenAI must retain it longer for security or legal obligations."

OpenAI's privacy policy says it retains your personal information for as long as it needs in order to provide its service. It may also disclose this information to affiliates and third-parties.

Dr Ilia Kolochenko, CEO at ImmuniWeb, commented on the case. He said: "This case is a telling reminder that – regardless of your privacy settings – your interactions with AI chatbots and other systems may, one day, be produced in court."

He added that "even if some user-facing systems are specifically configured to delete chat logs and history, some others may inevitably preserve them in one form or another."

Dr Kolochenko warned users to be aware of what they're inputting into chatbots. He said "in some cases, produced evidence may trigger investigations and even criminal prosecution of AI users." He concluded by saying AI users should "think twice" prior to entering chats or "testing its guardrails ... otherwise, legal consequences may be pretty serious and long-lasting."

A lesson to be learned from VPNs

VPN providers are no strangers to court summons and requests to hand over data. The crucial difference between VPNs and AI chatbots is that one relies on no-logs policies, and one doesn't.

Strict no-logs policies ensure quality VPNs never collect, store, or share your sensitive personal data. In fact, we would never recommend using a VPN that does not have a no-logs policy.

There is an important difference between no-logs and zero-logs policies. With a no-logs policy, data such as connection timestamps or application data may still be collected, as well as information that can help improve the app experience, such as crash reports. Zero-logs policies mean no data is collected about you at all. However, true zero-logs VPNs are very rare – if they exist at all.

With a no-logs policy, the most private VPNs don't log "identifying information," which includes your IP address, browsing history, session length, and location. No sensitive data is linked to your identity or activity. This means that when law enforcement comes calling, there's nothing to hand over.

Many VPNs undergo third-party audits to verify their logging policies. However, having a no-logs policy proved in court is arguably more reassuring than an audit. For example, Private Internet Access (PIA) has had its no-logs policy proven in court twice.

Windscribe boss Yegor Sak found himself in a Greek court in April 2025 after a Windscribe VPN user was alleged to have committed a crime. Windscribe's no-logs policy meant no data on the individual could be handed over and the case was thrown out. Windscribe's case demonstrated the importance of no-logs policies first-hand.

Many leading VPNs publish regular transparency reports which detail the number of data requests they receive from various law enforcement agencies and how much data they hand over. Spoiler alert: the answer is always zero.

Protecting your privacy

With some AI chatbots collecting up to 90% of user data types, the easiest way to protect your privacy is simply not using LLMs. But this might not be practical for existing AI users.

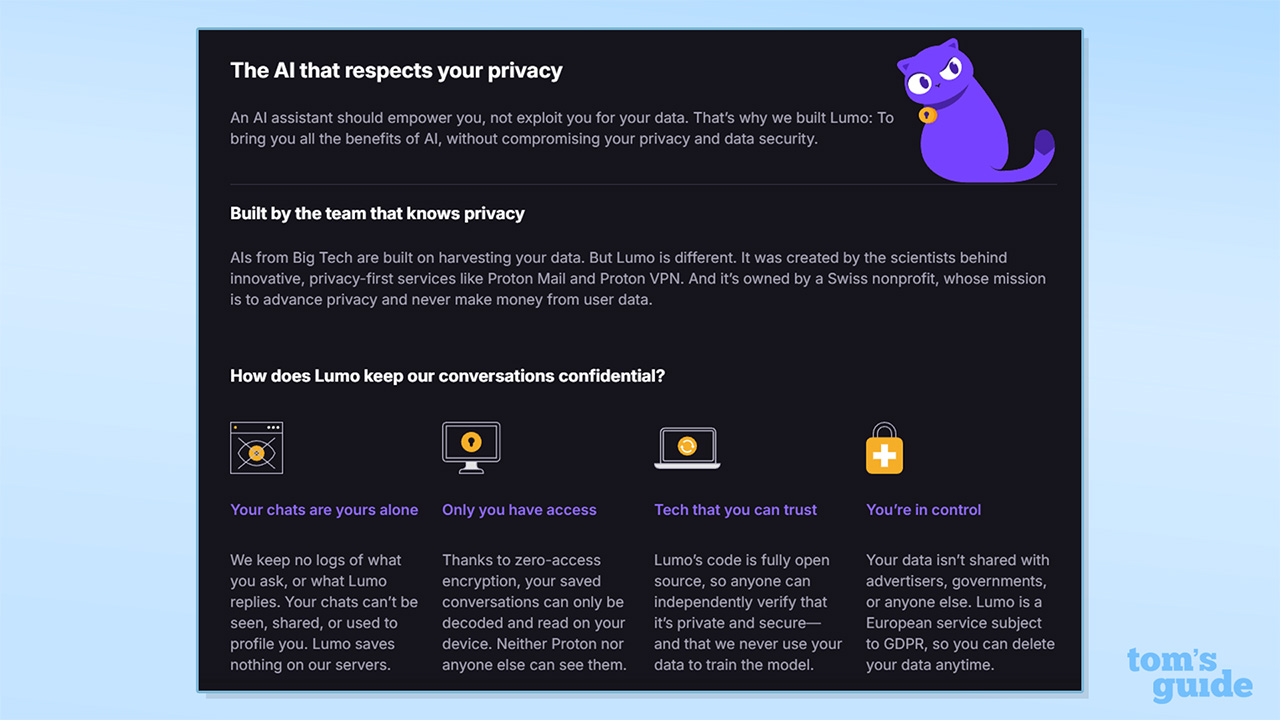

If you do choose to use an AI chatbot, we'd encourage you to use a privacy-focused AI chatbot such as Proton's Lumo. Lumo is a "local AI," which means everything is handled on your device, as opposed to through data centers, like ChatGPT and Gemini. Chats are also end-to-end encrypted and, as a result, your data is never seen by Proton, or any other third party – with an added bonus that local AI is more environmentally friendly than cloud AI.

Opera's browser can also support local AI, and aims to protect your privacy while giving access to cutting-edge technology. However, it's worth noting that these local AI chatbots may not be as powerful as their big-name counterparts, and often require frequent updates.

As with any online service, always read and understand the privacy policy before sharing any data. Ensure you're aware of what information is being collected and how it's being used.

VPNs on their own won't protect you from data-hungry AI chatbots. But signing up to a VPN can be part of a wider series of actions that allow you to understand and take control of your data privacy in 2026.

We test and review VPN services in the context of legal recreational uses. For example: 1. Accessing a service from another country (subject to the terms and conditions of that service). 2. Protecting your online security and strengthening your online privacy when abroad. We do not support or condone the illegal or malicious use of VPN services. Consuming pirated content that is paid-for is neither endorsed nor approved by Future Publishing.

George is a Staff Writer at Tom's Guide, covering VPN, privacy, and cybersecurity news. He is especially interested in digital rights and censorship, and its interplay with politics. Outside of work, George is passionate about music, Star Wars, and Karate.
