Opera brings AI to the browser — now you can engage with chatbots on your laptop

Run an LLM locally

Opera has become the first web browser maker to provide built-in support for a wide range of locally running artificial intelligence models.

The Opera One browser now has “experimental support” for 150 local large language models (LLMs) from 50 different model families.

These include major local or open source LLMs such as Meta’s Llama, Google’s Gemma, Mixtral from Mistral AI, Vicuna and many more.

In doing so, Opera has outflanked AI-savvy browsers like Microsoft Edge. The latter offers two AI integrations in the form of Bing and Copilot, neither of which runs locally.

What this means for Aria

This news means Opera One users will be able to swap the proprietary Opera-built Aria AI service for a more popular, third-party LLM. And they’ll be able to run these LLMs locally on their computer, meaning their data won’t be transferred to a remote server. The models will also all be accessible from a single browser.

Local LLMs are not only great for privacy-conscious AI lovers but also for those who want to access AI models without an internet connection. That could be handy if you want to use LLMs while travelling.

“Introducing Local LLMs in this way allows Opera to start exploring ways of building experiences and knowhow within the fast-emerging local AI space,” said Krystian Kolondra, EVP of browsers and gaming at Opera.


Accessing local LLMs on Opera

While this is an exciting update for any AI lover, it’s currently an experimental feature and not widely available yet. Initially, it’ll only be available through the developer stream of Opera’s AI Feature Drops Program.

Launched last month, the program allows early adopters to get the first taste of Opera’s latest AI features. Luckily, it’s not exclusive to developers and can be accessed by anyone.

Testing this feature will require the latest version of Opera Developer and 2-10GB of computer storage for a single local LLM, which will need to be downloaded onto the machine.
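Before downloading a model, it may be worth checking that the machine has enough free space. The snippet below is a minimal sketch in Python, not anything Opera provides: the function names are hypothetical, the 2GB and 10GB figures are simply the range quoted above, and it checks the drive holding the current folder, which may not be the drive where Opera stores its models.

```python
import shutil

# Decimal gigabyte. This is an assumption: the article does not say
# whether its 2-10GB figure uses decimal or binary units.
GB = 1000 ** 3


def has_room_for_model(free_bytes: int, model_size_gb: float) -> bool:
    """Return True if free_bytes is enough to hold a model of model_size_gb."""
    return free_bytes >= model_size_gb * GB


def free_bytes_at(path: str = ".") -> int:
    """Return the free space, in bytes, on the drive that contains path."""
    return shutil.disk_usage(path).free


if __name__ == "__main__":
    free = free_bytes_at(".")
    print(f"Free space: {free / GB:.1f} GB")
    # Check both ends of the size range quoted in the article.
    for size_gb in (2, 10):
        verdict = "fits" if has_room_for_model(free, size_gb) else "does not fit"
        print(f"A {size_gb} GB model {verdict}")
```

Since each local LLM needs its own download, anyone planning to switch between several models would need to add their sizes together before comparing against the free space.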

Local LLMs include:

- Llama from Meta

- Vicuna

- Gemma from Google

- Mixtral from Mistral AI

- And many more families

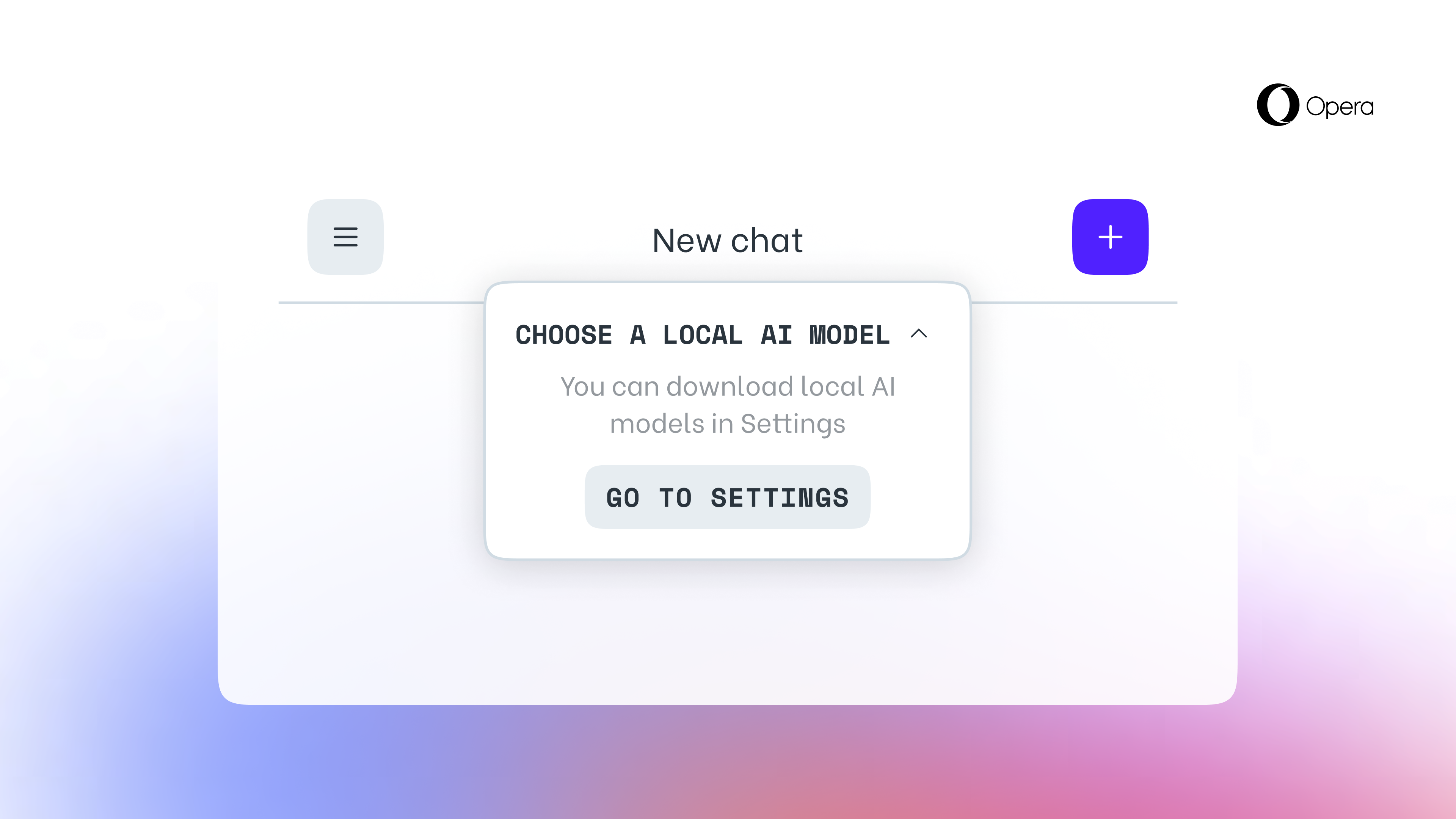

Using local LLMs within Opera One is pretty simple. After loading up the browser, go into the side panel and choose Aria.

You’ll see a drop-down menu at the top of the chat window - press this, and then click on the option that says “choose local AI model”.

A little box will then appear on the screen with a button titled “go to settings”. From there, you can select one of the 150 LLMs from 50 families.

After choosing a local LLM, the next step will be to download it by clicking on the button with a downward-facing arrow. Then, click the button with three lines at the top of the screen, and click the “new chat” button. You can then switch between different LLMs via the “choose local AI model” button.


Nicholas Fearn is a freelance technology journalist and copywriter from the Welsh valleys. His work has appeared in publications such as the FT, the Independent, the Daily Telegraph, The Next Web, T3, Android Central, Computer Weekly, and many others. He also happens to be a diehard Mariah Carey fan!
