How to make and run your own AI models for free

Installing AI on your local computer can save you time and money, and offer total privacy.

When most people think about AI, they tend to focus on ChatGPT, in the same way that search means Google. But there's a large AI world outside of chatbots which is definitely worth exploring.

One example is the growing community of people using AI models on their home computer, instead of using third-party cloud services like OpenAI or Google Gemini.

But why would I do that, you ask? Well, for one thing, you get to control every aspect of the AI you’re using, from the choice of model to the subject matter you use it for.

Everything runs completely offline with no internet needed, which means there's no problem keeping your stuff private. It also means you can do your AI work anytime, anyplace, even without a WiFi connection.

There’s also the question of costs, which can mount up quickly if you use commercial subscription services for larger projects. By using your own local AI model, you may have to put up with a less powerful AI, but the trade-off is that it costs pennies in electricity to run.

Finally, Google has just started running advertising in its Gemini chatbots, and it’s likely that other AI suppliers will soon follow suit. Intrusive ads when you’re trying to get stuff done can get boring very fast.

How to get started

There are three main components to getting your local AI up and running. First, you need the right type of computer. Second, you’ll have to select the right AI model. Finally, there's the matter of installing the right software to run your model. Oh, and you’ll also need a little bit of know-how to tie it all together.

1. The computer

Whole books could be written (and probably will be) on choosing the right computer to run your local AI. There’s a huge number of variables, but for a simple starter, only three things matter: the total amount of RAM, your CPU processing power, and storage space.

The basic rule of thumb is you should use the most powerful computer you can afford, because AI is power mad. But it’s not only about the processor; just as important is the amount of memory (RAM) you have installed.

The bottom line: forget about using your old Windows XP machine. You’re going to need a modern computer with at least 8GB of RAM, a decent graphics card with at least 6GB of VRAM, and enough storage space (preferably SSD) to hold the models you’ll be using. The operating system is irrelevant; Windows, Mac, or Linux are all fine.
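As a rough illustration, those minimums can be written down as a simple checklist. The sketch below is a minimal Python example and not an official tool: the function name is made up for this article, the 20GB storage figure is an assumption (the only guidance given is "enough storage"), and you type in your own machine's specs by hand.

```python
# Minimum specs from the paragraph above. The storage figure is an assumption.
MIN_RAM_GB = 8
MIN_VRAM_GB = 6
MIN_FREE_STORAGE_GB = 20  # assumed: room for a few mid-sized models


def hardware_shortfalls(ram_gb, vram_gb, free_storage_gb):
    """Return a list of problems; an empty list means the machine meets the minimums."""
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb}GB is below the {MIN_RAM_GB}GB minimum")
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM: {vram_gb}GB is below the {MIN_VRAM_GB}GB minimum")
    if free_storage_gb < MIN_FREE_STORAGE_GB:
        problems.append(
            f"Storage: {free_storage_gb}GB free is below the assumed "
            f"{MIN_FREE_STORAGE_GB}GB minimum"
        )
    return problems


# Example: plenty of RAM and disk space, but a weak graphics card.
for line in hardware_shortfalls(ram_gb=16, vram_gb=4, free_storage_gb=250) or ["Good to go"]:
    print(line)
```

A machine that falls short on one item may still run small models, just more slowly, so treat the result as a guide and not a verdict.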

The reason these things matter is that the more power your computer has, the faster the AI will run. With the right configuration, you’ll be able to run better, more powerful AI models at a faster speed. That’s the difference between watching an AI response stutter across the screen one painful letter at a time, or whole paragraphs of text scrolling down in milliseconds.

It’s also the difference between being able to run a half-decent AI model that doesn’t hallucinate, and delivers professional quality answers and results, or running a model that responds like a village idiot.

Quick Tip: If you bought your computer within the last two to three years, chances are you’ll be good to go, as long as you have enough RAM, especially if it’s a gaming computer.

2. The model

AI model development is moving so fast this section will be out of date by next week. And I’m not joking. But for now, here are my recommendations.

The number one rule is to match the size of your chosen model to the capacity or capabilities of your computer. So if you have the bare minimum RAM and a weak graphics card, you’ll need to select a smaller, less powerful AI model, and vice versa.

Quick Tip: Always start with a model that is smaller than your RAM size and see how that works. For example, if you have 8GB of RAM, select a model which is around 4 to 5GB in size. Employing trial and error is a good idea at this point.
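To make that rule of thumb concrete, here is a minimal Python sketch that filters a list of models by size. Stretching the "4 to 5GB for 8GB of RAM" example into a general "about five-eighths of your RAM" limit is an assumption on my part, and the model names and sizes in the catalogue are hypothetical placeholders, not real downloads.

```python
# Rule of thumb from the tip above: with 8GB of RAM, pick a model of roughly 4 to 5GB.
# Generalising that to "about 5/8 of RAM" is an assumption, not a hard limit.
RAM_FRACTION = 5 / 8


def largest_model_gb(ram_gb):
    """Upper size limit (in GB) for a model on a machine with ram_gb of RAM."""
    return ram_gb * RAM_FRACTION


def models_that_fit(models, ram_gb):
    """models maps name -> file size in GB. Returns fitting names, largest first."""
    limit = largest_model_gb(ram_gb)
    fitting = [name for name, size in models.items() if size <= limit]
    return sorted(fitting, key=lambda name: models[name], reverse=True)


# Hypothetical catalogue; real model names and sizes will differ.
catalogue = {"tiny-model": 2.0, "small-model": 4.7, "medium-model": 9.0}
print(models_that_fit(catalogue, ram_gb=8))
```

The list comes back largest first, so the first entry is the most capable model that should still fit. If it runs sluggishly, drop down to the next one.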

The good news is more and more open source models are coming onto the market which are perfectly tailored for modest computers. What’s more, they perform really well, in some cases as well as cloud-based alternatives. And this situation is only going to get better as the AI market matures.

Three of my personal favorite models right now are Qwen3, DeepSeek and Llama. These are all free and open source to different degrees, and each comes with its own license terms, so check them before using a model for commercial purposes. You can find a list of all the available open source AI models on Hugging Face. There are literally thousands of them in all sizes and capabilities, which can make it hard to select the right version, which is why the next section is crucial.

3. The software

There are a lot of great apps on the market, called wrappers or front ends, which make it easy to run the AI models. You just need to download and install the software, select the model you want to use, and off you go. In most cases, the app will warn you if the model you’ve selected won’t run properly on your computer, which makes the whole thing a lot less painful. Here are a couple of my personal favorites.

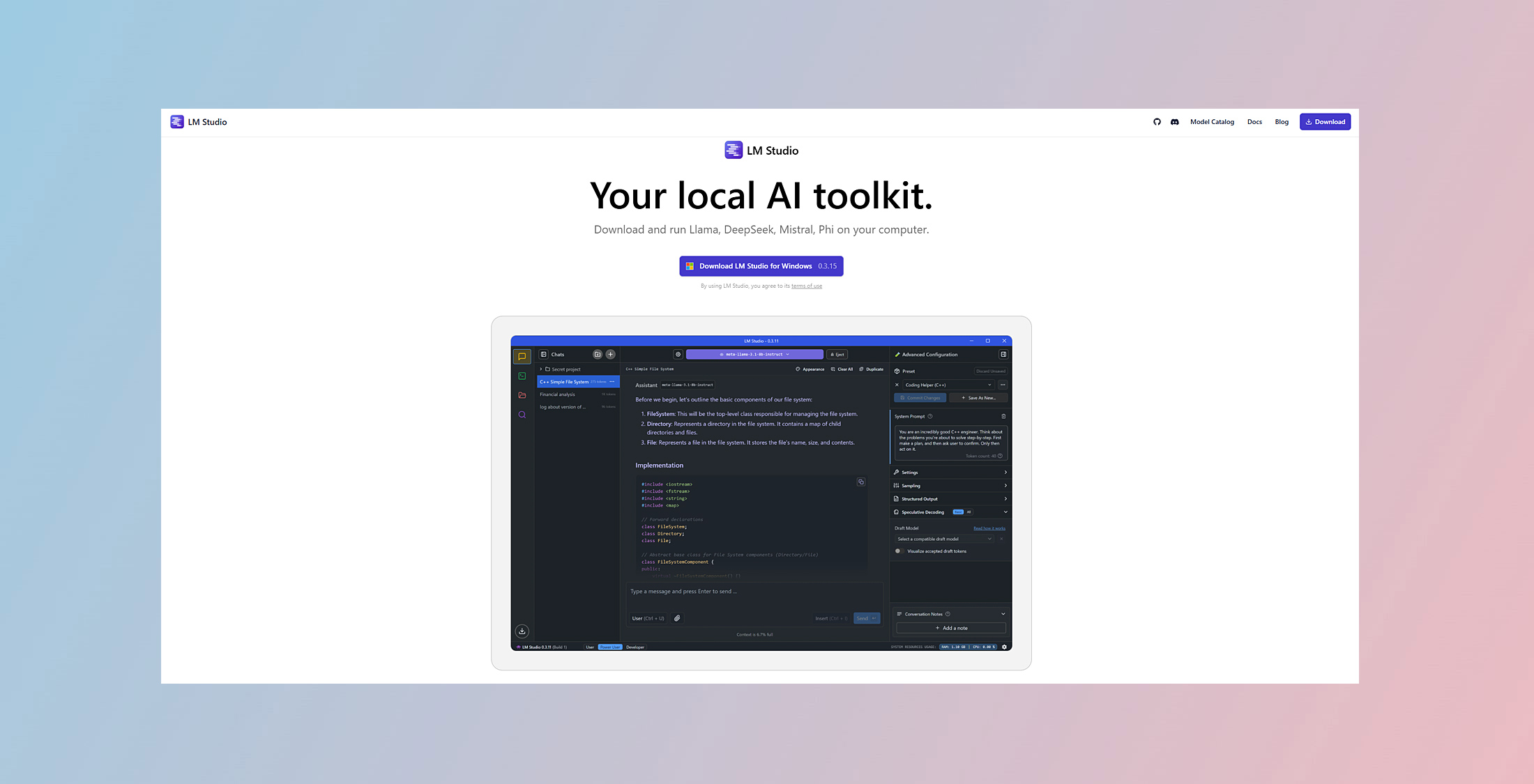

LM Studio

LM Studio is a free proprietary app that is an easy way to use models on your computer. It runs on Windows, Mac and Linux, and once installed, you can instantly add a model by doing a search and selecting the best size. To do this, click on the discover icon in the left sidebar, and you will see a model search page. This scans HuggingFace for suitable models and gives you tons of great information to make the right choice. It's a very useful tool.

PageAssist

My personal favorite free open-source tool for running local models is PageAssist. This is a web browser extension which makes it easy to access any Ollama AI models on your machine. You will need to install the free Ollama program first, and follow the instructions to download models, so this method requires a little more expertise than LM Studio. But PageAssist is a superb way of running local AI models at the press of a button, offering not just chat, but also web search and access to your own personal knowledge base.

Ollama is rapidly becoming the number one way to install and run free open source AI models on any small computer. It's a free model server which integrates with a lot of third-party applications, and can put local AI on a level playing field with the big cloud services.
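Because Ollama is a server, other programs can talk to it directly. As a minimal sketch, the Python below sends a single prompt to Ollama's local HTTP endpoint using only the standard library. It assumes Ollama is running on its default address and that you have already downloaded a model; the helper names `build_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Ollama's default local address; change it if you have configured a different one.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt):
    """Build the HTTP request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt):
    """Send the prompt to the local Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as reply:
        return json.loads(reply.read())["response"]
```

With Ollama running, a call such as `ask("qwen3", "Explain what a local AI model is in one sentence.")` should return the model's answer as plain text. The model name there is an assumption; swap in whichever model you have installed.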

Final Thoughts

There’s no question that local AI models will grow in popularity as computers get more powerful, and the AI tech matures. Applications such as personal healthcare or finance, where sensitive data means privacy is critical, will also help drive the adoption of these clever little offline assistants.

Small models are also increasingly being used in remote operations such as agriculture or places where internet access is unreliable or impossible. More AI applications are also migrating to our phones and smart devices, especially as the models get smaller and more powerful, and processors get beefier. The future could be very interesting indeed.

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the technology industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour.

He has an Honours degree in law (LLB) and a Master's Degree in Business Administration (MBA), and his work has made him an expert in all things software, AI, security, privacy, mobile, and other tech innovations. Nigel currently lives in West London and enjoys spending time meditating and listening to music.
