Intel Shows Off Our Wild Future of VR and Autos

Intel’s CES 2018 keynote discussed the role of data in the future of VR, sports, movies, computing and autonomous transportation.

Intel CEO Brian Krzanich gave the opening keynote to CES 2018, covering a wide range of topics, including the futures of computing, virtual reality, cars and drones.

He opened the show by addressing the recent Spectre and Meltdown vulnerabilities, calling security “job number one” for Intel and the tech industry. He urged users to install system and operating system updates. He said Intel would release patches for up to 90 percent of the chips it has shipped in the last five years within a week, with the rest to follow within a month, and that any performance hits would be worth the added security. (Krzanich came under fire following reports that he sold stock after the company learned about the issues. Intel says the sale was unrelated, and he didn’t comment on it at the keynote.)

Krzanich then turned to data, the theme of the night, which he called one of the big societal shifts that come along only every few decades. And nearly everything in technology generates it.

By 2020, the average person will produce 1.5GB of data per day, he said, but that’s tiny compared to an autonomous car, which he predicts will generate 4TB of data per day. An airplane? That’s 40TB. A smart factory could reach a petabyte.

“Almost everything you see here at CES…” he said, “they all start with data.”

Data in VR, Sports and the Olympic Games

His next topic was “immersive media”: getting realistic content into virtual reality. He said a lack of things to do is what could keep VR from reaching its potential, so Intel set out to solve a massive computing problem with AI and imaging. He discussed Intel’s True VR, which generates sports content by placing cameras around an arena that capture 180- or 360-degree views, then stitching the feeds together so you can view the entire field.

Krzanich then explained the company’s True View technology, which captures the entire viewing area and creates billions of data points called voxels (three-dimensional pixels). The term “voxel,” he said, may become part of everyday speech the way “pixel” has, because content will have depth and volume.

Capturing 3D video like that can produce data at 3 terabytes per minute. A single football game’s first quarter will create more data than all the text in the Library of Congress, Krzanich claimed.

The upcoming Winter Olympic Games will broadcast 30 events live in VR, with Intel as a partner using True VR and True View, he said. Then a giant screen with the same field of view as a VR headset descended from the ceiling, showing off what snowboarding, ice skating, hockey and other winter sports could look like in VR.

Krzanich also touted Intel’s role in eSports, both in streaming and gameplay, as well as in the Ferrari Challenge, which will use data to highlight the most important parts of the race.

Then he homed in on the NFL and fantasy football. He said Intel will create content tailored to individual viewing habits, and the company showed off a solution to the problem of missing the action when there’s too much to watch. In a demo on an Oculus Rift, a representative tracked his entire fantasy team, so he knew when one of his players scored or when a video clip of a player in another game was available. When one scored, he pulled up that game in VR, right on top of the one he was watching. When the clip ended, he returned to the original game.

While most VR sportscasts give you a view from the best seat in the house, Krzanich says True View can show you the game through the eyes of a player. In a clip from a Patriots game, viewers looked through the eyes of quarterback Tom Brady, a rare perspective that no one else gets.

Krzanich brought former Dallas Cowboys quarterback and current broadcaster Tony Romo on stage to commentate on a play seen through the eyes of Ravens quarterback Joe Flacco.

The Immersive Media Future

Krzanich also detailed Intel’s new immersive content studio in Los Angeles, which aims to produce “the future of media.” It has a massive dome with 100 cameras and sensors to capture immersive content. Krzanich showed off the studio’s first capture: a Western scene of a brawl with a horse running around. The footage could circle the fight, slow down hits Matrix-style and zoom in and out, making for a scene far more exciting than the single-camera version he also showed.

Even though the scene was filmed only once, you could watch it from the periphery, from the middle of the brawl, or even through the eyes of the actors themselves. Krzanich then replayed the whole clip from the horse’s point of view.

Jim Gianopulos, CEO of Paramount Pictures, came on stage to announce a partnership with Intel, though no specific films or other projects were announced.

Krzanich and a Linden Lab representative then used an HTC Vive and Sansar, a social VR platform from the makers of Second Life, to tour a virtual representation of Intel’s CES booth. It was overly scripted and quite awkward, but it showed off the kind of social interaction in VR that Facebook CEO Mark Zuckerberg has been promising for a few years now.

The Future of Computing

Krzanich then talked about how computing could change. He discussed neuromorphic computing, which doesn’t rely on a traditional CPU and RAM but instead mimics the brain to make smarter, more efficient systems. Intel’s neuromorphic chip, Loihi, is meant for applications that need intelligence built into the device, and Intel suggests it could first appear in robots and autonomous vehicles. It’s meant to be a more humanlike form of artificial intelligence, because it’s designed to learn and form networks the way we do.

It’s already performing simple object recognition, he said. This is still in the research stage, so don’t expect to start seeing chips in consumer-grade machines anytime soon.

Next up was quantum computing, which he said will solve problems that are impossible today. The additional computing power could quickly model interactions for drug development, he suggested. Krzanich then revealed Intel’s first 49-qubit chip, the most powerful ever.

It’s “coming faster than anyone imagined,” he said.

Going Autonomous

Intel is also looking into safety in autonomous driving, Krzanich said.

“We want to push a platform that will allow autonomous cars to become a reality,” he said. And that means keeping people safe.

He brought on Amnon Shashua, the head of Mobileye, who arrived in an autonomous car from Intel’s self-driving fleet. Nothing on the outside made it look different from any other vehicle.

Yet scanners, lasers and other sensors are built in all over the car, including in the mirrors, sides, bumpers and more. The decision-making platform combines Mobileye’s EyeQ chips and Intel Atom processors.

Shashua said the road ahead involves sensing, mapping, driving policy (planning decisions, such as merging into traffic) and government regulation.

A demonstration video showed the car detecting lane lines on the road to within centimeters. Upcoming partners include Volkswagen, BMW and Nissan. Shashua then promised Krzanich that he could keep the demo car, which was really generous of him. Krzanich said he’ll ride in it and occasionally invite members of the press to commute to work with him in the autonomous vehicle.

He then discussed a company called Volocopter, which is working on a self-driving helicopter. Krzanich says that flying autonomous cars are already in the works and that the tech exists today. He cued up a video that showed him in the Volocopter in an empty warehouse, on his own, with no one touching the controls.

“Everyone will fly in one of these someday,” he said.

Volocopter CEO Florian Reuter came out on stage and said that it’s not a fantasy, and that flight tests have taken place over the last several months. He predicted that air taxis will soon be common and that flights could be affordable.

And if that didn’t seem nuts enough, there it was on stage. It was behind glass for safety, but we got to see it fly live. The landing bounced a little, but the flight was otherwise flawless.

Krzanich also announced Intel’s own drone, the Shooting Star Mini. He said up to 100 of them can be flown indoors by a single pilot, and then a fleet of drones started flying throughout the theater. They danced in a choreographed light show as the theater went dark, creating a beautiful image to close the show. It set a Guinness World Record, he said: the first indoor drone light show with more than 100 drones flying without GPS.

(Data) Rock of Ages

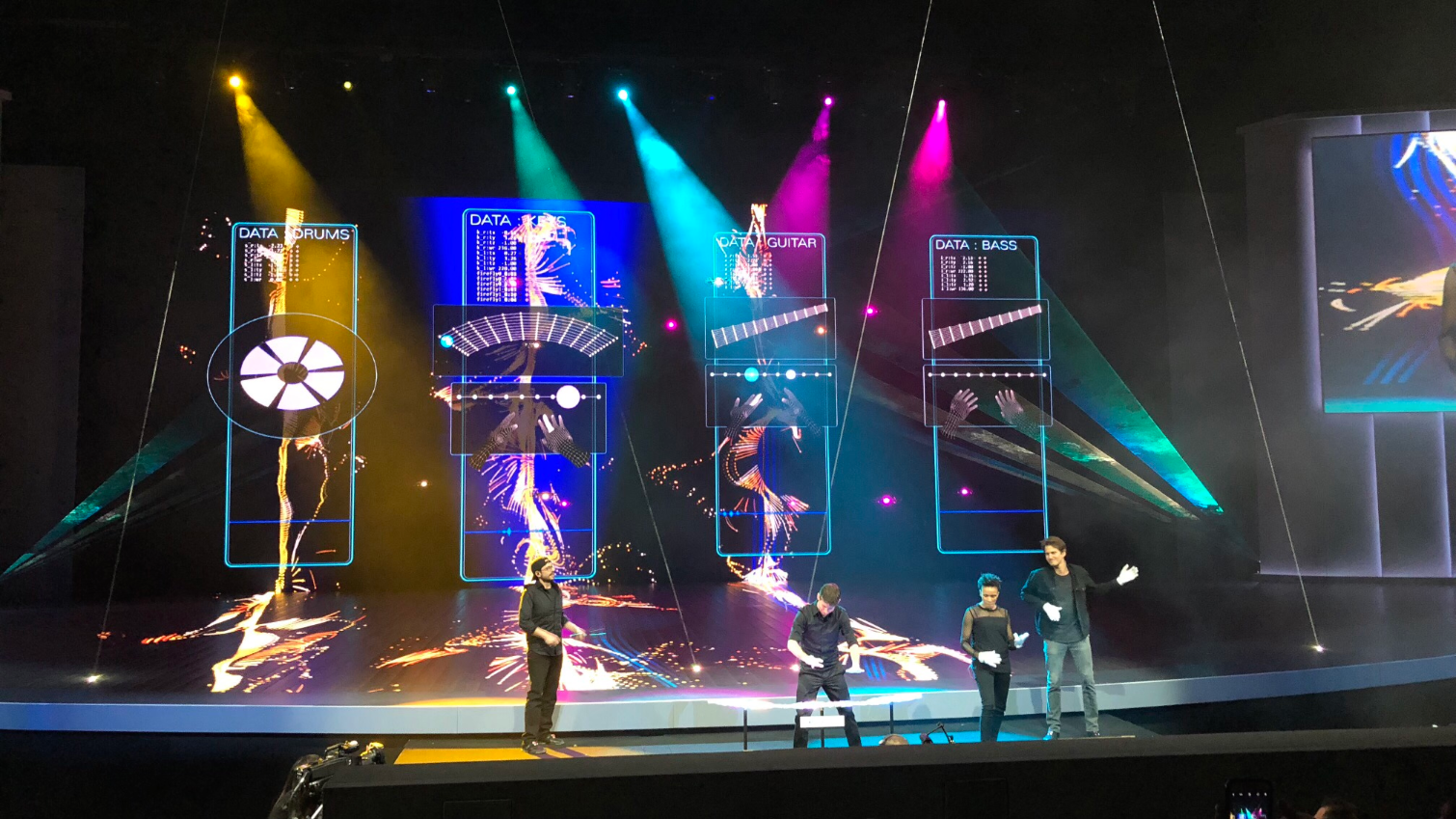

But before the show had even started, things got weird (which, to be fair, is typical for CES). A pre-show featured a “data-only band” playing “algorithm and blues,” using algorithms, drones, computers, RealSense cameras and more to mix technology, music and dance. The human band members either wore motion-sensing gloves that tracked gestures in 3D space or used props that sent data to a computer to produce the music. The set included a cover of The Killers’ “Human,” followed by a swarm of drones hovering over a holographic piano to play “Chopsticks.”

The band also performed with two holographic members, Ella and Miles, powered by an Intel Movidius Neural Compute Stick.

Then, using Intel RealSense cameras, the system tracked a dancer wearing trackers across the stage in real time, shadowing her first with her own wireframe and then with algorithm-based illustrations that moved along with her.

“They don’t even need us anymore,” the lead musician quipped. With all of that data, maybe they don’t.

Andrew E. Freedman is an editor at Tom's Hardware focusing on laptops, desktops and gaming as well as keeping up with the latest news. He holds an M.S. in Journalism (Digital Media) from Columbia University. A lover of all things gaming and tech, his previous work has shown up in Kotaku, PCMag, Complex, Tom's Guide and Laptop Mag among others.
