I test AI for a living — here’s why Google Gemini is a big deal

Gemini has three versions

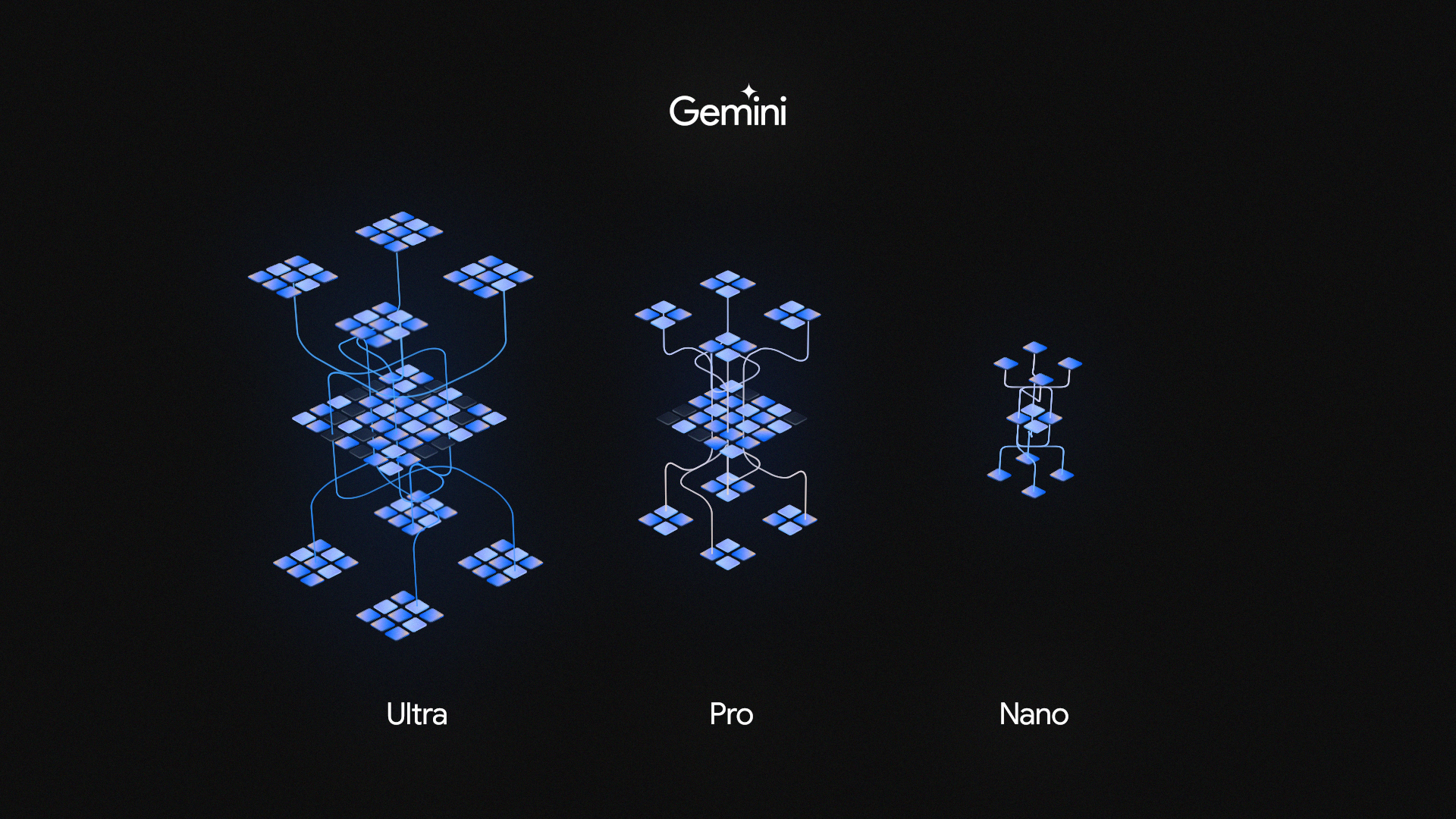

Google has finally unveiled its much-anticipated next-generation artificial intelligence model, Gemini. In a virtual press event, the search giant showed off its multimodal capabilities and revealed it comes in three different sizes: Nano, Pro, and Ultra.

All of the big AI models come in multiple sizes, including GPT-4 from OpenAI and Llama from Meta, so that wasn’t a big surprise, but the way it's being packaged is noteworthy.

The smallest model, Nano, is being sold as an on-device model that will run on Android. This will allow developers to create generative AI tools in their apps without sending data to the cloud.

The mid-tier Gemini Pro is seen as an efficient, capable model along the same lines as GPT-3.5 that can perform well with a smaller computing requirement. Google hinted that Ultra performs as well as, if not better than, GPT-4 and requires the best chips and largest data centers to operate.

Why does this matter?

The world of generative artificial intelligence has changed dramatically over the past year, going from something reserved for research and drip-fed into products to the cornerstone of big tech business plans for the next few years.

Google has been heavily invested in AI research since its founding 25 years ago, including inventing the underlying technology that is the foundation of most large language models.

After the rapid change in the AI landscape, the search giant merged its multiple research divisions to focus on building a new generation of large language models to replace its already powerful PaLM 2 and better compete with OpenAI’s GPT-4.

So why is Gemini different?

The model was designed and trained to be multimodal from the ground up — capable of understanding images, text, code, audio, and video without the need for plugins or fine-tuning. At least that is the case with the Ultra, GPT-4-like version of the AI model.

It has also been trained with improved reasoning capabilities, allowing it to better interpret natural language prompts and respond more accurately. This includes mathematical, programming, and science-related problems.

In a video previewing Gemini, Google showed it watching a video of someone playing a game of hide the ball under the cup, with Gemini accurately determining the correct cup.

In another video, the company revealed Gemini Ultra was capable of reading and analyzing 200,000 research papers over an hour and finding new patterns that would have taken a human researcher weeks.

Here is why I'm excited

What this demonstrates is a broader degree of capabilities when compared to other models. Google explained that it not only outperformed the other leading models in key metrics but also outperformed some of the best humans in a range of academic assessments.

Google argued that, because it was built from day one to be multimodal and see the world the way humans do, it is better able to reason across a range of inputs.

Demis Hassabis, CEO of Google DeepMind, said: “Human beings have five senses, and the world we built, and the media we consume is in those different modalities.” He added: “Gemini can understand the world around us in the way that we do and can absorb any type of input and output. Not just text like most models but also code, audio, image, and video.”

Every technology shift is an opportunity to advance scientific discovery, accelerate human progress, and improve lives. I believe the transition we are seeing right now with AI will be the most profound in our lifetimes, far bigger than the shift to mobile or to the web before it.

Sundar Pichai, Google CEO

While I haven’t used Gemini myself, if the hype is real, and what we were shown in the video demonstrations is accurate, then this is a game-changing new approach to generative AI. This isn’t just a direct competitor to GPT-4 but a new baseline for next-generation AI.

Google’s team described this as Gemini 1.0, heavily suggesting that future versions were already in development and would be significantly more powerful than what is already available, likely coming around the same time as GPT-5 from OpenAI.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
