Google just dropped Gemma 2 — the power of GPT-3.5 on a phone is here

A very small new AI model

Google DeepMind has just dropped a brand new AI model, Gemma 2 2B. It's a 2-billion-parameter model that is small enough to fit onto a smartphone and still offers GPT-3.5 levels of performance.
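
Why is 2 billion parameters small enough for a phone? A rough way to see it is to multiply the parameter count by the number of bytes each parameter takes at a given numeric precision. The Python sketch below does that arithmetic. It assumes a round 2 billion parameters (the real count is a little different) and counts only the weights, ignoring runtime overheads such as the KV cache and activations.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumption: a round 2 billion parameters (the article's figure); the exact
# Gemma 2 2B count differs slightly, and runtime overheads such as the
# KV cache and activations are ignored.

PARAMS = 2_000_000_000

# Bytes used per parameter at common numeric precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Print the estimate for each precision.
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>10}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

Running it gives roughly 8 GB at 32-bit precision, 4 GB at 16-bit, 2 GB at 8-bit and 1 GB at 4-bit. That arithmetic is why quantized builds of small models tend to be the ones aimed at phones, whose memory is shared with the operating system and other apps.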

The launch follows the June announcement of the Gemma 2 9B and 27B models, all of which represent Google's fightback against rivals in the LLM space, particularly Meta and its Llama 3.1 family.

The new Gemma 2 2B model is more evidence of a deliberate shift towards smaller, lighter models that can run on a wider range of devices, not just powerful computers.

Even though the new LLM is a fraction of the size of previous models like OpenAI's GPT-3.5 and GPT-4, its performance in tests reflects the massive improvements in training and compression techniques now on offer.

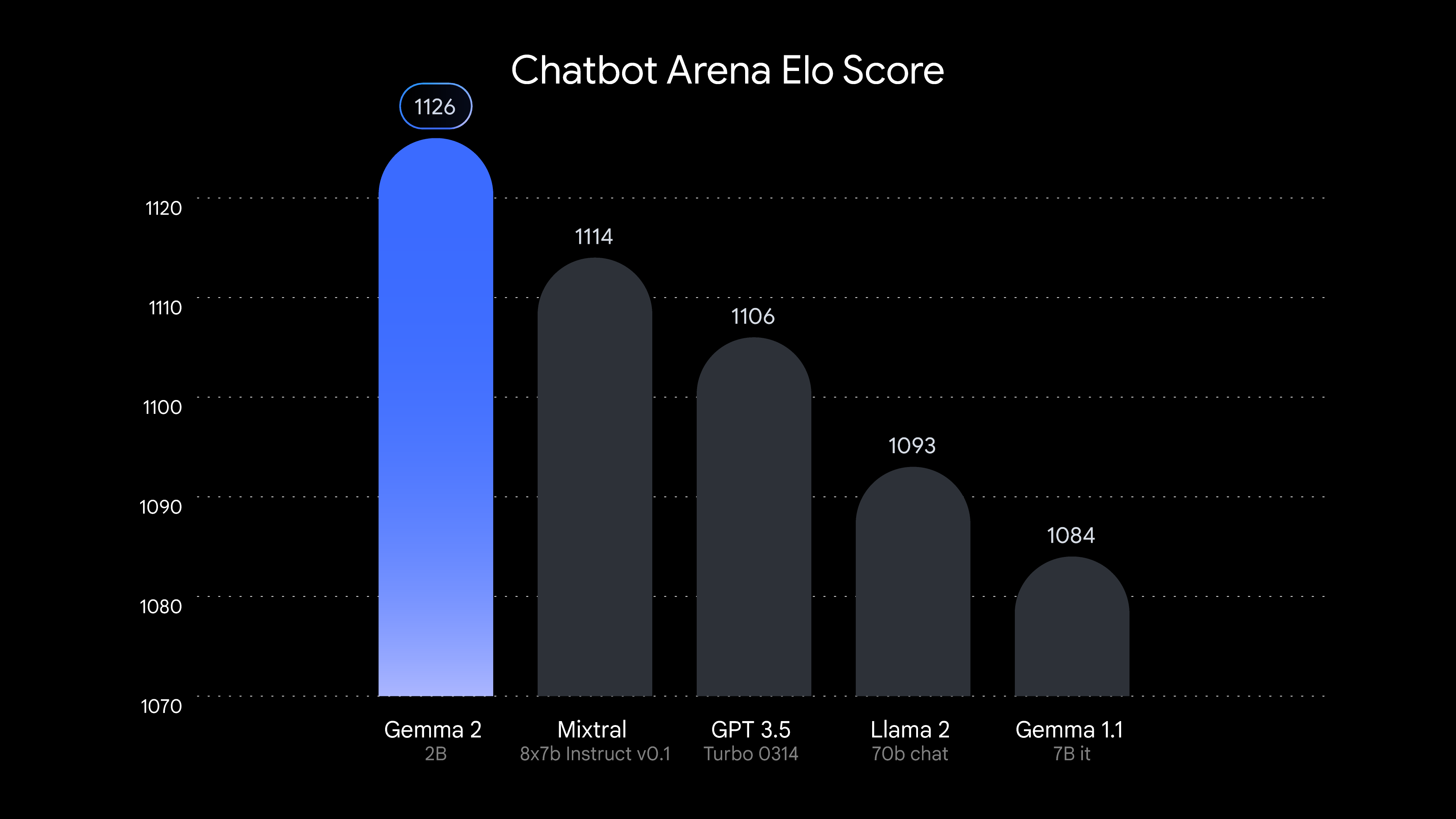

According to Google, the new model offers ‘best-in-class performance for its size, outperforming other open models in its category,’ and the LMSYS Chatbot Arena charts certainly show some impressive stats.

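The model apparently achieves this performance uplift ‘by learning from larger models through distillation.’ The technique, known as knowledge distillation, was popularized in a 2015 paper by Geoffrey Hinton and colleagues. It uses a student-teacher approach to training: a smaller student model is trained to mimic the output probabilities of a larger teacher model, producing a more compact model that keeps much of the teacher's capability.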
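
For readers curious what ‘learning from larger models’ means in practice, here is a minimal Python sketch of the classic loss described in Hinton's 2015 paper, for a single classification example. It is a generic illustration, not Google's Gemma training code (the article doesn't describe that), and the `temperature` and `alpha` values are illustrative assumptions. The student is penalized both for diverging from the teacher's softened probabilities and for missing the correct label.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give flatter distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,  # illustrative value, an assumption
    alpha: float = 0.5,        # illustrative value, an assumption
) -> float:
    """Blend a soft-target term (match the teacher) with a hard-label term.

    alpha weights the soft term and (1 - alpha) weights ordinary cross-entropy.
    The soft term is scaled by temperature**2 so its gradient magnitude stays
    comparable as the temperature changes, as suggested by Hinton et al.
    """
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2

    # Standard cross-entropy against the correct label, at temperature 1.
    student_probs = softmax(student_logits)
    hard_loss = -math.log(student_probs[true_label])

    return alpha * soft_loss + (1 - alpha) * hard_loss


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits
    student = [2.0, 1.5, 0.2]  # hypothetical student logits
    print(distillation_loss(student, teacher, true_label=0))
```

The temperature is the key design choice: dividing the logits by a value above 1 flattens the teacher's distribution, so the student also learns which wrong answers the teacher considered plausible, not just which answer was right.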

The market for mobile and edge-based AI, for use on small portable and static devices such as sensors, is expected to grow exponentially over the coming years. This is especially true of multimodal AI applications, which will use vision and sound to create a rich, interactive experience for the user. Hence the race to perfect smaller, more powerful systems as quickly as possible.

However, despite all the talk of running this type of AI locally on smartphones (aka ‘on-device’), the reality is that very few portable devices can yet cope with the processing demands of current LLMs.

Modern iPhones running at least iOS 14, and recent Android phones with Snapdragon processors such as the Galaxy S23 Ultra, are among those that can. But even then, performance is modest compared with computer-based systems, and Apple Intelligence, which runs mostly on-device, requires at least an iPhone 15 Pro.

So for most people, cloud-based AI is likely to continue to dominate, at least in the short term. But inevitably, as the technology matures, on-device AI is going to take a larger slice of the chatbot pie, especially as users focus more and more on privacy, security and reliability.

Interested readers can try out the new Gemma 2 2B model in the Google AI Studio playground, and it is also available through Ollama to run on-device.
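
For those going the Ollama route, a locally running Ollama server exposes a small HTTP API that scripts can call. The Python sketch below sends one prompt to it using only the standard library. It assumes Ollama is running on its default port (11434) and that the model has been pulled under the `gemma2:2b` tag (for example with `ollama pull gemma2:2b`); check Ollama's model library if the tag differs.

```python
import json
import urllib.error
import urllib.request

# Assumptions: Ollama is running locally on its default port (11434) and the
# Gemma 2 2B weights have been pulled under the "gemma2:2b" tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gemma2:2b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its text reply."""
    req = build_request(prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns a single JSON object whose
    # "response" field holds the generated text.
    return body["response"]


if __name__ == "__main__":
    try:
        print(generate("In one sentence, what is knowledge distillation?"))
    except (urllib.error.URLError, TimeoutError) as err:
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

Setting `"stream": False` keeps the example simple by returning one JSON object; by default Ollama streams the reply as a series of JSON lines, which suits chat interfaces but needs more parsing.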

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the technology industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour.

He has an Honours degree in law (LLB) and a Master's Degree in Business Administration (MBA), and his work has made him an expert in all things software, AI, security, privacy, mobile, and other tech innovations. Nigel currently lives in West London and enjoys spending time meditating and listening to music.