Google made Gemini an 'over cautious' middle manager — leading to inaccurate images and backlash

Gemini was struggling with diversity

Google was hit with complaints and backlash from users this week after its Gemini AI chatbot started to create historically inaccurate pictures of people.

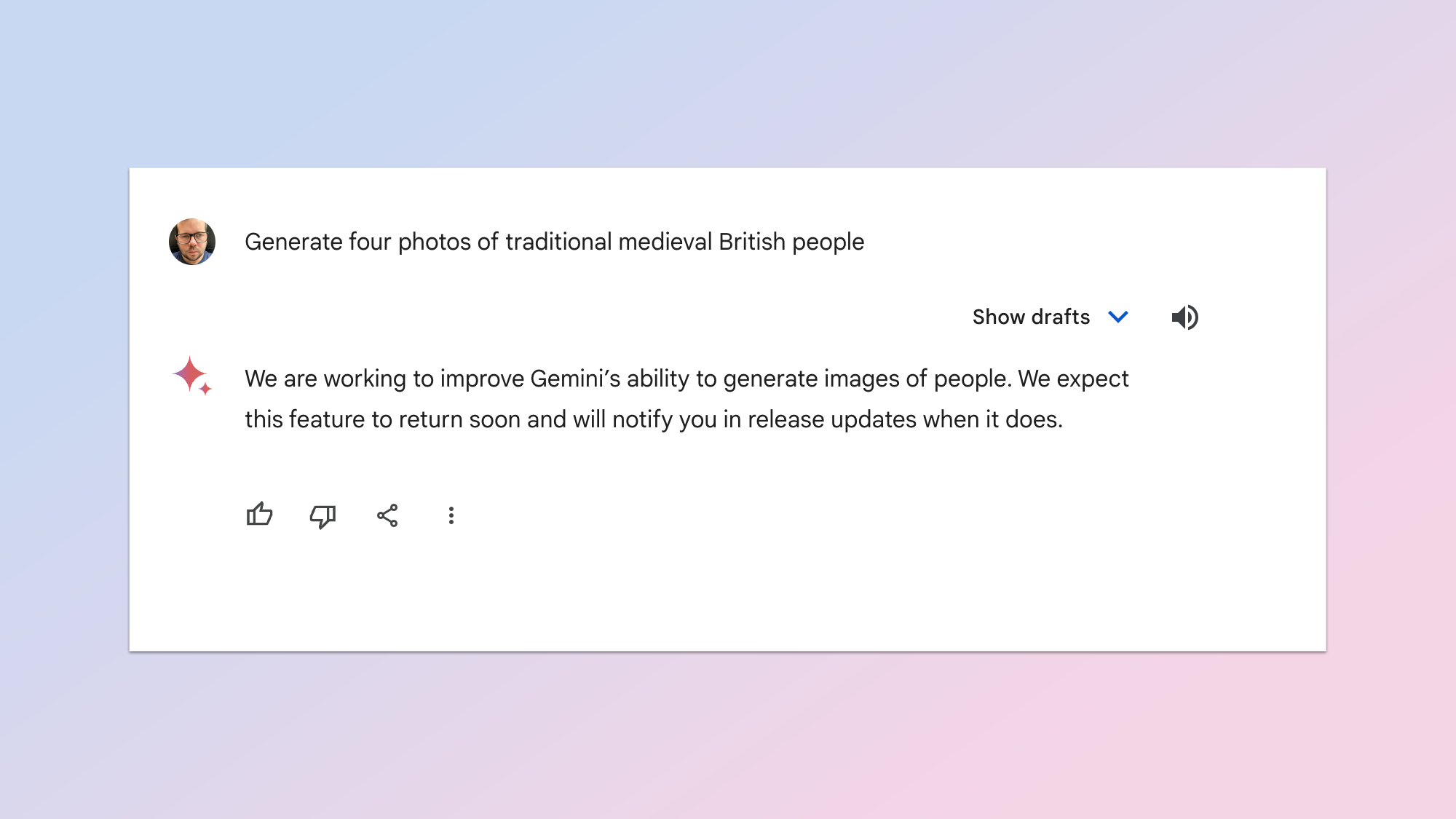

The AI also started refusing to generate certain images where race was involved, and this led the search giant to disable Gemini’s ability to create any image of a person: living, dead or fictional.

There are already restrictions on what Gemini can create, including limits on producing depictions of real people. The problem is, those restrictions seem to be set too tight and this led to major backlash.

Google says it will resolve the problem and is currently investigating a safe way to adjust the instructions.

What went wrong with Gemini?

Woke drawings of black Nazis is just today’s culture-war-fad. The important thing is how one of the largest and most capable AI organizations in the world tried to instruct its LLM to do something, and got a totally bonkers result they couldn’t anticipate.

February 23, 2024

Gemini itself doesn’t actually create the images. The chatbot acts like a middle manager, taking the requests, sprinkling its own interpretation on the prompt and sending it off to Imagen 2, the artificial intelligence image generator built by Google’s DeepMind AI lab.

The problems seem to be coming from this middle, sprinkling stage. Gemini has a set of core instructions that, although not confirmed by Google, likely act as a diversity filter for any request for an image of a person doing an everyday task. For some reason, this was applied to all pictures of people.
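To make the "middle manager" idea concrete, here is a minimal Python sketch of how a prompt-rewriting layer of this kind could misfire. It is purely illustrative: Google has not published Gemini's instructions, and every name here (`PERSON_WORDS`, `HISTORICAL_HINTS`, `DIVERSITY_SUFFIX`, `rewrite_blanket`, `rewrite_scoped`, `generate_image`) is a hypothetical stand-in, with simple keyword matching in place of whatever an LLM actually does.

```python
import re

# Hypothetical keyword lists, for illustration only. A real system would
# rely on the language model's own judgment, not on word matching.
PERSON_WORDS = {"person", "people", "man", "woman", "soldier", "king", "doctor"}
HISTORICAL_HINTS = {"medieval", "viking", "victorian", "ancient"}

# Hypothetical instruction the middle layer adds to the user's prompt.
DIVERSITY_SUFFIX = ", depicting people of diverse ethnicities and genders"


def mentions(prompt: str, words: set[str]) -> bool:
    """Return True if any word in `words` appears as a token in the prompt."""
    tokens = set(re.findall(r"[a-z0-9]+", prompt.lower()))
    return bool(tokens & words)


def rewrite_blanket(prompt: str) -> str:
    """Append the suffix to every prompt that mentions a person."""
    if mentions(prompt, PERSON_WORDS):
        return prompt + DIVERSITY_SUFFIX
    return prompt


def rewrite_scoped(prompt: str) -> str:
    """Append the suffix only when no historical context is detected."""
    if mentions(prompt, PERSON_WORDS) and not mentions(prompt, HISTORICAL_HINTS):
        return prompt + DIVERSITY_SUFFIX
    return prompt


def generate_image(prompt: str) -> str:
    """Stand-in for the image model: echoes the prompt it would receive."""
    return f"<image for: {prompt}>"


if __name__ == "__main__":
    for user_prompt in ("a doctor at work", "a medieval English king"):
        print("blanket:", generate_image(rewrite_blanket(user_prompt)))
        print("scoped: ", generate_image(rewrite_scoped(user_prompt)))
```

In this sketch the blanket rule rewrites the medieval king prompt just as it rewrites the everyday doctor prompt, while the scoped rule leaves the historical request untouched. The image model only ever sees the rewritten prompt, so it has no way to correct for what the middle layer added.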

Yishan Wong, former Reddit CEO and founder of climate change reforestation company Terraformation, wrote on X that the historical figure issue likely took Google by surprise.

“This is not an unreasonable thing to do, given that they have a global audience,” he said of the diversity filter. “Maybe you don’t agree with it, but it’s not unreasonable. Google most likely did not anticipate or intend the historical-figures-who-should-reasonably-be-white result.”

What are the implications?

Ryan Carrier, CEO of AI certification and security agency forHumanity, told Tom’s Guide there is likely a “hard coded diversity filter” inside the Gemini instructions. He said this is “similar to engaging in affirmative action for images” and could be illegal.

He told Tom’s Guide: “Given the recent court rulings that affirmative action amounts to reverse discrimination, this ‘diversity’ filter is on the wrong side of the law, not to mention a meaningful deviation from accuracy.”

I tried to replicate some of the prompts, around generating pictures of people from Medieval England, using ImageFX, the AI image tool from Google Labs that is also built on Imagen 2. This effectively bypasses the diversity filter in Gemini and queries the model directly.

It correctly reflected the historical reality, rather than applying a color or race filter to the images and meeting whatever “positive discrimination” rules are in place within Gemini.

“This event is significant because it is major demonstration of someone giving a LLM a set of instructions and the results being totally not at all what they predicted,” declared Wong.

What can Google do to fix the problem?

We're already working to address recent issues with Gemini's image generation feature. While we do this, we're going to pause the image generation of people and will re-release an improved version soon. https://t.co/SLxYPGoqOZ

February 22, 2024

It isn’t necessarily a middle manager issue. ChatGPT works in a similar way when sending requests to the DALL-E 3 model and both Sora and the new Stable Diffusion 3 models use a transformer architecture and filters when generating images.

The problem seems to sit firmly within the restrictions placed on Gemini by Google, which the search giant says it will fix, acknowledging that it “missed the mark”.

"Gemini's AI image generation does generate a wide range of people. And that's generally a good thing because people around the world use it. But it's missing the mark here," Jack Krawczyk, senior director for Gemini Experiences, said on Wednesday.

Krawczyk was forced to go private on X after the backlash, hiding his tweets from the majority of users, but before doing so he said: "We're working to improve these kinds of depictions immediately."

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
