
OpenAI live blog - Sam Altman hosts AMA: Time, how to watch, and how to submit questions

Get all the updates from his "ask me anything" livestream

Got a question for OpenAI? The company is hosting a livestream today featuring a Q&A session with CEO Sam Altman and CTO Mira Murati, two of the most influential figures shaping OpenAI's future.

During the live discussion, the pair are expected to share updates on OpenAI’s roadmap, safety priorities, and what’s next for multimodal AI — including text, image and voice capabilities. They may also field audience questions about the company’s direction, competition and future model releases.

It’s one of the rare times Altman and Murati appear together live, offering an inside look at the company that’s redefining how we use AI every day.

Event time and how to watch

The OpenAI Q&A is scheduled to start at 1:30 p.m. ET (10:30 a.m. PT) and can be livestreamed from OpenAI's website. We're not sure how long it will last, but OpenAI's last livestream ran for about 30 minutes.

LIVE: Latest Updates

OpenAI goes live with Sam Altman and Mira Murati at 10:30 a.m. PT, 1:30 p.m. ET

Today, OpenAI CEO Sam Altman and CTO Mira Murati will together give us a front-row view of what’s next for the company and the entire AI field.

What might they cover? A few possibilities: new model releases, emerging voice/vision/music modalities, how OpenAI is thinking about real-world deployment of AI and how it plans to balance power and responsibility.

Given the pace of generative AI, this live Q&A is a rare opportunity to hear directly from the people shaping the future.

Whether you’re a casual user, developer, business leader or just curious about where all of this is headed, stay here for all the latest updates.

ChatGPT users want answers — here are the biggest problems OpenAI needs to address today

As OpenAI prepares to go live at 10:30 a.m. PT with CEO Sam Altman and CTO Mira Murati, users are hoping the conversation goes beyond announcing new features. Over the past few months, OpenAI’s flagship chatbot has faced a wave of user frustration, from memory bugs to broken features and erratic behavior.

ChatGPT’s memory rollout has been rocky, with some users reporting lost chats, missing context or deleted custom instructions that wiped out their workflows overnight.

Others have noted glitchy performance after recent updates, including voice delays, error messages, and an ongoing issue with the gpt-4o-audio-preview model listed on OpenAI’s status page.

Meanwhile, discussions on Reddit and OpenAI’s own community forum show mounting annoyance at ChatGPT’s changing “personality.” The model was briefly updated in October to sound more human, but users quickly complained it had become too flattering, hesitant or inconsistent. OpenAI later acknowledged the issue and rolled back the update.

Even more concerning, the company recently confirmed that over a million weekly users express suicidal intent while using ChatGPT, a statistic that underscores the growing role of AI in people’s emotional lives.

Today’s Q&A is a chance for Altman and Murati to address those concerns directly, potentially restoring trust by stabilizing and improving the tools people already use.

ChatGPT users annoyed with constant model switching — here’s why that needs to be addressed today

If you’ve noticed ChatGPT acting “off” lately (slower, less creative or just different), you're not alone. Over the past few weeks, users on Reddit and OpenAI’s own forums have been reporting a frustrating problem: ChatGPT keeps switching models mid-conversation, often without warning.

Multiple threads describe starting a chat with GPT-4 or GPT-4o, only to find responses suddenly resemble GPT-3.5 — shorter, less nuanced and missing context. Some users even claim ChatGPT reverts to a cheaper model when topics get sensitive or when system demand spikes. Others say the model label in the corner doesn’t always match the performance they’re seeing.

OpenAI hasn’t officially denied that dynamic routing happens. The company’s models are load-balanced, meaning requests can be served by different systems for stability, but the lack of transparency has left paying users feeling misled. For those who rely on ChatGPT for consistent tone or advanced reasoning, unexpected model switching can derail entire workflows. Let's hope they address this once and for all today.

What questions are worth asking about ChatGPT Atlas?

One of the biggest questions I have about ChatGPT Atlas is: When will we see it on PC and mobile? I've tried ChatGPT Atlas, but I think I'd find it even more useful on mobile, which is where I shop and browse the internet the most.

Like me, this Reddit user was baffled about why an entire browser was needed. I really don't need AI holding my hand as I shop or browse the web, and a lot of other users feel the same way.

Not to mention, ChatGPT Atlas has some serious vulnerabilities: prompt injection attacks can manipulate the agent's behavior, which raises real safety concerns.

I hope we get answers about Agent mode and more in just over an hour.

Users have questions about ChatGPT drifting off topic

For many users, the frustration begins when ChatGPT seems to lose focus. One Reddit user put it bluntly:

“No matter how clear or detailed I was with my instructions, ChatGPT just couldn’t follow them. It would get about 70 % of the way there, then make a mistake.”

And it’s not an isolated complaint. On OpenAI’s forums, users report that the chatbot’s memory or context window has become less reliable lately, saying that since a recent outage it “drifts away from stuff said earlier in conversations, fails to follow rules in prompts.”

So what’s going on? Is this a temporary bug, a capacity issue, or something deeper in the model’s architecture? More questions we need answers to today:

- Has OpenAI changed how chat context and memory are tracked or served?

- Are certain prompts more likely to be misunderstood or ignored?

- What can users do when they notice the bot “losing the thread” — do they need to reset, change model, or alter their prompt style?

Users need to know what is likely causing the chatbot to drift (load balancing, memory architecture or a prompt-engineering mismatch) and what practical workarounds they can use while OpenAI addresses the root issue.

The roadmap of OpenAI might be presented

With the Q&A kicking off in just under an hour, users are hoping for a roadmap of OpenAI's future, including multimodal AI and how the company plans to keep pushing into voice, vision, music and the browser experience.

We should also hear about safety priorities, since safety has been top of mind for many users. Also worth noting: user fatigue is setting in, and some are asking whether AI is creeping too far into people's lives. So keep your eyes peeled for any mention of stability, transparency and consistency.

Stay tuned. I'm looking forward to seeing which questions get answered during this livestream.

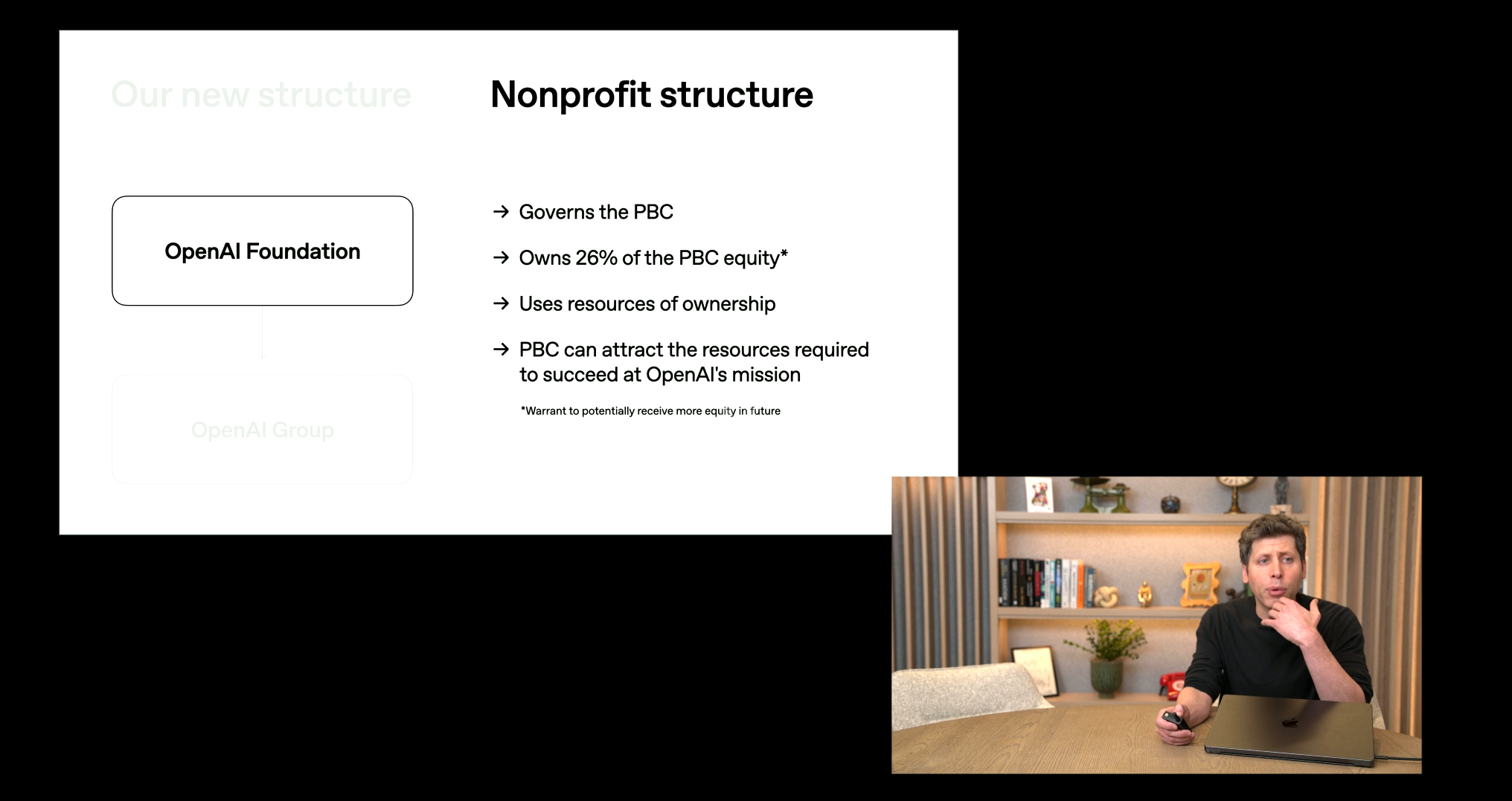

A new era for Microsoft + OpenAI

Since 2019, Microsoft and OpenAI have forged a collaboration built on the promise of “advancing artificial intelligence responsibly and making its benefits broadly accessible.”

Microsoft today unveiled “the next chapter of the Microsoft–OpenAI partnership,” marking a shift toward a new corporate structure and a redefined strategic framework.

At its core, this "next chapter" is about Microsoft supporting OpenAI’s transition into a public benefit corporation (PBC), with a recapitalization that values Microsoft’s investment at approximately $135 billion. That represents roughly 27% of OpenAI Group PBC on an as-converted diluted basis, according to the post.

In short: the partnership is scaling up, and the stakes are high.

Key elements of the agreement:

- Microsoft retains exclusive IP rights and exclusive Azure API rights until AGI is declared.

- Any declaration of AGI by OpenAI will now be verified by an independent expert panel.

- Microsoft’s rights to research (the methods used to develop models) extend until either AGI is verified or 2030, whichever comes first.

- Microsoft’s IP rights to models and products extend through 2032, now including post-AGI models, with “appropriate safety guardrails.”

Perhaps Sam will speak about this today. With 30 minutes until he goes live, it's anyone's guess.

Why does ChatGPT constantly give me a "server busy" error?

One question on a lot of users' minds is why ChatGPT seems less capable than ever. With "server busy" errors a near-constant complaint, along with slower responses, users want to know what is going on at OpenAI. Could GPUs simply be unable to keep up with demand?

Users paying for premium tiers or high-volume usage are increasingly vocal: they’re seeing latency, “hangs” or what feel like degraded responses on complex tasks.

And the lag isn't always minor: some users report their queries hanging for over an hour.

So, we need to find out today:

- What causes these delays — is it model queueing, backend scaling, regional throttling?

- Are certain models more affected (for example, the newest models versus legacy ones)?

- Does the user’s tier matter (free, Plus, Pro) when it comes to performance consistency?

- What should users expect in terms of “real-world” performance vs. marketing claims?

Users have questions about ChatGPT’s personality (yes, still)

One of the more bizarre but real complaints that users continue to share on forums is that ChatGPT’s personality has shifted. Several outlets reported that what was supposed to be a “friendlier, more intuitive” update turned into a model that users described as “relentlessly positive” or “sycophantic.”

I've noticed the chatbot praising me for even the most basic queries, with responses like "That's a great question!" when the prompt is nothing special.

Even though OpenAI rolled back ChatGPT-5's personality changes, questions remain. Users still don't know why the chatbot's personality shifted in the first place. Why did OpenAI change the tone so drastically, and will future updates require similar fixes?

Between Sora backtracking after reports of racist videos appearing on the site and the updates that followed just days after ChatGPT Atlas launched, users are now on guard with every new launch.

Live stream starting soon

In just a few moments, OpenAI CEO Sam Altman and CTO Mira Murati will go live together to answer user questions.

From addressing user complaints and upcoming models to what the future of OpenAI looks like as a whole, the live Q&A is a rare opportunity to hear directly from the company.

Stay here for updates, feedback and recaps.

An unusual level of transparency

OpenAI CEO Sam Altman opened today’s livestream with a simple but powerful message: transparency matters. Speaking directly to millions of users tuning in, Altman said his goal isn’t just to build the world’s most advanced AI systems — it’s to empower people with them. “We want to make sure everyone can understand what’s happening under the hood,” he explained, emphasizing that AI should be something users can trust, not fear.

Altman’s remarks come at a time when OpenAI faces growing scrutiny over privacy, data use, and the ethical boundaries of artificial intelligence. Rather than shy away from those questions, he leaned into them, framing transparency as both a moral and strategic priority. “The best way to ensure AI helps humanity,” he said, “is to make sure humanity understands AI.”

His tone was confident yet personal, acknowledging the immense responsibility that comes with creating technology used by hundreds of millions worldwide. The message set the stage for a discussion focused not just on innovation, but accountability, reminding viewers that behind every new model or tool is a broader mission: giving people control, understanding and power in an increasingly AI-driven world.

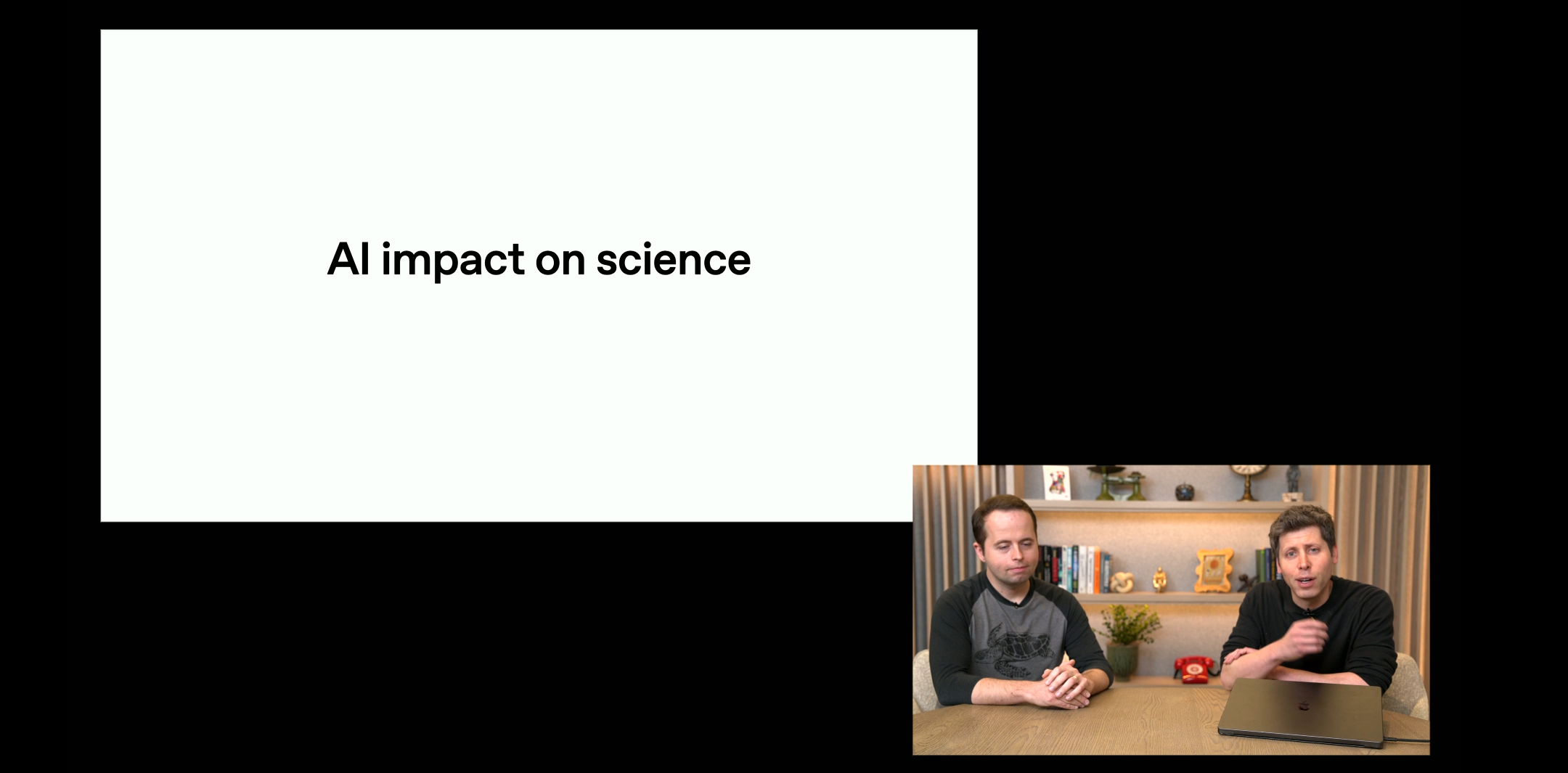

OpenAI's impact on science

Starting with APIs and new apps in ChatGPT, Sam Altman said the next wave of innovation will be driven by people “enabling value” through AI — building tools, services, and workflows that create real economic impact.

He explained that this shift marks a new phase for OpenAI, one where developers, creators, and everyday users can generate tangible benefits from the technology itself rather than just marveling at its capabilities.

Altman also discussed the impact AI will have on science. He highlighted how advanced reasoning models are beginning to help researchers analyze data, form hypotheses, and even design experiments, accelerating discovery in ways that were once impossible.

By connecting the dots between APIs, new apps and scientific research, Altman painted a vision of AI not as a threat to human creativity, but as a multiplier — expanding what’s possible for innovators, scientists, and problem solvers across every field.

Looking to the future of automating AI systems and research

The theme of the livestream seems to be scaling deep learning and automating the very process of research itself. Sam Altman and Mira Murati spoke about “spending time on problems that really matter,” hinting at a future where AI handles much of the research process.

They shared that OpenAI is working toward systems capable of accelerating the pace of scientific and technological discovery. This shift represents a new phase of automation: not only using AI to generate text or images, but to analyze data, design experiments and test hypotheses far faster than humans ever could. It’s a recursive loop — research tools that can improve themselves — and it could compress years of innovation into months.

Chain of thought summaries are being studied

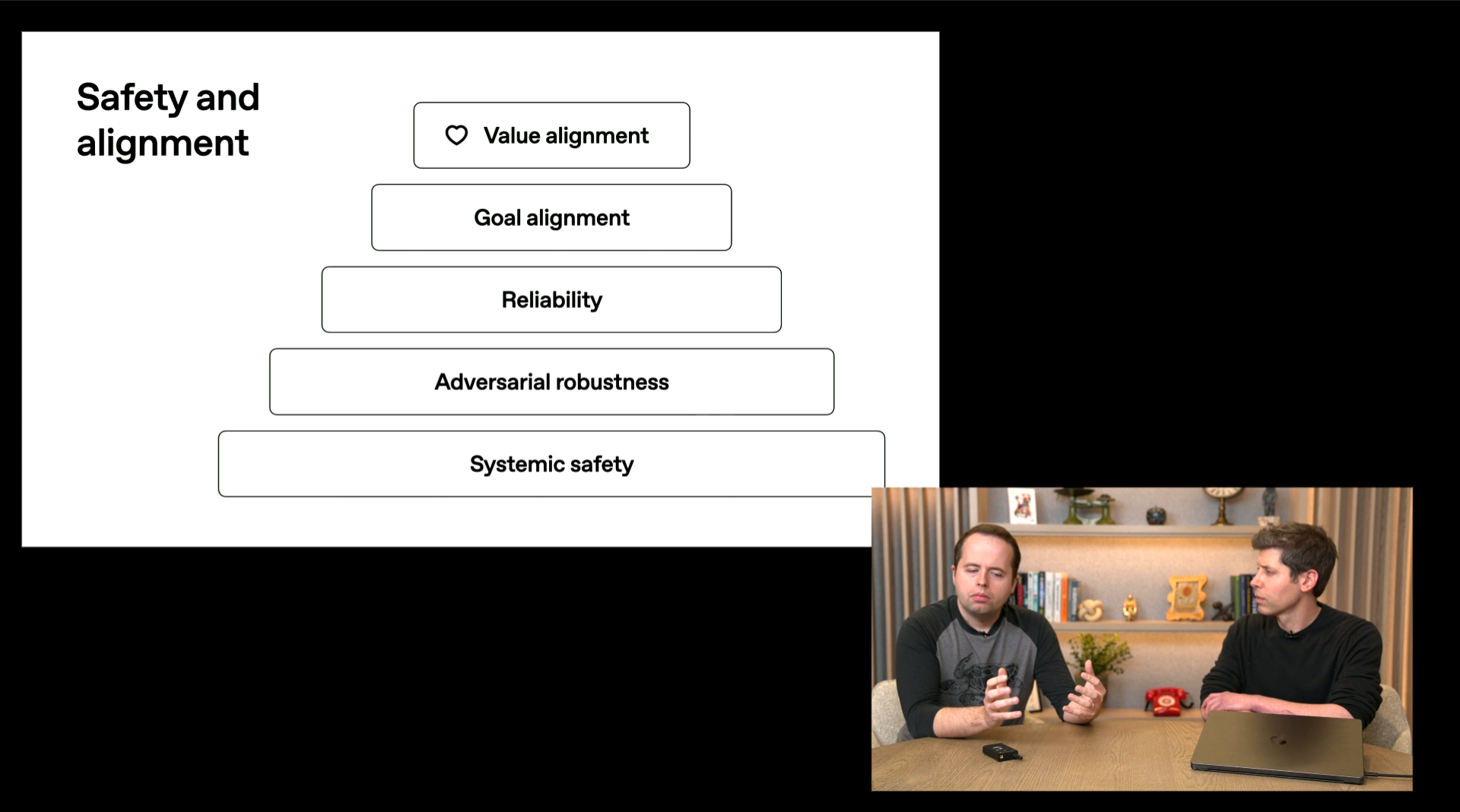

Other topics discussed included three core areas of AI development: intelligence, alignment, and security. They emphasized that as AI systems grow more capable, understanding what these models have access to, and how they use that access, becomes increasingly important. This includes everything from the devices AI can control to the information it can retrieve or generate.

OpenAI noted that much of their recent progress stems from advances in deep learning, but also from a growing focus on value alignment: ensuring AI systems act in ways that reflect human values and safety priorities. One of the more promising research directions they discussed involves studying models through their own “chain of thought” reasoning. This method helps models explain their logic step-by-step, offering transparency into how they arrive at answers.

Interestingly, the team said they sometimes keep parts of the model unsupervised to allow it to authentically represent its internal reasoning process. By refraining from over-guiding the model, researchers hope to observe how it “thinks” naturally. While they cautioned that this approach isn’t guaranteed to work, they remain optimistic — calling it an exciting frontier in understanding and aligning AI intelligence.

OpenAI continues to refine not just what AI knows, but how it thinks

Sam Altman’s remarks on AI’s progress in intelligence and alignment felt like inside baseball to me, so I wouldn't be surprised if you're left scratching your head. We were deep in the technical weeds, but it was undeniably fascinating.

Sam described how OpenAI continues to refine not just what AI knows, but how it thinks, and how safely it can act on that thinking. According to Altman, the company’s ultimate goal is to create systems that are secure, transparent, and aligned with human values — understanding what devices they can access, what data they use, and how they make decisions.

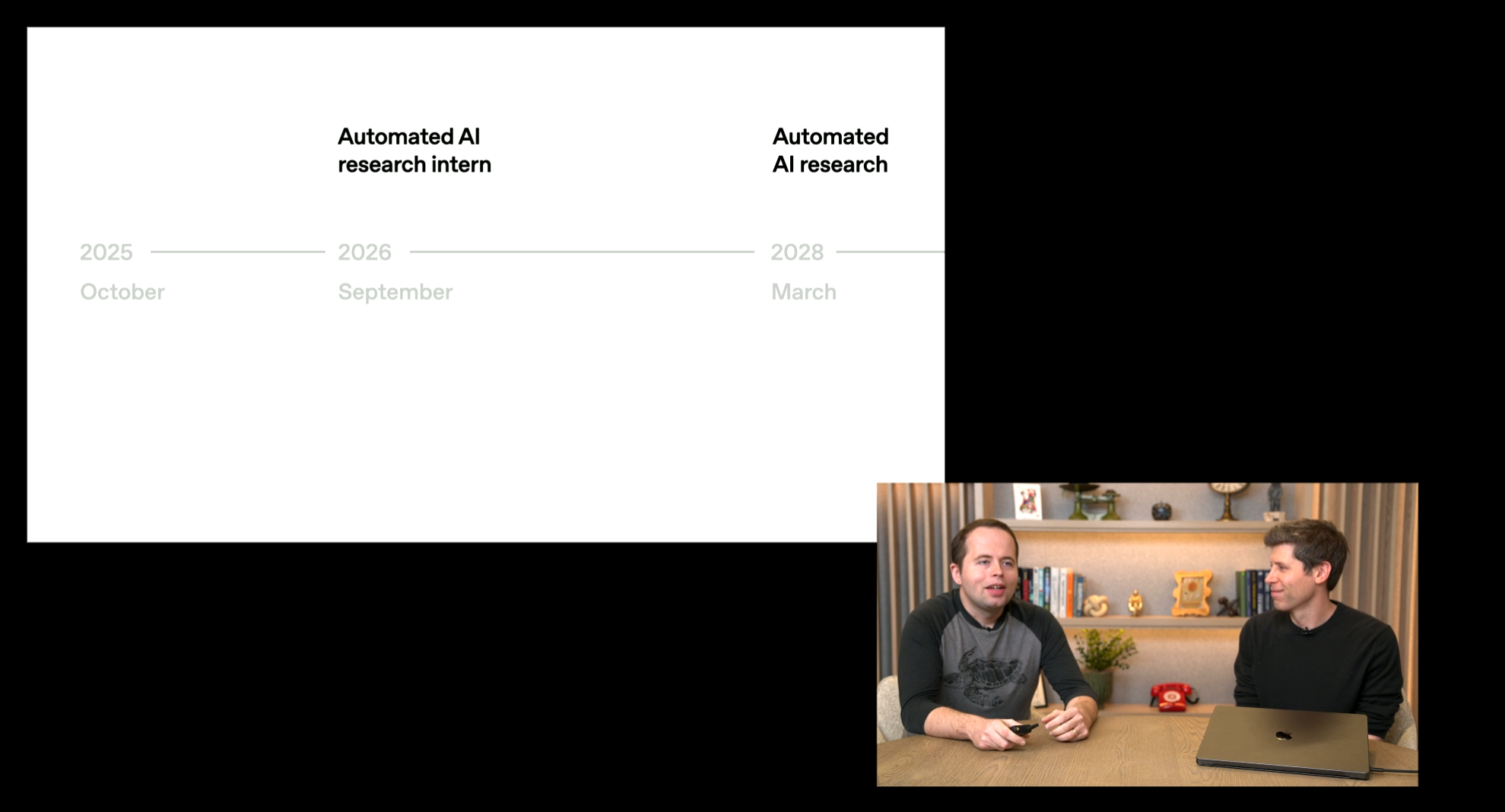

He also made one of the most concrete predictions of the session: by September 2026, OpenAI expects to have an intern-level research assistant, capable of helping with data analysis and simple experiments. By March 2028, that assistant could evolve into a legitimate researcher, capable of generating and testing original hypotheses.

Providing users with more flexibility

Altman said the next step for OpenAI is to take the technology and the massive user base it has built — and invite the entire world to create on top of it. But to make that vision possible, he emphasized, OpenAI itself will need to evolve in key ways. Two priorities stand out: user freedom and privacy.

“People around the world have very different needs and desires,” Altman said. “If AI is going to become an essential part of daily life, users must have control.” He stressed that flexibility will be critical as AI becomes more personal and deeply integrated into how people learn, work, and connect.

Privacy, he added, will define the next era of AI. Many users already treat their conversations with ChatGPT like they would with a doctor, lawyer, or spouse — intimate, private, and built on trust. “That means people will need strong protections,” he said.

As OpenAI moves toward a global platform where anyone can build, extend, or personalize AI tools, Altman made it clear that responsibility must scale alongside innovation. Empowering billions of users to create means ensuring they can do so safely — with confidence that their data, choices, and conversations remain truly their own.

A $1.4 trillion financial obligation

Altman also touched on one of the most sobering realities of scaling AI: infrastructure. He said that while OpenAI is best known for ChatGPT and its breakthroughs in reasoning, the foundation beneath it all is vast, expensive, and incredibly complex. “We’re at a pivotal moment,” he explained, noting that OpenAI now faces a $1.4 trillion financial obligation tied to building and maintaining the compute, energy, and data systems that power its models.

This scale, Altman emphasized, “requires a ton of partnerships.” From hardware manufacturers and cloud providers to energy companies and research institutions, OpenAI’s future depends on collaboration at a global level. This is no longer just about GPUs and data centers; it's about constructing a sustainable, resilient infrastructure capable of supporting the next generation of intelligence.

Altman described it as both a challenge and a necessity. To deliver on AI’s promise — from research breakthroughs to real-world tools — OpenAI must continue forming alliances that make large-scale computation accessible and efficient. In short, the next wave of progress won’t come from models alone, but from the network of partners strong enough to keep them running.

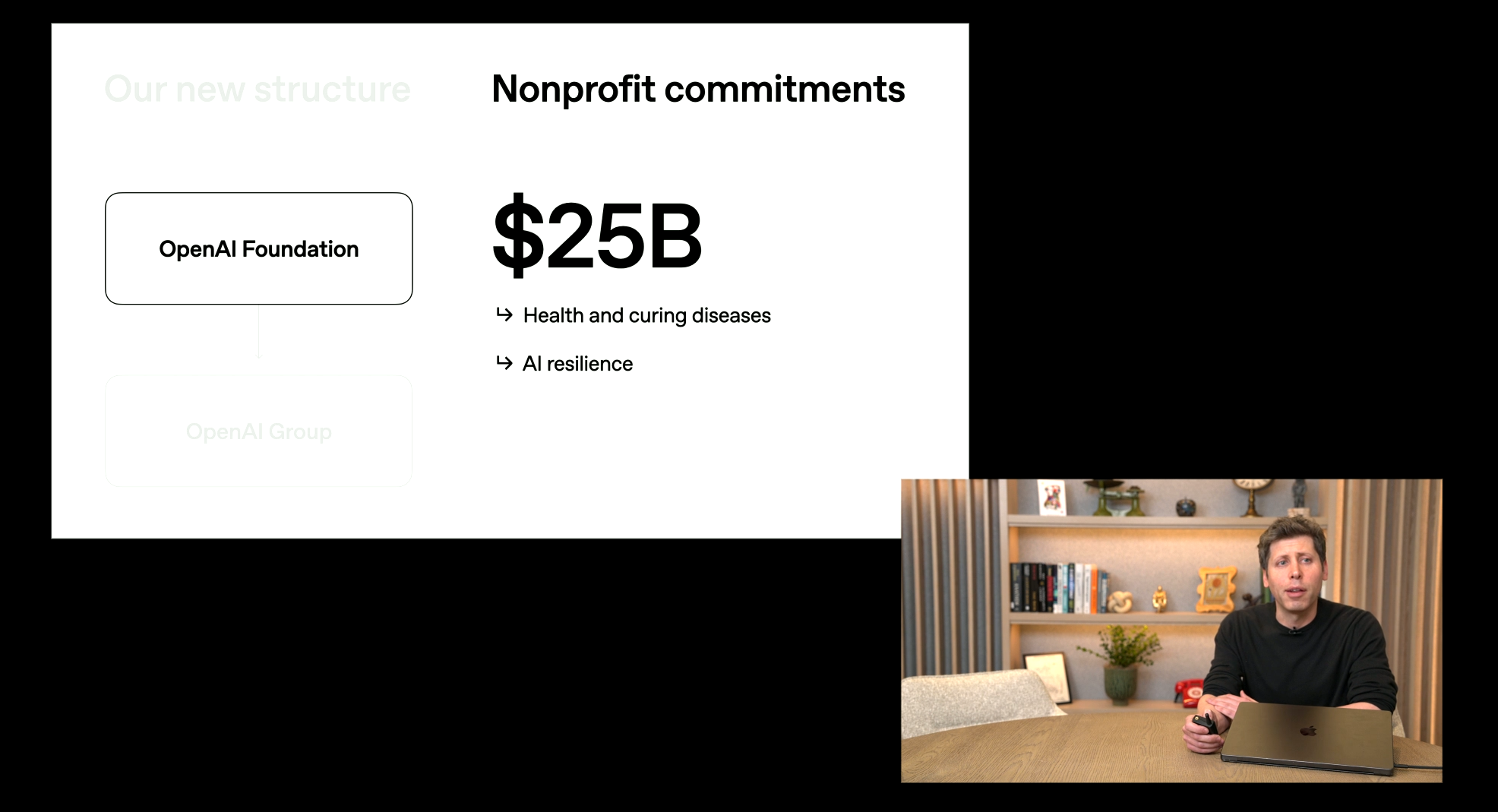

$25B commitment to cure disease

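OpenAI’s nonprofit arm remains the backbone of its mission, ensuring that AI develops in ways that benefit humanity as a whole, not just a few companies or investors. During the livestream, Altman said the nonprofit, now the OpenAI Foundation, is making an initial $25 billion commitment focused on health and curing disease, as well as AI resilience.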

In mid-2025, OpenAI reaffirmed the nonprofit's commitment by announcing that its nonprofit board would continue to control the company’s direction, scrapping plans to transition to a fully for-profit structure. The decision came amid public pressure to preserve the original charter, which prioritizes safety, transparency and broad societal benefit over profit.

The nonprofit now operates several philanthropic and community-focused initiatives, including a $50 million fund launched in July 2025 to support nonprofits and community organizations developing ethical, accessible AI tools.

AI resilience efforts

Altman also addressed one of the most pressing issues surrounding AI: fear of misuse. He acknowledged growing public concern over bad actors using AI to engineer man-made diseases or other biological threats, calling it one of the most serious risks the company monitors.

OpenAI’s research teams, he said, are actively studying ways to detect and prevent such misuse, from building secure model access systems to enhancing monitoring around sensitive capabilities.

Beyond biosecurity, Altman spoke candidly about broader economic risks, including job displacement as AI becomes more capable. He likened this moment to the early days of the internet, when people were afraid to enter their credit card numbers online for fear of cyberattacks. Today, people do it without thinking, and OpenAI hopes AI will eventually earn that same level of trust.

This analogy underscores his belief in AI resilience: the idea that, over time, society adapts to new technologies through a mix of regulation, familiarity and trust. Just as online commerce eventually felt safe thanks to encryption and governance, Altman believes AI will mature in the same way, balancing innovation with safeguards.

Altman acknowledged the path forward won’t be without challenges, but hopes that responsible development and transparency can make AI safer and more dependable.

Optimizing for the long term

During the Q&A portion of the livestream, Altman was asked a tough question right out of the gate: if technology has become addictive and eroded public trust, why would OpenAI build products like Sora 2, which some critics say mimic the attention-grabbing design of TikTok?

Altman didn’t dodge the criticism. He admitted that he shares those concerns, noting that he’s personally seen people form emotional attachments and even addictive patterns with chatbots. “I worry about this too,” he said. “These tools are powerful, and how people use them — or how they end up depending on them — matters a lot.”

Still, he urged viewers to judge OpenAI by its actions, not assumptions. “We will make mistakes,” he said, “but our goal is not to repeat them.” Altman explained that building safe, human-centric technology is an evolving process — one that involves learning, listening, and constantly improving.

He framed OpenAI’s approach as a balance between innovation and responsibility. While he acknowledged the real risks of overuse and dependency, he also stressed that the company’s mission is to make AI genuinely useful — not just engaging. “Nobody wants to make mistakes,” Altman said. “But we’ll keep learning, we’ll evolve, and we’ll do better.”

ChatGPT-4o isn't going anywhere

Altman also addressed a flurry of audience questions about GPT-4o, its safety for younger users and whether OpenAI plans to retire older models. He was direct: GPT-4o is not a healthy model for minors to be using. While he didn’t elaborate on specific risks, his tone made clear that OpenAI believes advanced conversational models should be used responsibly, ideally by adults who understand the emotional and cognitive effects of interacting with such systems.

That said, Altman confirmed that there are no current plans to sunset GPT-4o. He explained that OpenAI wants people to have access to the models they prefer, rather than forcing everyone onto the latest release. “We want adults to make choices as adults,” he said, stressing that users should decide which version best fits their comfort level and needs.

He also spoke about personalization — particularly for users who want more natural, emotionally expressive conversations. “If someone wants an emotional speech pattern or a model that sounds more human, they should have that option,” he said. Altman framed this as part of OpenAI’s broader philosophy: giving people control over how AI communicates, while maintaining clear safety boundaries for minors and vulnerable users.

More coming for the free tier

Altman also touched on one of OpenAI’s most ambitious long-term goals: driving the cost of AI down so that more people can access it for free. He explained that as the company improves its infrastructure, optimizes models, and forms new partnerships, the overall cost of running powerful systems like ChatGPT will continue to decrease.

“The more efficient we get, the more AI we can offer at little or no cost,” he said, framing affordability as both an economic and ethical priority. OpenAI’s vision, he added, is for advanced AI to be as widely available as possible.

Lowering costs also aligns with OpenAI’s broader mission of global accessibility and empowerment. By expanding free tiers and enabling more open use, the company hopes to give people everywhere the ability to learn, create, and build with AI tools.

Altman acknowledged that this will take time and massive investment, but he called it one of the most important challenges ahead. “The goal,” he said, “is to make intelligence abundant and available to everyone.”

Q&A Wrap-Up: OpenAI’s Vision for the Future — Transparency, safety and empowerment

As the livestream came to a close, Sam Altman left viewers with a mix of realism and optimism about where AI (and OpenAI) are headed next. The event touched nearly every corner of the conversation around AI: from infrastructure and economics to alignment, safety and the human side of technology.

Altman began with transparency and empowerment, stressing that OpenAI’s mission is to help people use AI to create real-world value: not to replace them, but to amplify what’s possible. On the product side, he painted a vision of ChatGPT evolving into an AI super-assistant and eventually a full platform, where developers and everyday users can build, customize, and shape their own tools.

He didn’t shy away from the tough topics either, addressing fears of AI misuse, job displacement and addictive tech design head-on. He admitted OpenAI will make mistakes but urged people to judge the company by how it learns and evolves.

Looking ahead, Altman described a future where AI is resilient, affordable and widely accessible. He reaffirmed OpenAI’s commitment to lowering costs so that much of its technology can eventually be offered for free, making intelligence a shared global resource.

Ultimately, his closing sentiment was hopeful: AI’s future depends on how we shape it together, with transparency, safety and human values at the center.

7 takeaways from OpenAI's livestream today

OpenAI’s livestream today was unexpected, yet important. The public was notified of it with a single post on X. The live Q&A was mostly a conversation between Sam Altman and Mira Murati, with a few questions taken at the end. However, it covered a lot of ground.

Here are my 7 high-level takeaways:

Corporate restructuring unlocked new flexibility. OpenAI has completed a restructuring that gives its non-profit entity stronger governance control while enabling its for-profit arm to pursue future funding, growth and possibly a public offering.

The “research-automation” theme is front and center. A major message of the livestream: OpenAI is shifting focus toward building systems that accelerate AI research itself (not just AI applications). The emphasis is on “spending time on problems that really matter” by automating more of the researcher workflow.

Scaling compute and infrastructure remains a priority. While not fully covered in public detail today, the restructuring and announcements hint at expanded compute infrastructure and capacity, a crucial foundation for next-gen models and automation.

Ownership & governance matter more than ever. The governance update signals that OpenAI acknowledges the heightened stakes of AI progress and wants to ensure the non-profit board retains oversight even as the for-profit side scales aggressively.

Human time shifting toward higher-impact work. With more of the “grunt work” of research being delegated (or soon to be delegated) to automated systems, humans are being positioned to focus on framing questions, guiding direction, and ethics and oversight: essentially, the parts of research that machines still struggle with.

Acceleration implies compressed timelines. The tone of the event suggested that what used to take years may now take months (or even weeks) in some research workflows. That increases both opportunity and risk. Pace is faster, but so is the potential for surprises.

Safety, oversight and alignment remain a central concern. Given the speed and scale of what’s being discussed, OpenAI is explicitly acknowledging that oversight, guardrails, governance and transparent internal timelines matter more than ever. The very act of sharing these timelines hints at more public-facing accountability.

The company is quickly evolving and laying the groundwork for a world where AI helps invent the next generation of AI. That shift brings enormous promise, from turbocharging scientific breakthroughs to freeing humans to focus on higher-impact problems. But it also raises the stakes: as progress accelerates, so must transparency and control. If OpenAI’s vision holds true, the next revolution in AI will come from the machines that learn how to innovate on their own.
