AI voice cloning is exploding — Hume’s CEO warns about celebrity impersonations and the urgent need for safeguards

The leaders in voice explain their journey

On a Wednesday afternoon, I’m sitting on a video call listening to Ricky Gervais tell me a joke about voice cloning. Then, Audrey Hepburn follows up with her opinions on artificial intelligence.

Unsurprisingly, neither of them was actually on the call. Instead, it’s Hume’s CEO and chief scientist, Dr Alan Cowen, on the other end. He’s showing off the latest update to his company’s AI voice creation service, EVI 3.

Given just 30 seconds of audio, the tool can create a near-perfect replica of someone’s voice. Beyond tone and accent, the new feature also captures and replicates mannerisms and personality.

The Ricky Gervais clone, joking about voice cloning, has the same dry wit and sarcastic tone as the real comedian. Audrey Hepburn, meanwhile, sounds wistful and intrigued, speaking in the softer British accent of her era.

But it’s not just celebrities. The tool can replicate any voice in the world from a single short audio clip. A tool like this has the potential to change the world, for better and for worse.

Cowen sat down with Tom’s Guide to explain this new tool, his background, and why his team wants to revolutionize the world of AI voice cloning.

Hume and the world of AI voice generation

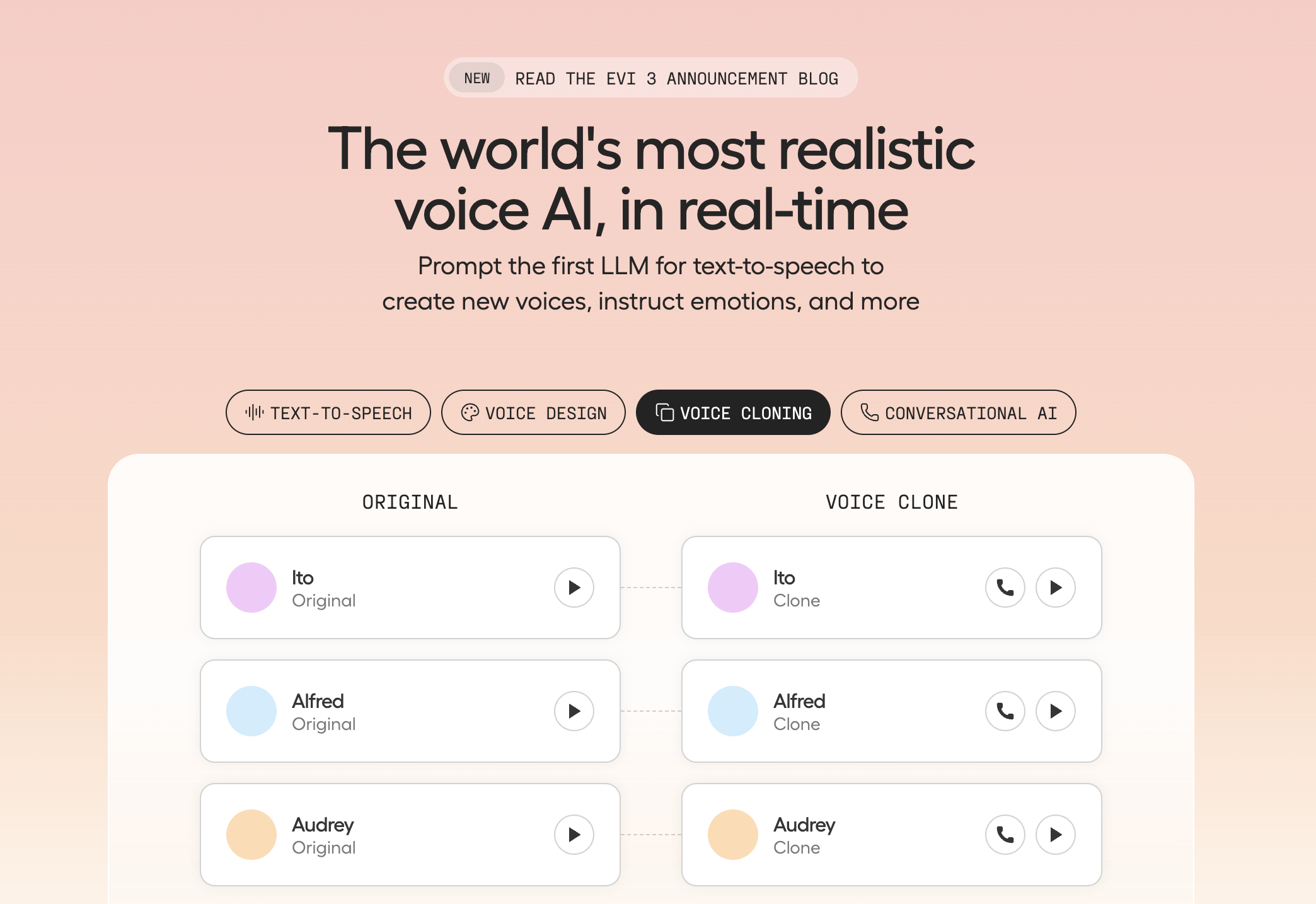

Hume operates in an area of AI that, oddly, doesn’t get as much attention. The company makes voice generation software and claims to offer ‘the world’s most realistic voice AI’.

It has come a long way over the years, now offering text-to-speech with a range of preset voices, as well as the ability to design a voice from a description. Now, with this latest update, the company can also clone any and all voices.

“I think this is the fastest evolving part of the AI space. There are competitors from OpenAI and Google, but what we’ve done with EVI 3 is take the technology to the next step,” Cowen explained on the call.

“Previous models have relied on mimicking specific people. Then you need loads of data to fine-tune for each person. This model instead replicates exactly what a person sounds like, including their emotions and personality.”

This is achieved using Hume’s large backlog of voice data and reinforcement learning, so the model doesn’t have to be fine-tuned to mimic specific people. Give it a 30-second clip, and it can recreate that voice from scratch, learning your specific inflections, accent and personality while drawing on the wider voice data to fill in the gaps.

Of course, a model like this works best when given a good sample. A muffled clip of you talking in a monotone won’t capture much of your personality. The feature also currently works only in English and Spanish, with more languages planned.
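If you plan to try a tool like this on your own recordings, the 30-second figure is easy to check before uploading. The Python sketch below is only an illustration: it assumes an uncompressed (PCM) WAV file, uses the standard-library `wave` module, and treats 30 seconds as a hypothetical minimum taken from this article rather than any published Hume requirement. The function names are made up for the example.

```python
import sys
import wave

# Hypothetical threshold: the article cites 30 seconds of audio;
# this is not an official Hume limit.
MIN_SECONDS = 30.0


def clip_duration_seconds(path: str) -> float:
    """Return the length of an uncompressed (PCM) WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def long_enough_for_cloning(path: str, min_seconds: float = MIN_SECONDS) -> bool:
    """Hypothetical pre-upload check: is the clip at least min_seconds long?"""
    return clip_duration_seconds(path) >= min_seconds


if __name__ == "__main__":
    # Usage: python check_clip.py clip1.wav [clip2.wav ...]
    for clip in sys.argv[1:]:
        seconds = clip_duration_seconds(clip)
        verdict = "OK" if seconds >= MIN_SECONDS else "too short"
        print(f"{clip}: {seconds:.1f}s ({verdict})")
```

Length is only part of the picture, though. As the point about muffled, monotone clips suggests, a recording can clear the threshold and still be a poor sample.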

The ethics of generating real voices

If, like me, your first thought at hearing all of this is concern, then surprisingly you have something in common with Cowen.

“I think this could be very misused. Early on at Hume, we were so concerned about these risks that we decided not to pursue voice cloning. But we’ve changed our mind because there are so many people with legitimate use cases for voice cloning that have approached us,” Cowen explained.

“The legitimate use cases include things like live translation, dubbing, making content more accessible, being able to replicate your own voice for scripts, or even celebrities who want to reach fans.”

While these use cases do exist, there are just as many harmful ones. Sam Altman, CEO of OpenAI, recently warned about the risks of AI voice cloning, including its use in scams and to get past banks’ voice authentication.

Paired with video and image generation, this technology could be the push deepfakes have needed to become truly problematic. Cowen said he was aware of these concerns and that Hume was approaching them as best it could.

“We are releasing a lot of safeguards with this technology. We analyze every conversation, and we’re still improving in this regard. But we can score how likely it is that something is being misused on a variety of dimensions, such as whether somebody is being scammed or impersonated without permission,” Cowen said.

“We can obviously shut off access when people aren’t using it correctly. In our terms, you have to comply with a bunch of ethical guidelines that we introduced alongside the Hume Initiative. These concerns have been on our mind since we started, and as we continue to roll out these technologies, we are improving our safeguarding too.”

Creating guidelines in the world of AI

The Hume Initiative is a project set up by Hume. Its ethos is that modern technology should, above all, serve our emotional well-being. That is somewhat vague, but the Initiative lists six principles for empathetic technologies:

- Technology should be deployed only if its benefits substantially outweigh its costs for individuals and society at large

- Technologies should be built to serve our emotional well-being and avoid treating human emotion as a means to an end

- Claims about the capabilities, costs and benefits of empathetic technologies should be supported by rigorous, inclusive, multidisciplinary and collaborative science

- Members of diverse demographic and cultural groups deserve access to the benefits of empathetic technologies without incurring differential costs

- The people affected by an empathetic technology should have access to the information necessary to make informed decisions about its use

- An empathetic technology should be deployed only with the informed consent of the people whom it affects

These are sensible guidelines, but they are subjective and only useful if they are actually followed. Cowen assured me that Hume stands by these beliefs and that, when it comes to voice cloning, the team is well aware of the risks.

“We are at the forefront of this technology and we try to stay ahead of it. I think that there will be people that don’t respect the guidelines of this kind of tool. I don’t want people to walk away thinking there is no danger here, there is,” Cowen explained.

“People should be concerned about deepfakes on the phone, they should be wary of these types of scams, and it’s something I think we need a cross-industry effort to address.”

Despite being aware of the risks, Cowen explained that he thought this was a technology that they had to build.

“The AI space moves so fast that I don’t doubt that a bad actor in six months will have access to something like this technology. We need to be careful of that,” Cowen said.

Overall thoughts

Cowen spent much of our chat focusing on guidelines and the legitimate concerns around this kind of technology. His background is in psychology, and he strongly believes this kind of technology will do more good than harm to people’s wellbeing.

“People have been really enjoying cloning their voices with our demo. We’ve had thousands of conversations already, which is remarkable. People are using it in a really fun way,” Cowen said, after discussing what he thinks people get wrong about this kind of technology.

He sees it being used for fun, to help build people’s confidence, for training purposes, and for voice acting and dubbing in films.

Of course, as in many other areas of AI, the benefits compete with the risks. Having a generic voice read a script is useful but carries relatively little risk.

Being able to accurately recreate any voice in the world comes with a long list of concerns. For now, Cowen and his team appear to be well ahead in this area and seem committed to the ethical side of the debate, but this kind of technology is still in its early days.

Alex is the AI editor at Tom’s Guide. Dialed into all things artificial intelligence, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

He was highly commended in the Specialist Writer category at the BSME Awards 2023 and was part of the team that won Best Podcast at the BSME Awards 2025.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.
