Can you trust AI Overviews? Recent studies suggest they may not be as accurate as you think

Accuracy is crucial for humans, but AI doesn't seem to think it's important

Here at Tom’s Guide our expert editors are committed to bringing you the best news, reviews and guides to help you stay informed and ahead of the curve!


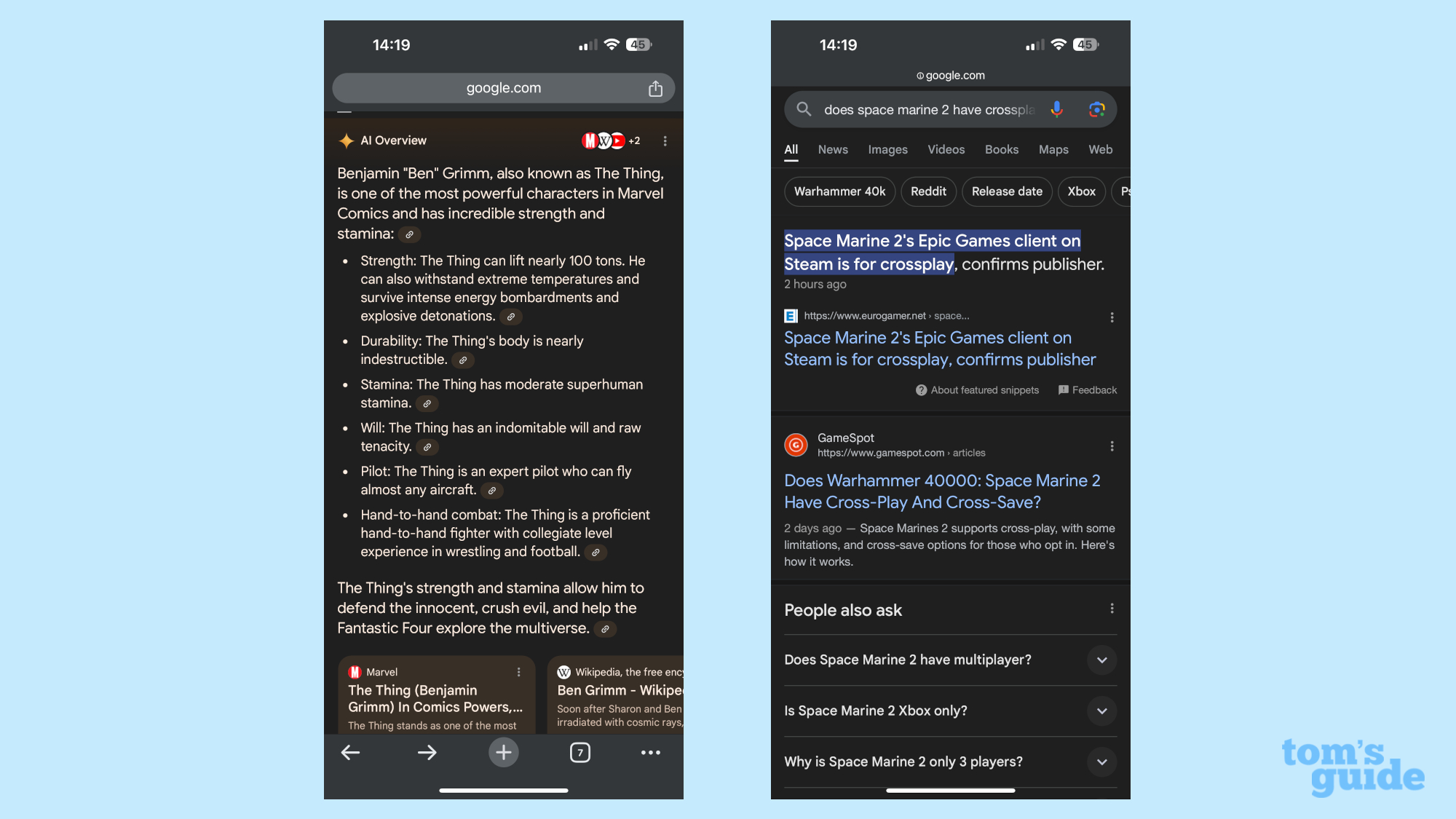

Google’s AI Overviews promise instant answers, but new research shows they’re not always reliable. From overgeneralized claims to outdated sources, here’s why you should think twice before trusting that neat little summary at the top of your search results.

Since rolling out earlier this year, AI Overviews are showing up more and more at the top of search results. Instead of pulling a direct quote from one site, Google uses AI to generate a short summary of what it thinks is the best answer to your question.

For example, it might even pull up this article and summarize it for you. While that may sound convenient, there's a big problem with the accuracy of these overviews. Recent research shows that the AI can exaggerate findings, serve up outdated information and gloss over the most important details. With ChatGPT reportedly wrong 25% of the time, it's worth taking a closer look at how we get information from AI.

What the studies show

AI sounds confident, and that can be a problem. Because the answer is highlighted at the top of the page, it automatically seems accurate, even when it's wrong.

In one peer-reviewed study, researchers tested ChatGPT-style summaries of scientific abstracts. On paper, the results looked good: the summaries were judged 92.5% accurate on average. Seems pretty good, right? But despite that high accuracy score, reviewers noted that key details were often left out. In other words, the AI captured the gist but stripped away the nuance a reader would need to understand the actual findings.

Overgeneralization is another recurring problem. In one study, even when researchers pushed the models to be precise, large language models often overstated conclusions: between 26% and 73% of summaries introduced errors by exaggerating claims. That might not sound catastrophic, but when careful scientific conclusions get turned into bold, oversimplified statements, readers can walk away with the wrong impression.

Google favors consensus — even if it’s wrong

One test revealed an even bigger problem: Google’s AI Overview repeated an outdated answer simply because it was the most common version found online. I have noticed this myself, and I find it extremely frustrating. Even when a newer, correct answer is available, the AI often won't show it if it isn't widely published. Because AI leans heavily on consensus rather than accuracy, popular information gets shown whether or not it's correct.

Scale is another concern. A massive audit of more than 400,000 AI Overviews found that 77% of them cited sources only from the top 10 organic results. That may seem efficient, but it creates an echo chamber. If none of those top-ranked pages are current or accurate, the AI summary won’t be either.

Perhaps the most telling detail is that even Google isn't fully confident in what AI Overviews can do. The company says AI Overviews perform “on par” with its traditional featured snippets, but it still includes a warning: “results may not be accurate.” That should tell you everything you need to know.


Why AI Overviews get it wrong

There are a few recurring reasons these summaries miss the mark. Hallucinations are the most obvious: moments when the AI simply invents a detail to fill a gap. Outdated sources are another culprit. If the most visible articles are old or flawed, the AI doesn’t know any better; it just repeats them.

There’s also the issue of bias toward repetition. AI leans on patterns and consensus, which means popular answers get amplified whether they’re correct or not. And finally, there’s the problem of missing the context entirely. Complicated answers often get sanded down into something short and confident, but that brevity can erase important context.

The takeaway

AI Overviews can still be useful. They’re great for simple questions, quick definitions or when you just need a general idea before digging deeper. I'm not telling you to stop using them. But I encourage you to use them as a starting point, not the whole story.

Just as you wouldn't take shortcuts on everything at work or school, you shouldn't rely entirely on AI Overviews, because they aren't foolproof. Even Google admits they don’t always get it right, which should be reason enough to stay skeptical.


Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a long-distance runner and mom of three. She lives in New Jersey.
