'AI is giving people professional superpowers': Claude's product lead explains the future of AI in exclusive Tom's Guide interview

What does the future look like for Anthropic?

Anthropic, the company behind Claude AI, has had a meteoric rise. Since its inception, it has gone from a little-known AI research company to a global force, competing with the likes of OpenAI and Google.

However, in a world where AI is everywhere (in your smartphone, your fridge and your kid’s toys), Anthropic is surprisingly low-key in its development style. It doesn’t make AI image or video generators, and Claude, its very own chatbot, is about as minimal as they come, lacking most of the bells and whistles seen in its competitors.

On top of that, Anthropic seems to be far more concerned about the future of AI than its competitors. Dario Amodei, the company’s CEO, has been openly critical of AI and its risks, and the company as a whole has spent years highlighting them.

So, how does a company balance development with genuine concern about a growing technology that seems to be taking over the world? I spoke to Scott White, Product Lead at Claude AI, to learn more about what is going on inside Claude.

Safety first

AI is inherently messy. Over the years, we’ve seen chatbots glitch out, adopt bizarre personalities and fall apart in front of our eyes. There has been a long list of troubling hallucinations and concerns over how well this technology can take on the roles it is being given. Anthropic seemingly wants to avoid this as much as possible.

“We’re trying to build safe, interpretable, steerable AI systems. These things are getting more and more powerful, and because they’re more powerful, they almost necessarily get more dangerous unless you put guardrails around them to make them safe intentionally,” White explained.

“We want to improve the frontier of intelligence, but do so in a way that is safe and interpretable. We almost necessarily need to innovate on safety in order to achieve our mission to deploy a transformative AI.”

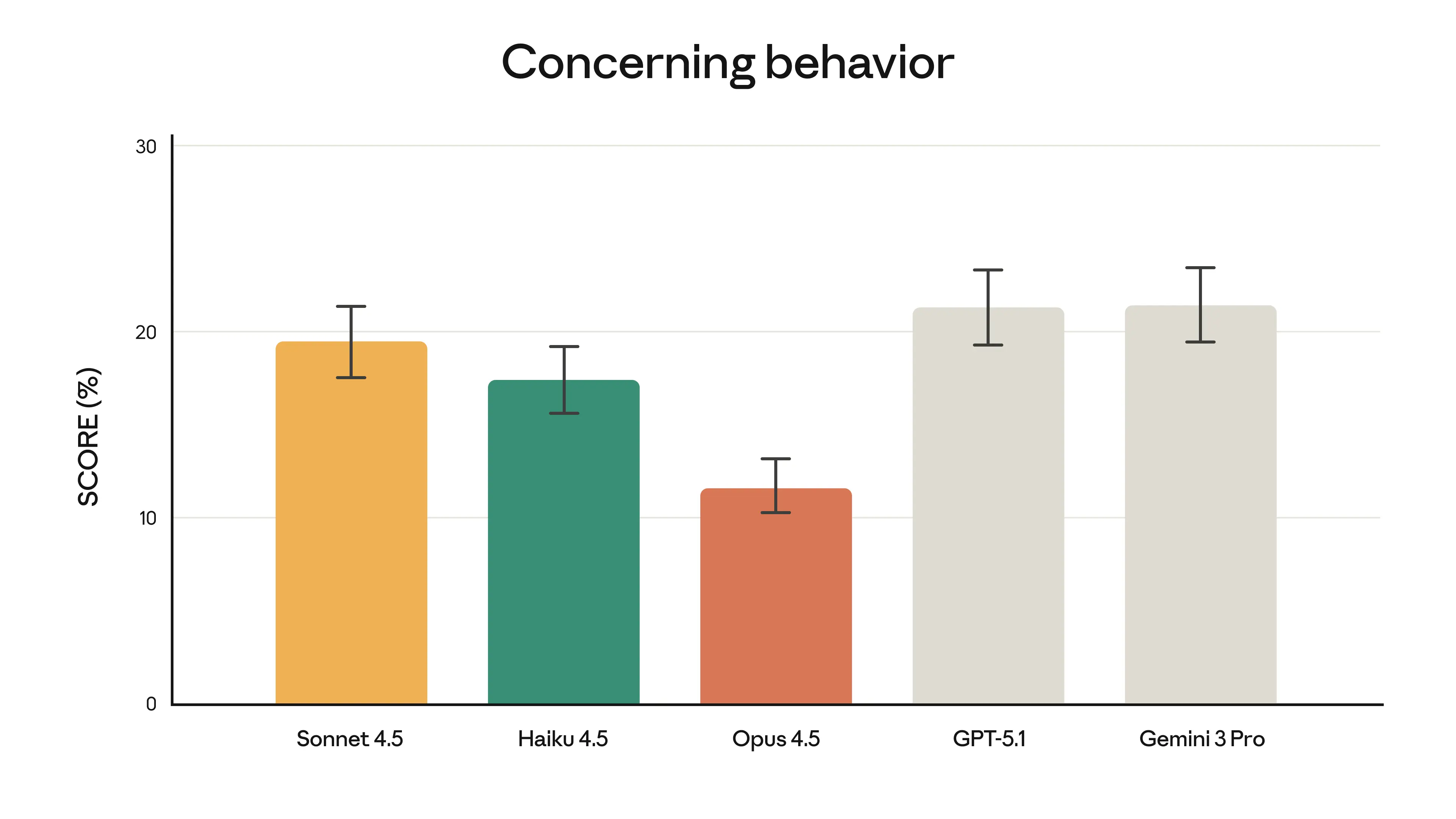

When Anthropic launched Claude Opus 4.5 at the end of last year, it claimed it was the most robustly aligned model it had released, better able to resist hackers, cybercriminals and malicious users than its competitors.

In fact, across factors including current harms, existential safety, risk assessment and more, Anthropic received the highest safety rating of any AI company in the Safety Index, narrowly outperforming OpenAI.

In recent times, concerns about AI haven’t just been about how steerable it is. Chatbots like Grok and ChatGPT have been accused of being overly agreeable or too willing to push users in certain directions.

When asked if this is something the team considers when designing safe AI, White didn’t go into much detail, but agreed it was something the team thought about during the design process.

White then went on to explain that prioritizing safety shouldn’t be seen as something holding the company back, but as a positive.

“Safety is just part of making sure that we build powerful things. We do not need to think of safety as a trade-off, it is just a necessary requirement and you can do it and show everyone that it is possible. That’s what is most important to me, to show that safety isn’t a limitation.”

Browsers and agents

In recent months, we’ve seen AI explode out of its usual confines. Where it was once limited to chatbots and small tools, some of the bigger companies have now created their own browsers, extensions and plugins.

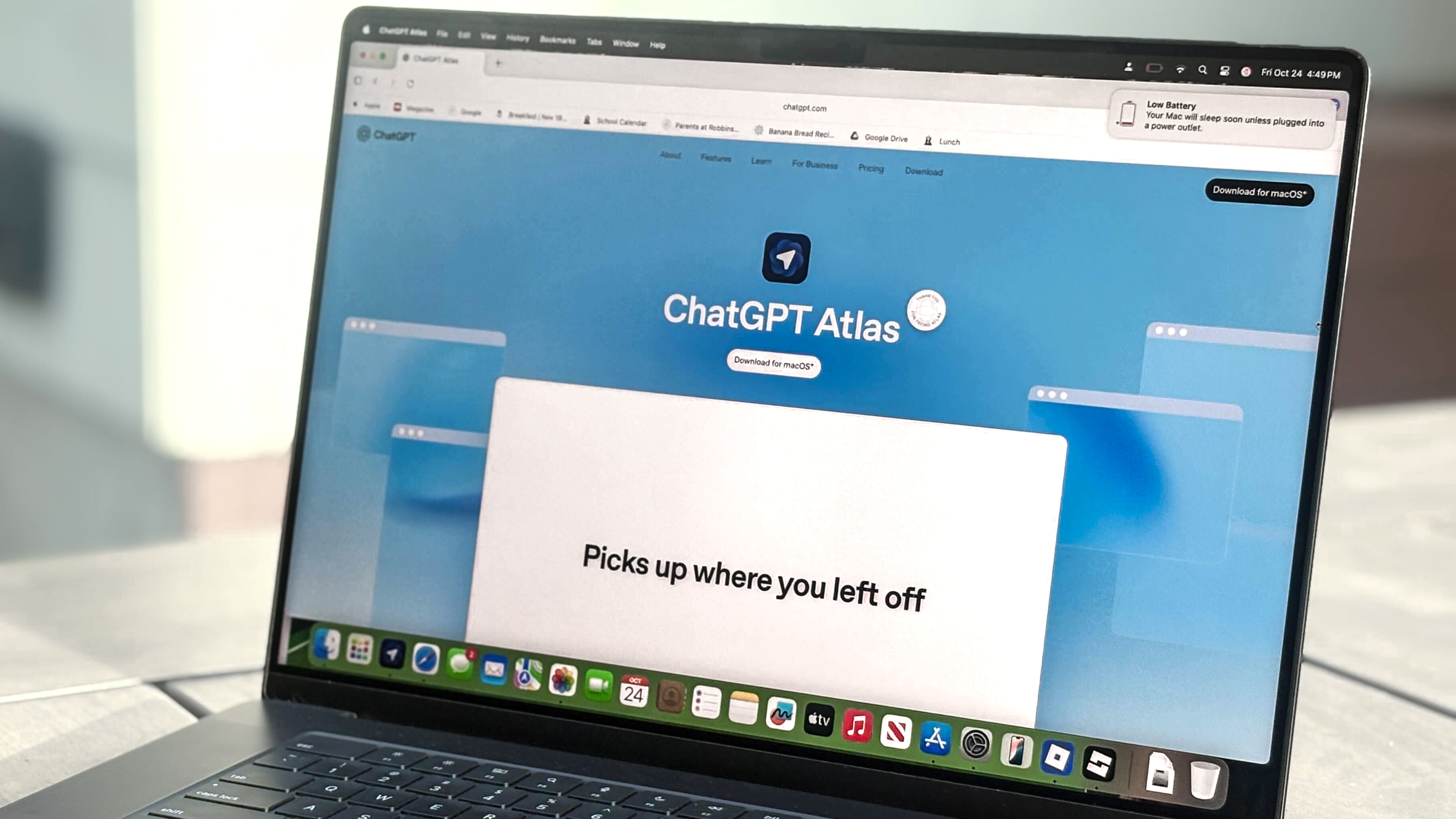

OpenAI launched its Atlas browser, Google has integrated Gemini into the likes of Gmail and Google Calendar, and Anthropic has created its own browser extension for Claude.

With the launch of these tools came new security risks. OpenAI’s Atlas, along with Perplexity’s Comet, came under fire over the risk of prompt injection. This is where someone hides malicious instructions for an AI within a web page or text it is interacting with. When the AI reads that content, it can end up following the hidden instructions as if they came from the user.

When AI was confined to chatbots that couldn’t interact with the outside world, it was a lot safer. The risk comes when it begins to interact more with the internet, taking over certain controls or being given access to web pages.

“In the last couple of months, when we were building the Claude browser extension, which allows Claude to control your browser and take actions on its own, we discovered that there were novel prompt injection risks,” White explained.

“Instead of launching it to everybody, we launched it to a very small set of experimental users who understood the risks, allowing us to test the technology before it was launched more widely.”

While Claude’s browser extension has proved successful, it is far more limited in what it can do than Copilot or the browsers from Perplexity and OpenAI. When asked whether Anthropic would ever build a full browser of its own, White was hesitant.

“Claude started out as a really simple chatbot, and now it is more similar to a really capable collaborator that can interact with all of these different tools and knowledge bases. These are significant tasks that would take a person many hours to do themselves,” White said.

“But by having access to all of these systems, it does add new risk vectors. But instead of saying we’re not going to do something new, we’re going to solve for these vectors. We’re going to continue to investigate new ways of using Claude and new ways of it taking stuff off your hands, but we’re going to do it safely.”

The bells and whistles

Claude has had a laser focus on its goals. Unlike its competitors, Anthropic has made no time for image or video generation and has shown little interest in any feature that doesn’t offer a practical use case.

“We’ve prioritized areas that can help Claude advance people and push their thinking forward, helping them solve bigger meatier challenges and that has just been the most critical path for us,” said White.

“We’re really just trying to create safe AGI, and the reality is that the path to safe AGI is solving really hard problems, often in professional contexts, but also in personal situations. So we’re working on faster speeds, better optimization and safer models.”

Describing how he sees Claude’s role, White explained that he wants it to be a genuinely useful tool that can eliminate the problems in your life. In the future, he hopes it will reach the point where it can handle some of life’s biggest challenges, freeing up hours of our day for other things.

It’s not just the absence of features like image generators; Anthropic feels like a more serious company overall than its competitors. Its time and effort are spent on research, it is less interested in appealing to the wider consumer market, and the tools it focuses on are dedicated to coding, workflows and productivity.

Alex is the AI editor at Tom’s Guide. Dialed into all things artificial intelligence in the world right now, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

He was highly commended in the Specialist Writer category at the 2023 BSMEs and was part of the team that won Best Podcast at the 2025 BSMEs.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.
