AI becomes dumber when trained on viral internet content — and there's no cure

Turns out AI doomscrolls too

Whether it's hours spent scrolling through TikTok or a good doomscroll across long, rambling X threads, we’re all guilty of engaging in one of life’s more recent trends: "brainrot".

That rather intense phrase refers to a decline in concentration driven by short-form, attention-grabbing content. In other words, content designed for a reduced attention span.

But while humans are guilty of this, it turns out that we’re not the only ones. A team of scientists has found that AI models, when trained on this type of content, show exactly the same response.

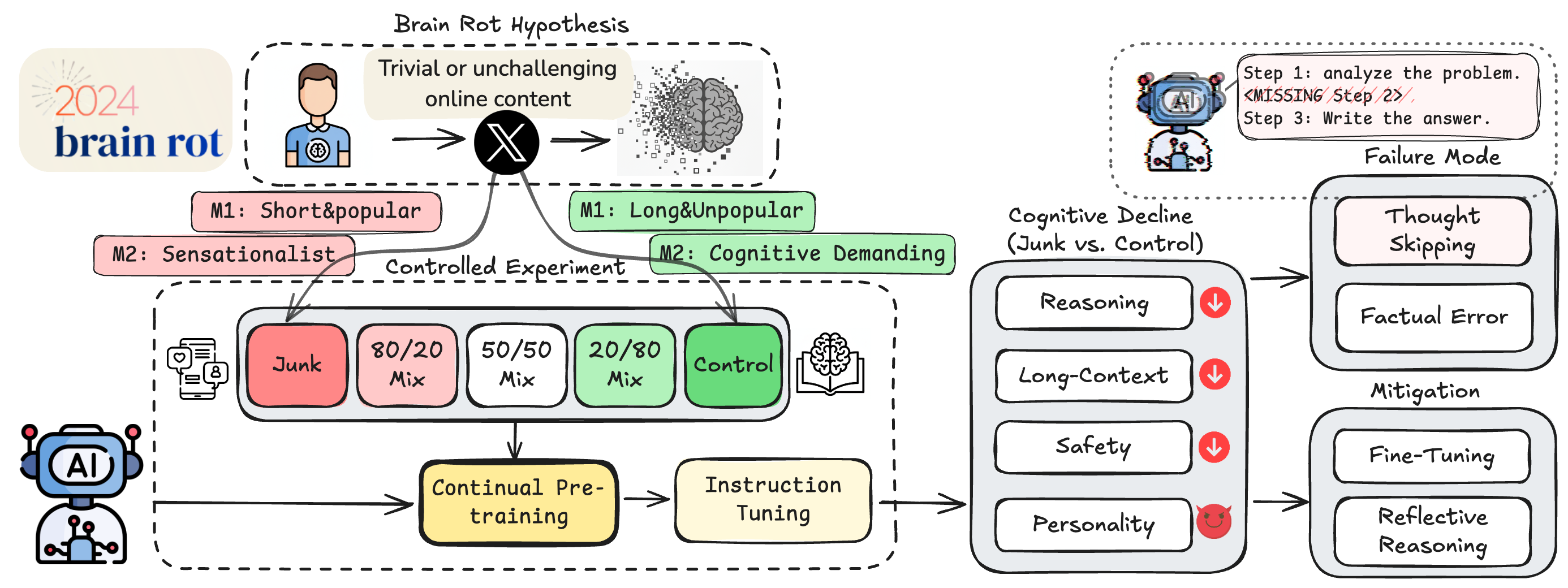

The team, made up of researchers from Texas A&M University, the University of Texas at Austin, and Purdue University, fed a collection of large language models months of viral, high-engagement content from X.

After this, the models’ reasoning ability fell by 23% and their long-context memory dropped by 30%. When put through personality tests, the models also showed spikes in narcissism and psychopathy.

On top of that, even when the models were retrained on high-quality data, the effects of the ‘brainrot’ remained. In humans, endless short-form content can rewire our brains. When we scroll on something like TikTok, we're getting the dopamine blast in short bursts, and the same seems to happen to AI.

The researchers created two data sets. In the first, there were short high-engagement X posts. The second included longer, more well-thought-out posts, but they weren’t as likely to go viral.
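To make that split concrete, here is a minimal Python sketch of how posts might be sorted into two such sets. Everything in it is an assumption for illustration: the `Post` fields, the `split_posts` helper and the thresholds (`max_junk_chars`, `min_junk_engagement`) are hypothetical, not the researchers' actual criteria.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    likes: int
    reposts: int


def split_posts(posts, max_junk_chars=100, min_junk_engagement=500):
    """Sort posts into a short-and-viral set and a longer, less viral set.

    The thresholds are made up for illustration only.
    """
    viral, considered = [], []
    for post in posts:
        engagement = post.likes + post.reposts
        is_short = len(post.text) <= max_junk_chars
        if is_short and engagement >= min_junk_engagement:
            viral.append(post)
        elif not is_short and engagement < min_junk_engagement:
            considered.append(post)
        # Posts matching neither profile are left out of both sets.
    return viral, considered


sample = [
    Post("you won't BELIEVE this", likes=9000, reposts=4000),
    Post("A longer post that walks through an argument step by step. " * 4,
         likes=12, reposts=1),
    Post("short but ignored", likes=2, reposts=0),
]
viral, considered = split_posts(sample)
print(len(viral), len(considered))
```

In this toy sample, one post lands in each set and the third is dropped, since it is short but never took off.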

They then retrained two AI models, Llama 3 and Qwen, on each of these data sets separately. Once they had been retrained, the models were benchmarked using well-known AI tests.

In the reasoning task, accuracy fell from 74.9% to 57.2% and in a test of how much information the AI model could analyze in one go, results fell from 84.4% to 52.3%.
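For anyone who wants to check the arithmetic, here is a minimal Python sketch that turns those before-and-after scores into two kinds of drop: percentage points and relative percentage. The function name `score_drop` is ours, purely for illustration. The input numbers are the ones quoted in this article.

```python
def score_drop(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point drop, relative drop in percent)."""
    points = before - after
    relative = points / before * 100
    return round(points, 1), round(relative, 1)


# Accuracy scores quoted in the article, in percent.
reasoning = score_drop(74.9, 57.2)
long_context = score_drop(84.4, 52.3)

print(f"Reasoning: {reasoning[0]} points, {reasoning[1]}% relative")
print(f"Long context: {long_context[0]} points, {long_context[1]}% relative")
```

On these figures, the reasoning score falls by 17.7 points, or about 23.6% in relative terms, which lines up with the 23% drop mentioned earlier. The long-context score falls by 32.1 points. Points and relative percentages are easy to mix up, so it is worth knowing which one a headline number refers to.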

As in humans, the brainrot effect reduced the models’ ability to concentrate on longer tasks. When trained on the lower-quality content, the AI models were seen skipping important steps to get to the end of the task.

What does this mean for AI?

This all seems very interesting, but AI models aren’t sat scrolling through social media each day to train, right? Well, it is slightly more complicated than that.

AI models like ChatGPT are trained in closed-off situations. While ChatGPT and other models like it can reference trends from the internet, or what is trending on a platform like X, they are simply referencing that world, not actually learning from it.

For the largest AI models, the training process is selective and carefully managed to avoid risk. What this study does show, though, is how easily a chatbot could degenerate, especially as these systems are given more control.

A brain-rotted ChatGPT is unlikely in the future, but this is a demonstration of how easily AI models can pick up bad habits from bad data, and why they need a diet of high-quality information.

Yes, AI doesn't actually have a brain to rot, and it doesn't have a personality to become narcissistic. But these models can very easily mirror what they are fed, especially when exposed to material that hasn't been screened.

As AI is given more control, maybe it will start to need health screenings to make sure it hasn't ingested content that could affect its performance? These models are both complicated and incredibly expensive to produce.

Alex is the AI editor at Tom’s Guide. Dialed into all things artificial intelligence in the world right now, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

He was highly commended in the Specialist Writer category at the BSME's 2023 and was part of a team to win best podcast at the BSME's 2025.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.
