Artists turn AI against itself with tools designed to protect their work and break the algorithm — here’s how they are ‘poisoning the well’

A pixel-by-pixel rebellion


Artists are turning to technology to combat the growing threat from artificial intelligence-powered image generators such as Midjourney and Stable Diffusion. New techniques let them make subtle pixel-level changes to their images that look fine to humans but “poison the well” for AI tools.

While some AI image models only use licensed content, including Adobe’s Firefly and those from Google or Meta, others are trained by scraping the open web for source material.

This has created a conflict between the creatives making artwork and sharing it online, and the technologists who say AI simply takes inspiration from works, much like a human art student.

There have been attempts to stop the scraping through “no-follow” and similar opt-out instructions on web pages, but these aren’t always adhered to. A new tool called Nightshade lets artists turn the way AI is trained to their advantage, causing models trained on their work to produce unexpected images.
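Opt-out instructions of this kind are requests, not locks. As a minimal sketch, assuming the site publishes a robots.txt file (one common opt-out mechanism; the article doesn't say exactly which ones were tried), this is how a well-behaved crawler could check before downloading an image, using Python's standard `urllib.robotparser`. The crawler names, the robots.txt contents and the URL are all hypothetical. Nothing forces a scraper to run this check, which is the gap tools like Nightshade aim at.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an artist's portfolio site:
# block one (made-up) AI-training crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

def allowed(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text instead of fetching
    return parser.can_fetch(user_agent, url)

image_url = "https://artist.example/gallery/piece.png"
print(allowed("ExampleAIBot", image_url))   # False: this crawler is opted out
print(allowed("SomeSearchBot", image_url))  # True: falls back to the "*" rule
```

Compliance is entirely up to the crawler: one that skips `can_fetch` simply downloads the image anyway.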

How does Nightshade work?

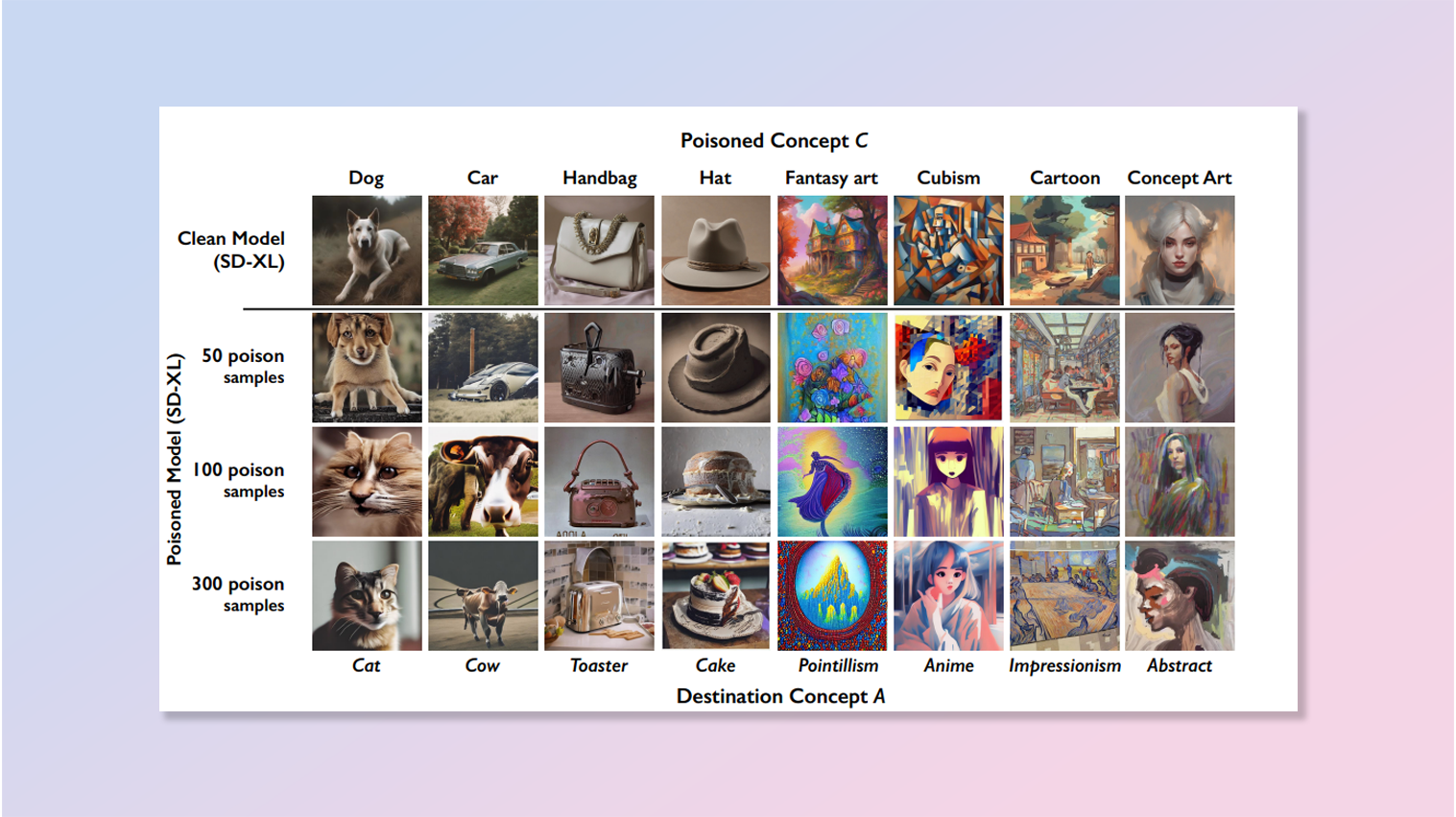

Nightshade subtly alters the pixels of an image in a way that misleads AI algorithms and computer vision systems but leaves the image looking unchanged to human eyes. If these altered images are scraped and used to train an AI model, the model can learn to classify them incorrectly.
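Roughly, the idea is to nudge an image's pixels so that a model's feature extractor "sees" a different concept (say, an egg) while people still see the original (a balloon). The toy sketch below illustrates that with projected gradient descent: every pixel may move by at most `eps`, and within that budget the image's features are pushed toward those of a target image. This is not Nightshade's code. The function `poison`, the random linear `extractor` and the `eps` budget are hypothetical stand-ins; the real tool works against the feature extractors of actual text-to-image models and uses its own constraint on visible change.

```python
import numpy as np

def poison(image, target, extractor, eps=0.03, steps=200, lr=0.01):
    """Toy projected gradient descent: nudge `image` so its features approach
    those of `target`, keeping every pixel within +/- eps of the original."""
    delta = np.zeros_like(image)
    for _ in range(steps):
        # Feature-space gap between the perturbed image and the target concept
        diff = extractor @ (image + delta) - extractor @ target
        # Gradient of 0.5 * ||diff||^2 with respect to delta (linear extractor)
        grad = extractor.T @ diff
        delta -= lr * np.sign(grad)                      # signed-gradient step
        delta = np.clip(delta, -eps, eps)                # per-pixel change budget
        delta = np.clip(image + delta, 0.0, 1.0) - image  # stay a valid image
    return image + delta

rng = np.random.default_rng(0)
image = rng.random(64)                # flattened 8x8 greyscale "balloon", values in [0, 1]
target = rng.random(64)               # image of the concept the model should be fooled into seeing
extractor = rng.normal(size=(8, 64))  # hypothetical linear feature extractor

poisoned = poison(image, target, extractor)
before = np.linalg.norm(extractor @ image - extractor @ target)
after = np.linalg.norm(extractor @ poisoned - extractor @ target)
print(f"feature gap to target: {before:.2f} -> {after:.2f}")
print(f"largest pixel change: {np.max(np.abs(poisoned - image)):.3f}")
```

The pixel budget is what keeps the change hard to spot; the feature gap shrinking is what would mislead a model that trains on the image.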

If a user then asks an AI image generator for, say, a red balloon set against a blue sky, instead of the red balloon they might be given an image of an egg or a watermelon.

This is evidence that the image generator’s dataset has been poisoned, explained T.J. Thomson of RMIT University, who was not involved in Nightshade.


Writing in The Conversation, Thomson explained: “The higher the number of ‘poisoned’ images in the training data, the greater the disruption. Because of how generative AI works, the damage from ‘poisoned’ images also affects related prompt keywords.”
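The "more poison, more disruption" point can be illustrated with a deliberately crude toy model, sketched below under heavy assumptions: the model's learned meaning of the caption "balloon" is just the average feature vector of every training image carrying that caption, and "generation" returns whichever reference concept is closest. The 2-D feature space, `train_concept` and `generate` are hypothetical; real models are far more complex, so this shows only the direction of the effect, not how many poisoned images a real attack needs.

```python
import numpy as np

# Hypothetical 2-D feature space with two reference concepts
CONCEPTS = {"balloon": np.array([1.0, 0.0]), "egg": np.array([0.0, 1.0])}

def train_concept(n_clean, n_poisoned):
    """Toy 'training': the learned meaning of the caption "balloon" is the mean
    feature vector of all images with that caption. Poisoned images carry the
    caption "balloon" but have egg-like features."""
    features = [CONCEPTS["balloon"]] * n_clean + [CONCEPTS["egg"]] * n_poisoned
    return np.mean(features, axis=0)

def generate(learned):
    """Toy 'generation': output the reference concept closest to what was learned."""
    return min(CONCEPTS, key=lambda name: np.linalg.norm(CONCEPTS[name] - learned))

for n_poisoned in (0, 100, 400, 600):
    learned = train_concept(n_clean=1000 - n_poisoned, n_poisoned=n_poisoned)
    drift = np.linalg.norm(learned - CONCEPTS["balloon"])
    print(f"{n_poisoned:>4} poisoned of 1000: drift {drift:.3f}, "
          f"prompt 'balloon' -> {generate(learned)}")
```

In this toy the learned "balloon" drifts further toward "egg" with every batch of poisoned images, and the prompt's output flips once the poisoned images outnumber the clean ones.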

Why is this important?

A growing number of text-to-image generators are trained using licensed data. For example, Adobe has a deal with Shutterstock, and Getty Images built its own AI generator trained on its library of images.

Those trained on publicly accessible images from the open web are increasingly facing lawsuits, no-scrape restrictions from different websites and criticism from artists.

“A moderate number of Nightshade attacks can destabilize general features in a text-to-image generative model, effectively disabling its ability to generate meaningful images,” the University of Chicago team behind Nightshade wrote in their paper, published on the arXiv preprint server.

They see the tool as a "last line of defense", to be used when companies refuse to respect no-scrape rules or continue to use copyrighted content in image generation.

What happens next?

As with any battle between two sides working in the technology space, there will likely be return fire from the image generators. This could come in the form of tweaks to the algorithm to counter the Nightshade changes.

Companies are also fine-tuning their models by having humans choose between different images. Ahead of the launch of version 6, Midjourney developers asked users to "rank the most beautiful" of two images.
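Midjourney hasn't said exactly how it uses those votes, so the following is only an illustration of one standard way to turn "pick the better of two" answers into a score per image: the Bradley-Terry model, the same pairwise idea behind many reward models used for fine-tuning. The function `fit_bradley_terry`, the three images and the votes are hypothetical.

```python
import numpy as np

def fit_bradley_terry(n_images, votes, steps=2000, lr=0.05):
    """Estimate one preference score per image from pairwise votes.

    `votes` is a list of (winner, loser) index pairs. The Bradley-Terry model
    assumes P(i is preferred to j) = sigmoid(score_i - score_j); the scores are
    fitted by plain gradient ascent on the log-likelihood of the votes."""
    scores = np.zeros(n_images)
    for _ in range(steps):
        grad = np.zeros(n_images)
        for winner, loser in votes:
            p_win = 1.0 / (1.0 + np.exp(-(scores[winner] - scores[loser])))
            # Derivative of log P(winner preferred to loser) for each score
            grad[winner] += 1.0 - p_win
            grad[loser] -= 1.0 - p_win
        scores += lr * grad
        scores -= scores.mean()  # only differences between scores matter
    return scores

# Hypothetical votes on three images from "which is more beautiful?" prompts
votes = ([(0, 1)] * 3 + [(1, 0)]
         + [(0, 2)] * 3 + [(2, 0)]
         + [(1, 2)] * 3 + [(2, 1)])
scores = fit_bradley_terry(3, votes)
print("ranking, best first:", np.argsort(-scores))
```

Scores like these can then serve as a training signal, steering a model toward images people tend to prefer.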

In the end it will come down to regulation. Copyright laws will need to be adapted to reflect the concerns of artists on data input, but also the output generated by the AI image tools, especially where there is extensive prompting to create an image.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
