I write about AI for a living and Apple's iPad event has left me very excited for WWDC in June

New AI software is right around the corner

Apple has had a change of heart about the letters AI in recent months, going from not uttering the initials at all during WWDC 2023 to proclaiming the new iPad Pro as one of the best AI devices in its category during its recent Let Loose event.

Some of this is driven by market forces, with a company's stock price now rising and falling with its perceived success in artificial intelligence, but there is also a deeper cultural shift bringing AI to the forefront.

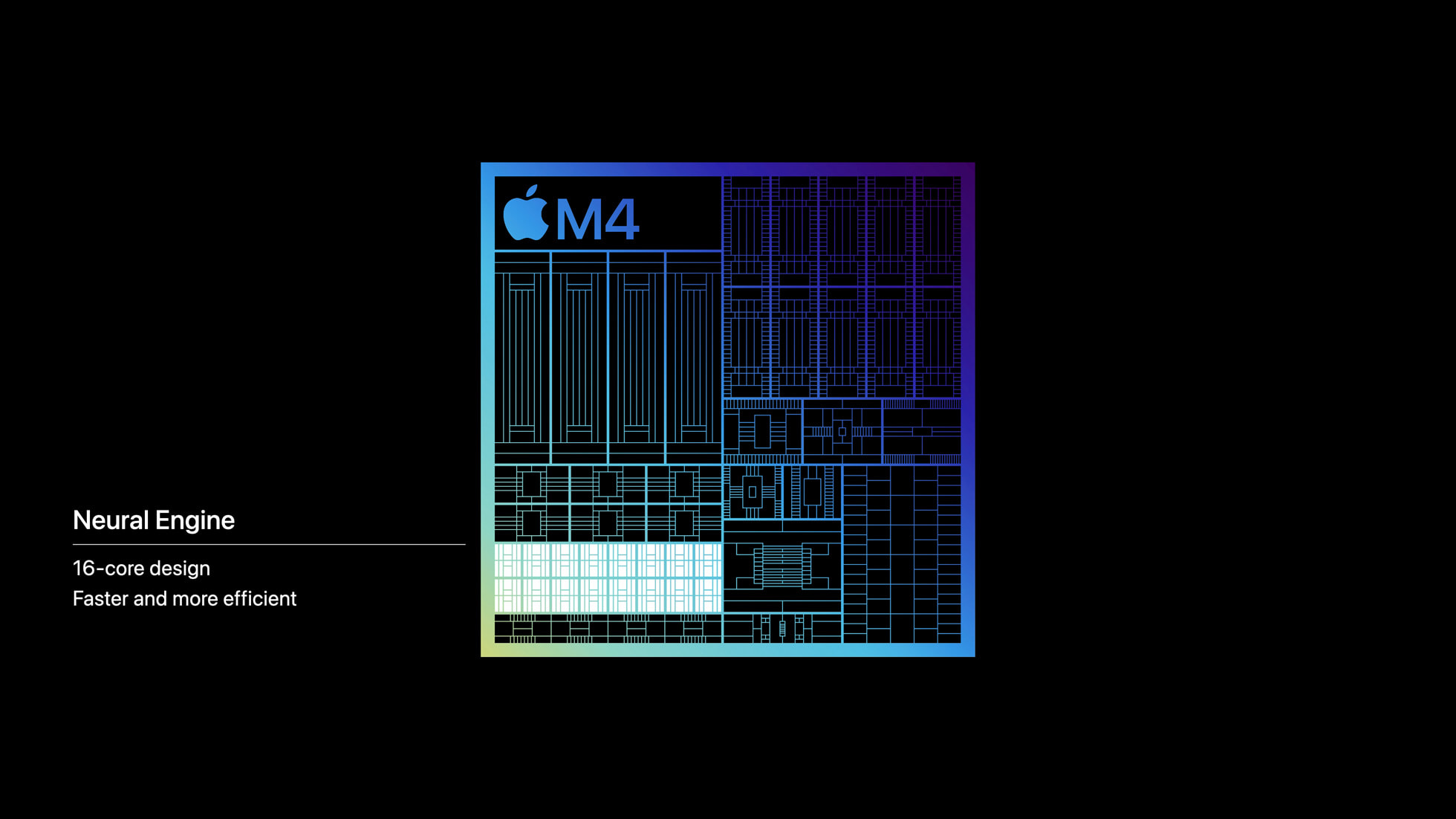

Looking back on the event, with the arrival of the AI-ready M4 chip and the mention of CoreML, Apple’s developer kit for AI, I think WWDC is going to be all-in on AI. I've held the view that Apple is in a good place to be an AI leader, and that hasn't changed.

Rumors suggest that the M4 will be in all Apple laptops going forward. With a Neural Engine rated at 38 trillion operations per second, the chip is capable of running complex AI models like Meta's Llama 3, or even various Stable Diffusion image generators, locally with minimal effort.

Why is Apple moving on AI now?

Not long after the launch of ChatGPT, Tim Cook chose to talk about products powered by AI rather than about AI as a tool in its own right. These included transcription on the iPhone, removing the background from images and the health features on the Apple Watch.

While it might seem like Apple is behind companies like Meta, Google and Microsoft when it comes to AI, the reality is that Apple has been at the forefront of the technology for decades. It has simply been reluctant to call it AI, and the Let Loose event gave us an insight into what comes next.

This is most obviously seen in the hardware. Intel made a big deal last year about its Core Ultra systems-on-chip, which combine a CPU, a GPU and now an NPU. Qualcomm has made similar boasts about its Snapdragon range, but Apple has been building an NPU into its chips since the A11 Bionic in 2017.

Cook said during a recent earnings call that Apple would benefit from its "unique combination of seamless hardware, software, and services integration, groundbreaking Apple Silicon with [its] industry-leading neural engines, and [Apple's] unwavering focus on privacy."

Despite Apple not even mentioning AI, and CoreML being something of a developer afterthought, the company has continued to upgrade its Neural Engine, making it faster, more responsive and more capable.

Some of this has led to advancements in machine learning for health and visual applications, but so far no major generative AI features have appeared. That will change next month.

What will we see at WWDC 2024?

Nobody knows for certain what WWDC 2024 will bring (at least, nobody outside Apple's inner circle), but we can make some educated guesses based on what has been revealed so far.

I suspect we'll get confirmation that the M4 is going into all future MacBooks. That would allow Apple silicon to run more powerful on-device AI applications, both inside Apple's own software and in apps from third-party developers.

We may even see other versions of the M4, perhaps an M4 Ultra, geared towards training new AI models, or at least fine-tuning them, so that developers can integrate a custom model into their applications.

Developers are the main audience for Apple at WWDC, as they build the apps and software that drive the wider ecosystem. I suspect we will get a major upgrade to CoreML, including access to on-device large language, vision and image generation models.

Last year, Apple's machine learning research team launched MLX, a framework that makes it easy to install and run generative AI models such as OpenAI's Whisper transcription model or Stability AI's Stable Diffusion locally on Apple Silicon.

Apple has been rumored to be in talks with everyone from Google and OpenAI to China’s Baidu over the use of various models.

Some predict these deals will power generative features in Siri 2.0 through the cloud. That may be the case, but I suspect Apple also wants to license models that run on iPhones, iPads and MacBooks, which developers can call without making cloud requests.

Overall I think what we'll get is a dramatic shift towards AI. Apple will be encouraging developers to utilize on-device AI models and may even give prominent App Store placement to AI apps.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio (a task so disliked he outsourced it to an AI), Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
