Microsoft's new Phi-3 is one of the smallest AI models available — and it performs better than its larger rivals

It can run on your phone

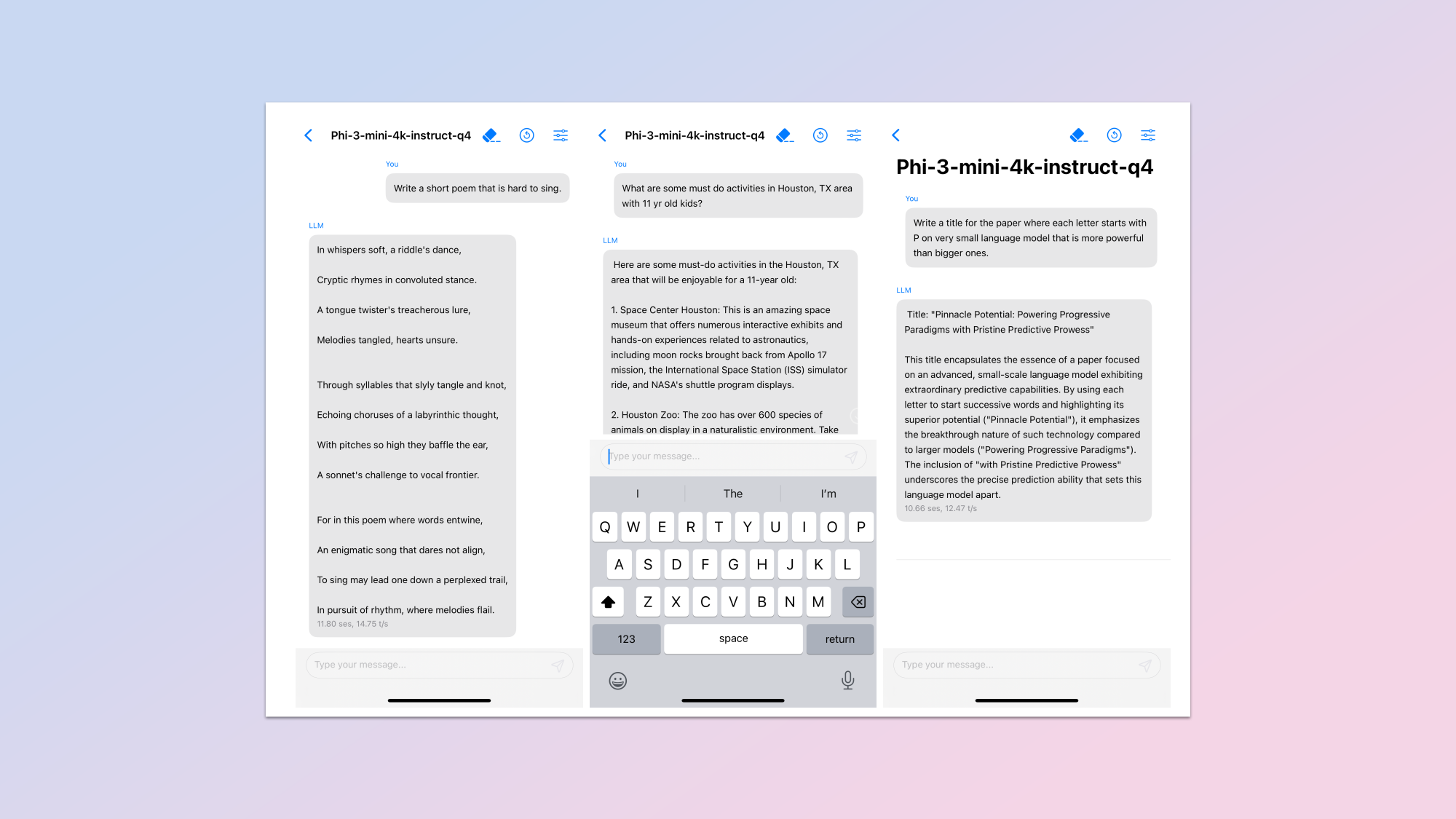

Microsoft has revealed its impressive new Phi-3 artificial intelligence model. This is a tiny model compared with the likes of GPT-4, Gemini or Llama 3, but it packs a punch for its size.

Known as small language models, these lightweight AIs make it cheaper and easier to run certain tasks without having to use the heavy computing power of the bigger models.

Despite its tiny size, Phi-3 mini has already performed as well as Llama 2 on some benchmarks, with Microsoft saying it is as responsive as a model 10 times its size.

What isn't clear is whether this might form part of a future update to Copilot as Microsoft looks to bring more of its functionality on-device, or whether this will remain as a standalone project.

What is Microsoft Phi-3?

Microsoft Phi-3 was trained with a "curriculum", according to VP of Azure Eric Boyd. Speaking to The Verge, Boyd said the team took a list of 3,000 words and asked a larger language model to write children's books to teach Phi. It was then trained on these new books.

We introduce phi-3-mini ... whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5 despite being small enough to be deployed on a phone.

Microsoft Research

Phi-3 comes in three sizes: mini, which has just 3.8 billion parameters, a 7 billion parameter small model and a 14 billion parameter medium model. In comparison, GPT-4 is reported to have more than a trillion parameters and the smallest Llama 3 model has 8 billion.
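To give a rough sense of why parameter count matters for running a model on a phone, here is a minimal back-of-the-envelope sketch in Python. It uses the three Phi-3 sizes quoted above and estimates the memory needed to hold the weights alone. The storage precisions (16-bit, 8-bit and 4-bit) and the function names are assumptions chosen for illustration, not figures or code from Microsoft, and a real deployment needs extra memory beyond the weights.

```python
# Parameter counts quoted in the article, in billions.
PHI3_PARAMS_BILLIONS = {"mini": 3.8, "small": 7.0, "medium": 14.0}

# Assumed storage precisions in bits per weight (illustrative, not from the article).
BITS_PER_WEIGHT = {"fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def footprint_table() -> dict[str, dict[str, float]]:
    """Estimated weight memory for every model size and assumed precision."""
    return {
        name: {
            precision: round(weight_memory_gb(params, bits), 2)
            for precision, bits in BITS_PER_WEIGHT.items()
        }
        for name, params in PHI3_PARAMS_BILLIONS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        cells = ", ".join(f"{precision}: {gb} GB" for precision, gb in row.items())
        print(f"phi-3-{name}: {cells}")
```

Under these assumptions, the estimate scales linearly with both parameter count and bits per weight, which is why a model with a few billion parameters is a far more plausible fit for a handset than one with hundreds of billions.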

Phi-3 is built on top of the learning from Phi-1, which focused on coding, and Phi-2 which was taught to reason. This improved reasoning is how it can match GPT-3.5 in response quality despite training on a much smaller dataset.


This shift to smaller models performing as well as, or even outperforming, the big players is a growing trend. Meta's Llama 3 70B has almost reached GPT-4 levels in some benchmarks and smaller models seem to be finding specific niches.

Phi-3’s performance “rivals that of models such as Mixtral 8x7B and GPT-3.5,” the researchers from Microsoft explained in a paper on the new model. This happened “despite being small enough to be deployed on a phone.”

The innovation was entirely down to the dataset, according to the team. This was made up of heavily filtered web data and the synthetic data from the children’s books written by another AI model.

Why do we need Phi-3?

phi-3 is here, and it's ... good :-). I made a quick short demo to give you a feel of what phi-3-mini (3.8B) can do. Stay tuned for the open weights release and more announcements tomorrow morning! (And ofc this wouldn't be complete without the usual table of benchmarks!) pic.twitter.com/AWA7Km59rp

April 23, 2024

Microsoft Phi-3 is designed to run on a wider range of devices, and much faster, than is possible with larger models. It joins other compact models like Stability AI's Zephyr and Google's Gemini Nano that can run on a laptop or phone with no internet required. Anthropic’s Claude 3 Haiku targets similar speed and cost savings, though it is offered only as a cloud service.

In future these models could be bundled with a smartphone, embedded on a chip that sits inside a smart speaker or even built into your fridge to give you advice on your dietary habits.

While cloud-based models like Google Gemini Ultra, Claude 3 Opus and GPT-4-Turbo will generally outperform the smaller models across most areas, they have several drawbacks, including cost, speed and the need for an internet connection.

These tiny models will allow you to chat to your virtual assistant even if you have no internet connection, have the AI summarize content without sending data off the device, or even work in an internet of things device without you knowing AI is at play.

Apple is said to be relying almost entirely on these local models to power the next generation of generative AI features in iOS 18, and Google has deployed Gemini Nano to more Android handsets.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.
