Project Astra is back and better than ever — how Google is using AI to make its assistant even smarter

A lot can change in a year

If there was a star of last year's Google I/O, it was Project Astra, Google's attempt to build an AI-powered assistant that can see what you see, act on what you say and — perhaps most impressively — remember it all.

Google's had a year to beef up Project Astra, and the version the company had on display at Google I/O 2025 shows that the assistant has come a long way in the last 12 months.

While last year's Project Astra demo featured some object recognition chops, a video shown during the I/O keynote featured a much more sophisticated AI assistant, surpassing the features that Google has integrated into Gemini Live.

It's all part of Google's stated goal to build a universal AI assistant, which the company defines as an assistant that's not only intelligent but also understands your context. In addition, Google's vision of a universal assistant can plan and take action on your behalf.

Project Astra at Google I/O 2025

Those skills were certainly on display in the Google I/O 2025 demo video for Project Astra. The video featured a man speaking to the assistant on his phone while he set about repairing a bike. He started off by asking the assistant to go online and look up a repair manual for a particular brand of bike; the assistant responded by finding a user manual and asking what specific topic the man needed to look up.

When the repair work was sidelined by a stripped screw, the man asked the assistant to pull up a YouTube video with tips on how to work around that problem. The assistant not only found some videos but highlighted one that it thought sounded particularly relevant.

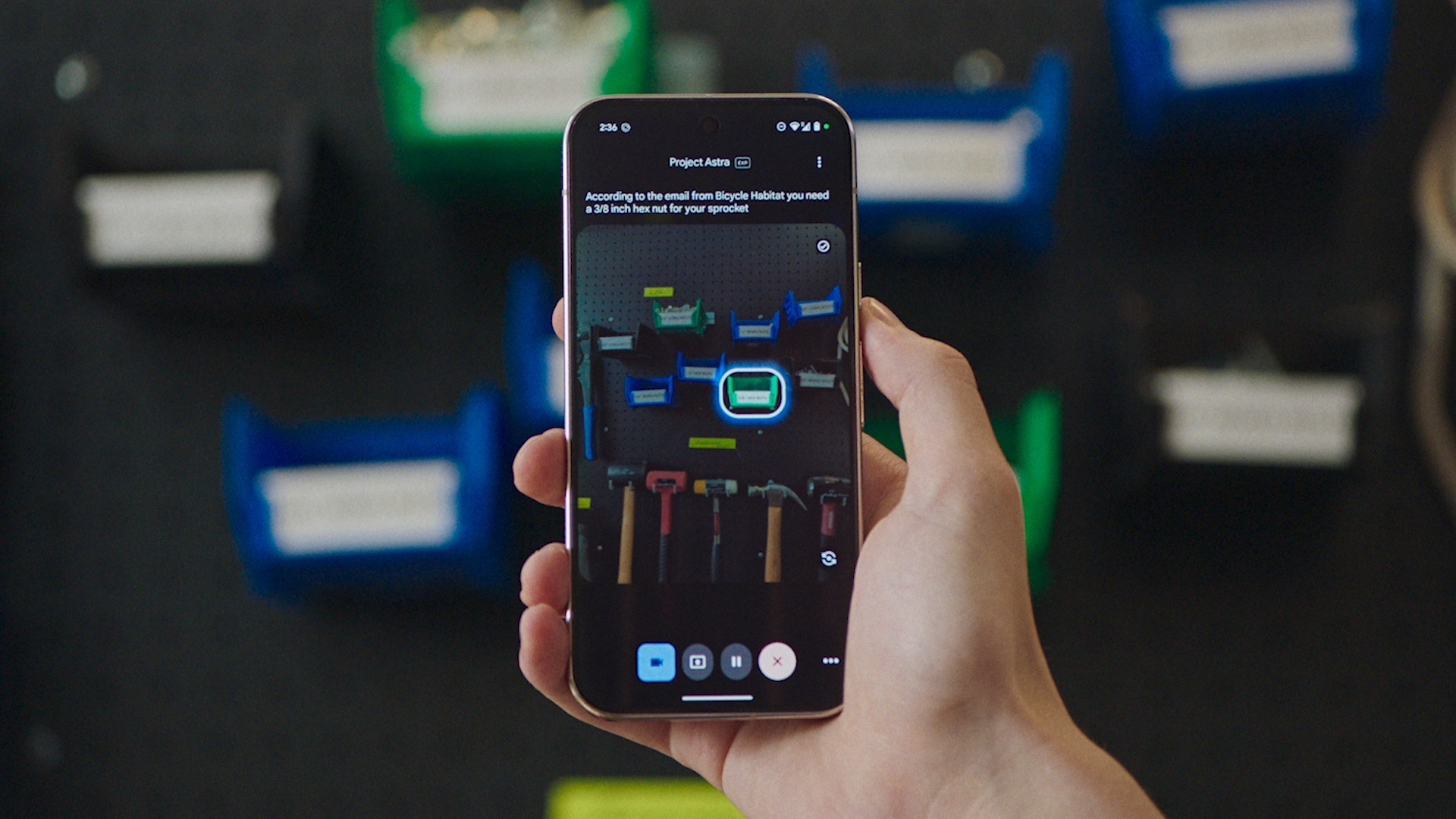

When the man needed a particular hex nut for his repair, he asked the assistant to find a specific email with that information from a bike repair shop. At the same time, he also pointed at a bin of different-sized hex nuts, and the assistant highlighted on the phone screen the one to grab.

When a part for the repair wasn't available, the man asked his AI assistant to find the nearest bike shop. The assistant proactively placed a phone call to the shop and said it would find out when the shop had the part in stock.

Gemini Live/Project Astra demo at #GoogleIO2025 pic.twitter.com/dcY24zWVBr (May 20, 2025)

In a demonstration of how Google's assistant can pick up conversations where it left off, another person interrupted while the assistant was reading back instructions from an online repair manual, and the assistant paused for the duration of that conversation. Afterward, the assistant resumed with "As I was saying..." before diving back into the instructions. As for that phone call earlier, the assistant told the man his part was in stock and asked if he wanted the assistant to place a pick-up order.

The assistant even demonstrated some personalization. As the man petted his dog and asked the assistant to search for appropriately sized dog baskets, the assistant was able to refer to the dog by name.

Project Astra outlook

All told, it's an impressive video that really shows off the ideal of what Google is aiming for when it talks about a universal AI assistant. But how close is it to reality?

Google says it has integrated several improvements into Gemini Live, such as making voice output sound more natural and improving memory. Select testers are providing Google with feedback, which Google hopes to use not only to improve Gemini Live but also to add new capabilities to Google searches.

The company also plans to add improvements to its Live API for developers and to bring this assistant to new devices like smart glasses — part of Google's overall goal of extending Gemini to new devices.

In other words, Google's Gemini may not be ready right away to help with bike repairs the way it does in that demo video. But that's the ultimate goal for Google, whether it's the assistant on your phone or some other device.

I'm currently at Google I/O 2025 in Mountain View, and I'll be keeping an eye out for any other demo opportunities involving Project Astra or any of the other AI capabilities on display at this annual developer conference.

Philip Michaels is a Managing Editor at Tom's Guide. He's been covering personal technology since 1999 and was in the building when Steve Jobs showed off the iPhone for the first time. He's been evaluating smartphones since that first iPhone debuted in 2007, and he's been following phone carriers and smartphone plans since 2015. He has strong opinions about Apple, the Oakland Athletics, old movies and proper butchery techniques. Follow him at @PhilipMichaels.
