Janus Pro hands-on — here's what happened when I put DeepSeek's new image platform to the test

DeepSeek's honeymoon period may be over


DeepSeek is on a roll. Not content with upsetting the apple cart with its ChatGPT-rivaling R1 model, it has just released a new multimodal model upgrade called Janus Pro.

The new models, available in 1B and 7B parameter sizes, can both generate images and understand them, a capability that is becoming an increasingly important part of modern AI.

I tried out this latest offering from what is easily the hottest AI company in the world right now.

If you're curious to try it for yourself, you can access the model on Hugging Face.

The promise

This is the second generation of the Janus model, and it's supposed to deliver improved image quality and the ability to render text in images.

Another key difference is that the new model combines visual understanding with image generation, so it can "see" an uploaded image and understand it.

This combination is unusual among conventional models. DeepSeek calls it unified multimodal.


The reality (for now)

Unfortunately, all this technology seems to have gotten in the way of creating a knockout product.

It's not so much that the model is bad; it's that the image generation feels two years old. Forget about creating human faces: they're distorted, twisted, and reminiscent of the very worst of early AI image generation. Think about what Stable Diffusion was like in 2023 and you'll know what I'm talking about.

It's as though we've all been whisked back in a time machine to the era of three-fingered humans, only now it's the whole body.

It's a shame, but I guess innovation often comes at a price. I spent quite a while trying to generate an image that came anywhere near the current state of the art, and failed miserably. You can see the examples below.

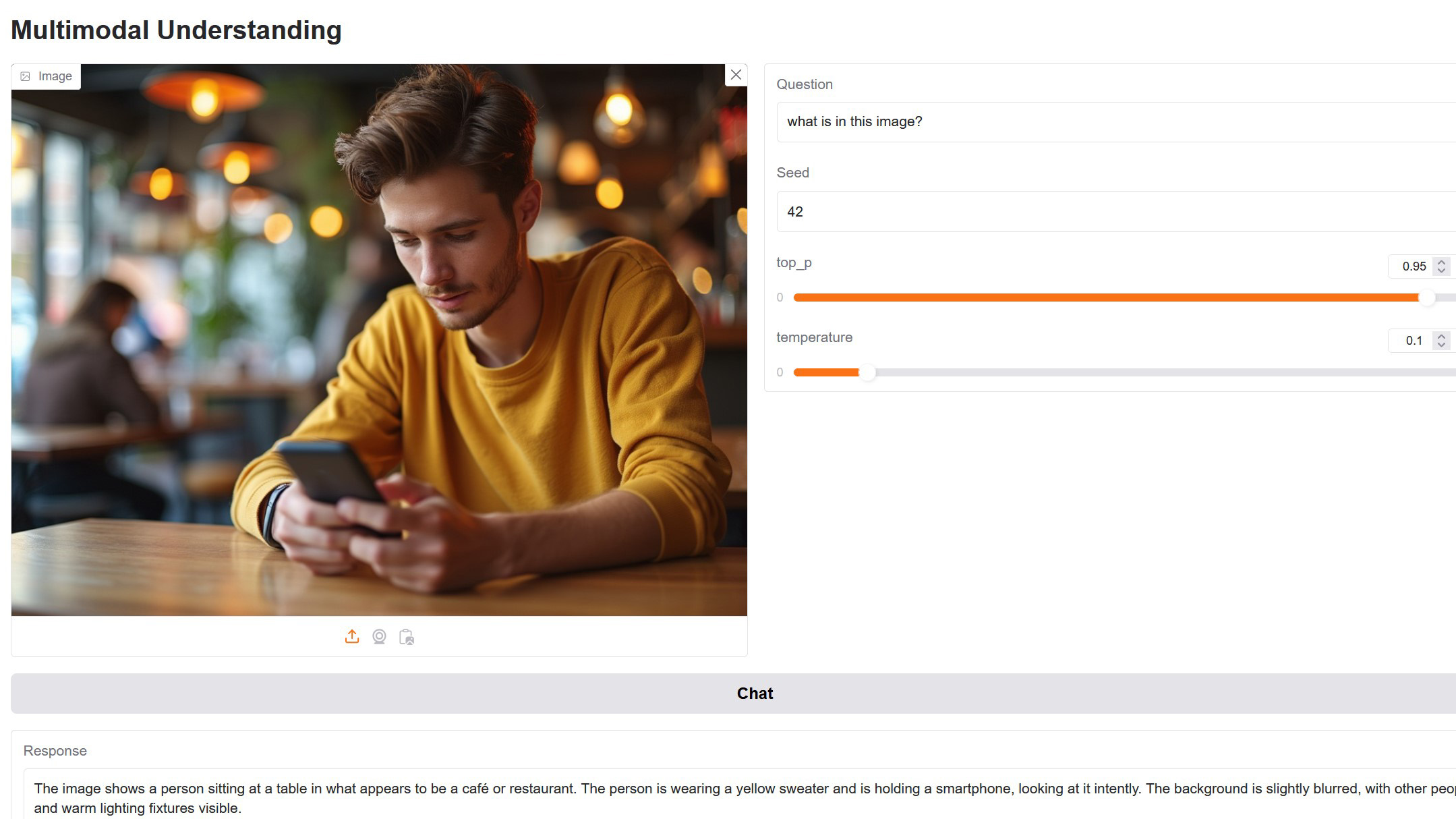

The good news is that image understanding seems to work fine. I uploaded a shot of someone looking at their mobile phone in a café, and the model accurately described what was in the image.

But this is hardly groundbreaking stuff; just about any vision model, proprietary or open source, can do this at the moment. Even the lowly LLaVA model, which is small enough to run on a home computer, can do this.

Bottom line

So where does that leave us? It's clear DeepSeek has once again tried to innovate with its model design, and on the face of it in a good way. Combining image generation with the ability to read images is a nice feature.

However, the report card on this attempt must read "could try harder."

I'm not sure how DeepSeek produced the demo images on its website, and I'm absolutely baffled by the text-rendering examples the company is boasting about.

Of course, these are small models at 1B and 7B parameters, but even so, one would hope for better output. I got nowhere near the demo results on DeepSeek's site, despite trying different configurations and both long and short prompts. It's a total mystery. Perhaps it's time for a trip back to the drawing board?


Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the technology industry. He produced the weekly Don't Panic technology column in the Sunday Times newspaper for 16 years and is the author of the Sunday Times book of Computer Answers, published by Harper Collins. He has been a technology pundit on Sky Television's Global Village program and a regular contributor to BBC Radio Five's Men's Hour.

He has an Honours degree in law (LLB) and a Master's Degree in Business Administration (MBA), and his work has made him an expert in all things software, AI, security, privacy, mobile, and other tech innovations. Nigel currently lives in West London and enjoys spending time meditating and listening to music.
