If you’ve been excited about putting AI inference tools to work for you, but don’t like relying on an internet-based tool that requires a constant connection, sends data out to external servers and doesn’t let you tweak it to your liking, we’ve got good news. OpenAI has released a new family of gpt-oss models that are ready to run locally on consumer-grade hardware, and (bonus) they run with ease and speed on NVIDIA RTX AI PCs.

This new model is flexible and lightweight. OpenAI has released it with open weights under the permissive Apache 2.0 license, so not only can you tweak how it behaves, but you’re also free to integrate it into apps and software of your own. It’s designed to follow instructions and explain its reasoning. This makes it useful for agentic tasks, and it lets you load your own data as source material, helping it become an expert assistant on whatever information you feed into it.

OpenAI’s gpt-oss-20b is built for efficient deployment. It responds to prompts with efficiency similar to OpenAI’s o3-mini model, and it can do so while running on devices with as little as 16GB of memory. Plenty of consumer systems offer those resources, so you no longer have to rely on a connection to massive data centers to load up gpt-oss-20b. And because the model runs locally, your data stays on your own machine, so you can feel more secure working with sensitive material.
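To see why a model of this size can fit in 16GB at all, a rough back-of-the-envelope estimate helps. The sketch below only counts weight storage and assumes roughly 20 billion parameters (taken from the "20b" in the model name) and two illustrative precisions, 16-bit and 4-bit. The function name and the chosen bit widths are assumptions for illustration, not published specifications, and real memory use will be higher once activations, context and runtime overhead are included.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: about 20 billion parameters, inferred from the model name.
N_PARAMS = 20e9

# Illustrative precisions only; the actual mix of formats may differ.
fp16_gb = weight_memory_gb(N_PARAMS, 16)
four_bit_gb = weight_memory_gb(N_PARAMS, 4)

print(f"16-bit weights: about {fp16_gb:.0f} GB")
print(f"4-bit weights:  about {four_bit_gb:.0f} GB")
```

Under these assumptions, 16-bit weights alone would overflow a 16GB device, while a 4-bit representation leaves headroom, which is why quantization matters so much for local inference.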

What else sets this model apart from the crowd? It uses chain-of-thought (CoT) reasoning to think through problems step by step. You can adjust how much effort it spends thinking before giving an answer, trading some speed for more thought-out solutions. It can also reason through longer documents while using fewer memory resources. That makes it a strong fit for web searching, coding assistance and understanding complex documents.

Of course, running locally still calls for the right hardware, and NVIDIA has proven it has the goods. Not only were the gpt-oss models trained in partnership with NVIDIA, but NVIDIA’s RTX AI PCs have serious hardware advantages for running these models exceptionally well.

Llama.cpp is an open-source framework that lets you run LLMs (large language models) locally with great performance, especially on RTX GPUs thanks to optimizations made in collaboration with NVIDIA. Llama.cpp also provides users with flexibility, including the ability to adjust quantization techniques, layer offloading, and memory layouts.

In Llama.cpp’s testing, NVIDIA’s flagship consumer-grade GPU, the GeForce RTX 5090 with 32GB of VRAM, has shown a serious performance advantage. It was able to run gpt-oss-20b in Llama.cpp at a staggering 282 tok/s (tokens per second). A token is a small chunk of text, and the metric indicates how many of those chunks the model can process or generate each second, so 282 tok/s is blistering. Compared with the Mac M3 Ultra (116 tok/s) and AMD’s 7900 XTX (102 tok/s), the RTX 5090 leads by a wide margin. That comes in part thanks to the GeForce RTX 5090’s built-in Tensor Cores, which are designed to accelerate AI tasks and thereby maximize performance running gpt-oss-20b locally.
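To put those throughput figures in perspective, the short sketch below turns them into relative speedups and into the time needed to generate a response of a given length. The tok/s values are the ones quoted above; the 1,000-token response length and the helper names are assumptions chosen for illustration.

```python
# Throughput figures quoted in the article, in tokens per second.
RESULTS_TOK_PER_S = {
    "GeForce RTX 5090": 282,
    "Mac M3 Ultra": 116,
    "AMD 7900 XTX": 102,
}


def seconds_for(tokens: int, tok_per_s: float) -> float:
    """Time to generate `tokens` tokens at a steady throughput."""
    return tokens / tok_per_s


def speedup(fast_tok_per_s: float, slow_tok_per_s: float) -> float:
    """How many times faster one throughput is than another."""
    return fast_tok_per_s / slow_tok_per_s


RESPONSE_TOKENS = 1000  # assumed response length, for illustration only
baseline = RESULTS_TOK_PER_S["GeForce RTX 5090"]

for name, rate in RESULTS_TOK_PER_S.items():
    print(
        f"{name}: {seconds_for(RESPONSE_TOKENS, rate):.1f} s per "
        f"{RESPONSE_TOKENS} tokens, RTX 5090 is {speedup(baseline, rate):.2f}x"
    )
```

By this arithmetic, the RTX 5090 figure works out to roughly 2.4 times the M3 Ultra result and roughly 2.8 times the 7900 XTX result.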

So how do you tap into all that potential? For AI enthusiasts who just want to use local LLMs with these NVIDIA optimizations, consider the LM Studio application, built on top of Llama.cpp. LM Studio adds support for RAG (retrieval-augmented generation), so you can customize the data the model refers to, and it’s designed to make running and experimenting with LLMs easier, without forcing you to contend with the command line or go through an extensive setup.

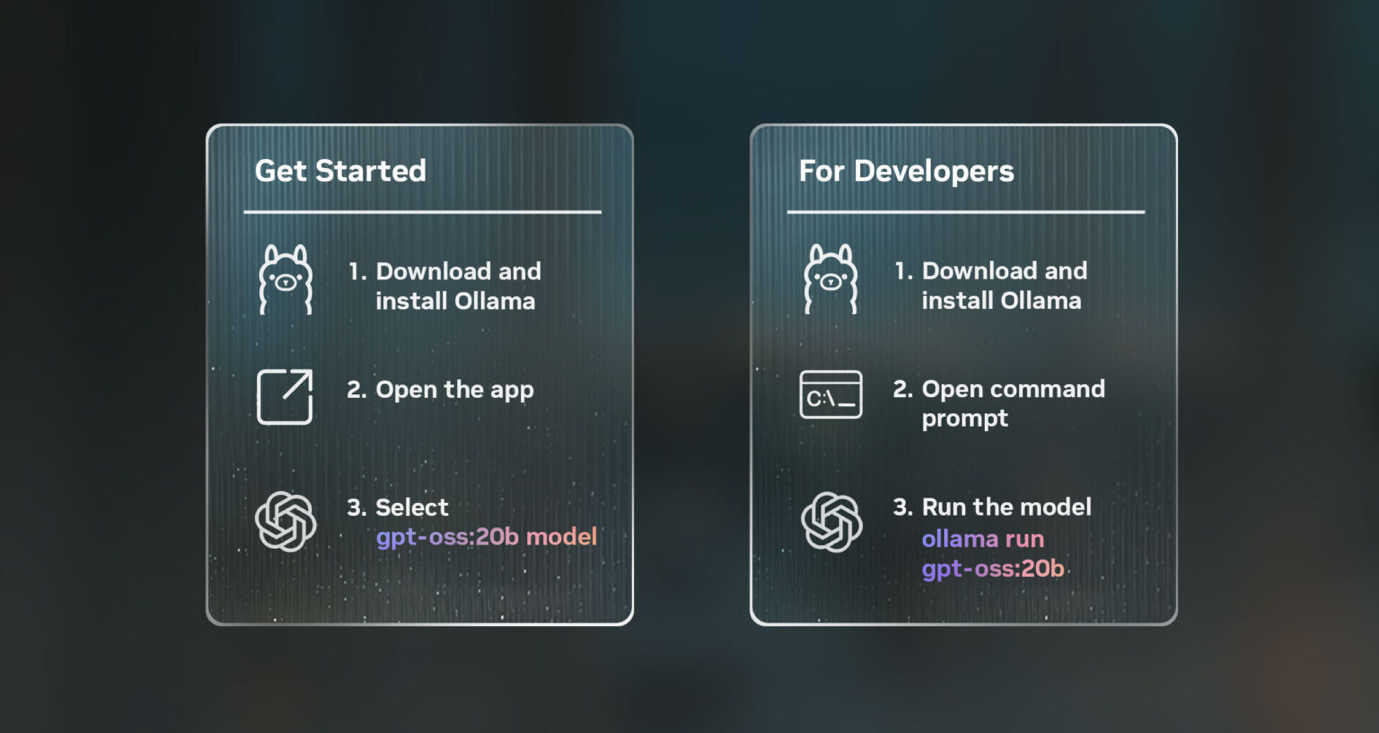

There’s also Ollama, another popular open-source framework you can easily use to run and interact with LLMs. It’s quick to get up and running, and NVIDIA has also worked with Ollama to optimize its performance so you get similar acceleration for gpt-oss models. The Ollama app is a great way to quickly experience the LLMs, but you can also dive into third-party apps like AnythingLLM, which also offer a simple, local interface while adding support for RAG.
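If you would rather script against a local model than use an app, Ollama also exposes a local HTTP API. The following is a minimal sketch, assuming Ollama is running on its default port (11434) and that the model has been pulled under the tag `gpt-oss:20b`. The tag and the helper names here are assumptions for illustration, so check your own installation for the exact model name.

```python
import json
import urllib.request

# Assumptions: default local Ollama endpoint and an assumed model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gpt-oss:20b"


def build_request(prompt: str, model: str = MODEL_TAG,
                  url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


# Example (requires a running Ollama server with the model available):
# print(generate("Summarize what a token is in one sentence."))
```

Nothing in this sketch leaves your machine: the request goes to `localhost`, which is the point of running the model locally.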

With gpt-oss-20b running locally on your system, you can quickly control how much effort it spends on reasoning to get more thorough or quicker responses, though you’ll enjoy low latency in either case.
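How you set the reasoning effort depends on the front end you use. As a hedged sketch, the helper below assumes the convention of requesting a level through a system message of the form `Reasoning: <level>` with the levels low, medium and high. Both the message format and the function name are assumptions for illustration, and your app may expose its own control instead.

```python
# Assumed effort levels; your front end may name or expose these differently.
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(question: str, effort: str = "medium") -> list[dict]:
    """Build a chat message list that requests a given reasoning effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        # Assumption: the effort level is requested via the system message.
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": question},
    ]


quick = build_messages("What is 17 * 23?", effort="low")
thorough = build_messages("Find the bug in this function.", effort="high")
```

A message list like this can be passed to any chat-style local endpoint; lower effort favors faster replies, while higher effort favors more thorough ones.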

With OpenAI’s gpt-oss-20b offering a powerful new tool for local inference that runs with extreme speed and efficiency on NVIDIA hardware, it’s a great time to get started with an RTX AI PC. Of course, gpt-oss-20b is just the latest addition to a whole suite of features on RTX AI PCs.

To find out more about what NVIDIA is doing with AI, you can follow its RTX AI Garage blog for regular posts on new features and tools powered by RTX hardware.
