iPhone 14 — Apple should definitely steal these Google Pixel 6 features

The Pixel 6 offers a number of new features worth adopting for the iPhone 14

The Google Pixel 6 is making a big splash, and for good reason — both the standard model and the Pixel 6 Pro are the best phones Google's ever built. Powered by a Google-designed Tensor chip and boasting new camera hardware as well as impressive photo-editing features, the Pixel 6 is a phone you can't ignore.

In fact, the Pixel 6 Pro is already selling out, which is prompting people to ask where to buy a Pixel 6. And we hope Apple's paying attention.

Even given the Pixel 6's strengths, there's still no knocking the iPhone 13 Pro Max off its perch as the best phone overall. And the rest of the iPhone 13 lineup impresses as well. Still, the Pixel 6 proves that Google is serious about challenging Apple's place at the front of the smartphone pecking order. Apple should be ready to prove that it's just as serious about keeping the iPhone ahead of rival devices.

While Apple doubtless has its own ideas about what to do with future iPhones, the Pixel 6 certainly offers plenty in the inspiration department. These are the Pixel features Apple should adapt for the iPhone 14.

Adaptive refresh rates for all iPhones

iPhone 13 Pro and iPhone 13 Pro Max users get to enjoy the benefits of screens that can adjust their refresh rate all the way up to 120Hz when on-screen activity would benefit from the faster speed, then scale back down to preserve battery. But that's a benefit iPhone 13 and iPhone 13 mini owners can only dream of.

Google didn't make the same distinction with its two new phones. While the Pixel 6 doesn't match the 120Hz adaptive rate that the Pixel 6 Pro is capable of, it can still scale up to 90Hz. Even that modest change makes a world of difference when it comes to smoother scrolling.

The good news is that Apple's likely to address this disparity with the iPhone 14, adding adaptive refresh rates to its less expensive models should the supply chain allow it. Apple had better, because increasingly the standard iPhone is one of the few flagships still stuck at 60Hz.

A better camera bump

Camera bumps are getting more prominent, as phone makers have to squeeze in more optics to provide the photographic features users demand. That's led to some fairly sizable arrays taking up space on the backs of current flagship devices — something the iPhone 13 is certainly guilty of.

Google leaned into a big camera array with the Pixel 6, though. Instead of confining it to one corner, the company stretched it across the full width of the phone's back. It certainly adds to the Pixel 6's thickness, but it gives the phone a distinct look that helps it stand out from other devices. Even better, the Pixel 6 is one of the few phones you can lay flat on its back without any wobble.

Early iPhone 14 rumors suggest that Apple is likely to stick with its current phone design rather than liven things up. There's nothing especially wrong with that strategy, and while we don't see the iPhone 14 copying the look of the Pixel 6, it wouldn't hurt Apple to find a different way to house all the cameras it plans to include on future iPhones.

The return of a fingerprint reader

The Pixel 6's in-display fingerprint sensor can't claim any responsibility for the rave reviews Google's phone has received — in fact, a few of my colleagues argue that it's one of the worst things about the Pixel 6 because of its slow response time and frequent fussiness. But at least the Pixel 6 has a fingerprint sensor. That's not something the iPhone 13 can claim.

Yes, Apple's Face ID remains the preferred method for unlocking devices and for securing mobile payments. But that's only when you're not wearing a mask, and that could be something we wind up doing for a long time, at least when we're indoors. It's incumbent upon Apple to come up with a phone that reflects that reality, whether it puts the fingerprint reader underneath the display or within the iPhone 14's power button.

Better phone features

One area where the Tensor chip powering the Pixel 6 really shines is the device's Phone app. Tapping into the phone's onboard intelligence, you can now see estimated wait times when you dial a toll-free customer service number, and the assistant can transcribe automated phone trees, making it easier to tap the right option when you call customer service.

Apple doesn't offer anything like that with the iPhone, though it probably could, given the amount of effort the company puts into the neural engine of its in-house smartphone chipset. The iOS 15 update introduced a number of improvements to Siri, led by on-device speech processing and better context between requests. We imagine that Apple could find a way to better incorporate its own assistant into the Phone app.

Photo editing tools like Magic Eraser

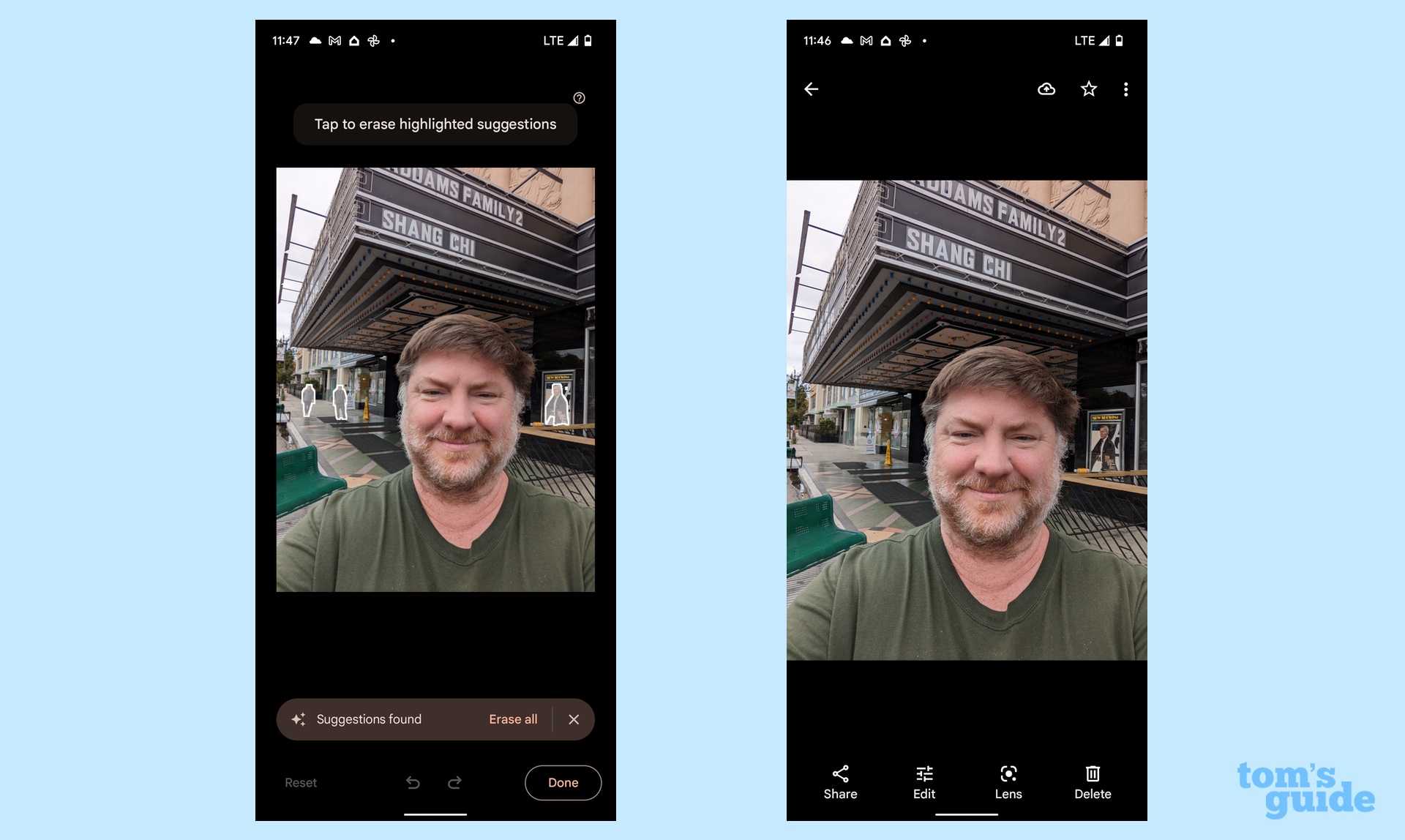

It seems like the fall phone launches of Apple and Google usually result in the two companies announcing dueling camera features we'd like to see the other one adopt. For Apple and the iPhone 13, it was Cinematic mode, which offers all sorts of neat focus tricks when you shoot video with one of Apple's new phones. For the Pixel 6, it's Magic Eraser.

Magic Eraser uses the Pixel 6's smarts to identify people or objects in the background of a photo that may be cluttering things up, giving you the chance to remove them with a tap. It's not the only camera feature that relies on Google's Tensor chip to make your photos look a little better, but it's a lot of fun to use and is the best example of the Pixel 6's improved computational photography.

Magic Eraser is dead simple to use, and the results have been impressive so far. It's the sort of thing we'd love Apple to put its own spin on, especially since Cupertino has made the most of computational photography in its own right.

Better skin tones for everyone in photos

When I tested the Pixel 6, I compared the photos taken by its camera with those from the iPhone 13. Frankly, I thought Apple's phone did a better job with my skin tone, but then again, I'm a middle-aged white guy. Historically, cameras have done a very good job representing people who look like me while being less accurate in how they depict people of color.

The Pixel 6 looks to do something about that via its Real Tone feature. Google worked with color and photography experts to tune its various algorithms so they produce more accurate, more representative pictures of everyone. It's the right thing to do, and I'd like to see Apple make a similar effort with its future camera phones.

Philip Michaels is a Managing Editor at Tom's Guide. He's been covering personal technology since 1999 and was in the building when Steve Jobs showed off the iPhone for the first time. He's been evaluating smartphones since that first iPhone debuted in 2007, and he's been following phone carriers and smartphone plans since 2015. He has strong opinions about Apple, the Oakland Athletics, old movies and proper butchery techniques. Follow him at @PhilipMichaels.