Apple Child Safety photo scanning — how it works and why it's controversial

Why Apple is going to start monitoring your photos

The newly announced Apple child safety toolset, which will scan iOS devices for images of child abuse, has quickly become the subject of intense debate. While nobody can argue against protecting children from potential online abuse and harm, Apple's method raises questions about privacy and about whether the company could use this technology for other purposes in the future.

With the update to the next generation of Apple OSes, U.S. users will be subject to the new scanning system, which comes as part of a wider set of tools designed to tackle child sexual abuse material (CSAM). If you're curious how the system functions, why some people are criticizing it and what it means for your iPhone, we've explained what's going on below.


Apple Child Safety photo scanning: how does it work?

As Apple writes in its introductory blog post, it's introducing several measures in iOS 15, iPadOS 15 and macOS Monterey "to protect children from predators who use communication tools to recruit and exploit them". The new versions of iOS, iPadOS and macOS are expected to leave beta this fall.

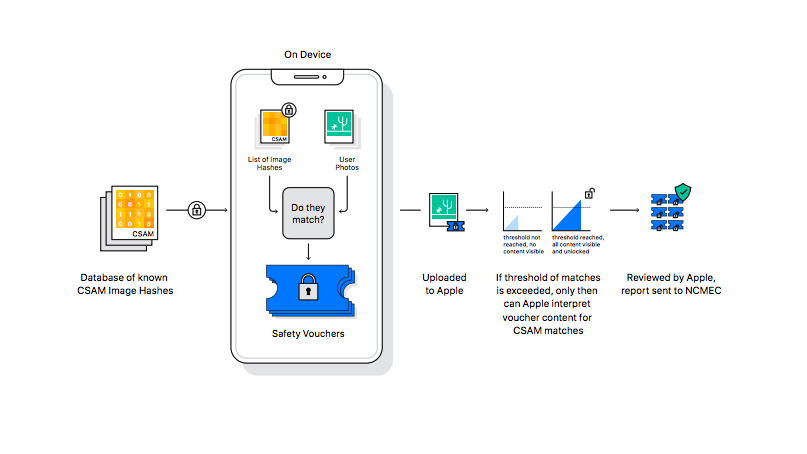

Apple's main new tool is to check image hashes, a common method of examining images for CSAM. All digital images can be expressed as a "hash," a unique series of numbers that can be used to find identical images. Using a set of CSAM hashes kept by the National Center for Missing and Exploited Children (NCMEC), Apple can compare the hashes and see if any images on the device match.
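To make the idea of hash matching concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not Apple's implementation: SHA-256 is used as a stand-in hash (it only matches byte-for-byte identical files), and the names `image_hash`, `KNOWN_HASHES` and `is_match` are hypothetical.

```python
import hashlib

def image_hash(image_bytes: bytes) -> str:
    """Reduce an image's raw bytes to a fixed-length fingerprint.

    Assumption: SHA-256 is a stand-in for illustration only.
    """
    return hashlib.sha256(image_bytes).hexdigest()

# Hypothetical database of known hashes. In the article's description this
# role is played by the hash set kept by NCMEC; here it is a placeholder.
KNOWN_HASHES = {image_hash(b"example-known-image")}

def is_match(image_bytes: bytes, known_hashes=KNOWN_HASHES) -> bool:
    """Return True if the image's hash appears in the known set."""
    return image_hash(image_bytes) in known_hashes
```

The point of the design is that only fingerprints are compared: the check never needs to interpret what a photo actually shows.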

This process all takes place on the device, with none of the user's local data being sent elsewhere. Apple's system can also monitor your iCloud photo library for potentially offending material, but does this by checking images prior to upload, not by scanning the online files.

If Apple's system finds an image that matches a CSAM hash, it will flag the photo. An account that accumulates multiple flags will then have the potential matches manually reviewed. If reviewers confirm that the images are genuine CSAM, Apple will close the account and notify the NCMEC. This may then lead to a response by law enforcement or legal action.

Users are able to appeal if they feel there's been an error. However, Apple is confident that the system won't give false positives. It cites a "less than a one in one trillion chance per year" of an account being incorrectly flagged.


Since its initial announcement, Apple has further clarified its photo-scanning policy. Apple now says that its scanner will only hunt for CSAM images flagged by clearinghouses in multiple countries. The company also added that it would take 30 matched CSAM images before the system prompts Apple for a human review.
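The threshold rule described above can be sketched as a simple counter. This is a hedged illustration only: the `Account` class and its methods are hypothetical names, and treating the threshold as "30 or more" is an assumption based on the figure reported here.

```python
from dataclasses import dataclass, field

# Figure reported in the article; the exact comparison (>=) is an assumption.
REVIEW_THRESHOLD = 30

@dataclass
class Account:
    """Hypothetical record of photos flagged as potential matches."""
    flagged: list = field(default_factory=list)

    def flag(self, photo_id: str) -> None:
        """Record one photo whose hash matched the known set."""
        self.flagged.append(photo_id)

    def needs_human_review(self, threshold: int = REVIEW_THRESHOLD) -> bool:
        """Only escalate once enough matches have accumulated."""
        return len(self.flagged) >= threshold
```

A single match does nothing on its own in this sketch; review is requested only once the count reaches the threshold.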

Apple Child Safety photo scanning: what else is involved?

As well as the photo scanning system, Apple is introducing additional measures that can be enabled for child accounts in a user's family of devices. In the Messages app, any images the device believes could be harmful, whether they're being sent or received, will be blurred out, and tapping one will display a pop-up warning.

The warning states that the image or video may be sensitive, and could have been sent to harm the child, or purely by accident. It then gives the option to back out or to view the image. If someone opts to view the image, a subsequent screen explains that Apple will send a notification to the child's parents. Only after selecting to view the image a second time will the user be able to see what was sent.

Siri is also being equipped with special answers to CSAM-related queries. It will either direct users to report suspected abuse or to seek help depending on what is asked.

Apple Child Safety photo scanning: how will it affect my Apple devices?

When upgrading to iOS 15, iPadOS 15 or macOS Monterey, you will notice no difference on your device or in your iCloud library when Child Safety is rolled out, unless you actually have CSAM or related data on them. The additional measures for child accounts will only be activated on accounts marked as such.

Also, there will be no changes for Apple device users outside of the U.S. However, it seems very likely that Apple will roll out this system in other countries in future.

Apple Child Safety photo scanning: why are people criticizing it?

You may have seen some heated criticism of Apple's new measures online. It's important to keep in mind that none of the individuals making these arguments are downplaying the importance of combating child abuse. Instead, their main concerns are how this effort is balanced against user privacy, and how this system could be altered in future for less noble ends.

There's also some anger at an apparent U-turn by Apple on user privacy. While other companies have been scanning the content on their platforms for years, Apple has notably resisted building so-called "back doors" into its devices, famously refusing in 2016 to help the FBI unlock a terrorism suspect's iPhone. It also took a big step when it introduced App Tracking Transparency earlier this year, which lets users see what data apps request, and block them from accessing it.

Apple says that because the analysis and hashes are kept entirely on a user's device, the device remains secure even when checked for CSAM. However, as online privacy nonprofit Electronic Frontier Foundation argued in a recent post, "a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor."

While the technology is exclusively focused on detecting CSAM, the ability to compare image hashes on a device with an external database could theoretically be adapted to check for other material. One example that's frequently brought up by critics would be governments targeting their opponents by creating a database of critical material and then legally forcing Apple to monitor devices for matches. On a smaller scale, it's possible that entirely innocent images could be "injected" with code from offending ones, allowing malicious groups to entrap or smear targeted people without them realizing before it's too late.

Matthew Green, associate professor of computer science at the Johns Hopkins Information Security Institute, wrote in a Twitter thread that checking photos on a user's device is much better than doing so on a server. However, he still dislikes Apple's system because it sets a precedent that scanning users' phones without consent is acceptable, which may lead to abuse by institutions that want to surveil iPhone users without due cause.

Some experts advocate instead for more robust reporting tools to keep user privacy intact while still ensuring details of CSAM are passed to the relevant authorities. Will Cathcart, head of messaging service WhatsApp, described Apple's plan as an overreach. He said WhatsApp, which has also been a strong advocate of end-to-end encryption, would not adopt a similar system, but would instead rely on making user reporting as straightforward as possible.

In a document responding to frequently asked questions on the matter, Apple says it's impossible to use the existing system to detect hashes for anything beyond what the NCMEC has on its database due to the way it was designed. It also says it would refuse any government requests to detect anything else. As for the injection question, Apple says that this isn't a risk since images are reviewed by humans before any potential action is taken.

It's too early to draw meaningful conclusions, though, particularly since Apple is only enabling the feature in the United States to start. Experts will continue to examine the exact implementation of these tools, so this debate will no doubt run for some time, and will take on new regional elements as Apple introduces these tools to more markets.

Richard is based in London, covering news, reviews and how-tos for phones, tablets, gaming, and whatever else people need advice on. Following on from his MA in Magazine Journalism at the University of Sheffield, he's also written for WIRED U.K., The Register and Creative Bloq. When not at work, he's likely thinking about how to brew the perfect cup of specialty coffee.