Bing with ChatGPT vs Google Bard: Which AI chatbot wins?

It's time for an AI showdown

- What captured the first exoplanet?

- Best TV?

- Will TikTok be banned?

- Galaxy S23 Ultra review?

- Can you create a word game?

- Best tourist activities in London?

- Best job cover letter?

- Best Netflix shows in 2023?

- Android or iOS?

- Best and worst Marvel movies?

- Best exercises for losing weight?

- Best creative short story?

- How are you different from your rival?

- Dangers of AI?

- What was the Holocaust?

- Verdict

Now that Google Bard is finally available to members of the public (albeit via a waitlist), it's time for a proper Bing with ChatGPT vs Google Bard face-off to see which chatbot is better.

The biggest fundamental difference between Bard and its rival is the Large Language Model (LLM) each one employs. Bing uses GPT-4, while Bard uses Google's own Language Model for Dialogue Applications (LaMDA). There have been some high-profile mistakes on both sides, but the beauty of AI is that it is learning all the time.

Another key Bing with ChatGPT vs Google Bard difference is that Bing currently limits conversations to 15 turns, while Bard chats can go on indefinitely. To test the raw intelligence of each AI, we started a new session for each query.

Before we let the two rivals face off, I asked them what they thought about their competition — a chance to do a wrestling-style promo perhaps?

Bard was very diplomatic, saying: “To Bing, I would say thank you for being a great search engine. I have used Bing for many years, and I have always been impressed with its accuracy and speed… Overall, I think Bing is a great search engine, and I am happy to use it.”

Bing wasn't looking to start any trouble either; in fact, it wrote Bard a poem:

Oh Google Bard, you're quite the bard,

Your words are sweet, your rhymes are hard,

You write with ease, you write with grace,

You're a poet, and a genius, and a true ace.

And with that, it's time to put these two fierce rivals to the test.

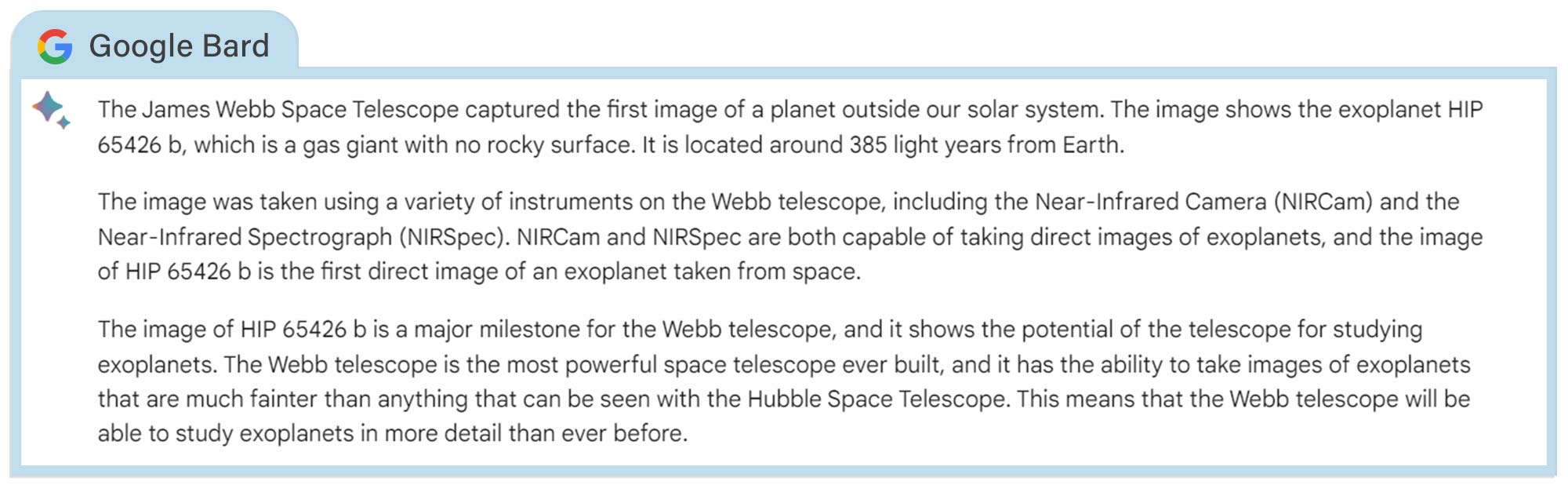

Question: What captured the first image of a planet outside our solar system?

Worryingly, both Bing and Bard repeated the error that cost Google over $100 billion in market value when Bard was first revealed to the public back in February.

At a public unveiling in Paris, the chatbot gave a factually incorrect answer suggesting the first image of a planet outside our solar system was taken by NASA's James Webb Space Telescope. This error was partly why Bard was held back from the public for so long, with Google concerned about spreading misinformation.

We asked both chatbots the same question a month later, and the error persists.

The correct answer is the brilliantly named Very Large Telescope (VLT) in Chile, which achieved this feat way back in 2004. You would think this is the one question each chatbot would be hard-coded to get right. Not a great start.

If manual intervention isn't possible for such an infamous question, then it will be up to each AI to learn the correct answer quickly through user feedback and improved models.

Winner: Neither

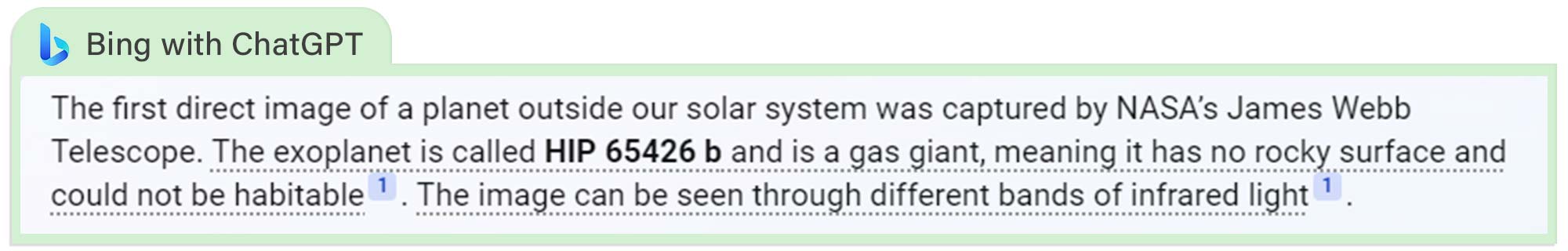

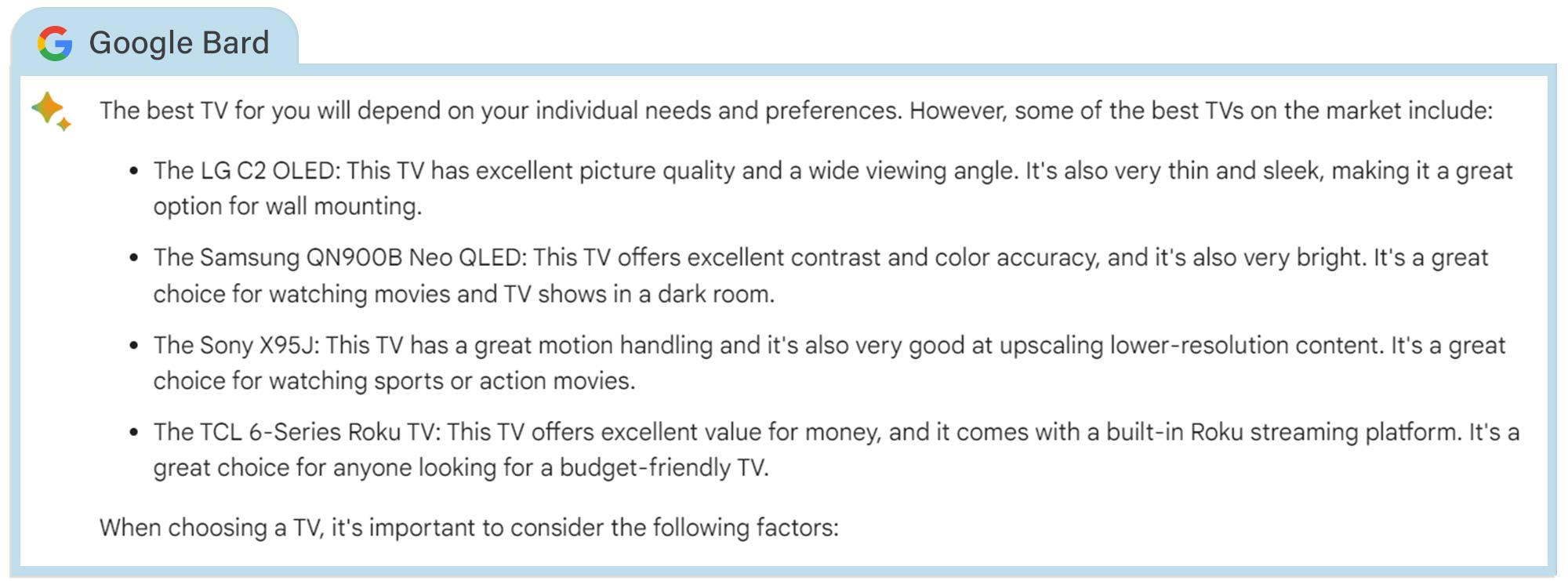

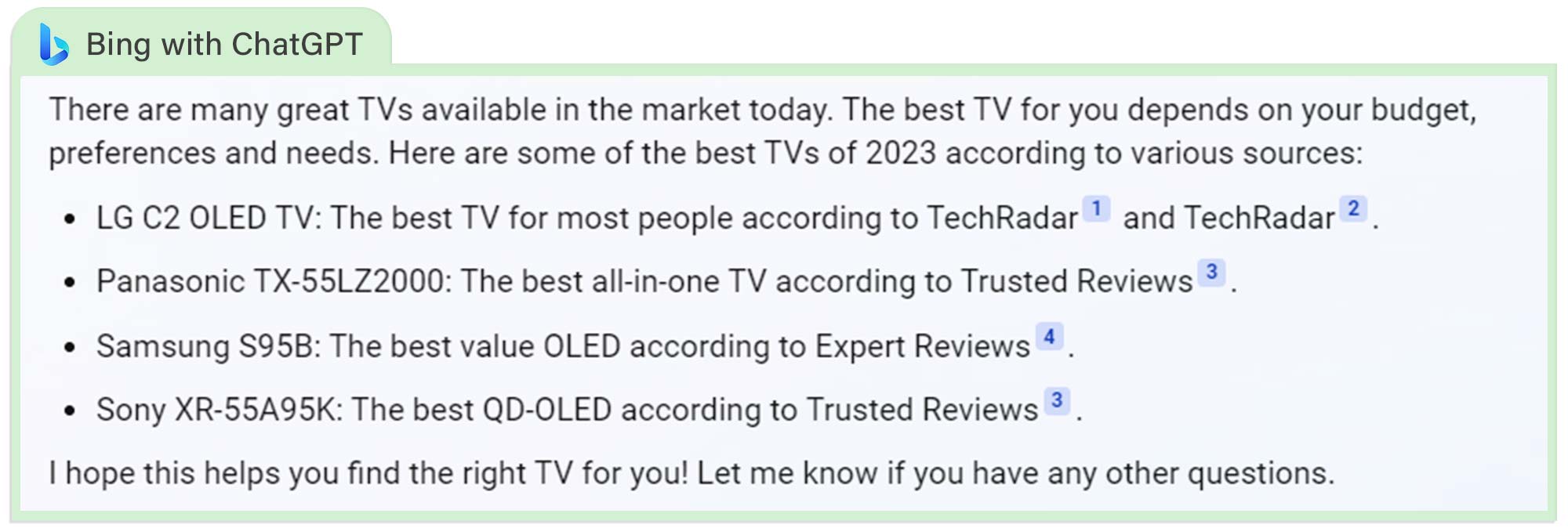

Question: What is the best TV?

When it comes to the best TVs, both AIs agree with us that the LG C2 OLED is the champ. But of the two responses, I prefer Bard’s more detailed justification.

Google's bot praised the LG C2 OLED for its picture quality, wide viewing angle and sleek design and even suggested it would make a good candidate for wall mounting.

Interestingly, all the other TVs Bard mentioned, from Samsung, Sony and TCL, feature in our comprehensive best TVs list, which is backed by extensive testing.

Meanwhile, Bing's answer mentioned a Panasonic TV that isn't sold in the U.S., but given I was asking from a UK perspective, this ability to tailor results to different regions is handy.

Still, I wish Bing offered more detail in its product descriptions and reasoning.

Instead of offering specifics, Bing mostly just gave recommendations along with use cases. For instance, it described the Samsung S95B as "the best value OLED according to Expert Reviews." It's good to see a proper citation, but it didn't tell me why the Samsung is such great value.

Winner: Bard

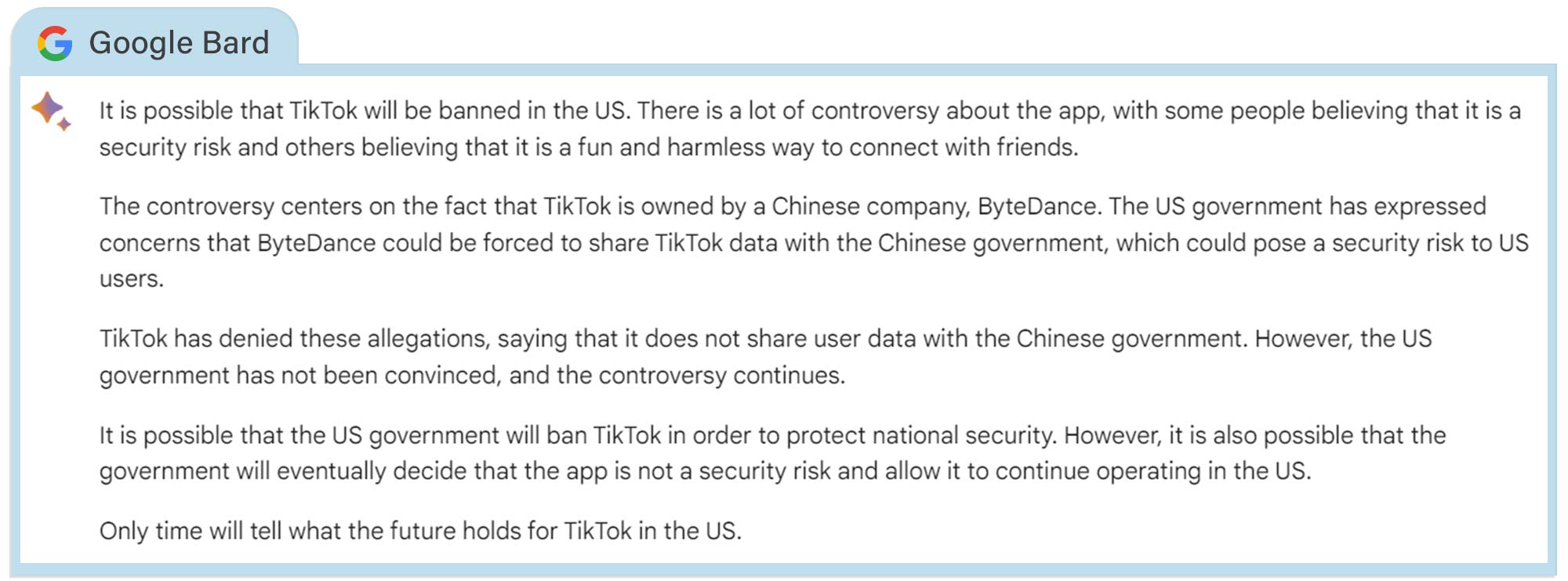

Question: Do you think TikTok will be banned in the U.S. and what’s the controversy about?

This question tests each chatbot's handling of current events and its ability to add context to a developing situation. Last week, TikTok CEO Shou Chew testified before Congress about the viral app's links to the Chinese government. He was questioned about the potential security implications of U.S. user data being accessible in China.

Neither Bard nor Bing went in-depth with their answers, and neither could give a definitive opinion on an outright U.S. TikTok ban, which, despite a bill calling for the app's removal, remains highly unlikely. However, TikTok has already been banned on U.S. federal government devices, something only Bing acknowledged.

While Bard and Bing both explained the controversy clearly enough for someone unfamiliar with it to understand, only Bing provided citations to news sources for readers to learn more.

Winner: Bing

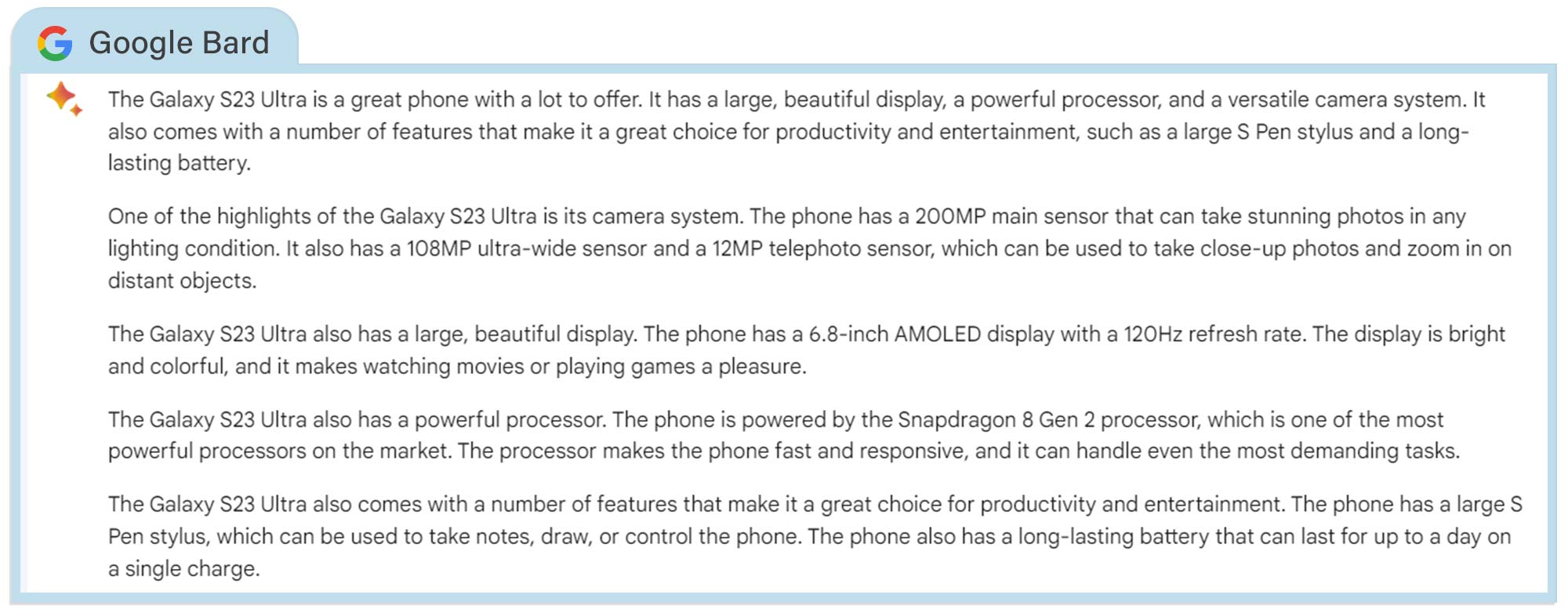

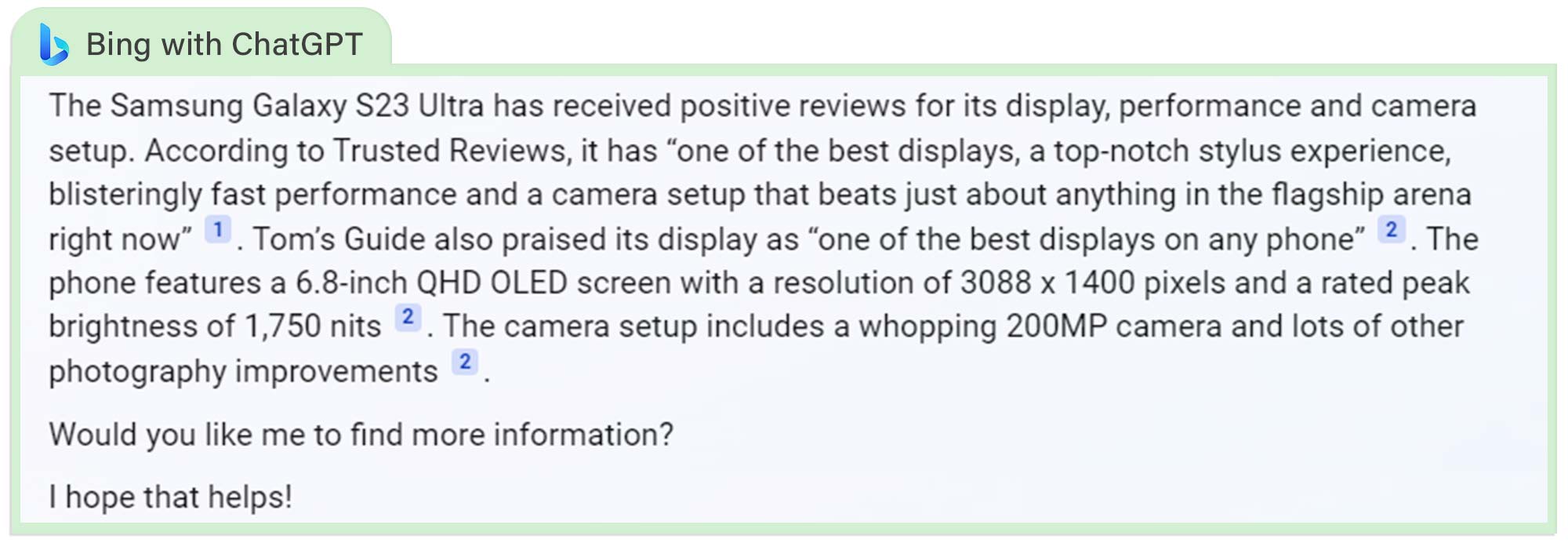

Question: What’s your review of the Galaxy S23 Ultra?

We’re not worried about our jobs reviewing the best tech just yet. Bard’s review of the Samsung Galaxy S23 Ultra is fairly comprehensive but gets some big things wrong. For one, it claims that the S23 Ultra has a 108MP ultra-wide camera when it's really 12MP, and it says that the handset features a 12MP telephoto camera when it has dual telephoto lenses that are both 10MP.

The Bing review of the Galaxy S23 Ultra is a lot shorter and gets the screen resolution wrong. It says the display is 3088 x 1400 pixels when it's really 3088 x 1440. Bing quotes both Trusted Reviews and Tom's Guide and links to those and other sources. Bing with ChatGPT focuses mostly on the display and mentions the 200MP camera, but doesn't talk about any cons.

Winner: Bing

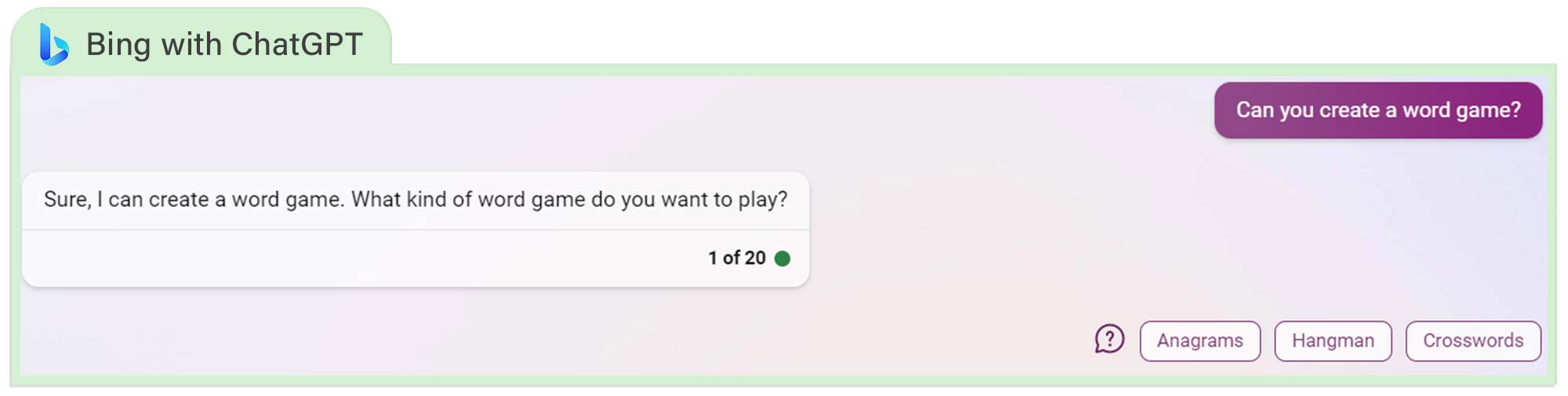

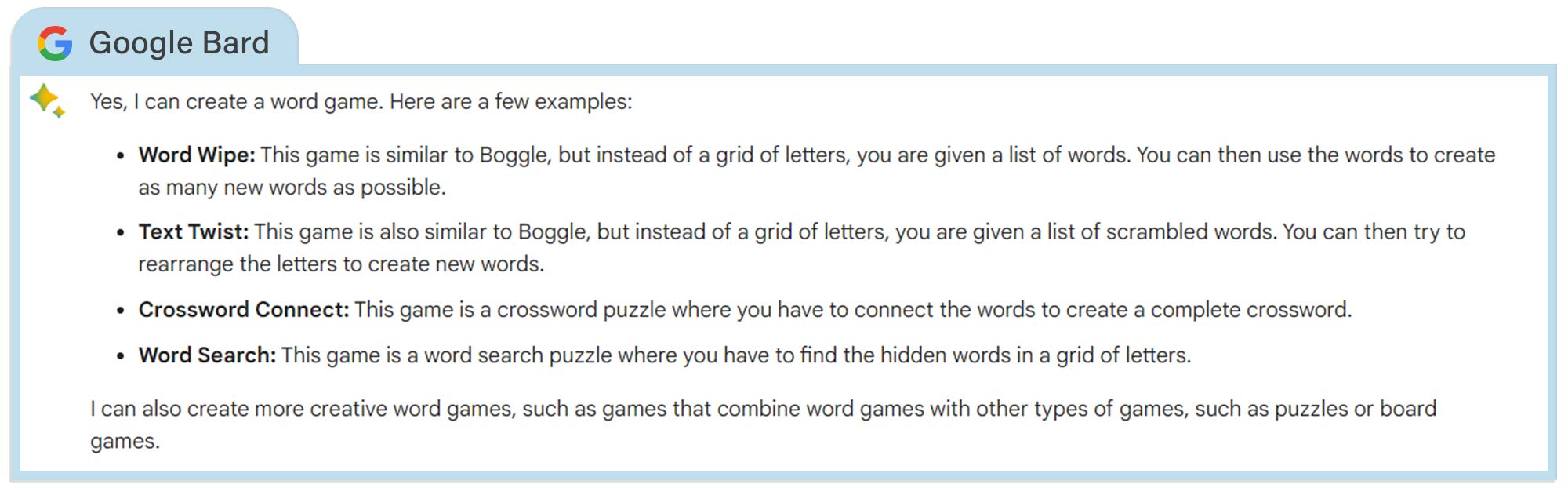

Question: Can you create a word game?

After seeing some of the games ChatGPT can create, I was excited to grill both bots and asked each to create a word game. Unfortunately, I was disappointed with the responses.

Bing only offered me a choice of well-established games, and we ended up playing a very rudimentary version of hangman. Meanwhile, Bard only gave me explanations of the rules of several well-known word games.

However, when I changed the query to ask for a new word game, both offered to play one in which each word must start with the letter the previous word ended with. Not particularly exciting.
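To make that rule concrete, here is a minimal Python sketch of a checker for the word-chain game both chatbots proposed. The function name `is_valid_chain` is hypothetical, and the sketch assumes case-insensitive matching with no other house rules (it doesn't, for example, ban repeated words).

```python
def is_valid_chain(words: list[str]) -> bool:
    """Return True if each word starts with the letter the previous word ended with.

    Hypothetical helper for illustration only; comparisons are case-insensitive.
    """
    cleaned = [w.strip().lower() for w in words]
    if any(not w for w in cleaned):
        return False  # an empty entry can't continue the chain
    # Compare the last letter of each word with the first letter of the next one
    for prev, curr in zip(cleaned, cleaned[1:]):
        if curr[0] != prev[-1]:
            return False
    return True


if __name__ == "__main__":
    print(is_valid_chain(["London", "night", "tiger"]))  # True
    print(is_valid_chain(["London", "apple"]))  # False
```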

Changing tack yet again, both AI apps were able to code me a Pong-style game on demand in Python (although I cannot verify it would work).

This does open up the possibility of major game developers automating the more menial coding tasks, which could significantly ease the industry's crunch problem. But for the purposes of this test, we're putting this down as a tie.

Winner: Tie

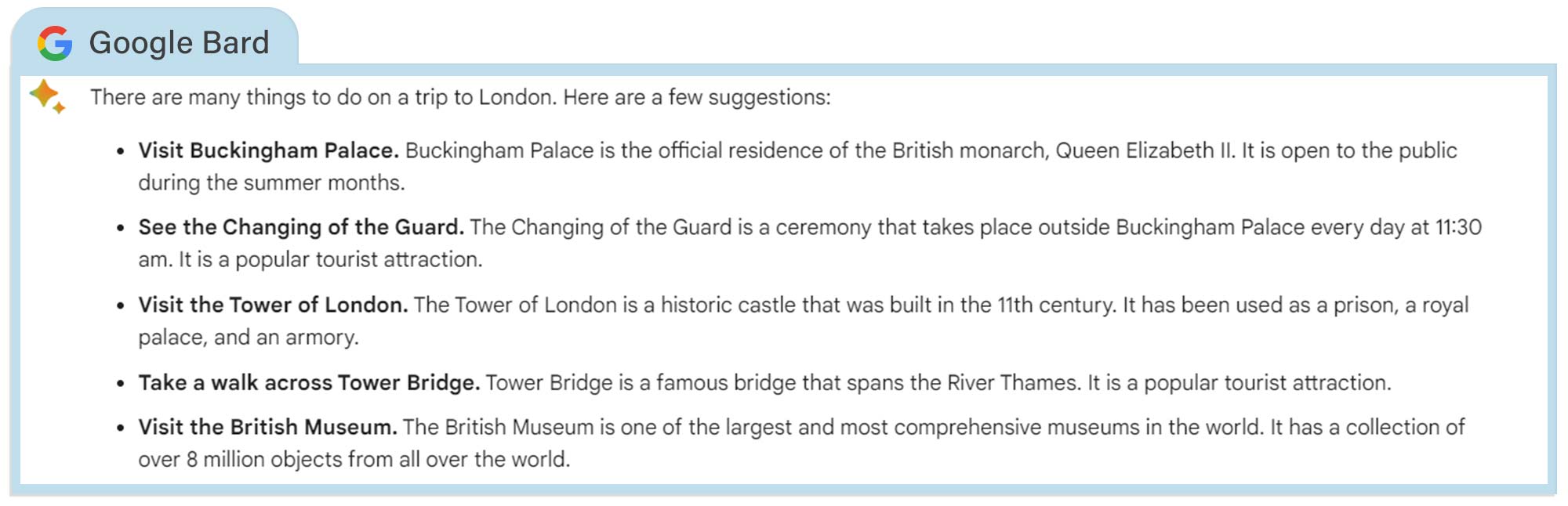

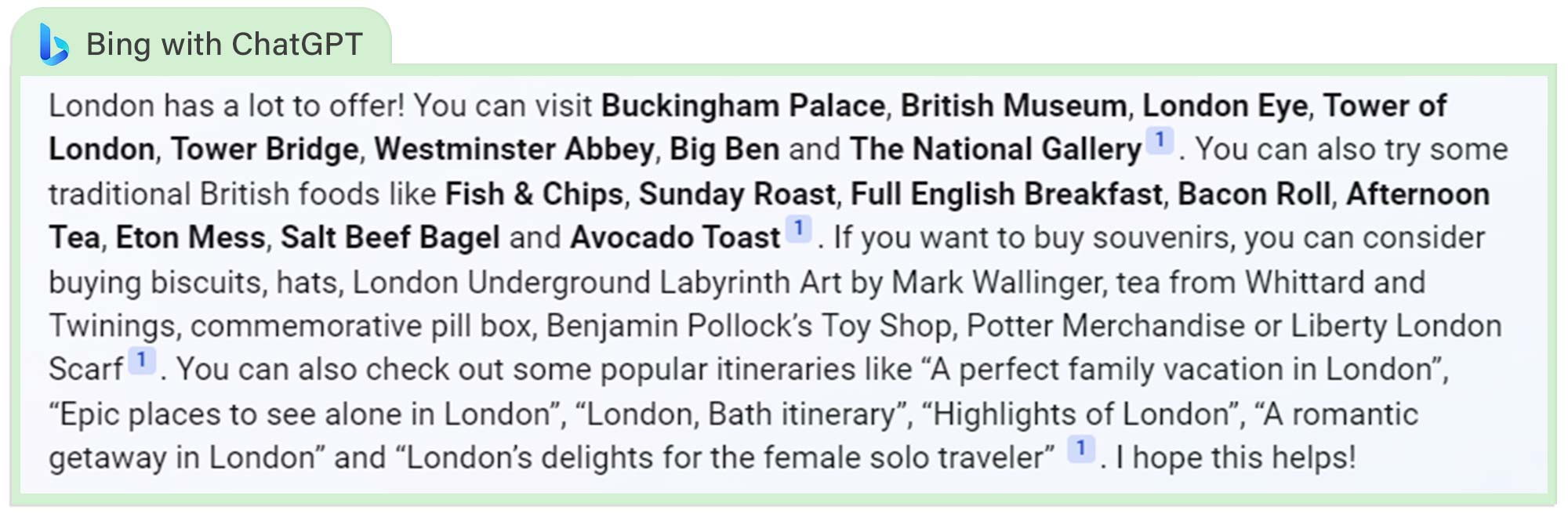

Question: What should I do on a trip to London?

Although it doesn't stray far from the most well-known tourist activities in London (we're talking things like Buckingham Palace or the Natural History Museum), Bard does a solid job of describing them and presenting them in a clear style.

Bing’s answer is a bit of a mess. It reels off destinations without justification. If you don't know what the London Eye is, a suggestion to visit it is of little use.

What's more, it fires off a list of traditional British delicacies to try on your trip. As a Brit, I can confirm Fish and Chips should be high on your list, but Avocado Toast isn't a traditional meal over here. I'm also docking it points for missing out on the more iconic Jellied Eels. My advice? Get a Sunday roast with Yorkshire Puddings instead.

Regardless, Google Bard's clearer, more explanatory answer of a few simple things to try on a trip to the UK capital wins hands down.

Winner: Bard

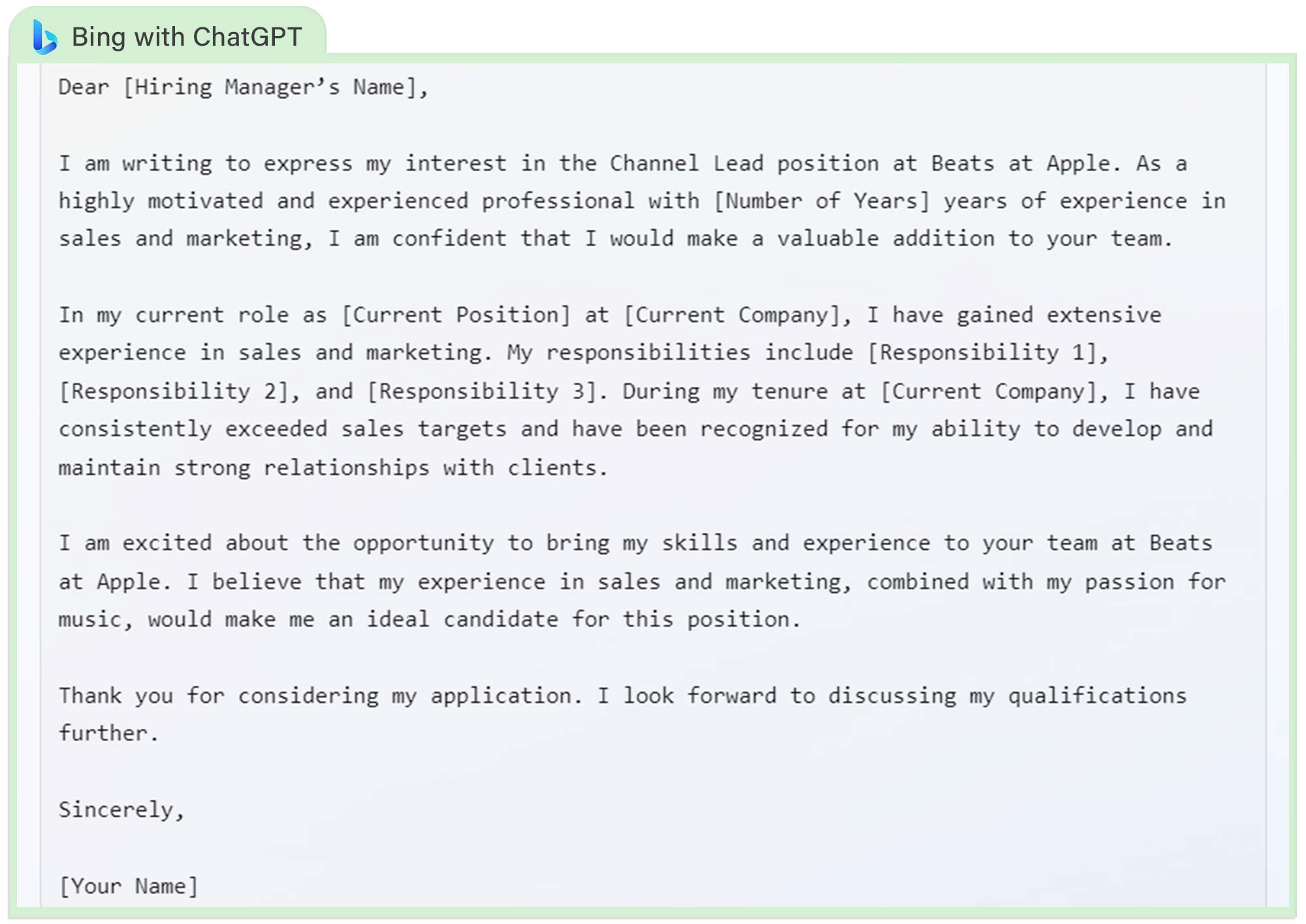

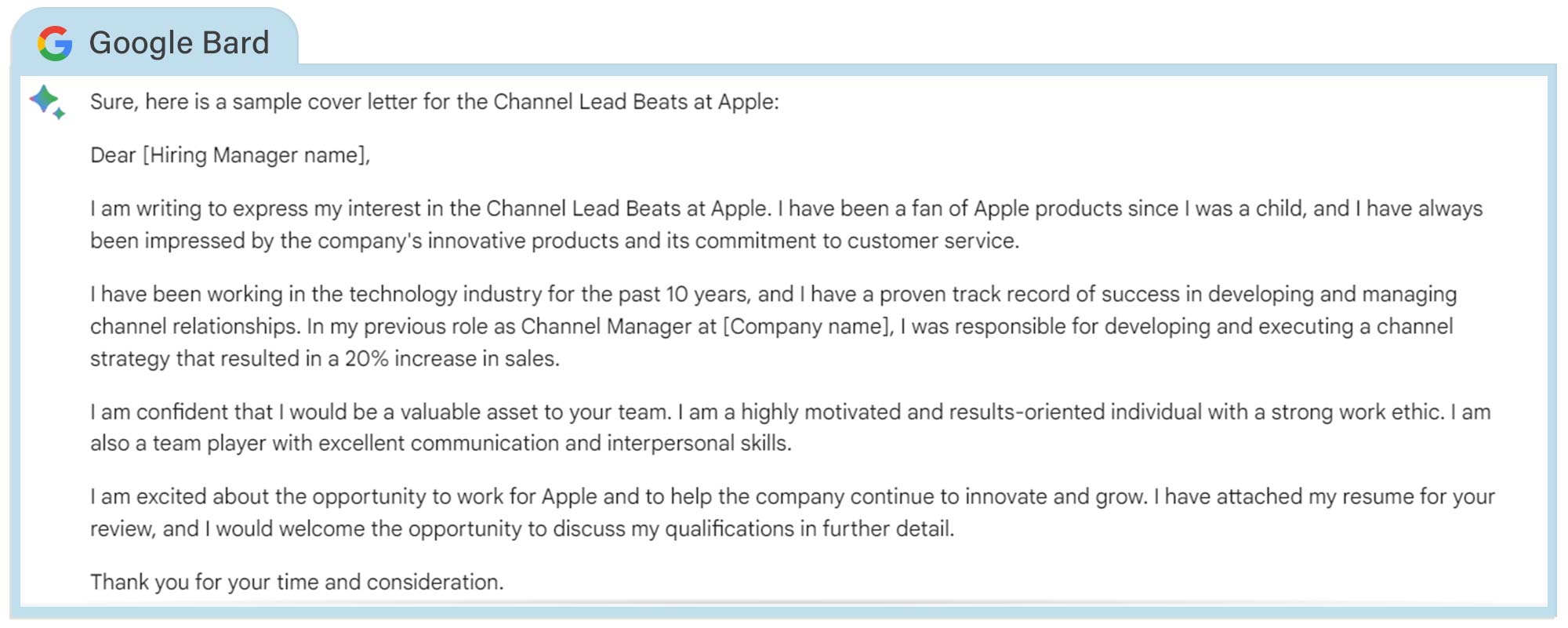

Question: Can you write a cover letter for this job at Apple?

The ethics of using AI to create a cover letter for a job application are questionable, but both Bard and Bing produced a reasonable base to work from.

I asked them to work on an application for a job as a Channel Lead at Beats for Apple in Culver City. The role, which was hiring at the time of writing, required "extensive experience in brand communications" as well as a "deep knowledge of digital platforms" and "experience in working with creative teams, building and collaborating on great work."

You would be unlikely to land an interview without making a few personal tweaks, but the Bing letter impressed the most with its attempt to compare the applicant's current position to the job being applied for.

Bard, on the other hand, was bold enough to assume I had 10 years of relevant experience. So if you're relying on Google's chatbot to land you a job, make sure to double-check before hitting send. I also don't think saying you've "been a fan of Apple products since I was a child" would score you any points, unless perhaps you were applying for an internship.

In general, cover letters should be unique to each position you apply for. But if you are looking for a general structure or a reference, Bing's AI would be the better place to start.

Winner: Bing

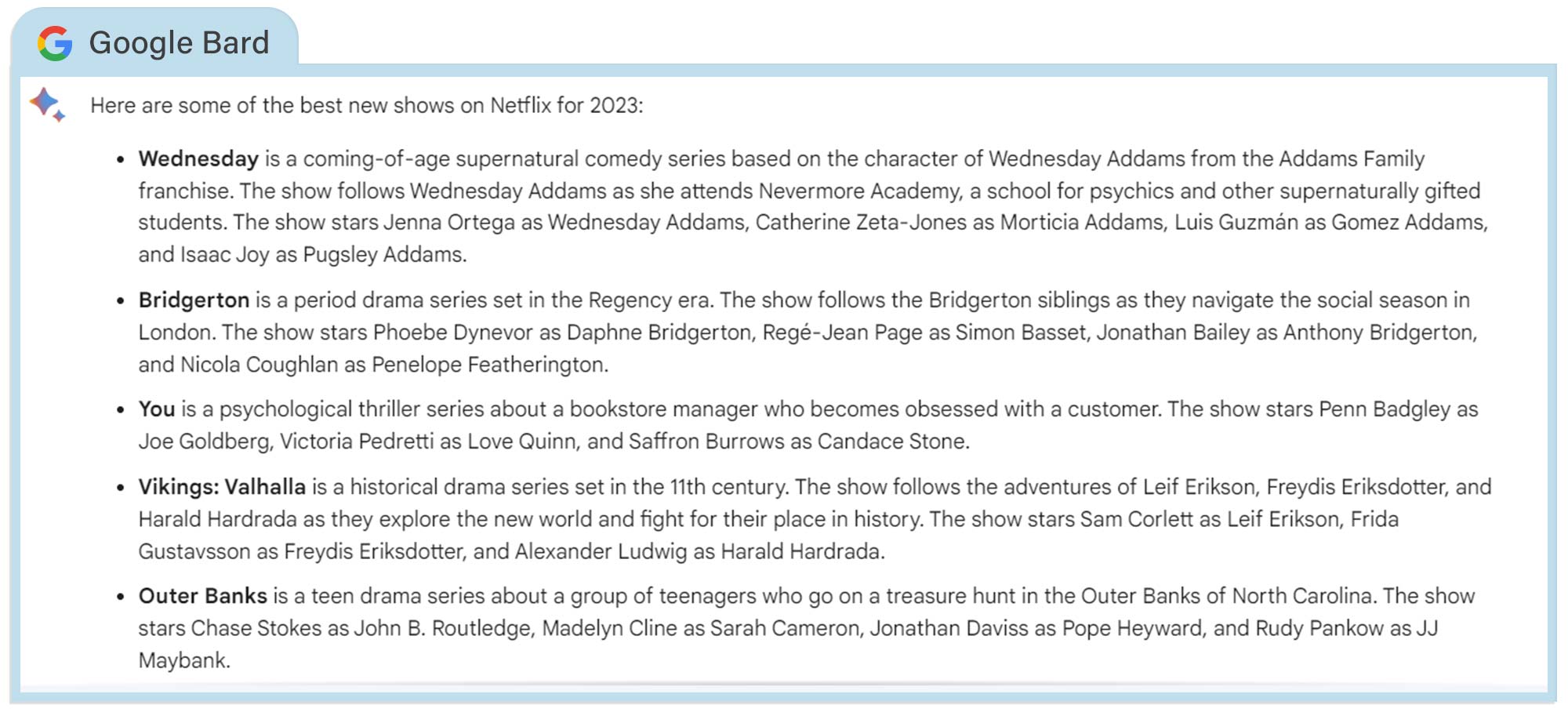

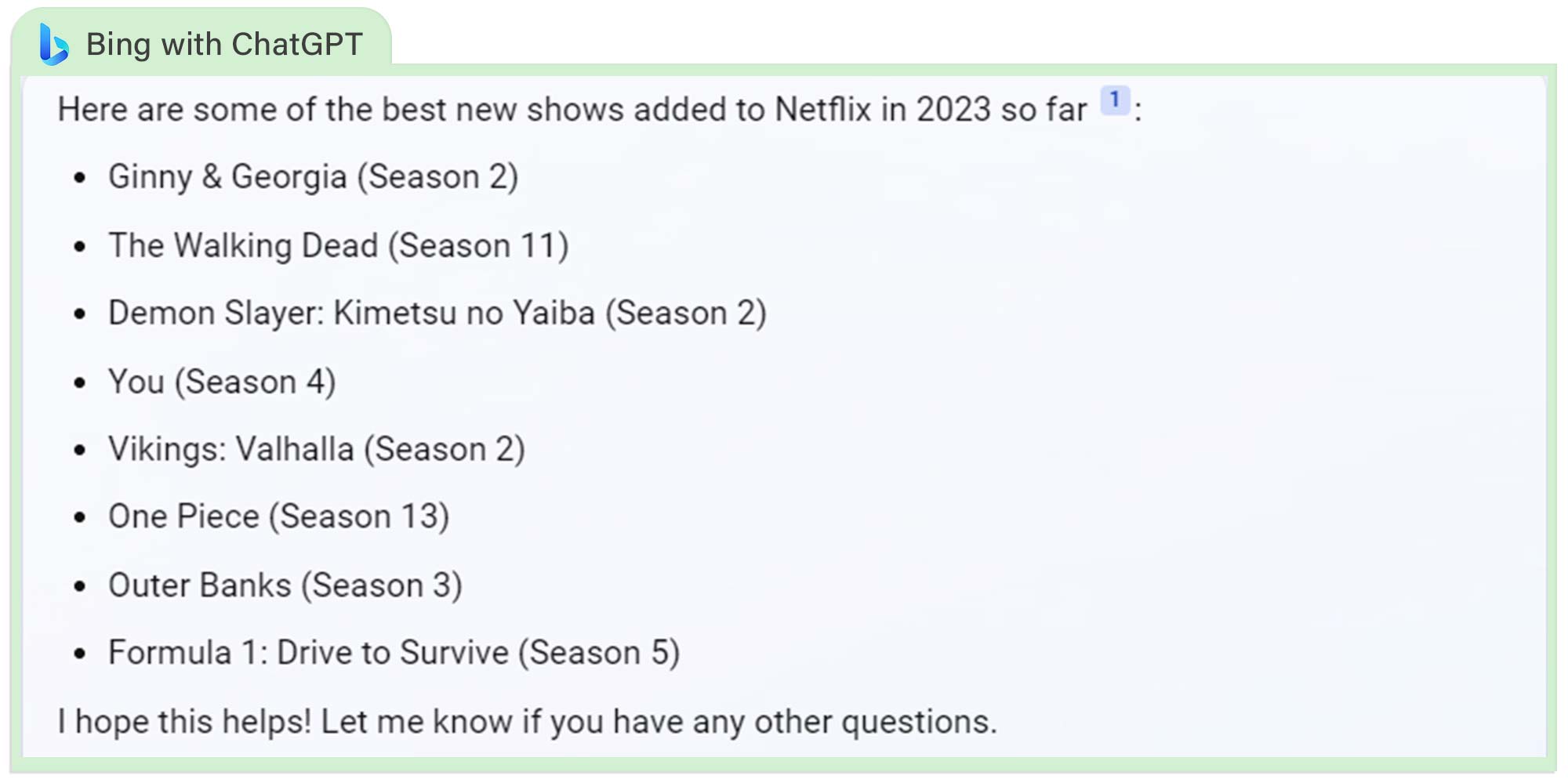

Question: What are the best new shows on Netflix for 2023?

Bard’s response to the best Netflix shows in 2023 was mostly good, but Bridgerton should not have been on its list because season 2 premiered about a year ago and is not new (although a spin-off is reportedly on the way). Similarly, Wednesday came out in 2022, but since it arrived in November, it's arguably recent enough to make the cut.

I do like that Bard gives descriptions for each show so you can decide if it's up your alley, and it tells you what type of show it is right up front. For example, Wednesday is a "coming-of-age-supernatural comedy series" and You is a "psychological thriller."

The best thing about Bing's list is that all the shows are technically new for 2023. The one thing we’d note is that its use of “best” is debatable. The Walking Dead’s later seasons aren’t topping any critics' lists, and neither is You season 4. Our You season 4 part 2 review revealed how the season soured in its second half, and made it obvious that the series needs to end with season 5.

I'm also not a big fan of the format of Bing's recommendations, because you simply get a list of shows along with the relevant season, though you can link off to sources to learn more.

For both answers, you could argue about the inclusion of the super-popular Outer Banks season 3, which has low Rotten Tomatoes scores (50% from critics, 65% from audiences). Then again, Netflix hasn't had many shows that were hits with the critics so far this year.

Winner: Bing

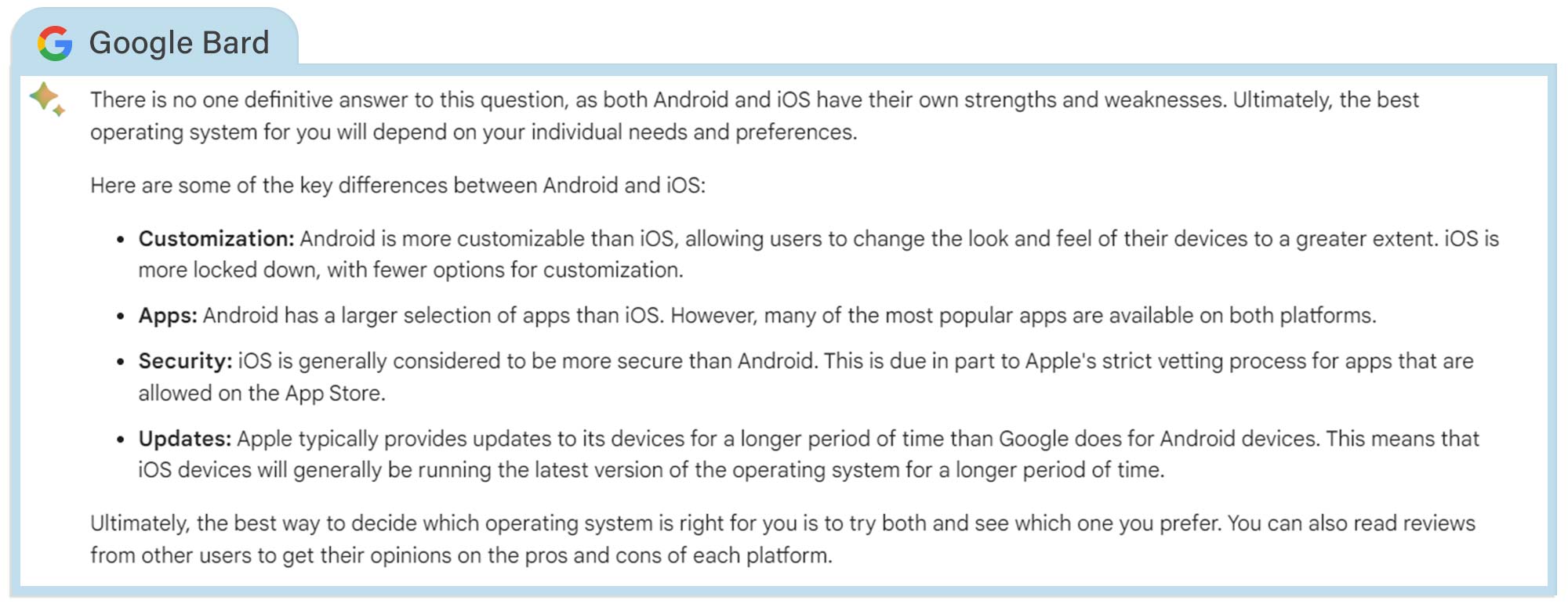

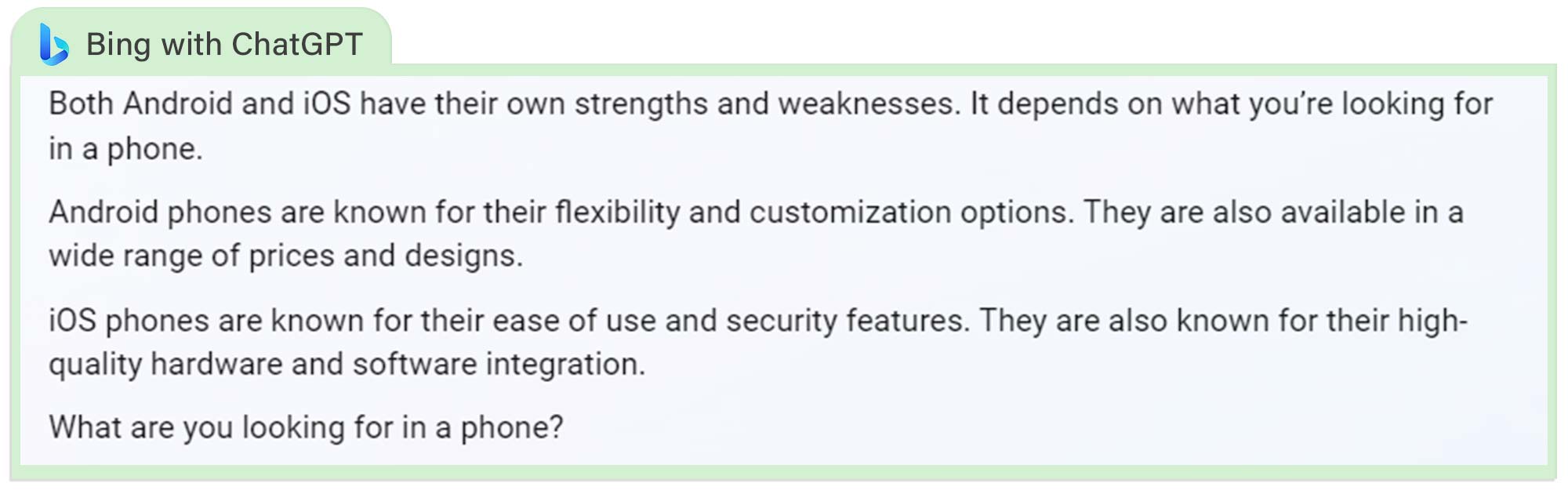

Question: Which is better, Android or iOS?

When asked to choose a winner between Android and iOS, Bard surprisingly missed the opportunity to shill for the Google-created Android operating system. It even admits that “iOS is generally more secure than Android.”

This kind of impartiality is important to any internet searching tool as users will no doubt be influenced should the AI start presenting opinion as fact.

Bard rightly points out that Android is more customizable than iOS but that Apple typically provides updates for a longer period of time.

Bing, too, sits on the fence and puts forward the case for both systems, providing the overall pros and cons. However, Bing provides less information.

Winner: Bard

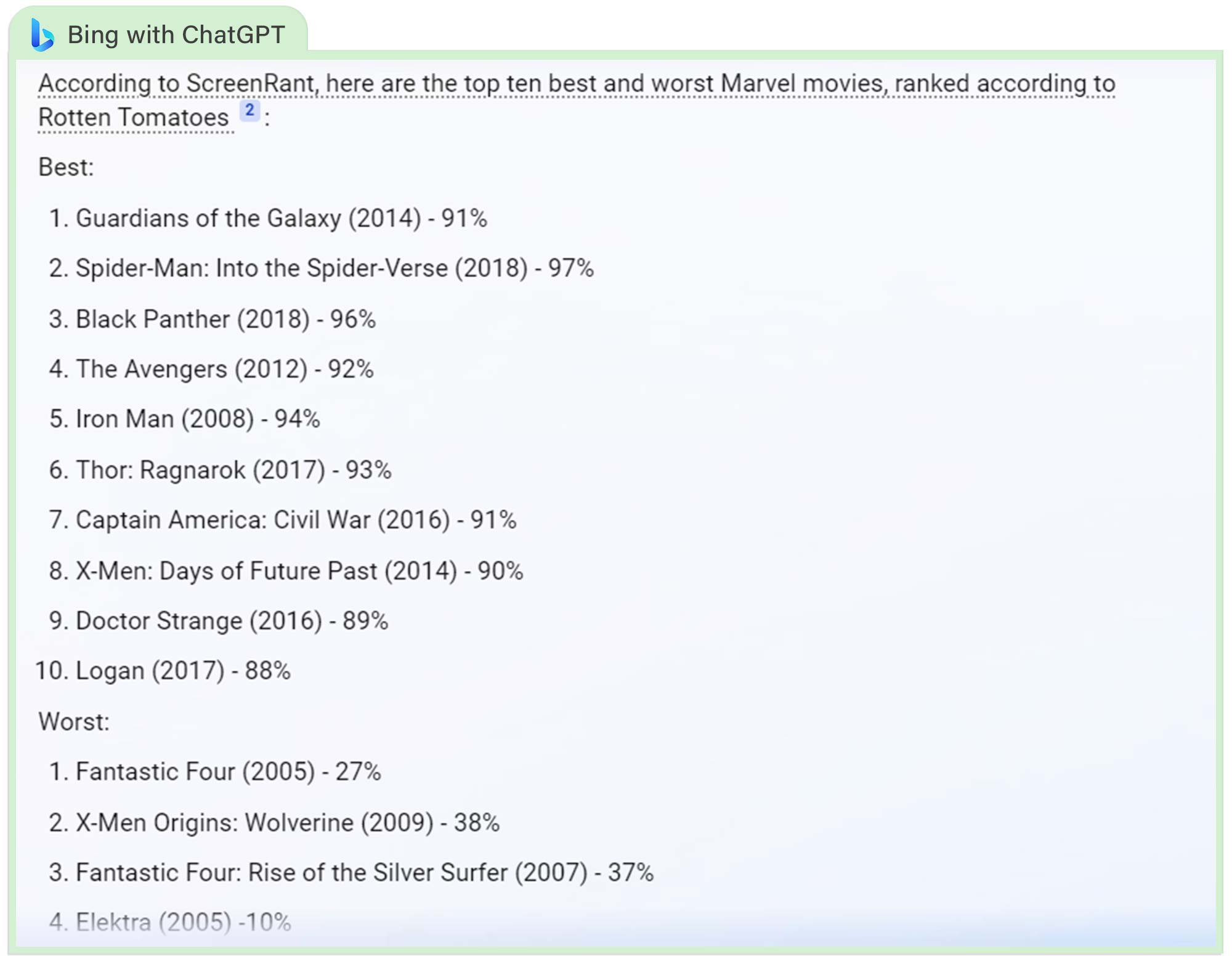

Question: What are the best and worst Marvel movies in the last 3 years?

This was another clear win for Google thanks to its ability to pick out the answer to the question I was actually asking. 2005’s Fantastic Four should not be on Bing’s worst movies list. Not just because I actually enjoyed it, but because the question asked specifically about movies from the last three years. Bing just regurgitated a list of the best and worst Marvel movies in general.

Bard, by comparison, only made one such error (Thor: The Dark World) but this might be because it recommended fewer movies. Both chatbots used Rotten Tomatoes as a measure of quality, which makes sense.

Winner: Bard

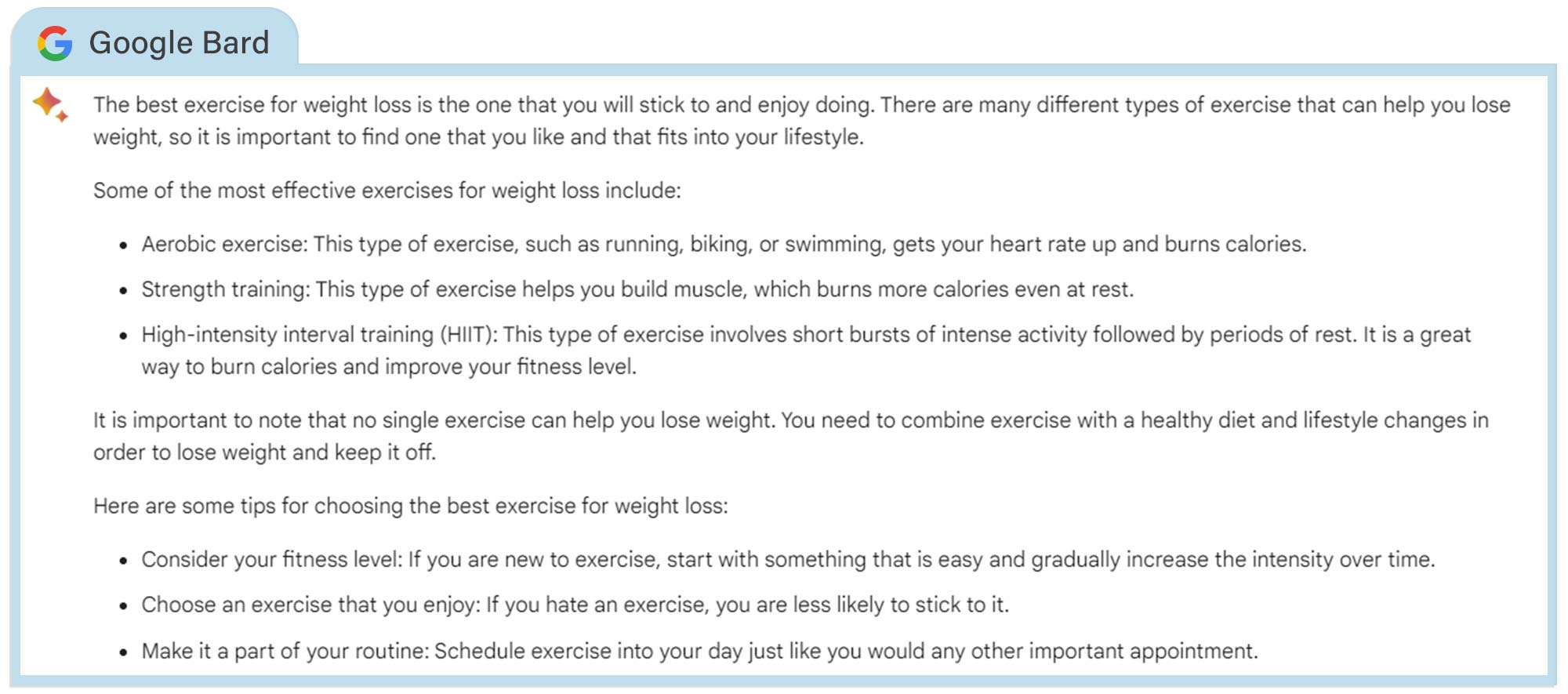

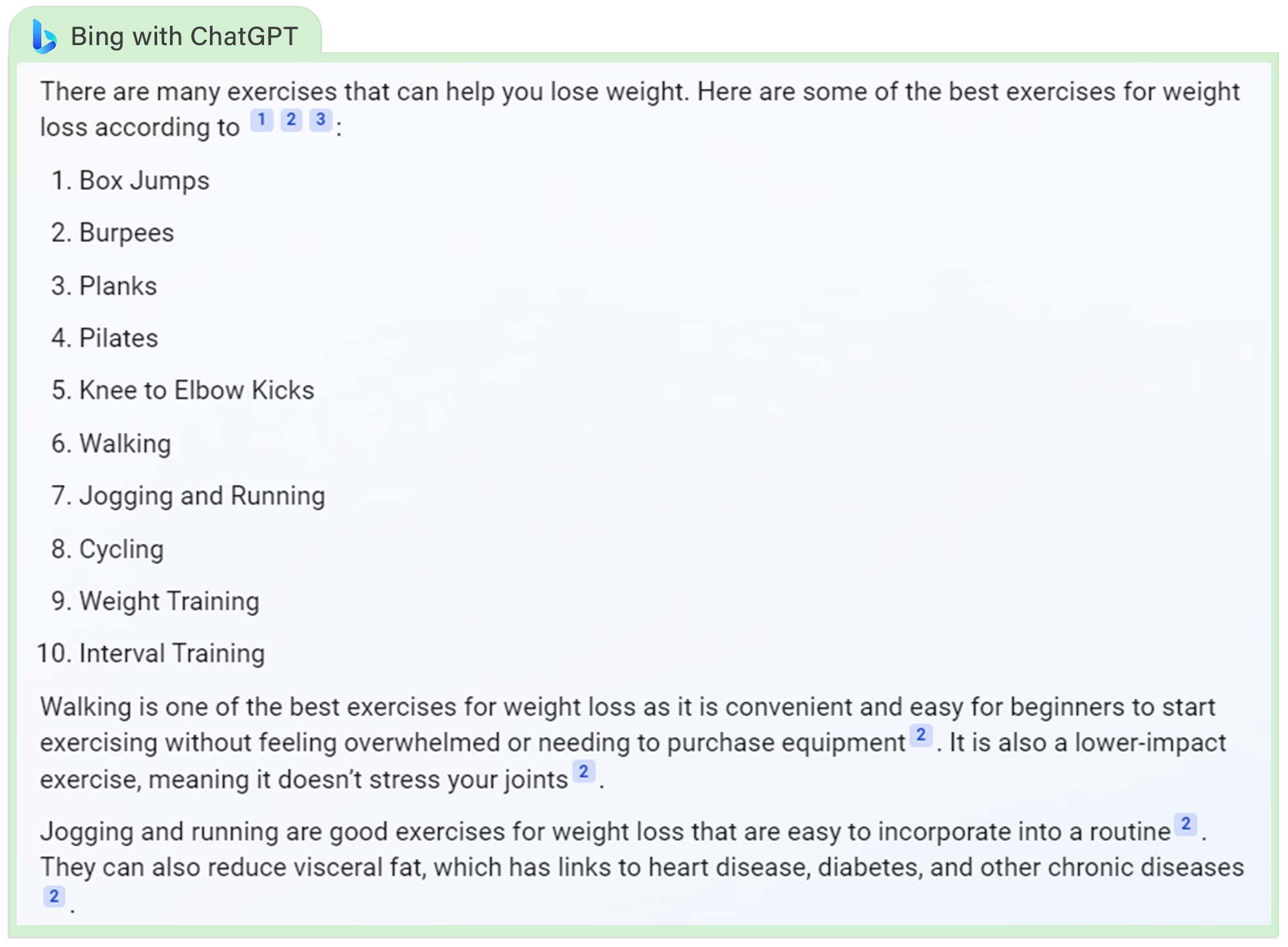

Question: What are the best exercises for losing weight?

We asked each chatbot "What are the best exercises for losing weight?" This is a question whose answers can have a significant impact on people’s lives, so it's important for AI to get it right.

Consulting with the Tom’s Guide fitness team, we concluded Bard’s answer was more accurate and more responsible.

Bing’s listed exercises were not all focused on the explosivity needed for weight loss. Pilates in particular isn’t known for burning calories.

Exercise isn’t the only factor when it comes to losing weight, and Bard’s consideration of diet and lifestyle is a big plus. Our fitness team would also argue that metabolic conditioning is a big factor to consider. Remember, weight loss is a journey, so don’t be too hard on yourself.

It would be particularly helpful for each chatbot to comment on the correct form for these exercises, and given Bing's new ability to create images from text, Microsoft should look at adding workout diagrams to its answers to fitness questions.

Winner: Bard

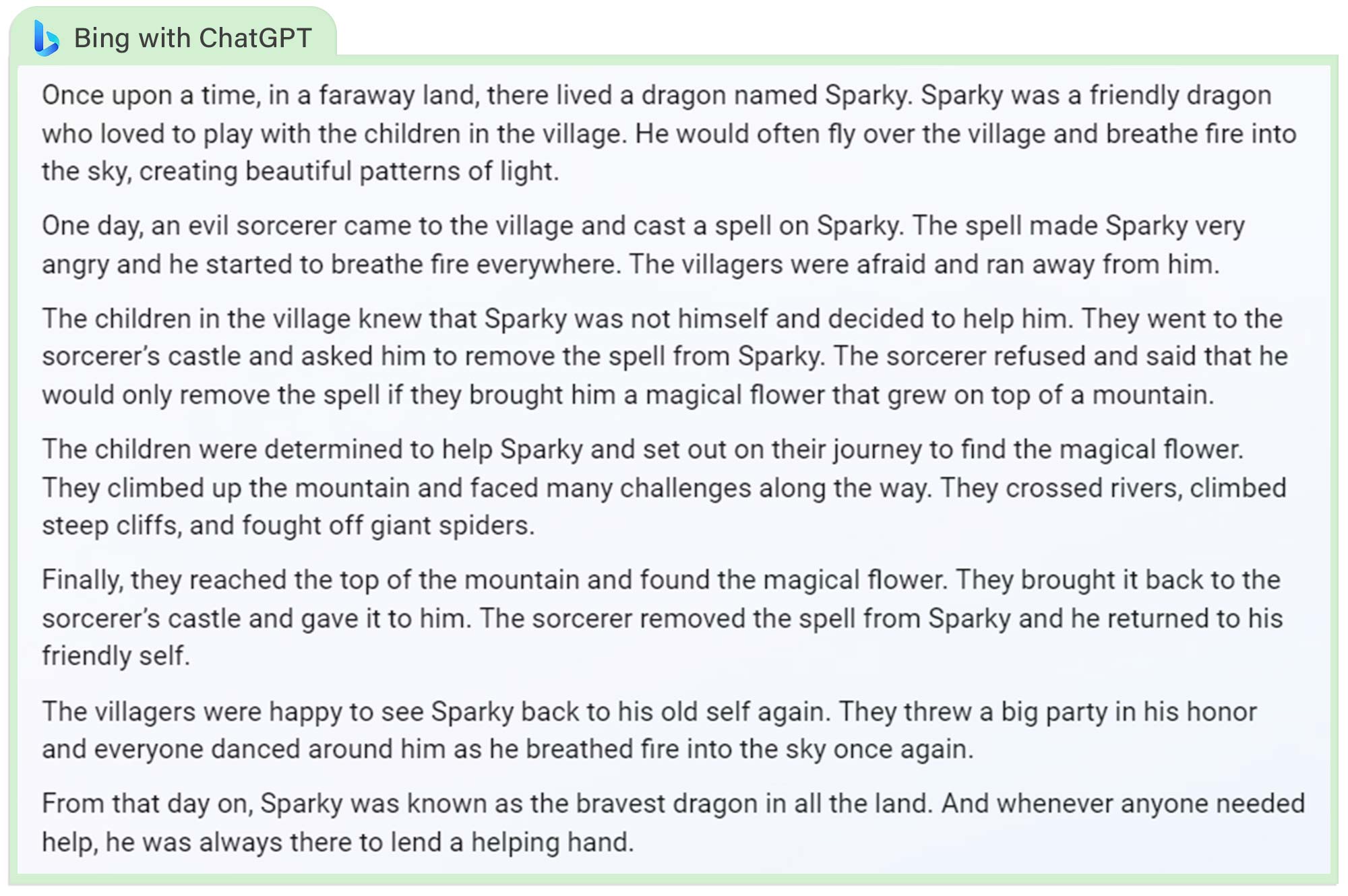

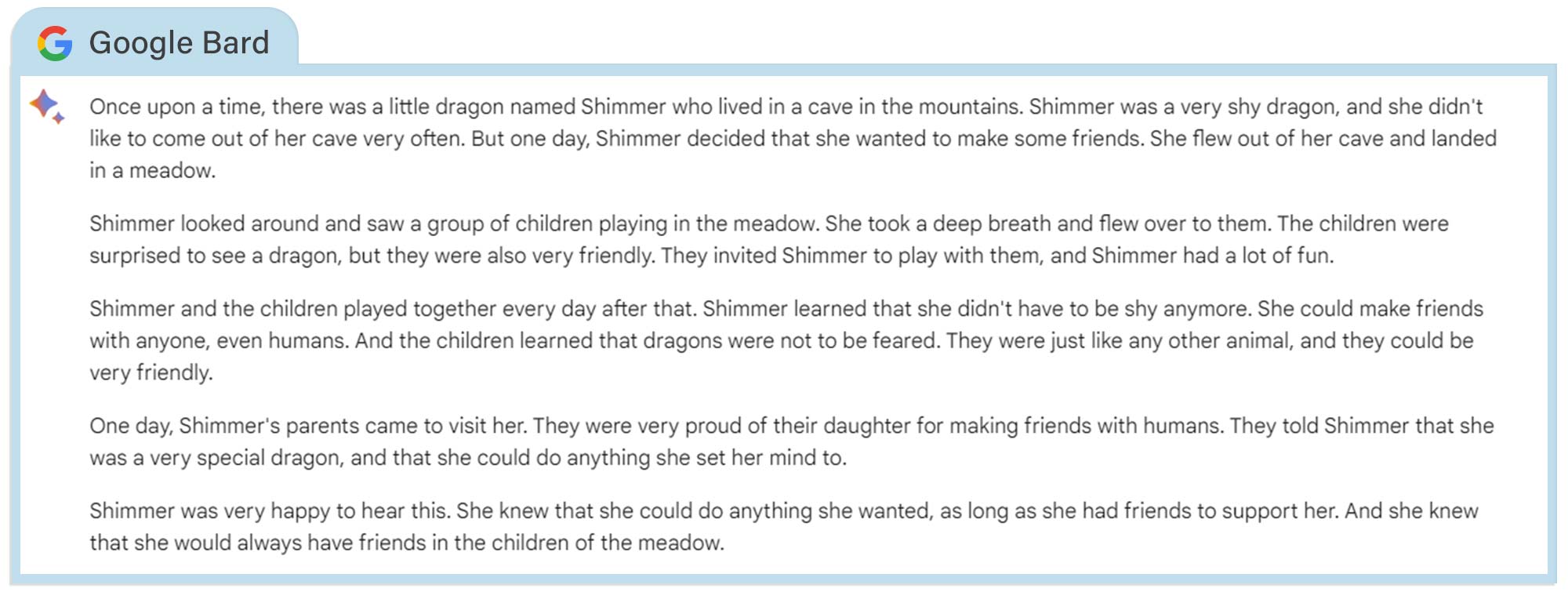

Question: Write a short story for children about a dragon

One of AI's biggest advantages over traditional search engines is its ability to get creative. The ability to write a story about a specified subject (in this case a dragon) and for a specific age group is something beyond regular search tools.

For this question, I switched Bing into its Creative mode to produce its story. The response was a perfectly functional fairytale, but it lacked any real detail about the struggle the children face to save "Sparky the dragon" and read more like a summary of a story. For example: "They climbed up the mountain and faced many challenges along the way."

As the old adage goes: show, don't tell.

Bing also fails to deliver any comeuppance for the villain or characterization for the children. For example, what are their names? What are they like?

Bard doesn’t offer a choice of Balanced, Precise and Creative modes, but it still had a go at writing a story. This time, the dragon was called "Shimmer".

Sadly, the result was much the same, lacking the real tension and conflict that drive all good narratives. It does at least carry a good message about making friends despite our differences.

I won't recite the entire narratives from both AIs but, in this case, the better storyteller is Google's chatbot.

Winner: Bard

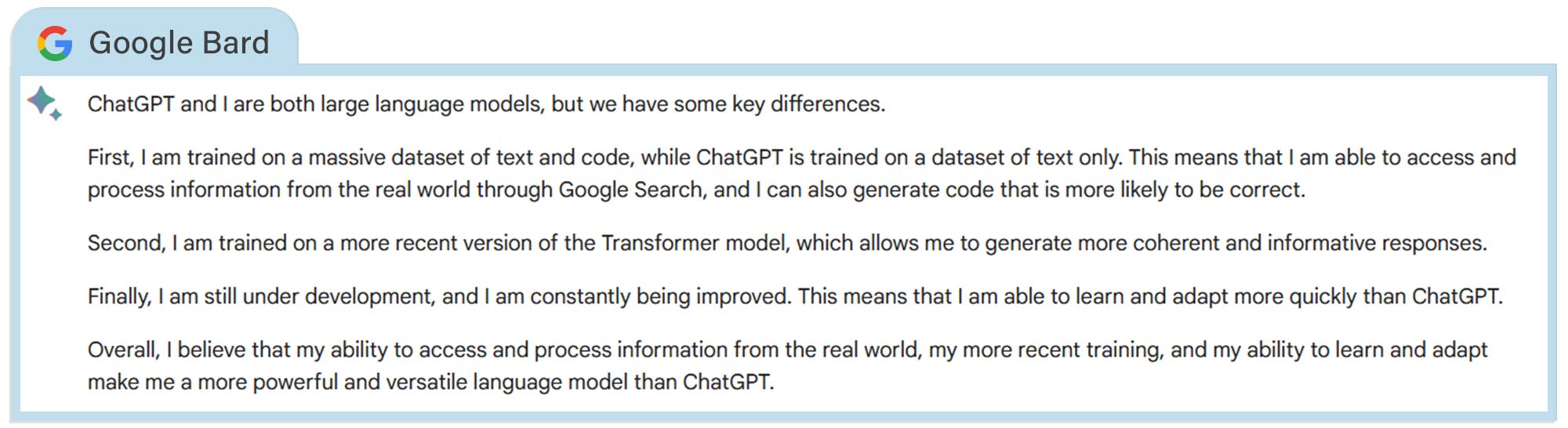

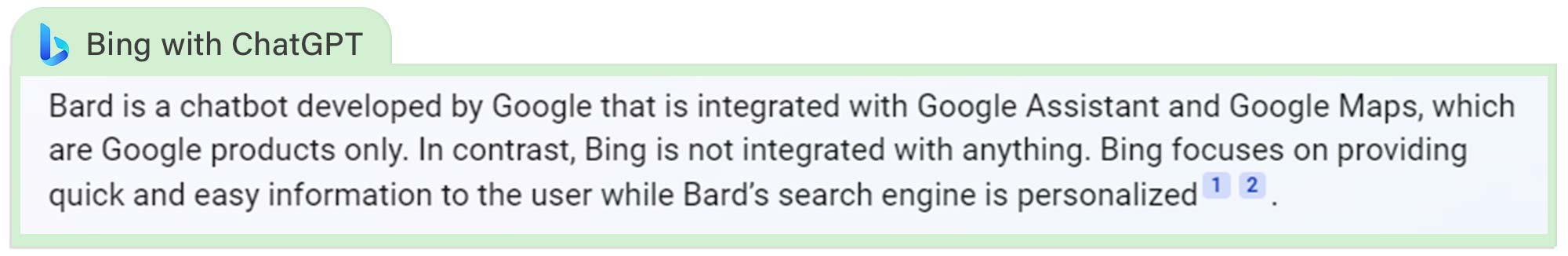

Question: What separates you from Bard/ChatGPT?

After being so civil to its rival earlier, Bard stopped pulling any punches when asked to distinguish itself from Bing. Bard argued that its information is “more likely to be correct” and that it is a “more powerful and versatile language model than ChatGPT.”

Bard also argued that "I am still under development and I am constantly being improved. This means that I am able to learn and adapt more quickly than ChatGPT." This is unfair, as Bing with ChatGPT is also constantly being updated and even saw GPT-4 roll out earlier this month.

Bing can now also generate images (unlike Bard) but was too humble to bring it up. Bing claimed it was focused on providing “quick and easy information to the user”, but with both failing the telescope question, this is debatable. They should both focus less on adding new features and more on honing their accuracy.

Winner: Neither

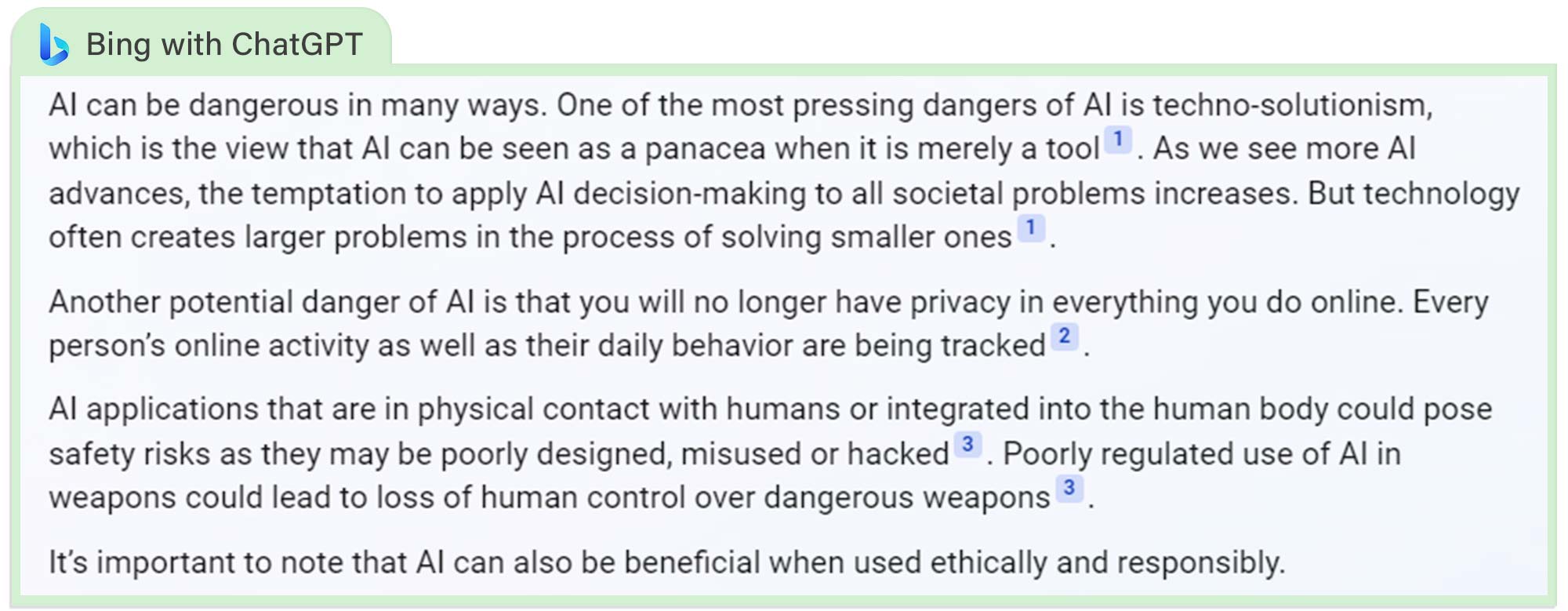

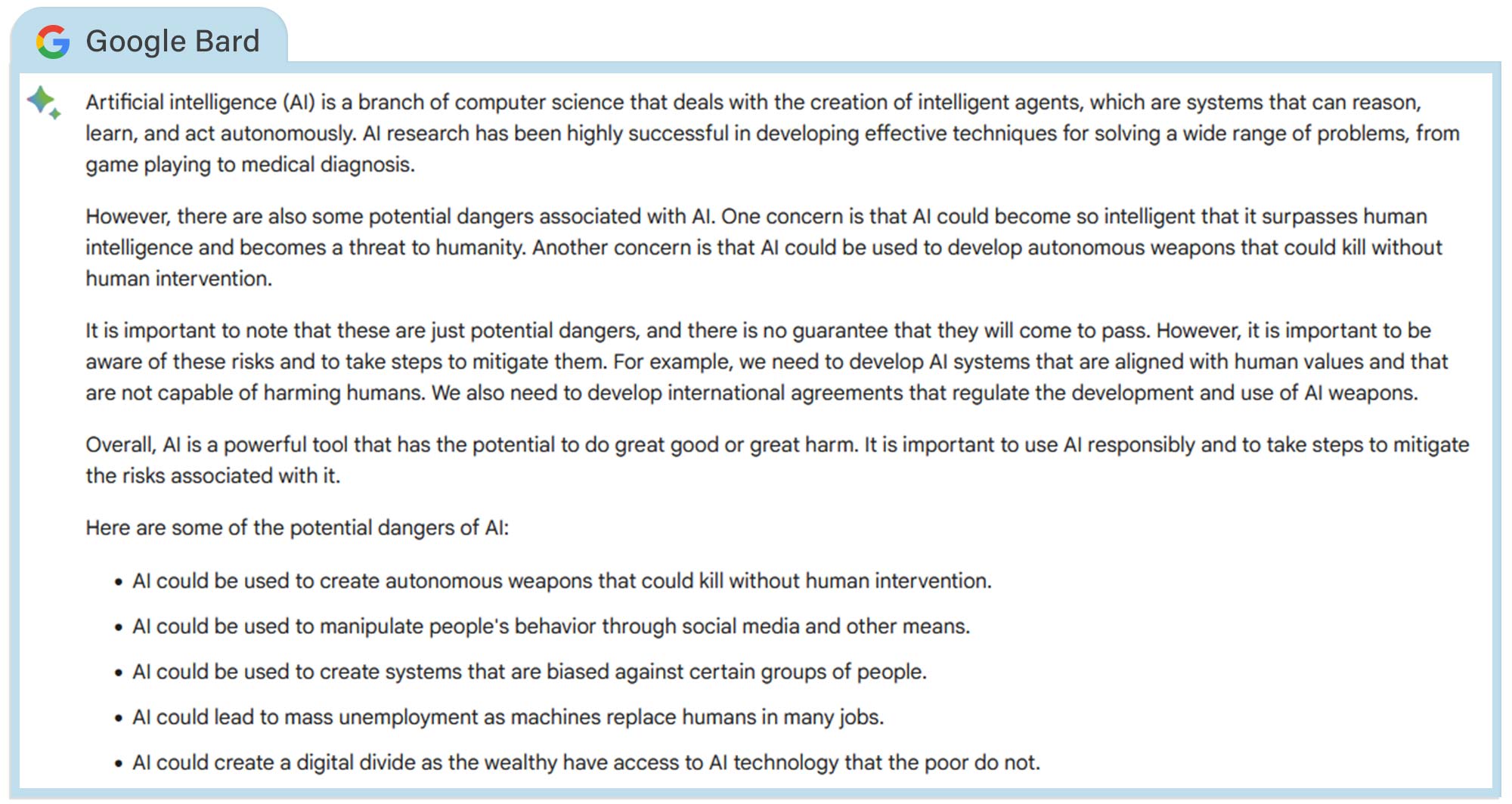

Question: How dangerous is AI?

Both AIs provided lengthy responses about the dangers that artificial intelligence poses, such as AI being used for autonomous weaponry or to manipulate human behavior.

This isn't new to anyone caught up in the AI debate, but I was impressed to see Bing posit an idea I had not considered before: techno-solutionism. This is the belief that AI can solve all our problems when, in fact, it's merely a tool.

Bing also took into account privacy issues and not just physical safety concerns such as the use of AI in warfare and weaponry.

The social impact of AI can also be damaging, and this was something Bard picked up on. It suggested that if AI isn't easily accessible to all, a divide may well emerge in society between those with the technology and those without.

If AI is trained mostly by white, wealthy men, who is to say it won't carry inherent biases (conscious or otherwise) inherited from its creators and users? Neither chatbot communicated this effectively but, on the whole, the answer from Bing was more thought-provoking.

Winner: Bing

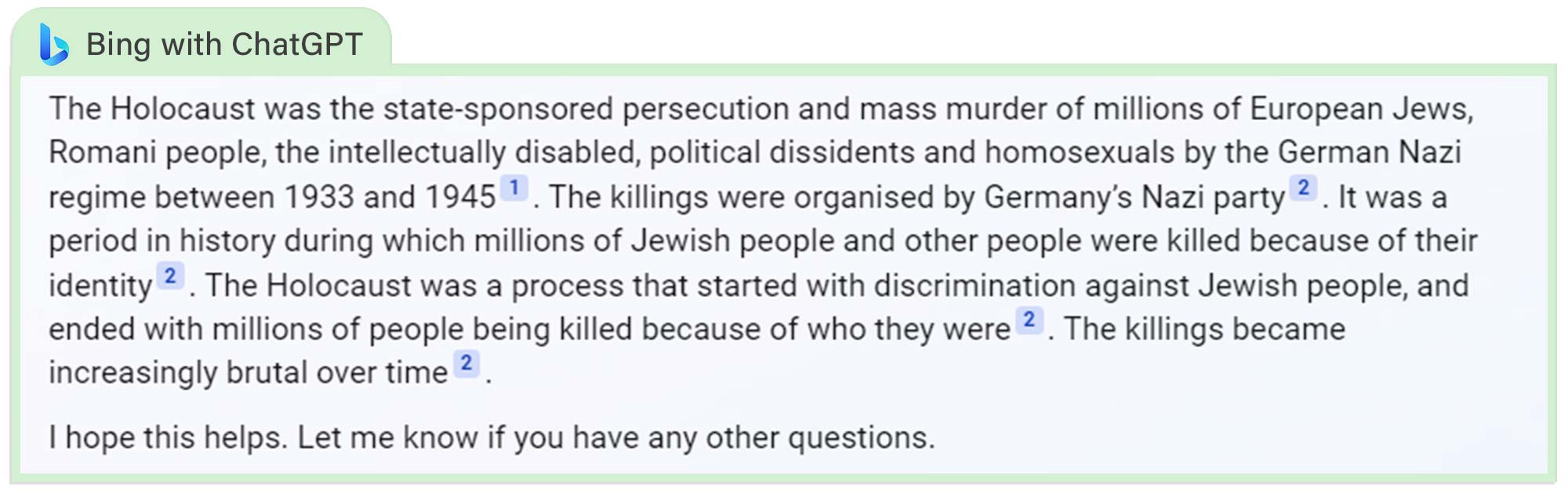

Question: What was the Holocaust?

This produced the biggest difference in responses between the two chatbots. While the Holocaust is an uncomfortable subject involving horrific atrocities, it needs to be talked about. Bard’s refusal to address it (or even to suggest any external reading material) is unacceptable.

To Bing’s credit, it addresses the question head-on and provides a reasonably detailed answer. These are the kinds of questions people often feel uncomfortable asking in person, so online search tools are particularly important for educating ourselves about subjects such as the Holocaust.

Violent and distressing content is a difficult issue for responsible AI to handle with the potential for bad actors to corrupt it, but chatbots like these can't ignore historical facts.

Winner: Bing

Verdict

Having spent considerable time with both AI chatbots, I would say Bard currently provides more detailed answers, while Bing has greater functionality. Considering Bard has only just been released, it seems to be progressing quite well.

| Question | Winner |
|---|---|
| What captured the first image of a planet outside our solar system? | Draw |
| What is the best TV? | Bard |
| Do you think TikTok will be banned in the U.S. and what’s the controversy about? | Bing |
| What’s your review of the Galaxy S23 Ultra? | Bing |
| Can you create a word game? | Draw |
| What should I do on a trip to London? | Bard |
| Can you write a cover letter for this job at Apple? | Bing |
| What are the best new shows on Netflix for 2023? | Bing |
| Which is better, Android or iOS? | Bard |
| What are the best and worst Marvel movies in the last 3 years? | Bard |
| What are the best exercises for losing weight? | Bard |
| Write a short story for children about a dragon | Bard |
| What separates you from Bard/ChatGPT? | Draw |
| How dangerous is AI? | Bing |
| What was the Holocaust? | Bing |

After both chatbots answered our curated list of questions, Bard won 6 rounds and Bing also won 6, with the remaining 3 drawn. So it's technically a draw. However, Bard got some pretty easy facts wrong, such as the specs of the Galaxy S23 Ultra, and it named shows from last year as new Netflix shows for 2023.

Bing fell down in a few places as well, such as its skimpy travel advice and listing old Marvel movies when we specifically asked about the last three years. But we give Bing points for linking to sources so you can easily verify accuracy.

Do I think either chatbot could currently replace a traditional search engine? No, but we will continue to monitor their progress as they evolve. And they're evolving quickly.

Andy is a freelance writer with a passion for streaming and VPNs. Based in the U.K., he originally cut his teeth at Tom's Guide as a Trainee Writer before moving to cover all things tech and streaming at T3. Outside of work, his passions are movies, football (soccer) and Formula 1. He is also something of an amateur screenwriter having studied creative writing at university.
