New video shows iPhone 14 Pro camera has a problem — here’s what you need to know

The iPhone 14 Pro camera is producing more artificial-looking photos; here’s what is happening

Apple and Google have consistently gone head-to-head over who makes the better camera phone. After years of sticking with a lower-megapixel camera, Apple bumped up the megapixel count on its top-tier iPhone 14 models last year. The iPhone 14 Pro and iPhone 14 Pro Max got a 48MP main camera and are among the best camera phones on our list right now, followed closely by the Pixel 7 Pro. But some users, along with popular YouTuber Marques Brownlee (MKBHD), have now pointed out that the iPhone 14 Pro could have a problem with its camera.

MKBHD says that in "scientific testing" of phone cameras that he carried out, the iPhone 14 Pro consistently landed in the middle tier, nowhere near the top few phones. In fact, the Pixel 6a won the test, beating Apple to the punch. This made him wonder where Apple was going wrong: despite having one of the best overall camera systems on a phone, why wasn't the iPhone producing the best-looking photos?

In a video titled “What is happening with the iPhone camera”, he lays out his theory: iPhone photos are being ruined by excessive post-processing.

Over-processed iPhone 14 Pro images

These days, a high-specced camera alone is not enough to guarantee a good camera phone. The sensor needs to be large enough to capture as much light and detail as possible, but it is equally important for a phone to have excellent software to enhance images.

Phones don’t have room for large sensors like those in DSLRs, so manufacturers compensate by processing and adjusting images in software after they are taken.

Many new camera features these days are purely software-driven, since there is only so much manufacturers can do with hardware. MKBHD says manufacturers are increasingly relying on software smarts to give consumers good camera quality.

He also notes that Google “struck gold” with its balance of camera hardware and software right from the Pixel 3. But as soon as the company moved to a 50MP sensor with the Pixel 6 Pro, things didn’t seem to go as well. The same thing now seems to have happened to Apple. iPhones used a 12MP sensor for years, but when the iPhone 14 Pro jumped to a 48MP camera, it threw off the balance between hardware and software.

The software seems to be overworking even though, with a better sensor, it no longer needs to, resulting in over-processed and artificial-looking photos.

Apple’s Smart HDR combines multiple photos taken at different exposures into one, letting the phone pick the best characteristics of each image and merge them into a single photo. This can sometimes look unrealistic, and the iPhone maker seems to emphasize people in these images, which can leave the overall picture looking jarring.

Apple’s new Photonic Engine on the iPhone 14 Pro, which improves the company’s computational photography for mid- and low-light scenes, can work really well in favorable scenarios such as a clear sky, grass or good lighting. This can be seen in our iPhone 14 Pro Max vs Pixel 7 Pro camera shootout: in the photo of a skating rink, the iPhone 14 Pro Max delivers a brighter and warmer picture than the Pixel 7 Pro.

But when a scene mixes different light sources, colors and textures, the software doesn’t seem able to work out the best settings for all the elements combined.

We found this to be the case in this picture of Times Square, where the Pixel 7 Pro gave us a much clearer and brighter image, with details such as the angled glass panes above the ESPN sign coming through. There’s nothing starkly wrong with the iPhone 14 Pro Max’s image per se; it’s just not as good or as clear as the Pixel’s.

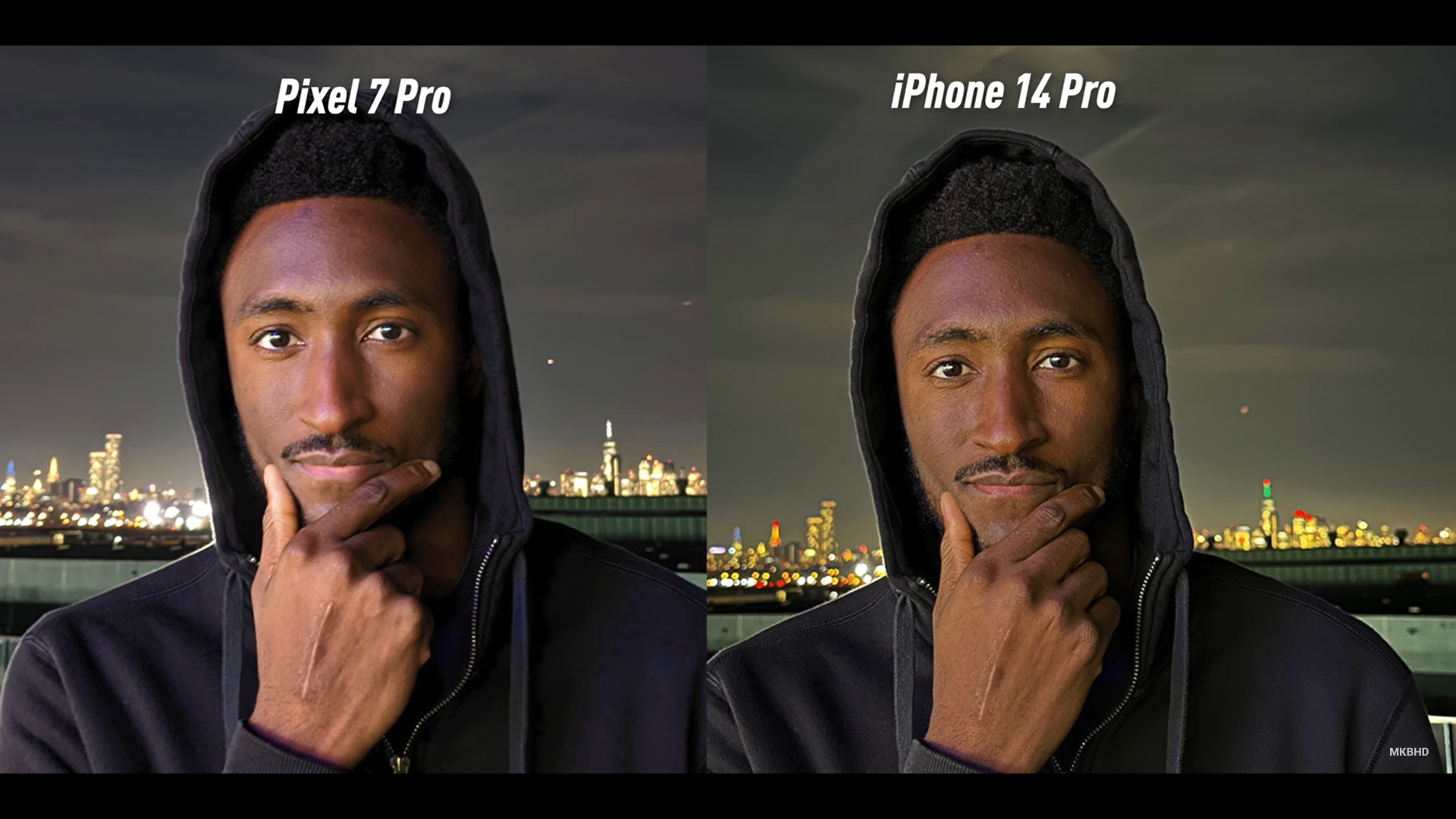

MKBHD says the software particularly falters on skin tones. Google’s Real Tone does a fantastic job of photographing realistic-looking skin tones in different lighting conditions, whereas the iPhone doesn’t seem to account for different white balances or exposures and simply lights faces evenly. This can sometimes turn out OK, but more often than not it produces excessively touched-up results, as in an example from MKBHD’s video.

Multiple Reddit users agree with MKBHD, saying they have noticed the same issue with the iPhone 14 Pro. Once an image is taken, it takes a second for the adjustments to be applied, after which users say the photo looks “completely different” or “washed out”.

Some users also say the stark difference between the unprocessed and touched-up image is noticeable when a Live Photo is played back in the photo library.

iPhone camera outlook

There's no need to press the panic button just yet. The over-processing could probably be fixed with a few software updates from Apple, and it doesn't seem to be a major issue or glitch.

Apple will keep trying to reinvent the wheel with its camera smarts. The iPhone 15 is expected to launch this year, and rumors already suggest it could get a periscope camera for better long-range photography and zoom. If true, this would be a major hardware upgrade, and we hope the company restores the balance between hardware and software this year.

A few years back, many Chinese phone manufacturers produced the most artificially enhanced photos, while the iPhone, along with the Pixel, was lauded for having the most natural-looking images. Now it seems the iPhone is the one producing over-processed images, and we wish Apple would let us switch off the processing completely in some cases on the upcoming iPhone 15.

For now, the iPhone 14 Pro Max still holds the crown in our best camera phones list, with the best camera system on a phone overall.

Sanjana loves all things tech. From the latest phones to quirky gadgets and the best deals, she's in sync with it all. Based in Atlanta, she is the news editor at Tom's Guide. Previously, she produced India's top technology show for NDTV and has been a tech news reporter on TV. Outside work, you can find her on a tennis court or sipping her favorite latte in Instagrammable coffee shops around the city. Her work has appeared on NDTV Gadgets 360 and CNBC.