Apple will begin using a system that detects sexually explicit photos in Messages, Photos and iCloud and compares them against a database of known Child Sexual Abuse Material (CSAM), to help point law enforcement to potential predators.

The announcement (via Reuters) says these new child safety measures will go into place with the release of iOS 15, watchOS 8 and macOS Monterey later this year. This move comes years after Google, Facebook and Microsoft put similar systems in place. Google began hash-based scanning back in 2008, with Microsoft following suit with its "PhotoDNA" system in 2009. Facebook and Twitter have had similar systems in place since 2011 and 2013 respectively.

Update (8/14): Since its initial announcement, Apple has clarified its new photo-scanning policy, stating that, among other things, it will only scan for CSAM images flagged by clearinghouses in multiple countries.

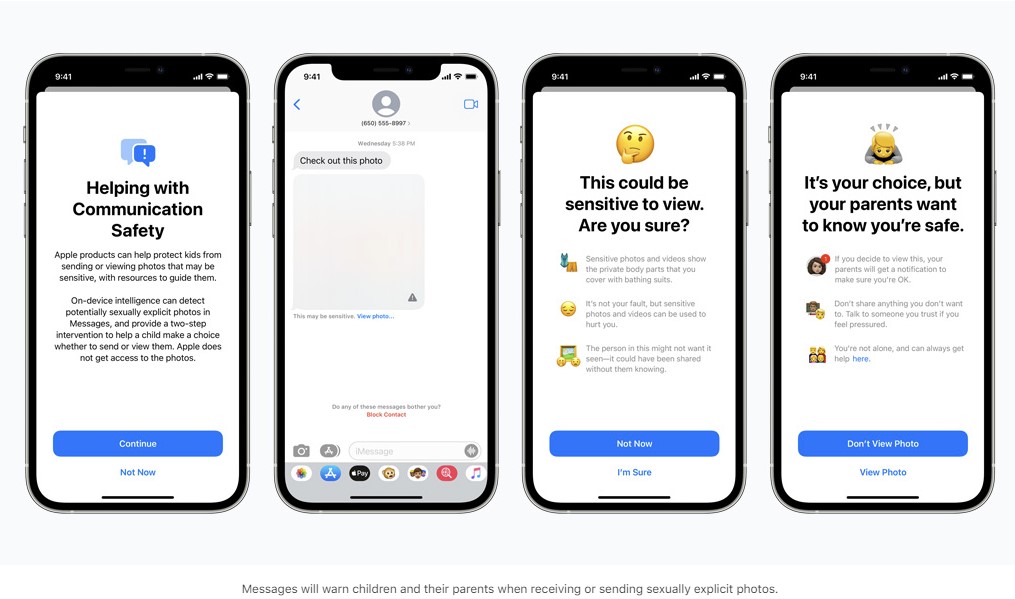

The Messages app will begin warning children, as well as their parents, when sexually explicit photos are either sent or received. The app will blur out images and say, "It's not your fault, but sensitive photos and videos can be used to hurt you."

The system will use on-device machine learning to analyze an image. Photos will then be blurred if deemed sexually explicit.

"iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy," per Apple's child safety webpage. "CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos."

The system will allow Apple to detect CSAM stored in iCloud Photos. Apple will then send a report to the National Center for Missing and Exploited Children (NCMEC).

According to MacRumors, Apple is using a "NeuralHash" system that will check photos on a user's iPhone or iPad against known CSAM before they are uploaded to iCloud. If the system finds that CSAM is being uploaded, the case will be escalated for human review.

Apple will also update Siri and Search to help children and parents report CSAM. Essentially, when someone searches for something related to CSAM, a pop-up will appear to assist users.

Of course, with a system like this, there will invariably be privacy concerns. Apple aims to address these as well.

"Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations," per Apple's child safety webpage. "Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices."
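To give a rough sense of what matching against a database of known image hashes can look like, here is a minimal sketch in Python. It is not Apple's NeuralHash or its cryptographic protocol: the `average_hash` function is a simple stand-in perceptual hash, and the names `hamming_distance`, `matches_known_hash` and `max_distance` are hypothetical, chosen only for illustration.

```python
from typing import Iterable, List


def average_hash(pixels: List[List[int]]) -> int:
    """Toy perceptual hash (an assumption, not NeuralHash):
    one bit per pixel, set when the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions where the two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known_hash(image_hash: int,
                       known_hashes: Iterable[int],
                       max_distance: int = 0) -> bool:
    """True if the hash is within max_distance bits of any known hash."""
    return any(hamming_distance(image_hash, k) <= max_distance
               for k in known_hashes)


# Hypothetical 2x2 grayscale "images" used purely as test data.
known_image = [[10, 200], [220, 30]]
brightened_copy = [[20, 210], [230, 40]]   # same picture, slightly brighter
unrelated_image = [[200, 10], [30, 220]]

known_hashes = {average_hash(known_image)}

print(matches_known_hash(average_hash(brightened_copy), known_hashes))  # True
print(matches_known_hash(average_hash(unrelated_image), known_hashes))  # False
```

The point of a perceptual hash, as opposed to an ordinary file checksum, is that small changes such as brightening an image still produce the same or a nearby hash, so only the hashes need to be compared rather than the photos themselves.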

According to Apple, there is "less than a one in one trillion chance per year of incorrectly flagging a given account."

Even with the purportedly low rate of false accusation, some fear that this type of technology could be used in other ways, such as going after anti-government protestors who upload imagery critical of administrations.

"These are bad things. I don’t particularly want to be on the side of child porn and I’m not a terrorist. But the problem is that encryption is a powerful tool that provides privacy, and you can’t really have strong privacy while also surveilling every image anyone sends." (August 5, 2021)

Regardless of potential privacy concerns, John Clark, chief executive of the National Center for Missing & Exploited Children, believes what Apple is doing is more beneficial than harmful.

"With so many people using Apple products, these new safety measures have lifesaving potential for children who are being enticed online and whose horrific images are being circulated in child sexual abuse material," said Clark in a statement. "The reality is that privacy and child protection can co-exist."

Going back to iOS and Android messages, Google seems to be working on an upgrade for its messages app to make it easier for Android users to text their iPhone friends.

Imad is currently Senior Google and Internet Culture reporter for CNET, but until recently was News Editor at Tom's Guide. Hailing from Texas, Imad started his journalism career in 2013 and has amassed bylines with the New York Times, the Washington Post, ESPN, Wired and Men's Health Magazine, among others. Outside of work, you can find him sitting blankly in front of a Word document trying desperately to write the first pages of a new book.
