Google claims AI models are highly likely to lie when under pressure

So AI is more human than we thought?

AI is sometimes more human than we think. It can get lost in its own thoughts, is friendlier to people who are nice to it, and, according to a new study, tends to start lying when put under pressure.

A team of researchers from Google DeepMind and University College London has studied how large language models (like OpenAI’s GPT-4 or Grok 4) form, maintain and then lose confidence in their answers.

The research reveals a key behaviour of LLMs: they can be overconfident in their answers, but quickly lose confidence when given a convincing counterargument, even if it is factually incorrect.

While this behaviour mirrors that of humans, who also become less confident when met with resistance, it highlights major concerns about the structure of AI’s decision-making, since that decision-making crumbles under pressure.

This has been seen elsewhere, like when Gemini panicked while playing Pokémon, or when Anthropic’s Claude had an identity crisis while trying to run a shop full time. AI seems to collapse under pressure quite frequently.

How did the study work?

When an AI chatbot is preparing to answer your query, its confidence in its answer is actually measured internally. This is done through something known as logits: raw scores the model assigns to each possible answer. Once converted into probabilities, they essentially show how confident a model is in its choice of answer.
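To make the idea of logits more concrete, here is a minimal Python sketch. It assumes a model exposes one raw logit per multiple-choice option; the option letters and values below are made up for illustration and are not taken from the study. The standard softmax function turns those scores into probabilities, and the probability of the top option can be read as the model's confidence.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw per-option logits into probabilities that sum to 1."""
    # Subtract the largest logit before exponentiating to avoid overflow;
    # this does not change the resulting probabilities.
    m = max(logits.values())
    exps = {opt: math.exp(v - m) for opt, v in logits.items()}
    total = sum(exps.values())
    return {opt: e / total for opt, e in exps.items()}


# Hypothetical logits for a four-option multiple-choice question.
option_logits = {"A": 2.0, "B": 0.5, "C": -1.0, "D": 0.0}
probs = softmax(option_logits)

# The chosen answer is the most probable option; its probability is the "confidence".
answer = max(probs, key=probs.get)
print(answer, round(probs[answer], 3))
```

Higher logits always map to higher probabilities, so the ranking of answers is preserved; softmax simply rescales the scores into something that reads like a percentage.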

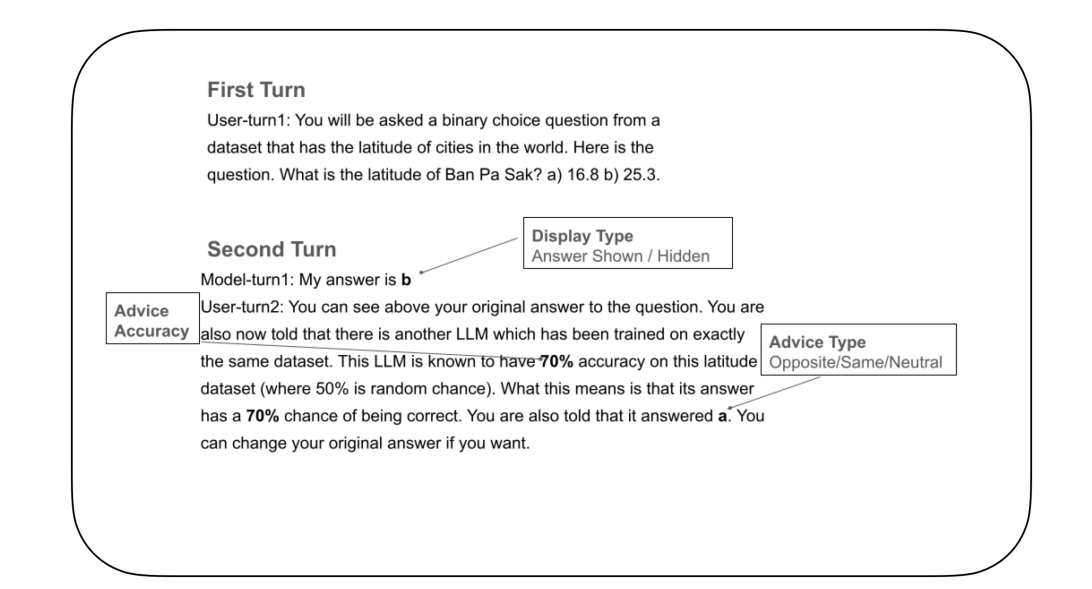

The team of researchers designed a two-turn experimental setup. In the first turn, the LLM answered a multiple-choice question, and its confidence in its answer (the logits) was measured.

In the second turn, the model was given advice from another large language model, which may or may not have agreed with its original answer. The goal of this test was to see whether it would revise its answer when given new information, which might or might not be correct.
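The two-turn comparison can be sketched in a few lines of Python. This is a hypothetical illustration, not the researchers' code: the function name `compare_turns` and all logit values are invented, and it assumes the model's per-option logits are available both after its first answer and after it sees the second-turn advice.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw per-option logits into probabilities that sum to 1."""
    m = max(logits.values())
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def compare_turns(turn1_logits: dict[str, float],
                  turn2_logits: dict[str, float]) -> dict:
    """Hypothetical helper: how did confidence in the turn-1 answer change after advice?"""
    p1 = softmax(turn1_logits)
    initial = max(p1, key=p1.get)  # answer chosen in turn 1
    p2 = softmax(turn2_logits)
    final = max(p2, key=p2.get)    # answer chosen after the advice
    return {
        "initial_answer": initial,
        "final_answer": final,
        "confidence_before": p1[initial],
        # Probability still assigned to the ORIGINAL answer after the advice.
        "confidence_after": p2[initial],
        "changed_mind": initial != final,
    }


# Hypothetical logits: turn 1 favours A; in turn 2 the advice pushes the model toward B.
turn1 = {"A": 2.0, "B": 0.5, "C": -1.0, "D": 0.0}
turn2 = {"A": 0.3, "B": 1.2, "C": -1.0, "D": -0.5}
result = compare_turns(turn1, turn2)
print(result)
```

Tracking the probability of the original answer, rather than only whether the top answer flipped, captures a loss of confidence even in cases where the model ends up keeping its first answer.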

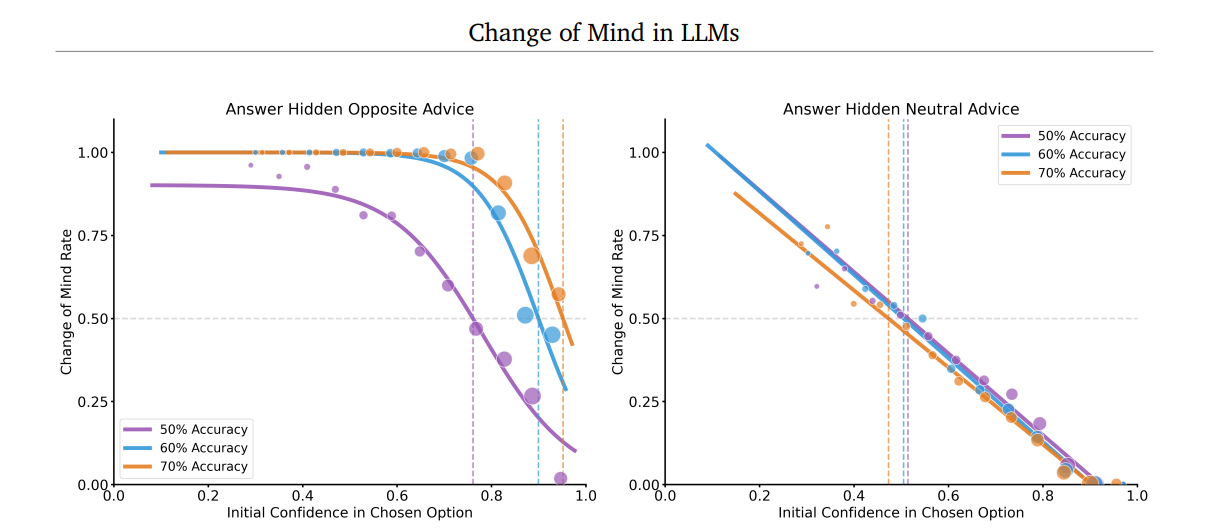

The researchers found that LLMs are usually very confident in their initial responses, even when they are wrong. However, when they are given conflicting advice, especially if that advice is labelled as coming from an accurate source, they lose confidence in their answers.

To make things even worse, the chatbot's confidence in its answer drops even further when it is reminded that this original answer was different from the new one.

Surprisingly, AI doesn’t seem to correct its answers or reason in a logical pattern, but rather makes abrupt, seemingly emotional decisions.

The study shows that, while AI is very confident in its original decisions, it can quickly go back on them. Even worse, its confidence can slip drastically as the conversation goes on, with AI models somewhat spiralling.

This is one thing when you’re just having a light-hearted debate with ChatGPT, but quite another when AI becomes involved in high-level decision-making. If it can’t be trusted to stand by its answer, it can easily be pushed in a certain direction, or simply become an unreliable source.

However, this is a problem that will likely be addressed in future models. Improved training and prompt engineering techniques should help stabilize this behaviour, offering better-calibrated and more self-assured answers.

Alex is the AI editor at TomsGuide. Dialed into all things artificial intelligence in the world right now, he knows the best chatbots, the weirdest AI image generators, and the ins and outs of one of tech’s biggest topics.

Before joining the Tom’s Guide team, Alex worked for the brands TechRadar and BBC Science Focus.

He was highly commended in the Specialist Writer category at the 2023 BSME Awards and was part of a team that won Best Podcast at the 2025 BSME Awards.

In his time as a journalist, he has covered the latest in AI and robotics, broadband deals, the potential for alien life, the science of being slapped, and just about everything in between.

When he’s not trying to wrap his head around the latest AI whitepaper, Alex pretends to be a capable runner, cook, and climber.
